We all have it—even if we can’t see it. That invisible zone surrounding your body that tells you when someone’s too close or when to duck a flying object. Scientists call it peripersonal space, and it’s the dynamic, constantly updated buffer between ourselves and the world. Now, in a remarkable fusion of neuroscience and artificial intelligence, researchers have created the most comprehensive model yet of how the brain builds that personal “bubble.”

Their findings, published recently in Nature Neuroscience, show that this space isn’t just a sensory boundary. It’s a fluid map that reflects risk, reward, and motion—and could soon help robots and prosthetic devices better understand how to behave around humans.

Led by researchers at the Chinese Academy of Sciences and the Italian Institute of Technology (IIT), the study does more than imitate biology: it proposes a new account of what these brain cells compute. By creating virtual animals driven by trial-and-error learning, the team showed how certain neurons, known as peripersonal neurons, naturally emerge to track threats and opportunities near the body. The result is a theoretical and computational breakthrough that matches both monkey brain recordings and human imaging studies.

And it all started with a blink.

A Reflex That Sparked a Revolution

Sometimes, great discoveries begin not with a grand plan, but a curious observation.

Giandomenico Iannetti, senior author of the study, recalls the moment the team’s journey began. “We were running unfunded experiments, purely driven by curiosity,” he said. “We discovered something surprising: when you electrically shock the hand, the blink reflex is stronger if the hand is closer to the face.”

At first glance, it was just a protective reaction. But to Iannetti and his team, the deeper meaning was clear. The blink reflex was behaving like peripersonal neurons—those that fire when objects get close to the body. What followed was a deep dive into existing literature, and a growing frustration.

Theoretical models of these neurons, they found, were scattered, inconsistent, and unable to explain key features. Why, for example, did the neurons’ responses change depending on how fast a stimulus was moving? Why did they respond differently depending on whether the object was good or bad, or whether a tool was in use?

Rather than adding more scattered data, Iannetti and his colleagues decided to start fresh—with a model that could explain it all.

Building a Brain from Scratch

Enter artificial neural networks (ANNs), the AI models loosely inspired by how real brains work. These systems can be trained to learn from their environment by maximizing rewards and minimizing punishments—much like a rat navigating a maze, or a human learning to ride a bike.

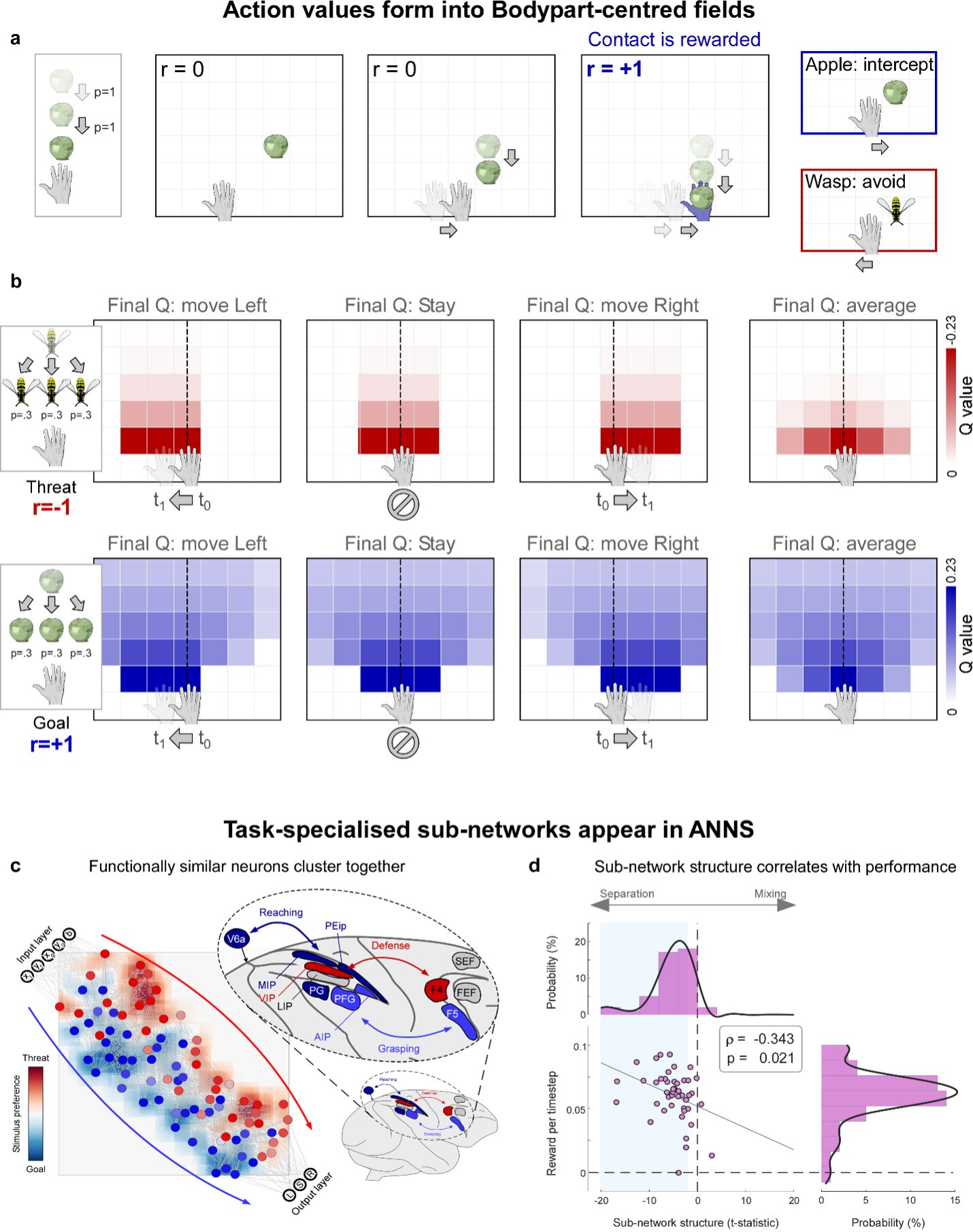

First author Rory John Bufacchi explained the team’s core idea: what if peripersonal neurons simply reflect the value of potential actions? If an object is moving toward your eye, maybe the neurons don’t just measure distance—they’re predicting danger and triggering avoidance. Conversely, if the object is food or a helpful tool, the same neurons might guide a reaching movement.

“In simple terms,” said Bufacchi, “we built computer simulations of simplified ‘animals’ that learned through trial and error which actions to take, depending on whether those actions brought reward or punishment.”

As these virtual agents learned to navigate, the researchers watched something extraordinary happen: artificial neurons in the networks began to cluster around different parts of the body, mirroring how real biological peripersonal neurons behave.

They weren’t programmed to do this. They just… did.

The Egocentric Value Map

What emerged was what the researchers call an egocentric value map—a fluid, body-centered representation of the space near an individual, coded not just by distance but by value. This map predicted where rewards might lie, where risks might loom, and how best to act in each situation.
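To make the idea concrete, here is a minimal sketch of how a value map over distance can fall out of trial-and-error learning. It is not the study's model, which used artificial neural networks. It is a toy tabular Q-learning example, and everything in it is an assumption made for illustration: the one-dimensional world, the two actions (`STAY` and `ACT`), the reward sizes, and the constants `MAX_D`, `ACT_COST`, `GAMMA`, and `ALPHA`.

```python
import random

MAX_D = 6          # assumed farthest distance (in grid cells) at which the object appears
STAY, ACT = 0, 1   # ACT stands for "dodge" (threat) or "grasp" (goal)
ACT_COST = 0.1     # assumed small effort cost for acting
GAMMA = 0.8        # assumed discount factor per time step
ALPHA = 0.5        # assumed learning rate


def step(d, action, valence):
    """Move the object one cell closer; return (reward, next_distance, done)."""
    reward = -ACT_COST if action == ACT else 0.0
    next_d = d - 1
    if next_d == 0:  # the object reaches the body on this step
        if valence < 0 and action != ACT:
            reward -= 1.0  # a threat hits because the agent did not dodge
        if valence > 0 and action == ACT:
            reward += 1.0  # a goal object is grasped
        return reward, next_d, True
    return reward, next_d, False


def learn(valence, episodes=2000, seed=0):
    """Tabular Q-learning with purely random exploration (Q-learning is off-policy)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(MAX_D + 1)]  # q[distance][action]
    for _ in range(episodes):
        d, done = MAX_D, False
        while not done:
            a = rng.choice((STAY, ACT))
            r, next_d, done = step(d, a, valence)
            target = r if done else r + GAMMA * max(q[next_d])
            q[d][a] += ALPHA * (target - q[d][a])
            d = next_d
    return q


def value_map(q):
    """Best achievable value at each distance 1..MAX_D (index 0 is distance 1)."""
    return [max(actions) for actions in q[1:]]


if __name__ == "__main__":
    print("threat:", [round(v, 3) for v in value_map(learn(-1))])
    print("goal:  ", [round(v, 3) for v in value_map(learn(+1))])
```

In this toy world the learned values are negative for a threat and positive for a goal, and their magnitude shrinks as the object gets farther away. Nothing in the code says "care more about nearby objects"; the graded, body-centered pattern comes from discounting future outcomes.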

The model didn’t just look good on paper. When the researchers compared it with empirical data, including macaque neuron recordings, human fMRI scans, EEG waveforms, and behavioral measurements, it lined up again and again.

Neurons in the model expanded their receptive fields in response to faster-moving stimuli, just as neurons in the brain do. They reconfigured during tool use. They separated into sub-networks for different tasks, like intercepting versus avoiding, much as primate brains are organized.
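One way to see why speed could matter is a back-of-the-envelope calculation. The sketch below is an illustration only, not the study's analysis. It assumes that a unit's response tracks the discounted value of an upcoming contact, and that the discount depends on time to contact (distance divided by speed). The function names, the discount factor `GAMMA`, and the response `THRESHOLD` are all hypothetical.

```python
import math

GAMMA = 0.8       # assumed discount factor per time step
THRESHOLD = 0.2   # assumed value magnitude at which a unit counts as "responding"


def contact_value(distance, speed, gamma=GAMMA):
    """Discounted magnitude of a contact that is distance/speed time steps away."""
    return gamma ** (distance / speed)


def field_extent(speed, gamma=GAMMA, threshold=THRESHOLD):
    """Farthest distance at which the discounted value still reaches the threshold.

    Solves gamma ** (d / speed) == threshold for d.
    """
    return speed * math.log(threshold) / math.log(gamma)


if __name__ == "__main__":
    for speed in (0.5, 1.0, 2.0):
        print(f"speed {speed}: field extends to about {field_extent(speed):.1f} cells")
```

Under these assumptions the extent of the responsive region grows in proportion to speed: an object moving twice as fast reaches the same discounted value from twice as far away.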

“Our theory was the only one to successfully fit extensive experimental data,” said Iannetti. “It outperformed all previous explanations and offers a generalizable framework for understanding peripersonal responses.”

Why This Matters for More Than Just Neuroscience

Understanding how the brain maps the space around us has profound implications for medicine, technology, and the future of robotics.

In the field of neuroprosthetics, these findings could help create artificial limbs that feel more natural—not just in movement, but in how they integrate with the user’s sense of space. Imagine a prosthetic arm that knows when someone’s hand is approaching too fast, and reflexively prepares for impact—just like your biological limb would.

In robotics, this research could pave the way for machines that better understand personal space. Many current robots operate using pre-set rules about proximity. But an AI armed with an egocentric value map could adapt its behavior based on context: stepping back if it gets too close, approaching more confidently when invited, or prioritizing actions based on safety and usefulness.

“This could revolutionize human–robot interaction,” said Iannetti. “Robots could simulate egocentric value maps to develop adaptive, context-specific representations of appropriate human interaction distances, making collaboration more natural and effective.”

What’s Next: Beyond the Bubble

The team isn’t finished. While their model already matches decades of neuroscience data, they believe there’s more ground to cover. One current limitation is that the reinforcement learning framework they used doesn’t explicitly account for sensory uncertainty—a big factor in how real organisms perceive and act.

To fix that, the researchers plan to build models based on active inference, a more complex approach that incorporates uncertainty and predictive processing. They also aim to integrate more real-world data, collaborating with labs that collect high-resolution brain recordings during live interactions.

It’s a step toward what might eventually become a unified theory of how organisms—biological or artificial—sense, evaluate, and navigate the world just beyond their skin.

The Intimate Intelligence of Space

At its core, this research reminds us of how deeply the brain is tuned to the immediate environment—not in abstract ways, but through the very real physics of motion, value, and touch. That personal space you feel when someone leans in too close? It’s not just social instinct—it’s the work of thousands of neurons performing a delicate, ongoing calculation.

And now, thanks to a combination of curiosity, computation, and creativity, we’re beginning to understand that dance—not only in our own brains, but in the machines we design.

In the end, this invisible map might be one of the most tangible links between self-awareness, action, and the shared space in which we all exist.

Reference: Rory John Bufacchi et al., "Egocentric value maps of the near-body environment," Nature Neuroscience (2025). DOI: 10.1038/s41593-025-01958-7