The world of artificial intelligence (AI) is evolving at an astonishing pace, driven by the need for increasingly powerful computing systems. At the heart of many modern AI tasks lies tensor computation: the mathematical operations on multidimensional arrays of numbers that power everything from image recognition to natural language processing. But as the demand for faster and more efficient computing grows, traditional digital platforms like GPUs are struggling to keep up in both speed and energy efficiency. That’s where a groundbreaking new approach comes in, developed by researchers at Aalto University: single-shot tensor computing at the speed of light. This innovation, which harnesses optical computation, promises to revolutionize AI hardware and could pave the way for next-generation artificial general intelligence (AGI) hardware.

The problem with traditional computing is simple yet profound. Classical computers perform tensor operations through step-by-step calculations, an approach limited by both speed and energy consumption. As AI models grow in size and complexity, the volume of data they must process keeps rising, which slows performance and drives up energy costs. To overcome these hurdles, researchers have turned to light, exploiting its speed and its natural ability to carry out many operations simultaneously. The result? A breakthrough in tensor computation that could reshape the future of AI.

Unleashing the Power of Light

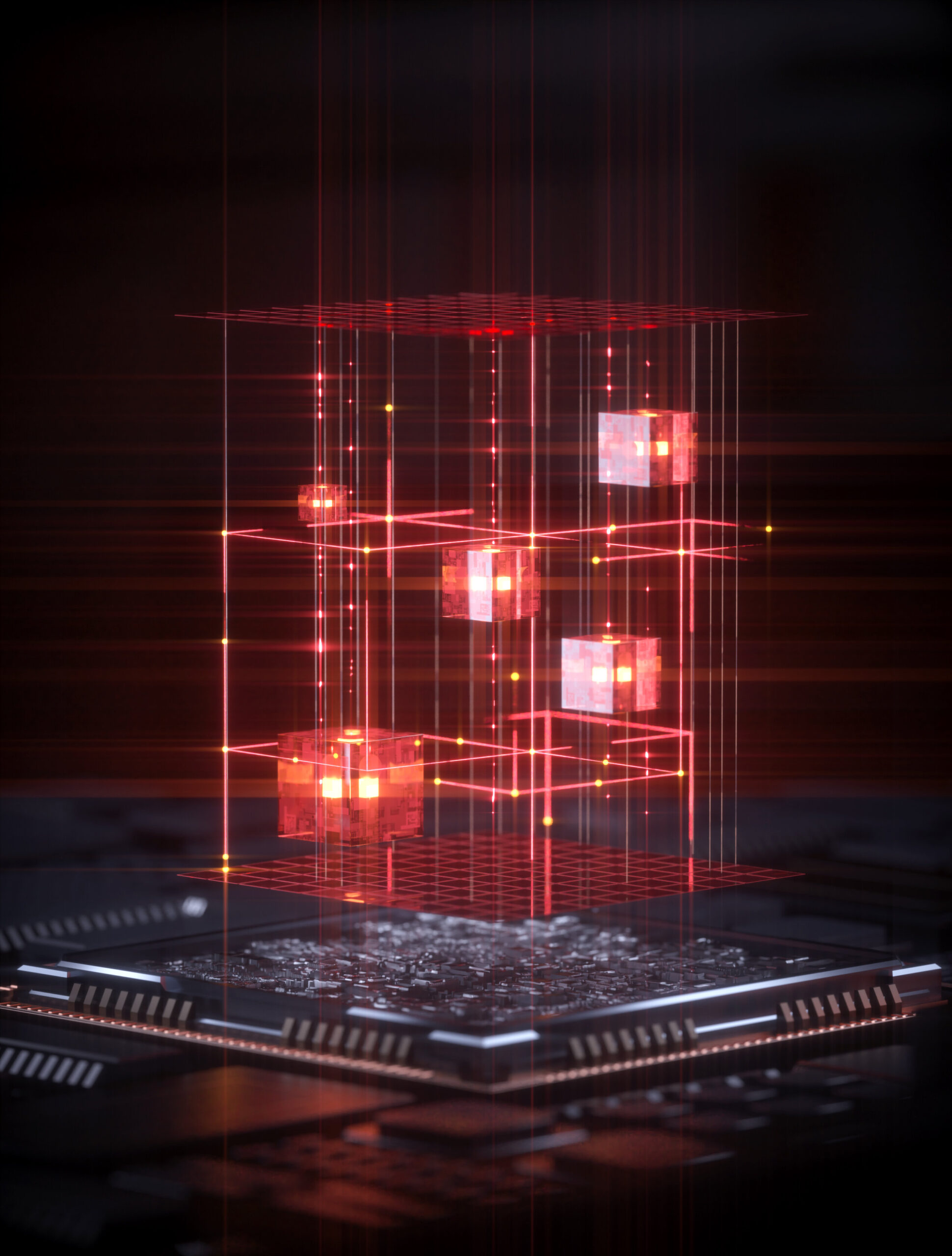

The work, led by Dr. Yufeng Zhang and his team from Aalto University’s Photonics Group, represents a leap forward in the quest for faster, more efficient AI computation. Published in Nature Photonics, their research demonstrates tensor operations performed with a single propagation of light, a method known as “single-shot tensor computing.” The concept is powerful because it exploits physical properties of light, such as its amplitude and phase, to perform complex calculations in parallel. Instead of working through the operations as a sequence of discrete steps, as electronic processors do, light handles them all at once, creating the potential for extraordinary speed and efficiency.

“Imagine you are rotating, slicing, or rearranging a Rubik’s cube along multiple dimensions,” explains Dr. Zhang. “This is similar to the mathematics behind tensor operations, but light can complete these steps in one go, rather than one at a time.” Light’s ability to perform these computations simultaneously is what makes this breakthrough so exciting. For AI, where tensor operations form the backbone of machine learning algorithms, this development could be transformative.

From Digital Data to Light Waves

The innovation behind this method lies in how digital data is encoded into light. The researchers use the amplitude and phase of light waves to represent numbers, effectively turning them into physical properties of the optical field. When these light waves interact, they naturally carry out mathematical operations such as matrix and tensor multiplications—the building blocks of deep learning algorithms.
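To make the encoding idea concrete, here is a minimal Python sketch of a toy model, not the authors’ optical setup. It assumes each number is carried by one complex field value whose magnitude is the light’s amplitude and whose angle is its phase, and it models a passive optical element as a hypothetical grid of complex transmission coefficients. Because coherent light fields add, each output port collects a weighted sum of all inputs, which is exactly a matrix-vector product. The helper names `encode`, `decode`, and `propagate` are illustrative only.

```python
import cmath
import math

# Toy model (an assumption, not the authors' optical setup): each number is
# carried by one complex field value whose magnitude is the light amplitude
# and whose angle is the optical phase.


def encode(amplitude: float, phase: float) -> complex:
    """Build the complex field value A * exp(i * phi)."""
    return amplitude * cmath.exp(1j * phase)


def decode(field: complex) -> tuple[float, float]:
    """Read back (amplitude, phase) from a complex field value."""
    return abs(field), cmath.phase(field)


def propagate(transmission: list[list[complex]], fields: list[complex]) -> list[complex]:
    """Pass input fields through a hypothetical passive linear optical element.

    Output port i receives the coherent sum of every input field j, scaled and
    phase-shifted by transmission[i][j]. Written out, that sum is a
    matrix-vector product; in the optics it would happen during one pass.
    """
    return [sum(t * e for t, e in zip(row, fields)) for row in transmission]


# The vector [2, -1]: a phase of pi flips the sign of the second entry.
x = [encode(2.0, 0.0), encode(1.0, math.pi)]
# Hypothetical 2x2 weights, encoded the same way: [[1, 3], [0.5, -2]].
W = [[encode(1.0, 0.0), encode(3.0, 0.0)],
     [encode(0.5, 0.0), encode(2.0, math.pi)]]

y = propagate(W, x)
print([round(v.real, 6) for v in y])  # [-1.0, 3.0]
```

A real photodetector measures intensity, proportional to |E|², which discards the phase; recovering signed results in practice needs an extra step, such as interfering the output with a reference beam. The sketch skips that detection step.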

In a conventional system, tensor operations are carried out sequentially by digital circuits, with each step solving a tiny piece of the overall puzzle. With the optical approach developed by Dr. Zhang’s team, the calculations happen all at once, in parallel, as the light propagates. This allows for faster processing, lower power consumption, and a path to scaling up to much larger workloads. By using multiple wavelengths of light, the researchers have extended the method to higher-order tensor operations, bringing it closer to the complexity of real-world AI applications.
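The role of multiple wavelengths can be illustrated with a small Python toy model. It assumes, for illustration only, that several wavelength channels share one optical path without mixing and that each channel performs its own matrix-matrix product in a single pass. Stacking the per-channel results yields a third-order tensor C[k][i][j] = Σ_m A[k][i][m]·B[k][m][j], one simple example of a higher-order tensor operation. This is not the paper’s actual wavelength scheme, and `matmul` and `multi_wavelength` are hypothetical names.

```python
# Toy model, an assumption for illustration: K wavelength channels share one
# optical path but do not mix, so channel k computes its own product
# A[k] @ B[k]. Stacking the K results gives a third-order tensor C with
# C[k][i][j] = sum over m of A[k][i][m] * B[k][m][j].

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Ordinary matrix product, standing in for one single-shot optical pass."""
    cols = list(zip(*b))  # columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def multi_wavelength(a: list[Matrix], b: list[Matrix]) -> list[Matrix]:
    """One matrix product per wavelength channel.

    The loop runs channels one after another; in the optical picture they
    would proceed concurrently in the same propagation.
    """
    if len(a) != len(b):
        raise ValueError("need one A and one B matrix per wavelength channel")
    return [matmul(a_k, b_k) for a_k, b_k in zip(a, b)]


# Two hypothetical wavelength channels, each carrying a 2x2 problem.
A = [[[1, 2], [3, 4]], [[0, 1], [1, 0]]]
B = [[[5, 6], [7, 8]], [[2, 3], [4, 5]]]
C = multi_wavelength(A, B)
print(C)  # [[[19, 22], [43, 50]], [[4, 5], [2, 3]]]
```

Adding a wavelength adds a whole extra matrix product without adding another pass, which is why extra wavelengths raise the order of the tensor that can be processed at once.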

Breaking the Limits of Traditional Computing

The power of this optical computing approach lies in its ability to overcome barriers that traditional electronics struggle with. GPUs, for instance, which are commonly used for AI tasks, rely on electronic circuits to perform tensor operations. While GPUs have been revolutionary in accelerating computation, they come with significant limitations: high energy consumption, heat generation, and the fact that, although they run thousands of operations in parallel, each tensor operation is still executed as a sequence of discrete arithmetic steps and memory transfers. As data volumes grow rapidly and AI models become more complex, the need for a new approach becomes ever clearer.

Dr. Zhang compares the process of traditional computing to a customs officer inspecting each parcel through multiple machines with different functions, sorting them into the correct bins one by one. In contrast, the optical method developed by his team is like merging all parcels and machines together, allowing everything to be sorted at once with just a single pass of light. This makes the process far more efficient, both in terms of time and energy.

Another key advantage of optical computing is its simplicity. The optical operations occur passively as the light propagates, so no active control or electronic switching is needed during computation. This significantly reduces the complexity and power requirements of the system, making it attractive for integration into real-world computing platforms.

The Potential Impact on AI and Beyond

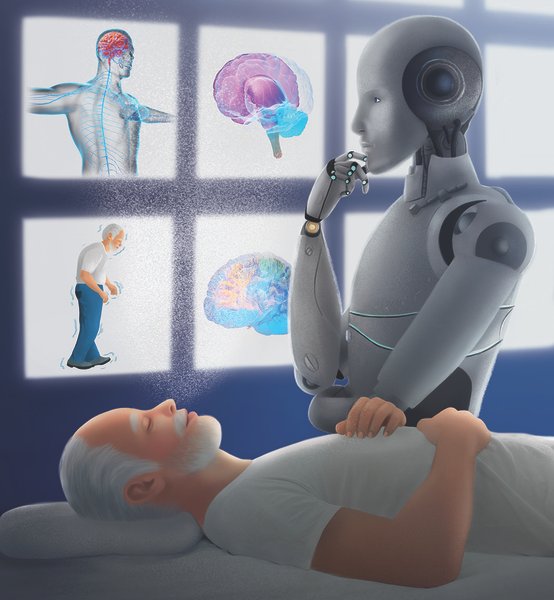

The implications of this research extend beyond faster AI. If the technology can be successfully integrated into practical hardware, it could have profound effects on industries ranging from healthcare to finance, and from robotics to telecommunications. Optical computing could dramatically shorten AI tasks that now take hours or days, bringing some within reach of real-time operation and opening the door to new applications. Imagine, for example, medical imaging systems that process vast amounts of data almost instantly, or autonomous vehicles that make faster, more accurate decisions based on real-time data.

The research team envisions a future where optical computation is embedded directly into photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption. Professor Zhipei Sun, a leader of the Photonics Group at Aalto University, emphasizes that this technology could be implemented on almost any optical platform, making it scalable and adaptable for a wide range of uses. As the technology matures, it could be integrated with existing hardware and platforms, further accelerating the evolution of AI systems.

A Glimpse into the Future of Computing

The single-shot tensor computing method developed at Aalto University is more than just a scientific breakthrough—it’s a vision of the future of computing. If the research continues to progress as expected, we could see optical computing systems deployed within the next three to five years. This would mark the beginning of a new era in AI, one where optical processors handle the complex mathematical computations that are currently bottlenecking digital platforms.

Ultimately, the goal is to create a new generation of optical computing systems that perform AI tasks much faster and more efficiently than today’s electronic systems. This could bring significant improvements across a wide range of fields, from autonomous systems to drug discovery and from climate modeling to personalized education. By harnessing the speed and power of light, researchers are laying the foundation for next-generation artificial general intelligence hardware: processors that make AI faster, more powerful, and more energy-efficient than ever before.

In the coming years, as this technology develops, the world may look back on this moment as the beginning of a major shift in computing. Light, which has long been a symbol of speed and brilliance, is finally becoming the engine that powers our most ambitious technological dreams. As this new era unfolds, one thing is clear: the future of AI is bright, and it runs at the speed of light.

More information: Direct tensor processing with coherent light, Nature Photonics (2025). DOI: 10.1038/s41566-025-01799-7.