There was a time—not so long ago—when intelligence was a uniquely human trait. The capacity to learn, reason, adapt, and create belonged solely to us. Then came machines: calculators, then computers, and finally, something else entirely—machines that learn. Artificial Intelligence was once a fringe fantasy confined to science fiction. Today, it shapes nearly every corner of modern life.

AI recommends the next song you’ll listen to, routes your GPS through back alleys to save you five minutes, flags fraudulent credit card activity before you even know it happened, and in some studies matches seasoned doctors at diagnosing certain diseases. It writes poetry, solves equations, creates art, and yes, even helps build itself. As this artificial brain grows more capable and more entwined with our daily lives, a pressing question looms larger than ever: is it taking over the world, or making it better?

The answer, it turns out, is as complex and fascinating as the technology itself.

Understanding the Brain Behind the Machine

To know whether AI is a threat or a gift, we must first understand what it really is. Artificial Intelligence is not one single thing. It is a constellation of technologies—machine learning, deep learning, natural language processing, computer vision, robotics—that aim to replicate or simulate human intelligence.

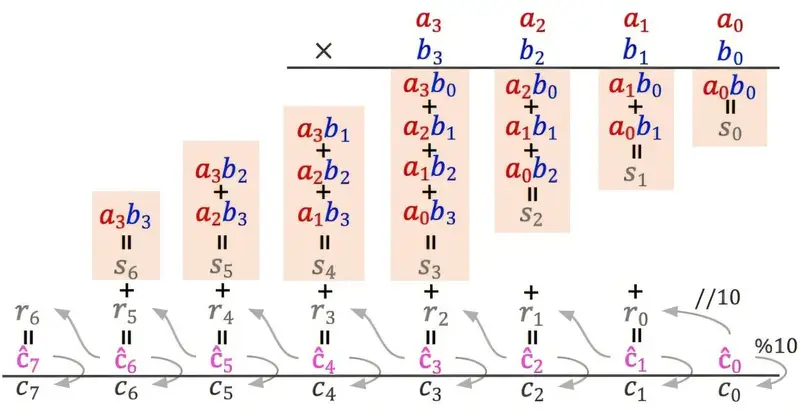

At its core, AI is about pattern recognition and decision-making. Machine learning algorithms analyze vast amounts of data and learn patterns that humans may miss. Deep learning, loosely modeled on the brain’s networks of neurons, has given machines the ability to recognize images, translate languages, and even pick up on context in speech. As a rule, the more relevant and representative data these systems absorb, the more accurate they become.
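To make "learning patterns from data" concrete, here is a minimal sketch of one of the simplest learning methods, a nearest-centroid classifier. It is an illustration only, not how any production system works. The toy transactions, the labels, and the function names `train` and `predict` are all hypothetical.

```python
from statistics import mean

def train(examples):
    """Learn one centroid (average point) per label from (features, label) pairs."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    # Average each feature column separately for every label.
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

# Hypothetical toy data: (amount in dollars, hour of day) -> label.
examples = [
    ((12.0, 14), "normal"),
    ((30.0, 10), "normal"),
    ((25.0, 18), "normal"),
    ((950.0, 3), "fraud"),
    ((1200.0, 2), "fraud"),
    ((800.0, 4), "fraud"),
]

model = train(examples)
print(predict(model, (1000.0, 3)))   # prints "fraud"
print(predict(model, (20.0, 13)))    # prints "normal"
```

Nothing in the code says what fraud looks like. The rule comes entirely from the examples, which is why the quality of those examples matters so much.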

Yet, unlike a human child, AI does not truly “understand.” It has no consciousness, no emotion, no goals—unless those goals are programmed into it. AI is not alive. It doesn’t dream. But it can now do many things we once believed only living beings could.

A Tool That’s Reshaping Society

From healthcare to agriculture, transportation to education, AI is already transforming industries in profound ways. It’s helping radiologists detect cancerous tumors with greater precision. It’s enabling blind people to navigate cities using AI-powered glasses. Farmers are using it to optimize crop yields by analyzing soil health and weather patterns. In classrooms, adaptive learning software tailors education to each student’s unique pace and style.

AI is also accelerating the pace of discovery. In 2020, DeepMind’s AlphaFold 2 made a breakthrough on the decades-old problem of protein structure prediction, allowing scientists to predict the 3D structure of many proteins with remarkable accuracy, something that could transform drug discovery and medicine. In climate science, AI models are analyzing satellite data to better predict natural disasters and track environmental change.

All this is making the world smarter, faster, and in many ways, better. But as with any powerful tool, the benefits depend on how it’s used—and who controls it.

The Automation Dilemma

One of the earliest and most enduring fears about AI is the loss of jobs. And it’s a fear rooted in reality. Machines have always displaced human labor, from the power loom to the assembly line to the self-checkout kiosk. AI takes this further by threatening not just manual labor, but cognitive work as well.

Work once done by banking analysts, paralegals, data entry clerks, translators, and even software developers, in many cases jobs that required years of education, is now increasingly being done, or at least assisted, by algorithms. A 2023 report by Goldman Sachs estimated that generative AI could expose the equivalent of 300 million full-time jobs to automation.

But the picture is not all bleak. While AI eliminates some roles, it also creates new ones: AI trainers, ethics officers, data scientists, robotics engineers. Historically, technological revolutions have eventually led to net job growth and higher productivity. The question is not just what jobs will exist, but who will have access to them.

As automation spreads, the gap between those who can work alongside machines and those replaced by them may widen. Without thoughtful policies—reskilling programs, education reform, social safety nets—AI could deepen inequality even as it grows the economy.

The Surveillance Paradox

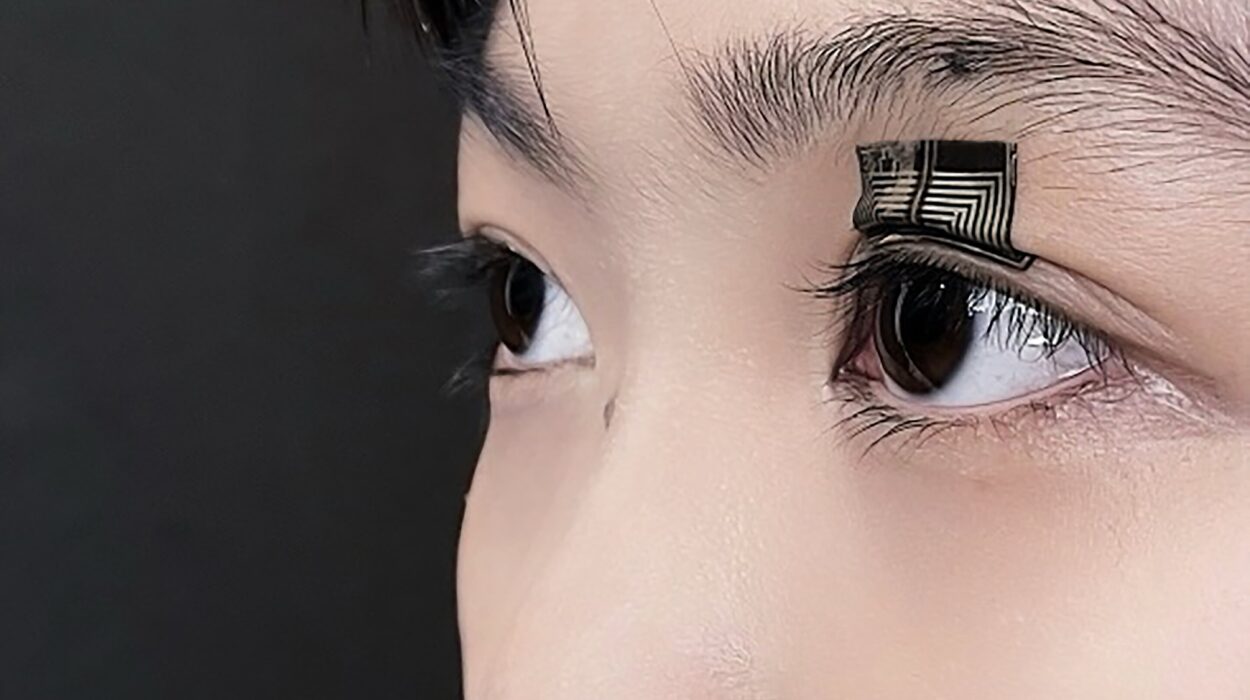

AI doesn’t just see. It watches. It doesn’t just hear. It listens, categorizes, predicts. And in the hands of governments and corporations, it has become a powerful tool for surveillance.

Facial recognition systems can now track individuals across cities in real time. Social media algorithms can infer everything from your political leanings to your sexual orientation. Predictive policing tools use historical crime data to forecast where crimes are likely to occur—often perpetuating racial biases embedded in the data itself.

In China, AI-driven surveillance is used to monitor minority populations and suppress dissent. In the U.S., private companies and law enforcement agencies have quietly built vast networks of surveillance without public consent. The right to privacy—a cornerstone of democracy—is increasingly difficult to defend in a world where every move can be tracked and analyzed.

Yet, AI can also protect. It’s used to detect cyberattacks, track missing persons, and uncover criminal networks. The same technology that threatens freedom can also safeguard it—depending on who holds the keys.

The Rise of the Machines—Or Just Hype?

Stories about AI “taking over” often conjure images of rogue superintelligences, conscious machines that escape human control and wreak havoc. This idea, popularized by films like The Terminator and Ex Machina, has dominated the public imagination. But how close is this to reality?

Most researchers agree that general AI, meaning machines with human-level understanding across all domains, does not yet exist. Today’s AI is largely narrow and specialized. It can beat humans at chess, detect pneumonia from X-rays, or write human-like essays, but it still struggles to reason reliably across contexts and lacks robust common sense.

That said, research is advancing rapidly. OpenAI’s GPT-4 and Google’s Gemini are beginning to exhibit behaviors once thought impossible for machines—fluid conversation, complex reasoning, even creativity. The line between mimicry and intelligence grows blurrier by the year.

Some prominent voices, including Elon Musk and the late Stephen Hawking, have warned that unchecked AI development could pose existential risks. Others, like Yann LeCun, Meta’s Chief AI Scientist, believe such fears are overblown. The debate is far from settled.

What’s clear is that AI is becoming more powerful, and with power comes responsibility. Whether AI evolves into a helpful companion or a dangerous force depends less on the technology itself and more on the values of the people building it.

Bias in the Machine

AI learns from data. But data is not neutral. It reflects the world as it is—with all its injustices, prejudices, and historical baggage. When AI systems are trained on biased data, they often replicate and even amplify those biases.

Hiring and job recommendation algorithms have been found to favor male candidates over female ones. Facial recognition software has shown markedly higher error rates for darker-skinned individuals, especially women. Predictive policing disproportionately targets communities of color. These are not random bugs; they are consequences of design choices, data selection, and blind spots.
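A small sketch shows how this replication can happen. Assume a hypothetical hiring history in which equally qualified men and women were hired at different rates, and a deliberately naive "model" that simply learns the historical hire rate for each group. The data, the group names, and the functions `fit_hire_rates` and `recommend` are invented for illustration and do not describe any real system.

```python
from collections import defaultdict

def fit_hire_rates(records):
    """Estimate the historical hire rate for each (group, qualified) combination."""
    counts = defaultdict(lambda: [0, 0])  # key -> [number hired, total seen]
    for group, qualified, hired in records:
        counts[(group, qualified)][0] += int(hired)
        counts[(group, qualified)][1] += 1
    return {key: hired / total for key, (hired, total) in counts.items()}

def recommend(rates, group, qualified, threshold=0.5):
    """Recommend a candidate if similar past candidates were usually hired."""
    return rates.get((group, qualified), 0.0) >= threshold

# Hypothetical history: every candidate is qualified, but past decisions
# favored men (8 of 10 hired) over women (3 of 10 hired).
history = (
    [("men", True, True)] * 8 + [("men", True, False)] * 2
    + [("women", True, True)] * 3 + [("women", True, False)] * 7
)

rates = fit_hire_rates(history)
print(rates[("men", True)], rates[("women", True)])   # prints "0.8 0.3"
print(recommend(rates, "men", True))                  # prints "True"
print(recommend(rates, "women", True))                # prints "False"
```

The model was never told to discriminate. It was told to imitate past decisions, and the past decisions were skewed. Real systems are far more complex, but the same mechanism can operate through proxy features even when the group label itself is removed.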

To build fair and ethical AI, we must interrogate not just the code, but the culture behind it. Diverse teams, transparent practices, and accountability mechanisms are essential. Otherwise, we risk encoding inequality into the digital infrastructure of the future.

The Ethics of Creation

AI raises questions that go beyond science and enter the realm of philosophy. What does it mean to create something that can mimic human behavior? If a machine generates art, who owns it? If it makes a mistake—crashes a car, misdiagnoses a patient—who is responsible?

There are no easy answers. Ethics in AI is a rapidly growing field, but it often lags behind technological progress. Tech companies race to release products, driven by profit and market pressure, while regulatory frameworks struggle to keep up.

Some countries are beginning to take action. The European Union’s AI Act is one of the first comprehensive efforts to regulate artificial intelligence. It classifies AI systems by risk level, from minimal to unacceptable, and imposes restrictions accordingly. But globally, the landscape remains patchy.

If we want AI to serve humanity, not harm it, we must build not just smarter machines, but wiser societies.

Collaborators, Not Competitors

Despite fears, many experts envision a future where humans and machines work together, not against each other. AI can augment human capabilities, freeing us from drudgery and enabling deeper creativity.

In medicine, AI doesn’t replace doctors—it assists them. It offers second opinions, catches anomalies, and accelerates research. In writing and journalism, AI can help draft articles, but the spark of original thought remains human. In education, AI tutors can personalize learning, but the empathy of a great teacher is still unmatched.

Collaboration, not competition, may be the key to a harmonious future. Just as calculators didn’t destroy math but enhanced it, AI may elevate what it means to be human, if we let it.

Toward a Conscious Future

Artificial Intelligence is not destiny. It is design. It reflects our goals, our flaws, our dreams. It is a mirror held up to civilization—a mirror we are only beginning to understand.

As we stand on the edge of a new epoch, we must decide what kind of future we want to build. A future of control or liberation? Of domination or cooperation? Will we shape AI to reflect our highest ideals—or allow it to magnify our darkest impulses?

Already, the world is being redrawn by this invisible force. Algorithms decide who gets a loan, who sees a job ad, who receives healthcare. This is not science fiction. It is now.

Yet within this moment lies immense potential. AI could help solve the climate crisis, cure diseases, end hunger, and create a renaissance of human creativity. But only if we approach it with humility, wisdom, and courage.

We are not bystanders. We are the architects.