In a quiet lab at the University of California San Diego, a small team of researchers may have just cracked one of the biggest challenges in medical AI: how to train powerful image recognition tools with only a handful of examples. Their innovation? A new artificial intelligence system that learns faster, needs far less data, and could help bring life-saving diagnostics to hospitals and clinics around the world.

Medical imaging is one of the most promising frontiers in artificial intelligence. From X-rays to MRIs, doctors rely on images to detect cancer, track organ damage, and diagnose disease. But training machines to understand those images—especially down to the level of individual pixels—is no easy task. Traditional methods require massive amounts of data: thousands of scans that must be painstakingly labeled by experts, identifying exactly which parts of an image show healthy tissue, tumors, or other abnormalities.

For many clinics, especially in resource-limited settings, that kind of data simply doesn’t exist. The result is a widening technology gap between the AI tools being developed and the reality of clinical practice. But now, a breakthrough from UC San Diego researchers promises to change that.

From Scarcity to Possibility

Led by electrical and computer engineering professor Pengtao Xie and Ph.D. student Li Zhang, the team has created an AI tool that flips the script on traditional training. Rather than demanding enormous amounts of labeled data, this system can learn from just a small set of annotated images—sometimes as few as 40. And remarkably, it doesn’t just match existing methods. In many cases, it outperforms them.

“We wanted to break the bottleneck,” said Zhang, the lead author of the study, published in Nature Communications. “In real-world scenarios, doctors might only be able to label a few dozen images. So we built a system that could learn from that—without sacrificing performance.”

The tool is designed for medical image segmentation, a crucial task where every pixel in an image is classified. This allows AI to isolate a tumor from surrounding tissue, identify polyps in a colonoscopy, or even map delicate placental vessels during fetal surgery.
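
To make "every pixel is classified" concrete, here is a minimal Python sketch. It assumes a model has already produced a per-pixel lesion probability map. The helper names `segment` and `dice`, the 0.5 threshold and the 4×4 toy arrays are hypothetical illustrations, not part of the study. The Dice overlap is one common way to score a predicted mask against an expert's; the article does not say which metric the team reported.

```python
def segment(prob_map, threshold=0.5):
    """Classify every pixel: 1 (lesion) if its probability >= threshold, else 0."""
    return [[int(p >= threshold) for p in row] for row in prob_map]


def dice(pred, truth):
    """Dice overlap of two binary masks: 2 * |A and B| / (|A| + |B|)."""
    overlap = sum(a & b for p_row, t_row in zip(pred, truth)
                  for a, b in zip(p_row, t_row))
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    return 1.0 if total == 0 else 2 * overlap / total


# Hypothetical model output: probability that each pixel belongs to a lesion.
probs = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.8, 0.9, 0.1],
    [0.1, 0.7, 0.4, 0.1],
    [0.0, 0.1, 0.1, 0.0],
]
# Hypothetical expert annotation (mask) for the same 4x4 image.
expert = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

mask = segment(probs)
print(mask)                        # [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 0, 0], [0, 0, 0, 0]]
print(round(dice(mask, expert), 3))  # 0.857
```

The predicted mask misses one of the four lesion pixels the expert marked, so its Dice score is 6/7 rather than a perfect 1.0.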

Conventional deep learning methods excel at segmentation, but only when fed thousands of pixel-perfect annotations. Zhang’s system needs just a fraction of that: it can cut the amount of labeled training data required by up to a factor of 20, dramatically lowering annotation costs and time and opening the door to widespread use in clinical settings.

How the AI Learns from So Little

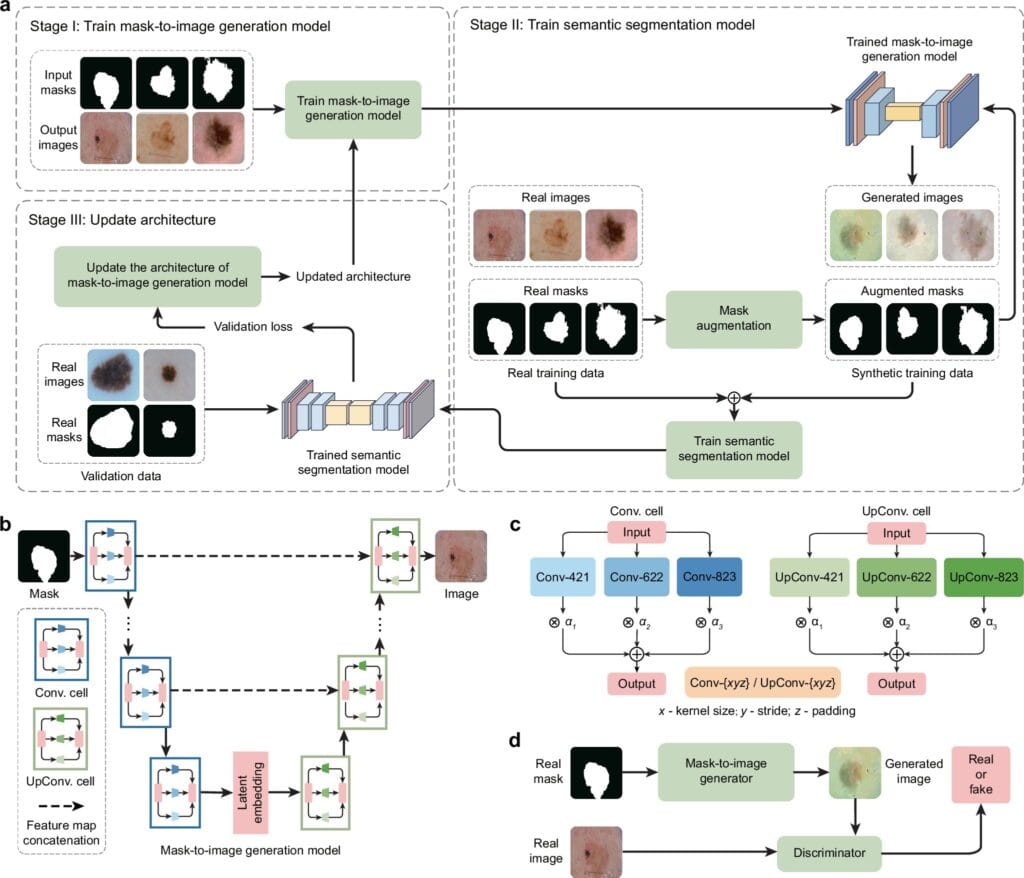

At the heart of this new system lies a surprisingly human-like strategy: creativity. The AI doesn’t just memorize what it sees—it imagines new examples based on what it’s learned, and then uses those to improve its own understanding.

Here’s how it works. The system begins with a handful of real-world image-segmentation pairs: pictures in which each pixel has already been labeled as healthy, diseased, or something else. From those examples, it learns to generate new synthetic images that mimic real medical scans, each paired with a corresponding mask, the pixel-by-pixel label map that marks features such as tumors or lesions.
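
The article does not say how the generator is built. As a deliberately crude, non-learned stand-in, the Python sketch below shows one way to keep a synthetic image and its mask in agreement: draw the mask first, then paint the image from it. The rectangular "lesion", the intensity values and the function names are all hypothetical; in the study, the generator is a learned model fitted to the real image-mask pairs.

```python
import random


def random_mask(size, rng):
    """Draw a mask with one rectangular 'lesion' at a random spot (1 = lesion)."""
    h, w = rng.randint(2, size // 2), rng.randint(2, size // 2)
    top, left = rng.randint(0, size - h), rng.randint(0, size - w)
    return [[int(top <= r < top + h and left <= c < left + w)
             for c in range(size)] for r in range(size)]


def render_image(mask, rng, lesion=0.3, tissue=0.7, noise=0.05):
    """Paint intensities from the mask: darker lesion, brighter tissue, plus Gaussian noise."""
    return [[(lesion if m else tissue) + rng.gauss(0, noise) for m in row]
            for row in mask]


def synthetic_pair(size=8, seed=None):
    """Return (image, mask); the mask is drawn first, so the two always agree."""
    rng = random.Random(seed)
    mask = random_mask(size, rng)
    return render_image(mask, rng), mask


image, mask = synthetic_pair(seed=1)
```

Because the image is rendered from the mask, every synthetic pair arrives with an exact pixel-level label at no annotation cost, which is what makes generated data usable for training a segmenter.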

But this isn’t just random image generation. The AI is guided by a feedback loop in which it tests how well its synthetic data help a segmentation model learn. If the new data improve performance, the AI keeps going in that direction; if not, it adjusts. Over time, this loop produces synthetic images that are not only realistic but also tailored to boost segmentation accuracy.
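
The feedback loop can be illustrated with a toy in Python. Everything here is a hypothetical simplification: each "image" is reduced to single-pixel intensities, the segmenter is just an intensity cutoff, the generator has one tunable knob (`center`, where it places the lesion boundary), and that knob is tuned by simple hill climbing on the segmenter's accuracy on held-out real data. This is not the optimization procedure used in the study; it only shows the idea of letting downstream segmentation performance steer what data gets generated.

```python
import random


def generate_pairs(center, n=20, spread=0.3, seed=0):
    """Hypothetical generator: synthetic (intensity, label) pairs whose
    lesion/healthy boundary sits at `center`, the one knob being tuned."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        x = center + rng.uniform(-spread, spread)
        pairs.append((x, int(x >= center)))
    return pairs


def accuracy(cutoff, pairs):
    """Fraction of pixels labeled correctly by 'lesion if intensity >= cutoff'."""
    return sum(int(x >= cutoff) == y for x, y in pairs) / len(pairs)


def train_segmenter(pairs):
    """Toy segmenter: the intensity cutoff that best fits the training pairs."""
    return max(sorted({x for x, _ in pairs}), key=lambda t: accuracy(t, pairs))


def feedback_loop(real_train, real_val, center=0.2, step=0.1, rounds=30):
    """Tune the generator by the validation score its synthetic data produce."""
    def downstream_score(c):
        model = train_segmenter(real_train + generate_pairs(c))
        return accuracy(model, real_val)

    best = downstream_score(center)
    for _ in range(rounds):
        trial = center + step
        score = downstream_score(trial)
        if score > best:   # synthetic data helped: keep moving this way
            center, best = trial, score
        else:              # it did not: reverse direction, take smaller steps
            step = -step / 2
    return center, best


# Hypothetical data: five labeled real pixels for training, a few more for validation.
real_train = [(0.1, 0), (0.3, 0), (0.5, 0), (0.7, 1), (0.9, 1)]
real_val = [(i / 20, int(i / 20 >= 0.6)) for i in range(21)]
center, score = feedback_loop(real_train, real_val)
print(f"generator boundary {center:.2f}, validation accuracy {score:.2f}")
```

The loop never accepts a generator setting that makes the segmenter worse on real validation data, which mirrors the article's point that usefulness, not realism alone, drives what gets generated.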

“This is the first system to tightly integrate data generation and model training,” said Zhang. “The segmentation performance itself helps shape what kind of data the system creates. It’s not just about realism—it’s about usefulness.”

Big Results from Small Data

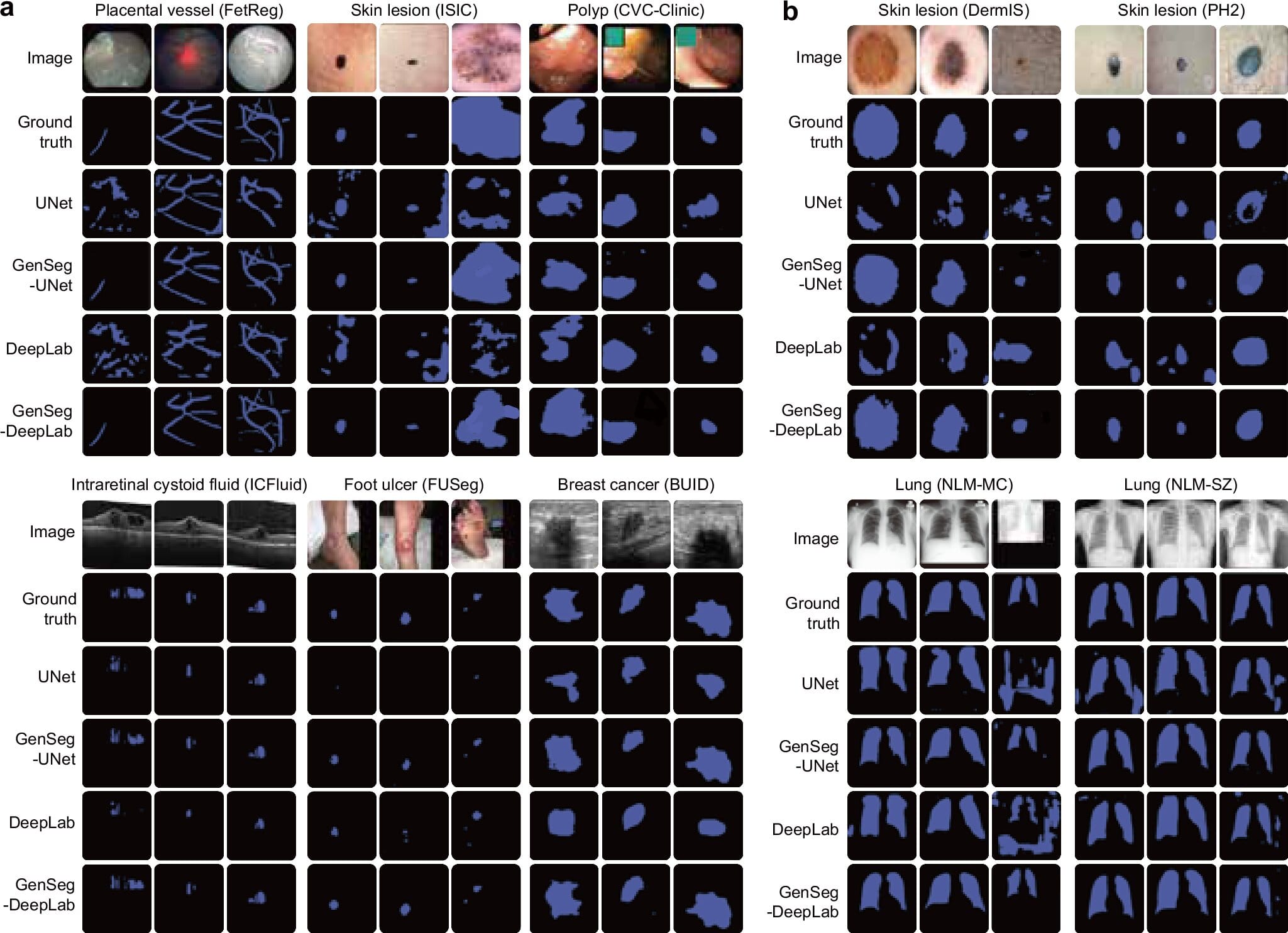

To test the tool, the team applied it across a range of medical imaging tasks. It learned to detect skin lesions from dermoscopy images. It segmented breast tumors in ultrasound scans. It mapped placental vessels from fetoscopic surgery footage, found polyps during colonoscopy, and even identified diabetic foot ulcers in regular photographs.

And the results were stunning. In situations where annotated data were extremely scarce, the AI boosted segmentation accuracy by 10 to 20% compared to existing methods. Even more impressive, it often matched or surpassed the performance of standard models trained on 8 to 20 times more data.

That’s not just a statistical win; it’s a potential lifesaver. In dermatology, for example, early and accurate identification of suspicious skin lesions can be the difference between life and death. Imagine a rural clinic where a dermatologist can label only 40 images. With this tool, those 40 could be enough to train a system that helps flag potentially cancerous moles in real time.

“It could help doctors make faster, more accurate diagnoses,” Zhang said. “That’s especially important in underserved areas, where specialists may be limited.”

A Future Where AI Is a Doctor’s Assistant

The implications of this tool stretch far beyond one lab. In an age where AI holds enormous promise for healthcare, the barriers of data scarcity and annotation cost have limited its reach. This new approach could remove those barriers, making intelligent diagnostic tools accessible even in remote or underfunded clinics.

It could also accelerate research. New diseases, rare conditions, and emerging medical imaging techniques often come with little or no annotated data. With this AI, researchers might no longer have to wait for massive datasets to begin developing useful tools.

And by integrating clinician feedback into future versions of the system, the UC San Diego team hopes to make it even smarter. The goal is to let doctors fine-tune the AI’s training process, ensuring that the data it creates not only improve accuracy but also reflect what truly matters in real-world medicine.

The Bigger Picture: Democratizing Medical AI

There’s something deeply compelling about a system that learns more by needing less. In a world overflowing with data, this AI tool embraces precision over quantity, modeling its learning after something more organic—how people, especially doctors, often work with limited information but still make life-saving decisions.

“Einstein showed us that we don’t need to know everything to understand something deeply,” Zhang said. “Sometimes, the key is knowing how to ask the right questions—and how to learn from what little we have.”

By allowing machines to do more with less, this breakthrough represents a quiet but powerful shift in the future of medicine. It’s a reminder that even in a pixelated scan, a few well-labeled images—paired with the right technology—can illuminate a path to better care, greater equity, and smarter solutions.

And in that sense, it’s not just an AI tool. It’s a bridge between science and humanity.

More information: Li Zhang et al, Generative AI enables medical image segmentation in ultra low-data regimes, Nature Communications (2025). DOI: 10.1038/s41467-025-61754-6