At first glance, the experiment might have looked like something out of science fiction—or perhaps a circus act. In a lab at UC Berkeley, a tall tower of Jenga blocks stood balanced and motionless. A robot, black and white, bent like a mechanical giraffe, rolled forward holding an unusual tool: a black leather whip. With a sudden flick, the whip cracked through the air, striking just the right spot to knock a single block from the tower without sending the rest tumbling.

What looked miraculous was no trick. It was the product of a new approach to robot learning, designed by researchers in Sergey Levine’s Robotic AI and Learning Lab. The technique has now allowed robots to perform a feat most humans struggle with: “Jenga whipping.” For reference, even study co-author Jianlan Luo, who tested the challenge himself with a whip in hand, admitted he had a 0% success rate.

The robot, however, mastered it completely.

The Challenge of Teaching Robots the Impossible

Teaching a robot to whip a Jenga block sounds like a stunt—but it is in fact a serious benchmark for artificial intelligence. Most robots excel at repetitive, predictable tasks, such as stacking boxes on a conveyor belt or screwing in identical bolts. The problem comes when the world becomes messy, uncertain, and unpredictable. Real-world tasks—whether flipping an egg in a pan, assembling electronics, or handling fragile materials—demand adaptability.

This is where reinforcement learning enters the picture. Unlike traditional programming, where humans hand-code instructions, reinforcement learning allows robots to learn through trial and error. They attempt a task, analyze whether they succeeded, adjust, and try again. Over time, their performance improves.
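The attempt, analyze, adjust cycle described above can be sketched in a few lines of Python. This is a toy illustration, not the Berkeley team's code: the three candidate "strike strengths" and their success probabilities are invented for the example, and the learner is a simple epsilon-greedy rule chosen for brevity.

```python
import random

def train_by_trial_and_error(success_prob, attempts=2000, explore=0.1, seed=0):
    """Toy trial-and-error learner over a fixed set of candidate actions.

    success_prob is a made-up list: the chance that each action succeeds.
    The learner never sees these numbers, only the outcome of each attempt.
    """
    rng = random.Random(seed)
    n = len(success_prob)
    tries = [0] * n
    value = [0.0] * n  # running estimate of each action's success rate
    for _ in range(attempts):
        # Attempt: usually reuse the best-known action, sometimes explore.
        if rng.random() < explore:
            action = rng.randrange(n)
        else:
            action = max(range(n), key=lambda a: value[a])
        # Analyze: did the attempt succeed? (simulated outcome)
        reward = 1.0 if rng.random() < success_prob[action] else 0.0
        # Adjust: fold the outcome into the running average for that action.
        tries[action] += 1
        value[action] += (reward - value[action]) / tries[action]
    return value

# Hypothetical task: three strike strengths, the middle one works best.
estimates = train_by_trial_and_error([0.1, 0.9, 0.3])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print("best action:", best_action)
print("estimated success rates:", [round(v, 2) for v in estimates])
```

Even in this tiny setting the learner spends many attempts finding out which option works, which hints at why real robots, facing far larger sets of possible movements, can need so many tries.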

Yet reinforcement learning is notoriously slow. A robot might require thousands of attempts before it shows competence. The Berkeley team set out to solve this problem with a new method: Human-in-the-Loop Sample-Efficient Robotic Reinforcement Learning, or HiL-SERL.

How HiL-SERL Works

At its core, HiL-SERL combines the best of both worlds: human intuition and robotic persistence. Early in training, a human researcher can “guide” the robot’s movements using a special mouse, nudging it away from catastrophic mistakes. Each correction is recorded and fed into the robot’s memory. From there, the robot not only learns from its own attempts, successful and unsuccessful, but also incorporates human feedback as part of its experience.

As Jianlan Luo explained, “I needed to babysit the robot for maybe the first 30% or something, and then gradually I could actually pay less attention.” In other words, the more the robot practiced, the less the human needed to step in. By the end, the robot was performing difficult tasks independently—and at a 100% success rate.
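The general idea of a human correcting a learner, with each correction stored alongside the robot's own experience, can be sketched as a toy simulation. Everything here is an assumption made for illustration: the one-dimensional "walkway" task, the rule-based `human_override` function standing in for a person at the controls, and the tabular Q-learning update, which is a textbook method swapped in for brevity and not the learning algorithm used in the paper.

```python
import random

ACTIONS = (-1, +1)            # index 0 = step left, index 1 = step right
GOAL, CLIFF, START = 5, 0, 2  # hypothetical walkway: goal at 5, hazard at 0

def human_override(state, action_idx):
    """Simulated supervisor: veto any move that would step into the hazard."""
    if state + ACTIONS[action_idx] == CLIFF:
        return 1              # corrective action: step right instead
    return None               # no intervention needed

def train(episodes=200, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]  # q[state][action_idx]
    buffer = []         # one memory holding robot and human transitions
    interventions = []  # number of corrections in each episode
    for ep in range(episodes):
        epsilon = 0.3 * (1 - ep / episodes)    # explore less over time
        state, corrections = START, 0
        for _ in range(30):
            # The policy proposes an action from its current estimates.
            if rng.random() < epsilon:
                proposed = rng.randrange(2)
            else:
                proposed = max((0, 1), key=lambda a: q[state][a])
            # The human may replace it; the executed action is what is stored.
            fix = human_override(state, proposed)
            action = proposed if fix is None else fix
            corrections += fix is not None
            nxt = state + ACTIONS[action]
            done = nxt in (GOAL, CLIFF)
            reward = 1.0 if nxt == GOAL else -0.05  # small cost per step
            buffer.append((state, action, reward, nxt, done))
            # Learn from a random batch of remembered transitions.
            for s, a, r, s2, d in rng.choices(buffer, k=8):
                target = r if d else r + gamma * max(q[s2])
                q[s][a] += alpha * (target - q[s][a])
            state = nxt
            if done:
                break
        interventions.append(corrections)
    return q, interventions

q, interventions = train()
early, late = sum(interventions[:50]), sum(interventions[-50:])
policy = [max((0, 1), key=lambda a: q[s][a]) for s in range(1, GOAL)]
print("corrections in first 50 episodes:", early)
print("corrections in last 50 episodes:", late)
print("greedy action per state (1 = right):", policy)
```

In this sketch the simulated supervisor steps in only when the proposed move is about to cause a failure, so the number of corrections falls as the learned estimates improve. That loosely mirrors Luo's description of needing to pay less attention as training went on.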

Beyond Jenga: Mastering Real-World Complexity

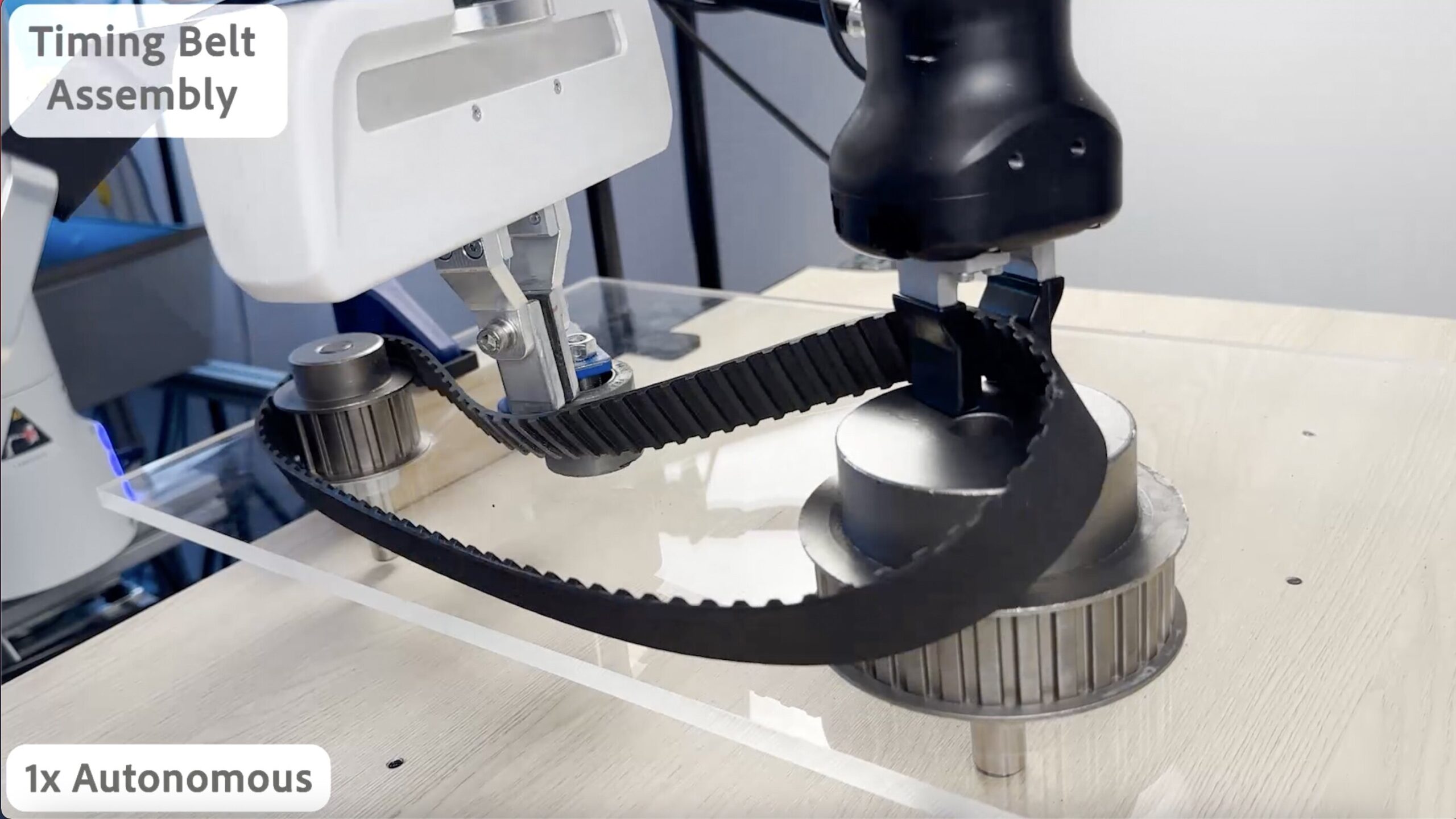

Jenga whipping was only the beginning. The research team tested the robots on a wide variety of tasks, each chosen for its unpredictability. They taught the robots to flip a raw egg in a frying pan without breaking it, to transfer an object smoothly from one robotic arm to another, and to assemble delicate machinery such as a motherboard and a car dashboard.

To make the training more realistic, the researchers even introduced deliberate mishaps: objects were moved mid-task, grippers were forced open so the robot dropped items, and assembly pieces were shifted unexpectedly. The robot adapted seamlessly, adjusting in real time to the new circumstances.

Compared to traditional methods like behavioral cloning—where robots simply copy human demonstrations—the new system was faster, more accurate, and more resilient. In the end, HiL-SERL produced robots capable of handling tasks in a messy, human-like environment, rather than the sterile perfection of a factory floor.

Why This Matters

At first, these experiments might seem playful—after all, what practical use is whipping Jenga blocks? But the implications go far deeper. The world outside of research labs is unpredictable. Objects slip, conditions change, and no two situations are quite alike. If robots are to be useful in real-world settings—from manufacturing electronics to assisting with home care—they must not only repeat tasks but adapt when things go wrong.

Luo emphasized the stakes clearly: “The bar for robot competency is very high. Regular consumers and industrialists alike don’t want to buy an inconsistent robot.” Industries such as aerospace, automobiles, and consumer electronics rely on processes that are both precise and flexible. A robot that can adapt quickly while maintaining accuracy could transform entire sectors of the global economy.

The Road Ahead

The Berkeley researchers are already envisioning the next steps. One plan is to pre-train the robots with basic object manipulation skills—like grasping or rotating—so that they don’t need to learn these fundamentals from scratch for every new task. This would accelerate learning even further, bringing robots closer to human-level adaptability.

Equally important is accessibility. Levine’s team has made their work open source, ensuring that researchers worldwide can build on it. Luo compared their vision to the accessibility of smartphones: “A key goal of this project is to make the technology as accessible and user-friendly as an iPhone. I firmly believe that the more people who can use it, the greater impact we can make.”

A Future of Collaborative Intelligence

The story of a robot whipping a Jenga block is more than just a curiosity. It represents a leap toward a future where robots can operate seamlessly alongside humans, not as clumsy machines limited to predictable routines but as adaptable partners capable of learning both from their environment and from us.

For now, the sight of a whip-wielding robot knocking a block out of a Jenga tower may draw laughter or amazement. But behind that spectacle lies a vision of technology that could one day assemble spacecraft, repair electronics, or even cook dinner in your kitchen, all while adjusting gracefully to the unpredictable rhythms of the real world.

The miracle is not just that a robot can play Jenga. It’s that a robot can now learn, adapt, and succeed in tasks as subtle and complex as those we once thought only humans could handle.

More information: Jianlan Luo et al., Precise and dexterous robotic manipulation via human-in-the-loop reinforcement learning, Science Robotics (2025). DOI: 10.1126/scirobotics.ads5033