An innovation that brings robots closer to the human touch could transform how machines handle the delicate and the unpredictable.

In a world where automation is rapidly becoming part of everyday life—from the precision of robotic surgery to the speed of package-sorting lines—one deceptively simple problem continues to pose a massive challenge: how do you teach a robot to hold something without letting it slip?

Slippery, asymmetric, or fragile objects—like a wet glass, a crumpled package, or a surgical tool—are notoriously difficult for machines to handle. Traditional robots often rely on brute force, gripping objects tightly to prevent slippage. But that strategy can backfire. Squeeze too hard and you risk crushing what you’re holding. Grip too lightly, and you drop it. For years, engineers have walked a tightrope, searching for the elusive balance between secure handling and sensitivity.

Now, a new study led by researchers at the University of Surrey, published in Nature Machine Intelligence, may offer a way forward. Inspired by the subtle, instinctive ways humans prevent slips, the team has taught robots to move more like us—and less like machines.

When Robots Feel a Slip Coming

Think about what you do when you feel a plate slipping out of your hand. You don’t just grip it tighter. You adjust your motion—maybe tilt your wrist, slow your step, or change how you’re holding the object. These micro-adjustments are so automatic we barely notice them, yet they are powered by a combination of tactile sensing and movement control shaped by a lifetime of experience.

The Surrey-led team has now replicated this kind of behavior in robots.

“We’ve taught our robots to take a more human-like approach,” says Dr. Amir Esfahani, associate professor in robotics at the University of Surrey and the study’s lead researcher. “They can now sense when something might slip and adjust how they move to keep it secure.”

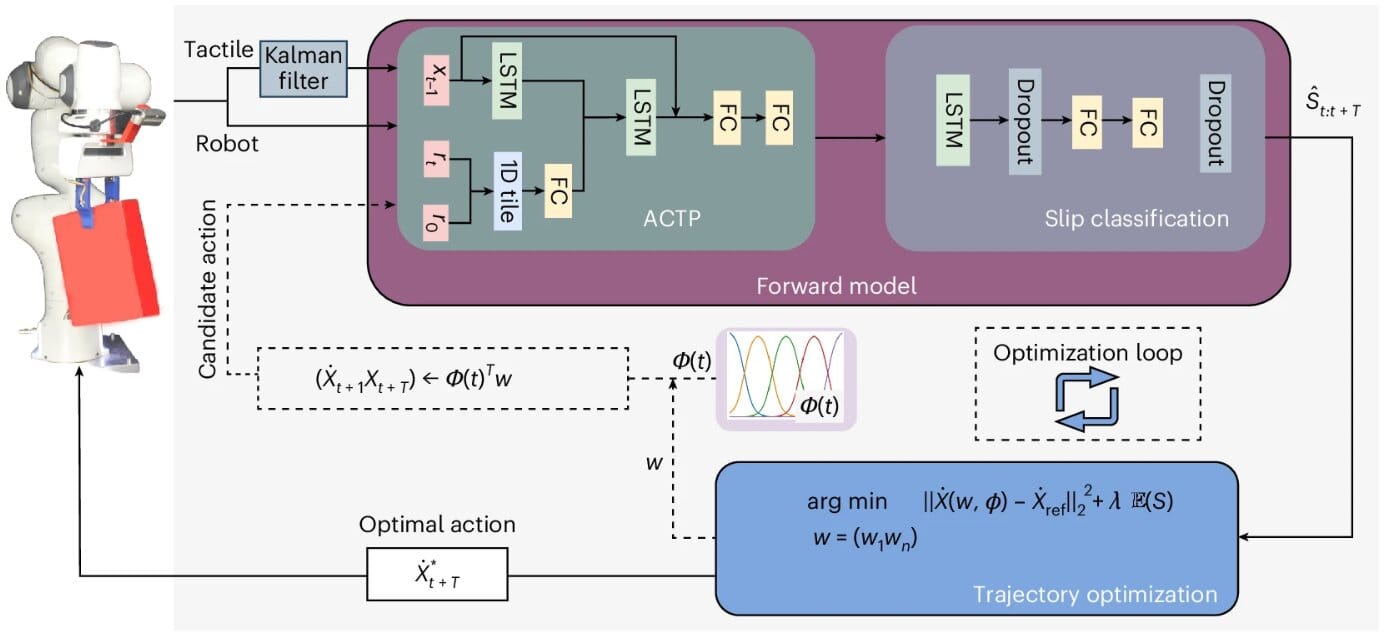

This breakthrough is built around a predictive control system powered by what the researchers call a tactile forward model. Rather than waiting for something to go wrong and reacting, the robot continually predicts how likely it is that the object in its grip will slip. It then fine-tunes its movements in real time—altering speed, orientation, and motion path to maintain a firm hold without applying unnecessary force.

In effect, the robot is learning to “feel” its way through uncertainty.
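To make the predict-then-adjust idea concrete, here is a minimal Python sketch. It is not the study's implementation: the function names, the logistic risk curve, and every number in it are hypothetical stand-ins for a learned tactile forward model. It also adjusts only speed, whereas the system described above changes orientation and motion path as well. The point is the control pattern: score each candidate motion for slip risk before committing to it, then pick the fastest one that stays under a risk threshold, leaving grip force untouched.

```python
import math


def predict_slip_probability(shear_load: float, speed: float) -> float:
    """Toy stand-in for a learned tactile forward model (hypothetical).

    Maps a tactile shear reading and a candidate speed to a slip
    probability in [0, 1]. A real system would learn this from data.
    """
    # Logistic curve: predicted risk grows with shear load and with speed.
    return 1.0 / (1.0 + math.exp(-(4.0 * shear_load + 3.0 * speed - 5.0)))


def choose_speed(shear_load: float, planned_speed: float,
                 max_risk: float = 0.2, steps: int = 10) -> float:
    """Return the fastest speed <= planned_speed with acceptable predicted risk."""
    # Try candidates from the planned speed down to zero.
    for i in range(steps, -1, -1):
        candidate = planned_speed * i / steps
        if predict_slip_probability(shear_load, candidate) <= max_risk:
            return candidate
    # Even standing still is predicted to be risky, so stop moving.
    return 0.0


if __name__ == "__main__":
    for load in (0.0, 0.2, 0.6):
        print(f"shear load {load:.1f} -> speed {choose_speed(load, 1.0):.1f}")
```

In this toy model, a higher shear reading leads the controller to choose a slower motion rather than a tighter grip, which is the behavior the article describes.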

Why Grip Force Alone Isn’t Enough

Until now, most robots have been programmed to respond to slippage by squeezing harder. But this method has critical limitations. Some objects, such as delicate glassware, can’t withstand added pressure. Others—like odd-shaped packages or human hands—are too unpredictable for a fixed grip strength to work every time.

Esfahani explains the shortcoming with an everyday analogy: “If you imagine carrying a plate that starts to slip, most people don’t simply squeeze harder; they instinctively adjust their motion to stop it from falling. Robots have never been good at that kind of nuance.”

The new approach borrows from nature. It’s bio-inspired, echoing how humans and animals handle objects without conscious thought. Instead of rigid instructions, the robot uses real-time sensory feedback to make subtle changes, mimicking the complex coordination between muscles and nerves that we rely on every day.
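A textbook friction model shows why changing the motion can stand in for squeezing harder. This is a simplified illustration, not a formula from the study. It assumes a two-finger pinch grasp, Coulomb friction with coefficient $\mu$, a normal force $N$ at each finger, an object of mass $m$, and an upward acceleration $a$. All the numbers are made up for the example.

```latex
% No-slip condition: the load along the contact surfaces must not exceed
% the friction available at the two contacts.
m\,(g + a) \;\le\; 2\,\mu\,N

% Two ways to satisfy it:
N \;\ge\; \frac{m\,(g + a)}{2\,\mu}   % grip harder
\qquad\text{or}\qquad
a \;\le\; \frac{2\,\mu\,N}{m} - g     % move more gently

% Illustrative numbers: m = 0.5 kg, \mu = 0.4, N = 10 N, g = 9.81 m/s^2
a \;\le\; \frac{2 \times 0.4 \times 10}{0.5} - 9.81 \;=\; 6.19\ \text{m/s}^2
```

Under these assumptions, a gripper that cannot safely raise $N$ can still avoid a slip by keeping its acceleration below the limit on the right.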

A Leap in Robotic Dexterity

The study didn’t just simulate these ideas—it tested them.

In collaboration with teams from the University of Lincoln, Arizona State University, KAIST (Korea Advanced Institute of Science and Technology), and Toshiba Europe’s Cambridge Research Laboratory, the researchers evaluated the approach in experiments involving both robots and human participants. The results were striking.

Not only could the robot successfully prevent slips during normal operation, but it could also handle objects and movement patterns it had never seen before. This generalization is a major milestone—one that suggests the system could adapt to real-world variability, a key requirement for robots working outside controlled environments.

“This is the first time trajectory modulation for slip prevention has been demonstrated and quantified in both robots and humans,” Esfahani notes. “And the fact that it works even in new scenarios shows real promise for flexible, adaptive automation.”

Changing How Robots Help Us

The implications of this technology extend far beyond the lab.

In manufacturing, where robots already assemble cars and electronics, improved grip control could enable more precise work with smaller, more delicate components. In health care, surgical robots could manipulate tools and tissues with more finesse and less risk of damage. In logistics, warehouse robots could handle soft packages, slippery bottles, or awkward boxes without dropping or crushing them.

And in home care, where robots might one day assist the elderly or people with disabilities, this human-like touch could make robotic help not just functional, but safe and reassuring.

“We believe our approach has significant potential in a variety of robotic applications,” says Esfahani. “By making robots smarter and more sensitive in how they handle objects, we can open up new opportunities for how they’re used in daily life.”

A New Chapter in Human-Robot Collaboration

What makes this research particularly compelling is not just the technical achievement, but the philosophical shift behind it. It moves away from the idea of robots as rigid tools and toward a vision of them as responsive partners—capable of adaptation, anticipation, and even something close to grace.

This is more than just robotics. It’s about engineering intuition.

By teaching machines to adjust like humans—using prediction, sensitivity, and motion, not just power—the University of Surrey and its collaborators are helping write a new chapter in the evolution of automation.

In that chapter, the future doesn’t grip harder.

It holds smarter.

Reference: Kiyanoush Nazari et al., Bioinspired trajectory modulation for effective slip control in robot manipulation, Nature Machine Intelligence (2025). DOI: 10.1038/s42256-025-01062-2