Imagine snapping a photo of your dog and, within moments, seeing a lifelike 3D version wagging its tail, running joyfully, or curling up for a nap—right in front of your eyes in a virtual world. For millions of pet owners, this once-fantastical idea is moving closer to reality.

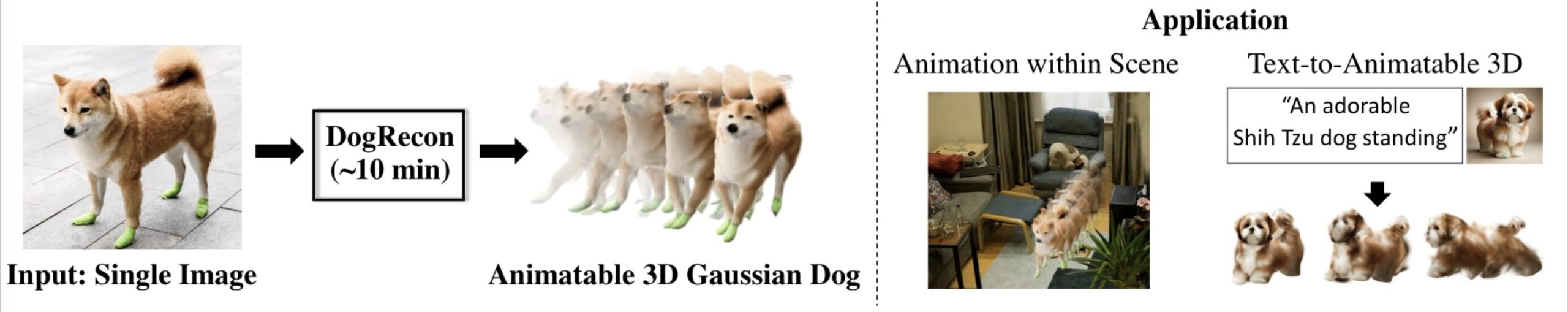

Researchers at the Ulsan National Institute of Science and Technology (UNIST) in South Korea have developed DogRecon, a pioneering artificial intelligence technology that can reconstruct highly detailed, animatable 3D models of dogs from just a single photograph. The achievement was recently published in the International Journal of Computer Vision and represents a major leap in digital modeling of animals for use in virtual reality (VR), augmented reality (AR), and metaverse platforms.

Why Dogs Are So Difficult to Recreate in 3D

Building realistic 3D models of humans has become increasingly sophisticated with modern AI and computer vision. But dogs present a unique set of challenges. Unlike the relatively consistent proportions of the human body, dogs vary dramatically across breeds—from the compact, round French Bulldog to the slender, elegant Greyhound. Their fur textures, floppy ears, tails, and flexible postures add another layer of complexity.

Moreover, a dog's four-legged stance often hides joints and body parts from a single viewpoint, making reconstruction from just one photo extremely difficult. Traditional methods often produce distorted results: unnaturally stretched limbs, clumped fur, or oddly bent postures that fail to capture the natural grace of a dog in motion.

The Innovation Behind DogRecon

DogRecon tackles these hurdles with an innovative mix of statistical modeling, generative AI, and Gaussian Splatting techniques.

The system first uses breed-specific statistical models to account for the wide variation in canine body shapes, which keeps the reconstructed avatar within realistic proportions for each breed. Next, it employs generative AI to synthesize additional viewpoints of the dog, filling in details that are hidden in the original image. For instance, if a photo shows only the left side of a dog, DogRecon can infer a plausible right side rather than leaving it blank or distorted.
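To make the idea of a statistical body model concrete, here is a minimal sketch of a linear shape model, in which a body is a mean shape plus a weighted sum of learned shape directions. It illustrates the general technique only and is not DogRecon's actual model; the function `blend_shape`, the toy vertices, and the "longer body" and "taller legs" directions are all hypothetical.

```python
from typing import List, Sequence

Vertex = List[float]


def blend_shape(
    mean_shape: Sequence[Sequence[float]],
    shape_basis: Sequence[Sequence[Sequence[float]]],
    coeffs: Sequence[float],
) -> List[Vertex]:
    """Return mean_shape + sum_k coeffs[k] * shape_basis[k], vertex by vertex."""
    if len(shape_basis) != len(coeffs):
        raise ValueError("need exactly one coefficient per basis shape")
    result = [list(v) for v in mean_shape]  # copy so the mean is not modified
    for weight, basis in zip(coeffs, shape_basis):
        for i, offset in enumerate(basis):
            for axis in range(3):
                result[i][axis] += weight * offset[axis]
    return result


# Toy data (hypothetical): a 3-vertex "body" with two shape directions.
MEAN_SHAPE = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 0.0]]
SHAPE_BASIS = [
    [[0.0, 0.0, 0.0], [0.5, 0.0, 0.0], [0.5, 0.0, 0.0]],  # "longer body"
    [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.5, 0.0]],  # "taller legs"
]

# A long, low body: stretch along the first direction only.
long_body = blend_shape(MEAN_SHAPE, SHAPE_BASIS, [1.0, 0.0])
```

In a real model the mean and the directions would be learned from many scanned or fitted animals, and a handful of coefficients would be enough to move between, say, a stocky and a slender build.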

One of the key ingredients is Gaussian Splatting, a rendering technique that represents a surface as a large collection of small, semi-transparent 3D Gaussian blobs and blends them into an image. It excels at capturing fine textures and curvilinear surfaces, which allows DogRecon to recreate not only the structure of the dog but also the subtle contours of fur, the roundness of muscles, and even the gentle slope of ears and tails.
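For readers curious how Gaussian Splatting turns blobs into pixels, the sketch below shows the core blending step for a single pixel: the splats are sorted from near to far, and each contributes its color weighted by its own opacity and by how much light the splats in front of it let through. This is a simplified illustration, not DogRecon's renderer. The `Splat` class and `render_pixel` function are hypothetical names, and each splat is assumed to be already projected to 2D with a circular footprint, whereas real systems use elongated, rotated Gaussians.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Splat:
    center: Tuple[float, float]        # projected 2D position, in pixels
    sigma: float                       # circular footprint size (simplification)
    opacity: float                     # peak opacity in [0, 1]
    color: Tuple[float, float, float]  # RGB, each in [0, 1]
    depth: float                       # distance from the camera


def render_pixel(splats: List[Splat], px: float, py: float) -> Tuple[float, float, float]:
    """Blend splats front to back at pixel (px, py)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for s in sorted(splats, key=lambda item: item.depth):
        dx, dy = px - s.center[0], py - s.center[1]
        # Gaussian falloff: full strength at the center, fading with distance.
        falloff = math.exp(-0.5 * (dx * dx + dy * dy) / (s.sigma * s.sigma))
        alpha = s.opacity * falloff
        for c in range(3):
            color[c] += transmittance * alpha * s.color[c]
        transmittance *= 1.0 - alpha
    return (color[0], color[1], color[2])


# A half-transparent red splat in front of an opaque blue one.
scene = [
    Splat(center=(5.0, 5.0), sigma=2.0, opacity=1.0, color=(0.0, 0.0, 1.0), depth=2.0),
    Splat(center=(5.0, 5.0), sigma=2.0, opacity=0.5, color=(1.0, 0.0, 0.0), depth=1.0),
]
center_color = render_pixel(scene, 5.0, 5.0)
```

At the shared center, the red splat contributes half of its color and lets the other half of the light through to the blue splat behind it, so the pixel comes out as an even mix of red and blue. Soft, overlapping blobs of this kind are what make the technique well suited to fuzzy surfaces such as fur.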

The result? A 3D avatar that looks and moves naturally, closer than ever to the living animal itself.

Performance Beyond Expectations

To test its effectiveness, the UNIST team compared DogRecon with existing reconstruction methods. The evaluations showed that DogRecon produced avatars comparable in quality to those built from video data, while needing only a single photo. Where earlier systems often struggled with natural poses, such as crouching or lying down, DogRecon delivered models with realistic joint positions and fluid body lines.

This means that whether a dog is leaping, playing, or simply resting, the digital version remains believable and faithful to life.

A Future of Virtual Companionship

The implications of this technology stretch far beyond scientific novelty. With more than a quarter of households owning pets, the potential demand for digital representations of animals is large. DogRecon makes it possible for pet owners to create personalized digital avatars of their beloved companions, avatars that can be animated, interacted with, and even preserved as lasting memories.

Such technology could soon enable people to bring their dogs into VR and AR games, create immersive videos featuring their pets, or even interact with them in metaverse environments. For many, this could mean reliving joyful moments with a pet, or sharing those experiences in ways never possible before.

Voices Behind the Discovery

The project was led by Professor Kyungdon Joo of the UNIST Artificial Intelligence Graduate School. Gyeongsu Cho is the first author, with co-authors Changwoo Kang (UNIST) and Donghyeon Soon (DGIST).

Cho explained the motivation: “With over a quarter of households owning pets, expanding 3D reconstruction technology—traditionally focused on humans—to include companion animals has been a goal. DogRecon offers a tool that enables anyone to create and animate a digital version of their companion animals.”

Professor Joo added, “This study represents a meaningful step forward by integrating generative AI with 3D reconstruction techniques to produce realistic models of companion animals. We look forward to expanding this approach to include other animals and personalized avatars in the future.”

Beyond Dogs: A Broader Horizon

Although this research focuses on dogs, the same principles could eventually apply to other animals—and even to customized avatars for humans. Imagine being able to bring not just your pet, but also wildlife, extinct species, or stylized fantasy creatures into virtual environments with the same realism.

For now, DogRecon highlights a touching intersection of technology and emotion: using cutting-edge AI to deepen the bond between humans and their pets, not by replacing real companionship, but by creating new ways to preserve and share it.

A Glimpse into Tomorrow

Albert Einstein once remarked that imagination embraces the entire world. DogRecon feels like a step toward that vision—where imagination, powered by AI, turns a single photo into a living digital presence. It’s not just about pixels or algorithms; it’s about giving form to memory, companionship, and love.

From the playful bark of a puppy to the quiet presence of an old friend, our dogs leave pawprints on our hearts. Now, with DogRecon, they may also leave pawprints in the digital worlds we are building for the future.

More information: Gyeongsu Cho et al., DogRecon: Canine Prior-Guided Animatable 3D Gaussian Dog Reconstruction From A Single Image, International Journal of Computer Vision (2025). DOI: 10.1007/s11263-025-02485-5