In a remarkable fusion of physics and artificial intelligence, researchers from the University of Tokyo have uncovered a long-missing link between a branch of physics known as nonequilibrium thermodynamics and the mechanics behind some of today’s most powerful image-generating AI models. This interdisciplinary breakthrough doesn’t just offer a theoretical explanation for what makes these generative models work so well—it opens a new avenue for designing smarter, faster, and more reliable AI systems.

Published in Physical Review X, one of the most prestigious journals in physics, the study was led by physicist Sosuke Ito and features contributions from undergraduate students, demonstrating not only scientific rigor but also the immense potential of young minds driving innovation.

The Science Behind Stunning AI Imagery

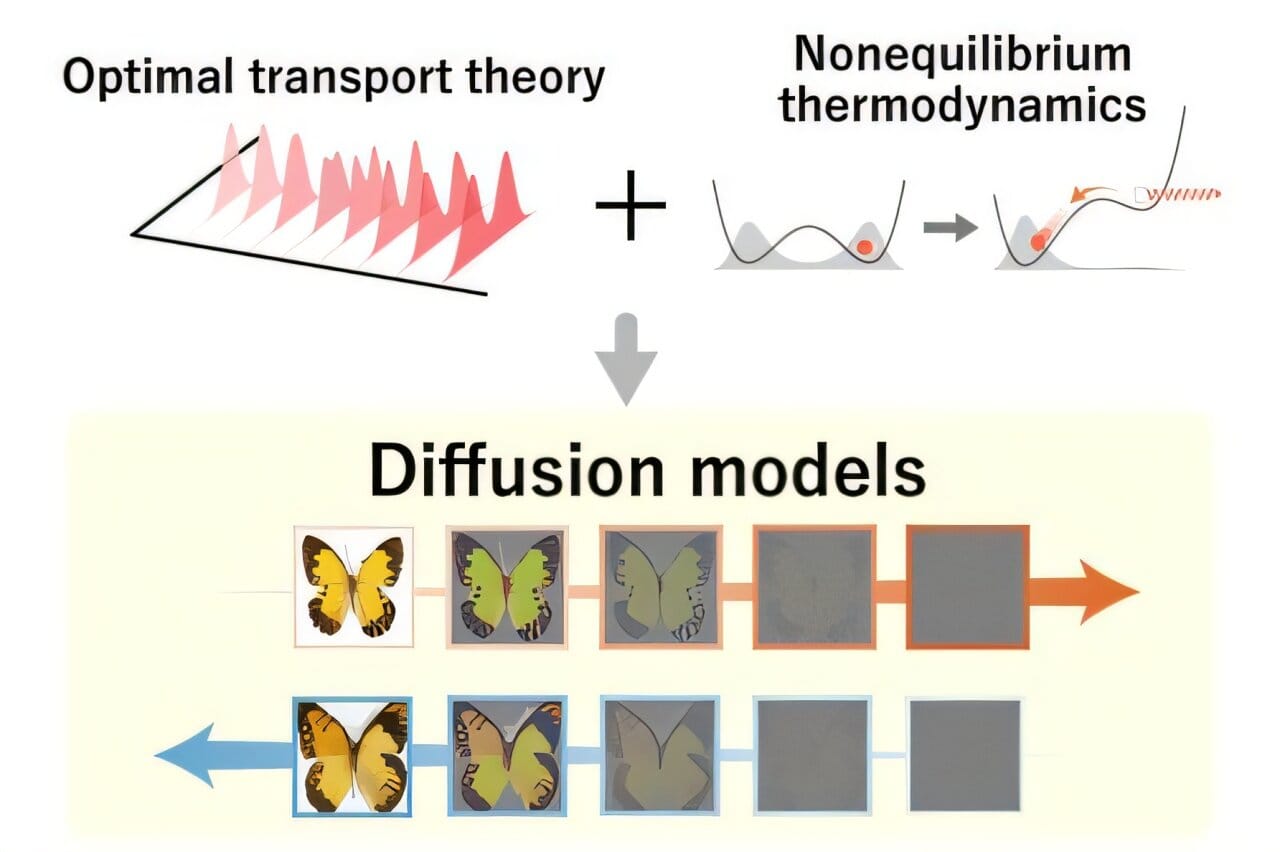

Over the past few years, AI has revolutionized how we generate digital images, with models producing photorealistic portraits, landscapes, and even imaginative art that rivals human creativity. Behind this transformation lies a class of algorithms called diffusion models. These models start with pure noise—imagine a television screen filled with static—and gradually refine it into a coherent image. The process works by learning to reverse a noisy transformation applied during training.

It might sound like magic, but it’s rooted in the laws of physics. During training, diffusion models intentionally corrupt image data with randomness—a process inspired by diffusion in physical systems, where particles spread out over time. When generating new images, the model essentially plays the process in reverse, carefully removing noise step-by-step until a new image appears.
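To make the corrupt-then-reverse idea concrete, here is a minimal sketch in plain Python. It assumes the common "variance-preserving" mixing rule, in which a signal level `alpha_bar` between 0 and 1 sets how much of the original survives. The function names and the 16-pixel toy "image" are illustrative assumptions and do not come from the study.

```python
import math
import random

def forward_noise(x0, alpha_bar, rng):
    """Mix clean values x0 with Gaussian noise at signal level alpha_bar in (0, 1]."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    x_t = [a * x + b * e for x, e in zip(x0, eps)]
    return x_t, eps

def remove_noise(x_t, eps_hat, alpha_bar):
    """Undo the mix, given an estimate eps_hat of the noise that was added."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - b * e) / a for x, e in zip(x_t, eps_hat)]

rng = random.Random(0)
x0 = [rng.uniform(-1.0, 1.0) for _ in range(16)]  # toy "image" of 16 pixels
x_t, eps = forward_noise(x0, alpha_bar=0.3, rng=rng)

# A trained model would have to predict eps from x_t alone. Here the true
# noise is passed in, only to show the corruption is invertible once known.
x0_rec = remove_noise(x_t, eps, alpha_bar=0.3)
```

In a real diffusion model the hard part is the step this sketch skips: learning to estimate the noise from the corrupted data, and doing so over many small steps rather than one.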

But there’s a puzzle: how should the noise be added and removed for the model to perform optimally? This design choice is called the noise schedule, or diffusion dynamics, and it can make or break the performance of an image-generating AI. Until now, best practices were based on intuition and empirical results. A clear theoretical framework had been missing until this study connected the dots.
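As a rough illustration of what choosing a schedule means, the sketch below compares two hypothetical schedules for the fraction of signal remaining at time `t`, where `t` runs from 0 (clean image) to 1 (pure noise). Both functions are simplified stand-ins chosen for this example. They are not the schedules analyzed in the study.

```python
import math

def linear_schedule(t):
    """Signal level falls at a constant rate from 1 (clean) to 0 (pure noise)."""
    return 1.0 - t

def cosine_schedule(t):
    """Signal level falls slowly near both ends and fastest in the middle."""
    return math.cos(0.5 * math.pi * t) ** 2

for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"t={t:.2f}  linear={linear_schedule(t):.3f}  cosine={cosine_schedule(t):.3f}")
```

Both schedules start and end at the same points. They differ only in how quickly the signal is destroyed along the way, which is exactly the design freedom the article describes.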

The Hidden Physics Guiding AI Learning

The breakthrough came when Ito and his team turned to nonequilibrium thermodynamics, a field of physics that deals with systems constantly changing in time—unlike the calm, unchanging equilibrium of a cooled cup of tea. Diffusion models are, in essence, nonequilibrium systems: they don’t stay still, and their state evolves as the AI introduces and removes noise to and from data.

In particular, the researchers focused on thermodynamic trade-off relations. These mathematical relations describe how the energy dissipated in a system is tied to how fast the system changes. Think of it like revving up a car: speeding up the process demands more fuel (or more dissipation), while taking things slowly uses less. The same holds true for AI models transforming noisy data into clear images.
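The speed-versus-dissipation trade-off can be seen in a textbook toy case: dragging a particle a fixed distance through a viscous fluid at constant speed. The sketch below is only that toy case, with a hypothetical `friction` coefficient. It is not the inequality derived in the paper.

```python
def dissipated_work(distance, duration, friction=1.0):
    """Work lost to viscous drag when moving at constant speed.

    Drag force is friction * speed, so power is friction * speed**2 and the
    total over the trip is friction * distance**2 / duration.
    """
    speed = distance / duration
    return friction * speed ** 2 * duration

slow = dissipated_work(distance=1.0, duration=2.0)
fast = dissipated_work(distance=1.0, duration=0.5)
print(f"slow trip: {slow}, fast trip: {fast}")
```

Covering the same distance four times faster costs four times the dissipation in this toy model. The trade-off relations in the article express the same qualitative idea for evolving probability distributions.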

Ito’s team used this framework to establish a set of inequalities—mathematical bounds—that connect thermodynamic dissipation to how robustly the AI model generates images. They discovered that a specific mathematical strategy known as optimal transport dynamics—which guides the AI in choosing the most efficient path from noise to image—actually minimizes thermodynamic dissipation and maximizes robustness in data generation.
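A one-particle stand-in shows why the "most efficient path" matters. If dissipation is taken to grow with the square of the speed (an assumption made for this sketch), then among all ways of covering the same distance in the same time, moving at a constant pace costs the least. The `path_cost` helper and the two schedules below are hypothetical, and real diffusion models move whole probability distributions rather than a single point.

```python
def path_cost(schedule, distance=1.0, steps=1000):
    """Approximate the integral of speed**2 over unit time
    for the path x(t) = distance * schedule(t), with t from 0 to 1."""
    dt = 1.0 / steps
    total = 0.0
    for i in range(steps):
        dx = distance * (schedule((i + 1) * dt) - schedule(i * dt))
        total += dx * dx / dt
    return total

constant_speed = path_cost(lambda t: t)       # uniform pace from start to end
slow_then_fast = path_cost(lambda t: t ** 2)  # same endpoints, uneven pace

print(f"constant speed: {constant_speed:.4f}")
print(f"slow then fast: {slow_then_fast:.4f}")
```

The uneven path reaches the same destination in the same time but pays about a third more, because its fast stretch is penalized quadratically. Optimal transport generalizes this constant-pace, straight-line idea from one particle to an entire distribution of data.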

In simpler terms, they found the physical reason why some of the best AI image generation methods work so well: they naturally align with the most energy-efficient, stable way to transform information, as dictated by the laws of physics.

A New Era for Machine Learning Design

While optimal transport has long been known to be useful in diffusion models, this is the first time optimal transport dynamics has been shown to be optimal from a thermodynamic point of view. That’s a profound leap, from practical tool to physical principle.

“The selection of diffusion dynamics, also known as a noise schedule, has been controversial in diffusion models since their inception,” says Professor Ito. “Optimal transport dynamics has been empirically shown to be useful in diffusion models, but it has not been theoretically demonstrated why it would be so—until now.”

This revelation is more than academic. It offers a powerful new design principle for machine learning engineers. By embracing the insights of nonequilibrium thermodynamics, future models can be crafted to generate data more robustly, with fewer errors, and potentially with less computational cost.

Young Minds Leading the Way

Beyond the physics and the algorithms lies another compelling aspect of this discovery: much of the work was conducted by undergraduate students. The first and second authors, Kotaro Ikeda and another student, were enrolled in a university class when they contributed to the project. Ikeda, in particular, played a crucial role in both the theoretical and numerical aspects of the study.

“The first and second authors of the paper are undergraduate students,” says Ito, clearly proud. “This research was partially conducted as part of a class. In particular, the first author contributed greatly to this study, from numerical calculations to theoretical analysis.”

The success of these young researchers underscores the value of interdisciplinary learning and mentorship. It’s a reminder that scientific breakthroughs aren’t reserved for seasoned professionals in ivory towers—they can come from classrooms filled with curious minds and supportive teachers.

Why This Discovery Matters for Everyone

This research might seem esoteric at first glance—a complex interplay between physics and algorithms. But its impact ripples far beyond academic journals. As AI becomes more integrated into our daily lives—from creative tools and medical imaging to climate modeling and autonomous vehicles—the need for reliable, interpretable, and energy-efficient models becomes ever more pressing.

By rooting machine learning in physical laws, we gain not only better models but a deeper understanding of how intelligent systems process information—whether artificial or biological. In fact, Ito hopes these findings will spark interest in using thermodynamics to understand not just AI, but also the information-processing systems found in nature.

“We hope our results raise awareness of the importance of nonequilibrium thermodynamics in the machine learning community,” he says. “And we, including the next generation, continue to explore its usefulness in understanding biological and artificial information processing.”

The Future of AI Lies in the Laws of Nature

In a world where AI technology often moves faster than we can understand it, this study offers a breath of clarity. It reminds us that even the most advanced algorithms are not beyond comprehension—they are grounded in the same universal principles that govern falling apples, spinning planets, and boiling water.

By connecting thermodynamics and machine learning, Sosuke Ito and his students have not only explained a longstanding puzzle but also built a bridge between two fields. In doing so, they’ve shown that the future of AI may lie less in ever-more complex code than in our ability to see the elegant physics already guiding it.

A remark widely attributed to Einstein runs, “Look deep into nature, and then you will understand everything better.” This discovery suggests that the advice still holds, even in the age of artificial intelligence.

More information: Kotaro Ikeda et al, Speed-Accuracy Relations for Diffusion Models: Wisdom from Nonequilibrium Thermodynamics and Optimal Transport, Physical Review X (2025). DOI: 10.1103/x5vj-8jq9