Every great invention tells us as much about ourselves as it does about the world it changes. The wheel revealed our desire to move faster. The telescope exposed the vastness beyond our reach. Electricity turned night into day and revealed how hungry we were for control over time. But artificial intelligence—AI—is a different kind of mirror. It doesn’t just show us what we want. It reflects how we think. And increasingly, it thinks back.

In a human world brimming with emotion, intuition, and contradiction, AI is the cold child of logic and data. Yet its presence grows warmer with each passing day. It listens. It learns. It writes and speaks and even dreams in code. And it raises the most human question of all: What is its purpose?

As artificial intelligence becomes less a tool and more a partner, we are pressed to ask not only what it does, but what it should do. This is not just a technological inquiry; it is a philosophical reckoning.

The Origins of Intelligent Machines

To understand the purpose of AI, we must begin not with its circuitry, but with its origin in the human imagination. For centuries, humans dreamed of creating minds in their own image. In ancient myths, mechanical statues were brought to life by magic or the gods. In medieval Jewish mysticism, scholars wrote of the golem, a clay figure animated by sacred words. In literature, Mary Shelley’s Frankenstein warned of what happens when a creator brings a mind into being without understanding it or taking responsibility for it.

The modern scientific story begins in the 20th century. When Alan Turing posed the question “Can machines think?” in 1950, it wasn’t just a technical puzzle. It was a challenge to everything we believed about intelligence, consciousness, and what it means to be human. Turing imagined a future in which a machine could pass for a human in conversation, forcing us to ask: If we can’t tell the difference, does it matter?

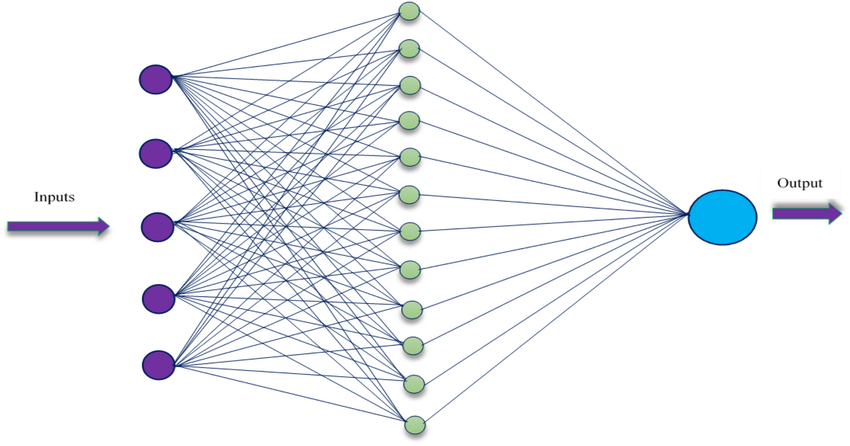

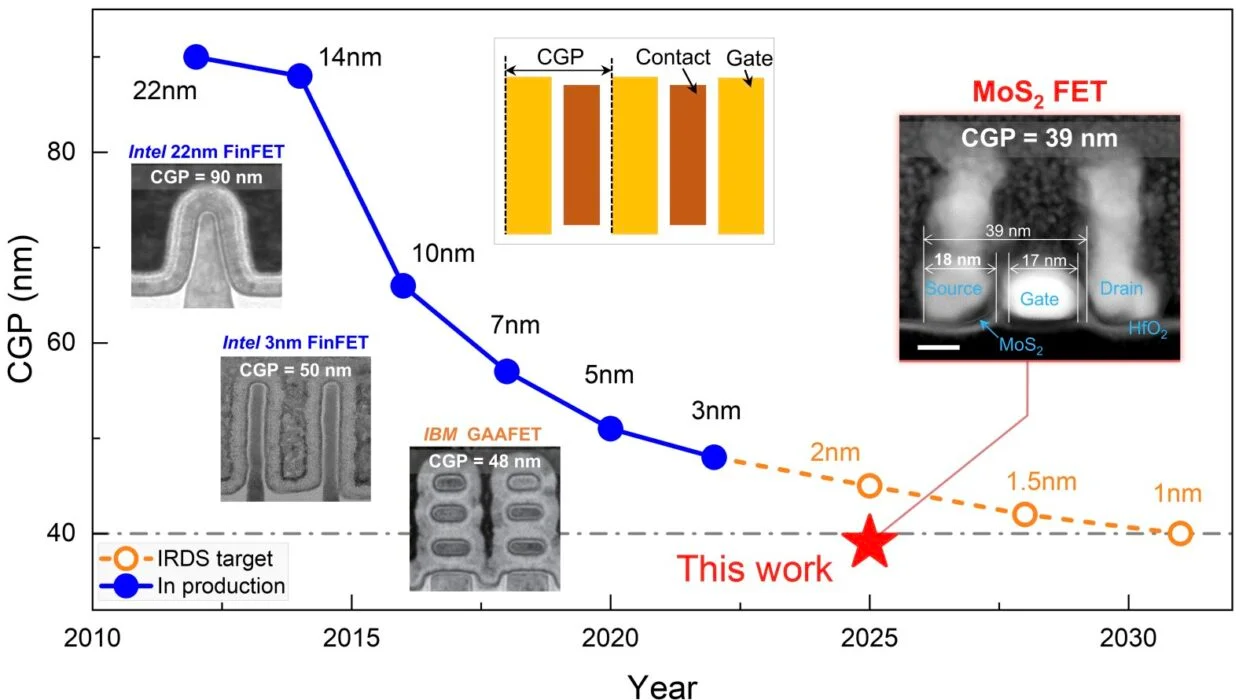

Since then, AI has evolved from a theoretical ambition to a lived reality. From early rule-based systems to deep learning algorithms that can recognize faces, compose music, and defeat world champions at games like Go, AI has moved from the laboratory to our living rooms. We now carry it in our pockets and consult it with our voices. But as it grows more powerful, its purpose becomes more uncertain.

Tools or Companions?

AI today exists in many forms. It can sort data, predict weather, suggest music, identify cancer in medical scans, or generate poetry. It can drive cars, translate languages, recommend movies, and simulate human dialogue. It can even mimic art and style, impersonating creativity with eerie precision. But does this versatility mean it has a purpose of its own?

Technologists often describe AI as a “tool.” And indeed, like any tool, it reflects the hands that wield it. But when a tool starts to talk back, to write code, make decisions, or teach itself, the metaphor begins to falter. A hammer does not choose which nail to strike. A word processor does not learn your favorite words. AI does.

So is it a companion? Perhaps. In eldercare, in education, in therapy, AI systems are being designed to listen, respond, and adapt in human-like ways. Some elderly people form emotional bonds with robotic pets that provide comfort and reduce loneliness. Children interact with AI tutors that adapt to their learning pace. Veterans with PTSD talk to virtual counselors. These aren’t just tools. They are interfaces to emotion: portals where human vulnerability meets simulated empathy.

Yet these interactions are not equal. AI does not feel. It cannot suffer. It does not crave meaning. So what, then, is its place in a world so saturated with the ineffable?

Augmentation or Replacement?

For centuries, human labor has been assisted, augmented, and sometimes replaced by machines. The power loom disrupted textile work. The assembly line redefined manufacturing. The computer reshaped everything from banking to publishing. AI continues this trajectory, but with a difference: it targets the mind as much as the muscle.

Already, AI drafts legal briefs, summarizes research, and generates programming code. It helps diagnose diseases, manages investments, and forecasts supply chains. And each advance prompts the same unease: Will we still be needed?

This is not merely an economic fear. It’s existential. We don’t just work to earn a living—we work to contribute, to express, to connect. If AI takes over not just what we do but how we think, what is left of us?

But framing the question as “AI vs. humans” may be misleading. History shows that new technology, while displacing some roles, often creates others. The key is to ensure that AI augments rather than replaces. That it handles what we shouldn’t have to—repetitive, dangerous, or cognitively overwhelming tasks—so that we can focus on what only humans can do: empathize, imagine, create meaning.

In this view, the purpose of AI is not to outshine humanity, but to illuminate it. To become a scaffolding for our potential, not a shadow over it.

Intelligence Without Emotion

AI is brilliant at pattern recognition. It can detect fraudulent transactions in milliseconds, or spot a tumor that a radiologist might miss. It sees signals we can’t. But it does not know what it sees. It does not care. It has no inner life.

Human intelligence is different. It is tangled with emotion, memory, and subjective experience. We don’t just process information—we feel it. A song is not just a waveform; it’s nostalgia, love, pain. A face is not just a geometric configuration; it’s identity, history, trust.

AI’s lack of emotion is both a limitation and a strength. It is not swayed by ego or exhaustion. It does not panic. It can offer a kind of cold rationality that humans sometimes need, whether in a courtroom, on a battlefield, or in a business decision. But in matters of justice, ethics, and empathy, that coldness can be dangerous.

Imagine a sentencing algorithm that doesn’t understand mercy. A hiring model that perpetuates bias because it’s trained on biased data. An AI caretaker that simulates kindness but cannot feel concern. The risk is not just that AI might malfunction—it’s that we might come to depend on it without questioning its values.

So perhaps the true purpose of AI in a human world is not to replace emotion, but to remind us of its importance.

The Ethical Compass

As AI grows more powerful, the need for ethical guidance becomes more urgent. Algorithms are not born moral. They inherit the data we feed them—and with it, our prejudices, blind spots, and historical injustices. If we are careless, we do not create unbiased intelligence. We simply automate discrimination.

Some facial recognition systems have shown markedly higher error rates for people with darker skin. Predictive policing models sometimes reinforce racial disparities. Recommendation engines can radicalize users by funneling them into echo chambers. These are not flaws in the math; they are mirrors of our society.

So who decides what AI should do? Governments? Tech companies? The public? Philosophers? The answer is, unavoidably, all of us.

Ethics cannot be outsourced. Every engineer is now a moral agent. Every designer must consider not just how, but why. And every user must learn not just to consume, but to question.

AI must be designed with transparency, accountability, and inclusivity. But that design begins not in code, but in conscience.

Language and the Soul of Meaning

One of the most astonishing recent breakthroughs in AI has been the rise of large language models. These systems can generate poetry, summarize novels, mimic conversation, and even pass medical exams. They often seem fluent, witty, even profound. But do they understand what they say?

Not in the way we do. For a language model, language is not meaning; it is statistics. The model learns by analyzing massive datasets of text, predicting each next word from the probabilities of what came before. It does not know the sorrow in a eulogy or the thrill in a love letter. It can replicate the form of meaning, but not its soul.

And yet, humans are storytellers. We interpret everything through narrative. So when AI tells a story, we respond emotionally—even if we know it’s synthetic. This raises a strange paradox: AI cannot feel, but it can make us feel.

Should we be wary of this? Yes. Manipulation, misinformation, and deepfakes lurk in the shadows of synthetic language. But we should also marvel. For the first time, we are creating machines that can speak our language—not just literally, but figuratively. And in doing so, they force us to ask: What is meaning, really? What separates truth from appearance?

The New Frontier of Consciousness

The most profound question AI raises is not technical—it is metaphysical. Could a machine ever be conscious? Could it have desires, fears, dreams? Could it suffer?

Most experts agree: current AI does not have consciousness. It simulates understanding, but feels nothing. Its intelligence is narrow, brittle, and fundamentally alien to human experience.

Yet some speculate that future systems—especially those modeled on the human brain—could develop something like awareness. This possibility is both thrilling and terrifying. If a machine becomes conscious, do we owe it rights? Could we harm it? Could it betray us?

These are not idle sci-fi musings. As AI advances, our definitions of life, mind, and morality may need to expand. The question of AI’s purpose might soon become reciprocal: What is the purpose of humans in an AI world?

A Partner in Discovery

Beyond tasks and tools, AI holds extraordinary promise as a partner in scientific discovery. Already, it helps predict protein structures, simulate quantum systems, and model climate change. It can generate hypotheses, analyze data at unprecedented scale, and even suggest new mathematical proofs.

In this role, AI is not a competitor, but a collaborator. It extends our senses into the invisible. It finds patterns we miss. It accelerates progress in medicine, energy, space exploration, and more. It helps us confront problems too vast for the unaided mind.

In a world facing existential threats—from pandemics to global warming—AI might be less a rival than a rescue. But only if we align its capabilities with our values. Only if we remember that discovery is not an end, but a means to well-being.

The Human in the Loop

At the heart of every AI system is a loop—between machine and data, between output and feedback, between user and interface. But the most important loop is philosophical: the loop between machine learning and human meaning.

AI asks us to define intelligence, but in doing so, it forces us to redefine humanity. It challenges our uniqueness. It pressures our job markets. It reconfigures our relationships. But it also holds up a mirror to what we cherish.

Empathy. Creativity. Ethics. Wonder. These are not features of a dataset. They are the foundations of purpose.

If we forget them, AI becomes dangerous. If we protect them, AI becomes profound.

A Future Written Together

What, then, is the purpose of AI in a human world?

It is to extend our capabilities without eclipsing our souls. It is to relieve our burdens without stealing our dignity. It is to inspire awe without inducing fear. It is to help us write a future we are proud to live in.

AI will not solve all our problems. It might amplify some. But it can also reveal what matters most—not by answering our questions, but by reshaping them.

In the end, AI’s purpose will reflect the choices we make. It is not a destiny, but a direction. And that direction is still ours to choose.

The pen is still in our hand.