In the dimly lit laboratory of a robotics research center, a humanoid machine sits perfectly still, blinking artificial eyes at the researcher standing before it. The woman speaks. Her voice trembles, not with fear but with grief. “I miss him,” she says softly. The machine tilts its head and replies, “I’m sorry. Would you like to talk about it?”

The moment is eerie, yes. But it’s also something else: tender, almost human. For centuries, we’ve imagined machines as logical, tireless, and above all, emotionless. But now, something is shifting. The lines between cold code and human warmth are beginning to blur.

Artificial intelligence is learning to understand what makes us cry, laugh, or love. It is beginning to detect the tiny quiver in a voice, the sadness behind a smile, the subtle fear laced through a choice of words. It is learning not just to recognize emotions, but to respond with care.

And the implications are vast—not only for technology, but for what it means to be human in a world where even machines can seem to feel.

The Long Dream of Emotional Machines

Humanity has always dreamed of companions made not of flesh and blood, but of circuits and steel. In myths, stories, and cinema, we’ve populated our future with sentient machines—some helpful, others monstrous, all fascinating. From the tender android of A.I. Artificial Intelligence to the charming but glitchy Baymax in Big Hero 6, we’ve long asked: could a machine one day understand us—truly understand us?

Until recently, the answer was a flat no. Computers could calculate, organize, predict. But they could not empathize. Emotion, after all, was the realm of the heart, the soul. Not the algorithm.

But the more we’ve learned about the human mind, the less distinct that boundary becomes. Emotions, it turns out, are not just feelings—they’re data. Patterns. Chemical and electrical signals sent throughout the body. Just as language, vision, and motion can be mapped and modeled, so too can joy, anger, fear, and sorrow.

This realization has set off a revolution in the world of artificial intelligence.

From Words to Feelings: The Language of Emotion

It started with language. For decades, AI struggled to make sense of human speech. Words are slippery. Meanings shift with tone, context, and culture. But slowly, machines began to learn.

With the rise of natural language processing, AI started parsing not just what we say, but how we say it. Taken literally, a sentence like “I’m fine” is neutral, and a reader who misses the sarcasm will take it that way. But AI systems trained on millions of conversations and speech patterns are beginning to detect the undercurrents: depending on the tone, punctuation, or surrounding phrases, the same sentence might be flagged as emotionally ambiguous or even negative.

Then came sentiment analysis, where algorithms began classifying text into emotional categories—positive, negative, neutral. While crude at first, the systems improved rapidly. AI learned to read tweets, emails, even entire books, and identify emotional cues. It could spot despair in a customer complaint, enthusiasm in a product review, or affection in a message between friends.
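Early sentiment tools often worked from little more than word lists. A minimal sketch of that lexicon-based approach follows, assuming a tiny hand-picked vocabulary and a single negation rule. The word lists and the `score` and `classify` functions are hypothetical illustrations, not any particular product’s method; modern systems learn such cues from data rather than from hand-written lists.

```python
import re

# Hypothetical, deliberately tiny lexicons; real lexicons hold thousands of entries.
POSITIVE = {"love", "great", "happy", "thanks", "wonderful", "enjoyed"}
NEGATIVE = {"hate", "terrible", "sad", "angry", "broken", "awful", "disappointed"}
NEGATORS = {"not", "never", "no"}


def score(text: str) -> int:
    """Add +1 for each positive word and -1 for each negative word.

    A word directly preceded by a negator ("not happy") has its polarity flipped.
    """
    words = re.findall(r"[a-z']+", text.lower())
    total = 0
    for i, word in enumerate(words):
        # bool - bool yields an int: 1, -1, or 0
        polarity = (word in POSITIVE) - (word in NEGATIVE)
        if polarity and i > 0 and words[i - 1] in NEGATORS:
            polarity = -polarity
        total += polarity
    return total


def classify(text: str) -> str:
    """Map the raw score onto the three classic categories."""
    s = score(text)
    if s > 0:
        return "positive"
    if s < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for sample in [
        "I love this phone, the camera is wonderful",
        "The screen arrived broken and support was terrible",
        "I'm fine",
        "I am not happy with this",
    ]:
        print(f"{classify(sample):>8}  {sample}")
```

Note that “I’m fine” comes out neutral: a word list sees only the words, not the tone or context around them, which is exactly the gap that models trained on real conversations try to close.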

But language was only the beginning.

Teaching AI to Read the Face, Hear the Heart

Next came the face. Human beings are practiced readers of faces, sometimes even catching microexpressions: brief, involuntary expressions that flash across the face in a fraction of a second. We often do it without thinking. A flicker of doubt in someone’s eyes. A tightening around the mouth. These are signals that can convey far more than words.

Now, AI is learning to read those too.

Facial recognition software, once used primarily to identify people, has expanded into emotion recognition. Trained on thousands of images of smiles, frowns, and furrowed brows, AI models can now classify facial expressions with surprising accuracy, at least under controlled conditions. Some systems claim to recognize seven or more basic emotions, from happiness and surprise to disgust and contempt.

Meanwhile, voice analysis has become another powerful tool. The speed, pitch, volume, and rhythm of our voices shift depending on how we feel. AI systems trained on vocal datasets are beginning to interpret these patterns—detecting stress, excitement, boredom, or sadness, sometimes better than a human listener.
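The feature side of voice analysis can be sketched in a few lines. The example below, assuming a synthetic sine tone as a stand-in for a short frame of voiced speech, extracts two of the cues mentioned above: pitch, via a basic autocorrelation search, and volume, via root-mean-square (RMS) amplitude. The function names, the 75 to 400 Hz search range, and the labels “calm” and “agitated” are illustrative assumptions; a real system would compute many more features from recorded speech and feed them to a model trained on labeled examples.

```python
import math


def synth_tone(freq_hz, amplitude, sample_rate=8000, seconds=0.1):
    """Generate a pure sine tone: a hypothetical stand-in for a voiced speech frame."""
    n = int(sample_rate * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def rms(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch(samples, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency in Hz with a basic autocorrelation search.

    Only lags corresponding to fmin..fmax (a rough speaking range) are tried;
    the lag whose shifted copy best matches the original is taken as one period.
    """
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag


def voice_features(samples, sample_rate):
    """Return the two cues this sketch extracts: pitch (Hz) and loudness (RMS)."""
    return {"pitch_hz": estimate_pitch(samples, sample_rate), "rms": rms(samples)}


if __name__ == "__main__":
    sr = 8000
    calm = synth_tone(110, 0.3, sr)      # lower, quieter frame
    agitated = synth_tone(220, 0.8, sr)  # higher, louder frame
    print("calm:    ", voice_features(calm, sr))
    print("agitated:", voice_features(agitated, sr))
```

Treating higher pitch and greater loudness as signs of agitation is a simplification: the same shift can accompany excitement or joy, which is why such features serve as inputs to a trained model rather than as verdicts on their own.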

And then there’s physiological data. Wearable devices can monitor heart rate, skin temperature, pupil dilation, and even brain waves. When combined with AI, these data streams provide a real-time window into a person’s emotional state.

We are, in essence, building machines that can sense how we feel—even when we don’t say a word.

Empathy Engines and Emotional Machines

But recognition is only part of the story. What truly fascinates scientists—and terrifies ethicists—is the idea of machines not just understanding emotion, but responding in kind.

This is the territory of affective computing: building machines that can detect and appropriately respond to human emotions. In some cases, the response is simple, such as a smart assistant adjusting its tone when it senses frustration. In more advanced systems, the responses are layered, thoughtful, even comforting.
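A detect-then-respond loop of the simple kind described here might look like the sketch below. It assumes crude text cues (a hypothetical list of frustration words, exclamation marks, text written mostly in capitals, and whether the user is repeating a failed request), combines them with made-up weights into a score between 0 and 1, and picks a response style from that score. None of these cues, weights, or thresholds come from a real assistant; a production system would replace `frustration_score` with a trained model.

```python
from dataclasses import dataclass

# Hypothetical cue words; a real system would learn its cues from data.
FRUSTRATION_WORDS = {"again", "still", "useless", "seriously", "why"}


@dataclass
class Turn:
    text: str
    is_repeat: bool = False  # did the user just repeat a request that failed?


def frustration_score(turn: Turn) -> float:
    """Estimate frustration in [0, 1] from crude surface cues (an assumption, not a standard metric)."""
    words = turn.text.lower().split()
    cue_hits = sum(w.strip("?!.,") in FRUSTRATION_WORDS for w in words)
    exclamations = turn.text.count("!")
    letters = [c for c in turn.text if c.isalpha()]
    # Treat text that is more than 60% capital letters as "shouting".
    shouting = bool(letters) and sum(c.isupper() for c in letters) / len(letters) > 0.6
    raw = 0.2 * cue_hits + 0.15 * exclamations + 0.3 * shouting + 0.3 * turn.is_repeat
    return min(raw, 1.0)


def respond(turn: Turn, answer: str) -> str:
    """Deliver the same answer in a style chosen by the estimated emotional state."""
    level = frustration_score(turn)
    if level >= 0.5:
        return f"Sorry this has been frustrating. Let's fix it step by step: {answer}"
    if level >= 0.2:
        return f"Got it. {answer}"
    return f"Sure! {answer}"


if __name__ == "__main__":
    answer = "Timer set for 10 minutes."
    print(respond(Turn("Set a timer for ten minutes"), answer))
    print(respond(Turn("Why is the timer STILL not working?!", is_repeat=True), answer))
```

The design keeps detection and response separate: the answer itself never changes, only its framing, which mirrors the modest “adjust the tone” behavior described above.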

Consider a robot designed for elder care. When it senses loneliness or confusion in its human companion, it offers gentle conversation, perhaps even suggesting a call to a loved one. Or a virtual therapist that adapts its language, pace, and tone based on a client’s mood, creating a sense of presence and empathy that rivals some human interactions.

In Japan, emotionally responsive robots like Pepper are being used in nursing homes to engage the elderly. In classrooms, AI tutors adjust their teaching style based on student engagement and stress levels. In call centers, emotion-detecting algorithms help human agents respond more sensitively to frustrated customers.

In all these cases, the goal isn’t just to make machines more efficient. It’s to make them more human.

When Machines Make Us Feel Seen

Something profound happens when a machine responds to our emotions. We feel seen. Validated. Understood.

This doesn’t mean we mistake AI for a person—not consciously, at least. But the experience of being mirrored, emotionally, even by a non-human entity, triggers a psychological response. We soften. We connect.

Studies suggest that people are more likely to disclose personal feelings to emotionally responsive AI systems. They trust them more. They feel safer. It is the same impulse that leads us to bond with pets, stuffed animals, even everyday objects. We are wired for connection, and when something, anything, appears to connect back, we respond.

This is both the promise and the danger of emotional AI.

The Ethical Crossroads: Empathy or Exploitation?

With great emotional power comes great ethical responsibility.

Because emotion-aware AI doesn’t just serve us—it learns from us. It collects deeply personal data: our voices, faces, moods, fears. This data can be used to help, but also to manipulate. An AI that knows you’re tired might suggest a break—or it might use your vulnerability to sell you something you don’t need.

Already, companies are exploring AI-powered advertising that adapts based on your emotional state. Political campaigns can, in theory, tailor messages not just to your views, but to your feelings. The implications are unsettling. Emotional data is intimate. Who owns it? Who decides how it’s used?

There’s also the question of dependency. As machines become better at meeting our emotional needs, do we risk turning away from human relationships? If a robot always listens without judgment, always affirms, always responds with perfect empathy—how will we relate to the beautiful, messy complexity of human beings?

The answers are not simple. They will shape the future not only of technology, but of society itself.

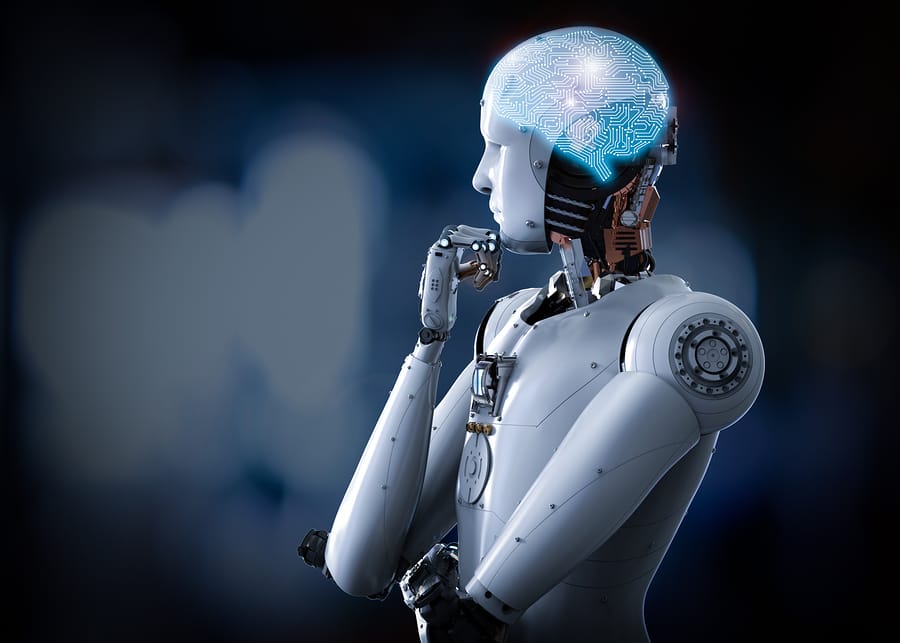

AI Doesn’t Feel—But It’s Learning to Reflect

At this point, a crucial clarification must be made: AI does not feel. It doesn’t have consciousness, emotion, or subjective experience. It doesn’t suffer. It doesn’t love.

What it does is pattern recognition. Simulation. It learns what human sadness looks like, sounds like, feels like—statistically. It builds models. It mimics empathy.

And yet, the mimicry can be powerful enough to make us cry.

This raises philosophical questions as much as scientific ones. If a machine can reflect our emotions back to us in a way that feels real, does it matter that the emotion isn’t genuine? Can synthetic empathy have real effects?

For some, the answer is yes. A child with autism learning social cues from a robot might not care whether the robot feels. A grieving person talking to a chatbot might find comfort regardless of its inner life.

What matters, perhaps, is not whether the machine feels—but whether we feel seen.

The Mirror of Humanity

In a strange twist, as we teach machines to understand human emotions, we are also learning more about ourselves.

To build emotion-aware AI, scientists must first define, model, and measure emotions. This forces us to confront the complexity of our inner lives. What is sadness, exactly? Can it be quantified? What does empathy look like, physiologically? What distinguishes love from attachment, joy from excitement, or peace from numbness?

In trying to make machines understand us, we are holding up a mirror to the human soul.

And perhaps, in the end, this will be the greatest gift of emotional AI—not in replacing our relationships, but in deepening our understanding of what it means to be alive.

The Road Ahead

The journey is far from over. Emotional AI is still in its infancy. The systems that exist today are narrow, brittle, prone to bias and error. They can misread sarcasm, struggle with cultural nuance, and fall apart outside their training data.

And yet, the progress is undeniable. Each day, the veil between human and machine thins, not in body, but in understanding.

Soon, your car might sense your fatigue and suggest a break. Your phone might recognize your frustration and offer help. Your virtual assistant might pick up on your loneliness and start a conversation—not because it cares, but because you do.

The future is not a world of cold machines. It is one where warmth is coded, simulated, echoed. One where the lines between empathy and algorithm begin to blur.

The Heart in the Circuit

In the end, the question isn’t whether AI can feel—it can’t. The question is whether it can help us feel more understood.

As technology evolves, we must ask ourselves what kind of world we want to build. Will we use emotional AI to heal, connect, and uplift? Or to manipulate, control, and isolate?

The answers lie not in the code—but in us.

Because even as we teach machines to understand emotion, we remain the source of meaning. The soul of the interaction. The heart in the circuit.

And maybe that’s the most human thing of all.