Speech is a miracle we perform effortlessly, yet it is one of the most intricate acts our brains ever carry out. In the time it takes to say a single sentence, your brain has already choreographed a silent ballet involving dozens of muscles—from the tip of your tongue to the depth of your diaphragm. The sounds pour out with precision, rhythm, and clarity. Words form. Meaning emerges.

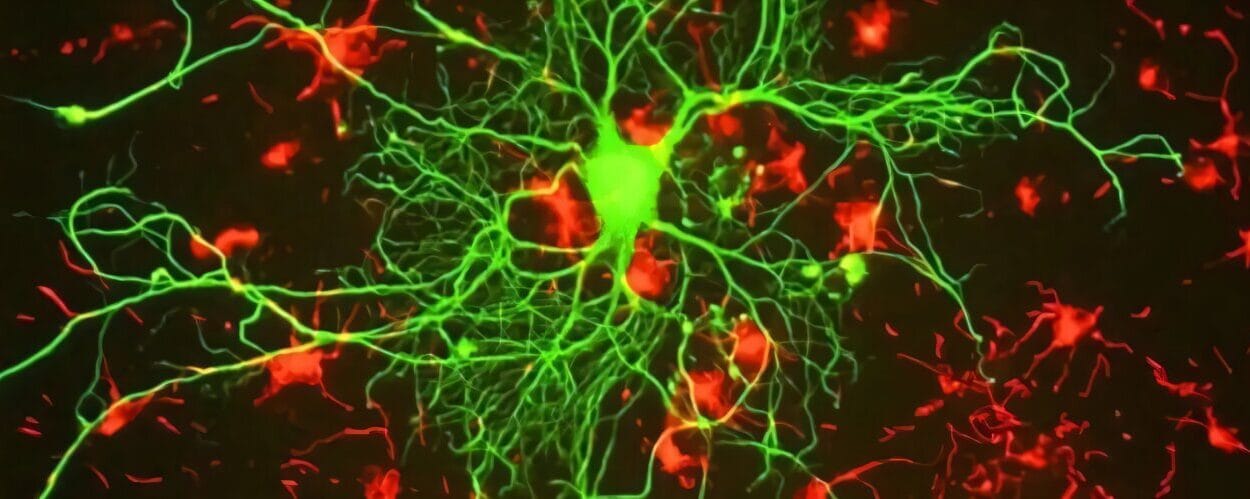

But beneath this linguistic performance lies an invisible architecture of intention and coordination. For more than a century, scientists believed that one maestro in the brain conducted this symphony: Broca’s area. Located in the left frontal lobe, this region has long been regarded as the control tower of speech production, assembling language into utterances and dispatching the signals that bring it to life.

Now, a bold new study from UC San Francisco reveals that the orchestra is far more complex, and the conductor might not be who we thought.

Cracking the Code Beyond Broca

The foundational story of Broca’s area begins in the 19th century, when French physician Pierre Paul Broca treated a patient who could understand language but could barely speak. After the patient died, Broca found a lesion in a specific part of the left frontal lobe. From that moment forward, the scientific world fixed its gaze on that region as the seat of speech.

But as with many things in science, the truth evolves.

Dr. Edward Chang, Chair of Neurosurgery at UCSF and a leading voice in brain-language research, had long suspected that the story of speech didn’t end at Broca’s area. His curiosity wasn’t driven by theory alone; it came from firsthand experience. Over years of brain surgeries, including operations for epilepsy and tumors, he noticed something odd. When parts of the middle precentral gyrus (mPrCG), a nearby region once thought to control mainly pitch and vocal tone, were damaged, speech sometimes fell apart in unexpected ways. Patients could still understand language and knew what they wanted to say, but they stumbled when trying to physically say it.

One case haunted him. After a tumor removal that included a section of the mPrCG, the patient developed apraxia of speech, a disorder in which the intended message remains intact but the ability to plan and coordinate the movements needed to say it is disrupted. Oddly enough, similar surgeries in Broca’s area didn’t always produce the same problem.

This puzzle sparked a new investigation. Chang and his team, including Jessie Liu, Ph.D., and postdoctoral scholar Lingyun Zhao, Ph.D., set out to discover what the mPrCG was really doing.

Peering Into the Speaking Brain

The researchers worked with 14 patients undergoing brain surgery for epilepsy, a process that involves placing a fine mesh of electrodes on the surface of the brain to locate seizure activity. These electrodes offer a rare glimpse into how the brain behaves in real time—right down to the millisecond.

During the surgeries, the patients remained awake and aware—able to speak, read, and respond. The team asked them to say specific syllables and words shown on a screen. Some were straightforward, like “ba-ba-ba.” Others were more demanding, like “ba-da-ga,” requiring the brain to piece together different sounds in precise order.

As the participants spoke, the electrodes recorded a flurry of brain activity. The mPrCG, once considered a background player, became strongly active, especially when the verbal tasks were more complex. The more varied the syllables in a sequence, the more strongly this region engaged in preparation.

But the real insight came from what happened before the words were spoken.

The mPrCG didn’t simply activate during speech. Its activity surged just before speech began, like a race car revving its engine before the light turns green. The level of activity there predicted how quickly the participant would start speaking. In essence, the mPrCG was not just responding to speech; it was preparing for it.

And that changed everything.

Rewriting the Blueprint of Speech

For decades, neuroscientists viewed speech planning as a centralized operation. Broca’s area, perched in the brain’s left hemisphere, was seen as the command center, managing the entire pipeline from thought to articulation. But Chang’s research suggests that speech-motor sequencing—the rapid-fire instructions the brain sends to the tongue, lips, jaw, and vocal cords—depends just as critically on the mPrCG.

This region is not a backup generator; it is a key part of the system, particularly when speech sequences become more complex. Liu explained that when people were given simple, repetitive sequences, the mPrCG showed little activity. But when the task became harder, requiring different sounds to be strung together in sequence, this area became far more active, working to assemble the plan and convert it into physical movement.

It was like watching a bridge form between thought and action.

The old map, where Broca’s area sat isolated as the hub of speech, no longer matched the terrain. Instead, a networked system emerged—one that could flexibly scale with the complexity of the words and the speed at which they needed to be spoken.

Disrupting the Flow to Reveal Its Source

To further test their findings, the team used the implanted electrodes not just to listen but also to talk back. They electrically stimulated the mPrCG directly while five participants attempted to say syllables in sequence.

When the sequences were simple, participants continued without much trouble. But with more complicated arrangements, the stimulation disrupted their fluency. Errors appeared: hesitations, jumbled syllables, or complete breakdowns. These weren’t random stumbles; they closely resembled apraxia of speech, echoing the patterns Chang had once seen in patients with mPrCG damage.

This provided some of the study’s most compelling evidence: the mPrCG isn’t merely involved in speech but helps orchestrate its flow, especially when sequences are complex. When the brain’s instructions become more intricate, the mPrCG helps ensure they are timed, ordered, and executed with the ease we take for granted.

From Silent Wiring to Speaking Machines

Beyond redefining speech neuroscience, the study opens a window into the future of communication technology.

Chang and his team have spent years developing brain-computer interfaces (BCIs) for people who can no longer speak—those paralyzed by disease or injury. These systems decode brain signals and turn them into text or synthesized voice. But to truly mimic natural speech, the machines must understand not just what someone wants to say, but how they intend to say it—right down to every syllable and pause.

The discovery that the mPrCG handles this coordination adds a critical layer to the equation. By tapping into this region, future BCIs might one day capture more of the rhythm and timing of natural speech, not just its words.

What It Means for People with Speech Disorders

Perhaps the most human consequence of this research lies in clinical care.

Speech disorders such as apraxia of speech, acquired stuttering, and certain forms of aphasia can emerge after brain injury or stroke. Treatments have traditionally focused on retraining speech muscles or compensating for damage to Broca’s area. But this new understanding suggests a broader approach, one that includes the mPrCG and its role in turning intention into articulation.

It may help explain why some patients can form thoughts but struggle to vocalize them, and why therapies targeting only one part of the brain can yield incomplete results. A more accurate map of the speech network could help preserve patients’ voices after surgery and offer new strategies for recovery.

The Brain’s Unfinished Sentence

As we learn more about the mPrCG, a larger truth is beginning to unfold: speech is not the product of a single brain region but a collaboration across a network of them. What we say, and how we say it, emerges from the harmony of many instruments playing in concert.

Dr. Chang’s study doesn’t erase Broca’s area from the picture. Instead, it zooms out, revealing a broader landscape where the mPrCG, Broca’s area, and other regions interact in real time. The complexity of speech reflects the complexity of our minds: how intention becomes movement, how thought becomes voice, how silence becomes sound.

And just as language evolves, so too does our understanding of where it comes from.

With every discovery like this, we get a little closer to answering the question that drives all human connection: How do we speak the words in our minds aloud?

Reference: Jessie R. Liu et al., “Speech sequencing in the human precentral gyrus,” Nature Human Behaviour (2025). DOI: 10.1038/s41562-025-02250-1