In a quiet lab in Shanghai, a team of scientists achieved something that once belonged to the realm of science fiction. They managed to decode Mandarin Chinese—a tonal, complex, and deeply nuanced language—directly from a person’s brain in real time. Using an advanced brain-computer interface (BCI) framework, the researchers reported in Science Advances that they successfully translated neural signals into Mandarin words and sentences, allowing a participant not only to communicate but also to control a robotic arm, a digital avatar, and even interact with a large language model—all through thought alone.

It is the first reported real-time decoding of full sentences in a tonal language, a major step for neurotechnology. The study is more than incremental progress in neuroscience or computer science: it offers a glimpse of a future in which the boundary between thought and technology may one day disappear.

What Are Mind-Reading BCIs Used For?

For many, the idea of a computer reading the human mind evokes fascination, curiosity, and maybe even unease. But for people who have lost the ability to speak—whether through a stroke, amyotrophic lateral sclerosis (ALS), or other neurological conditions—this technology represents hope.

Speech-decoding BCIs are designed to interpret the neural signals that accompany speech attempts. Even when the body can no longer move or speak, the brain often continues to generate these signals. By decoding them, BCIs can restore communication, allowing individuals to express themselves once again.

Beyond restoring speech, BCIs can also give people the ability to control devices directly with their minds—robotic arms, wheelchairs, or digital systems. For patients with paralysis or motor disabilities, this level of autonomy can be life-changing.

While BCIs are not new, most of the existing research has focused on English and other non-tonal languages. Mandarin, with its rich tonal variations, poses unique challenges that have long stymied researchers—until now.

Why Mandarin Is So Difficult to Decode

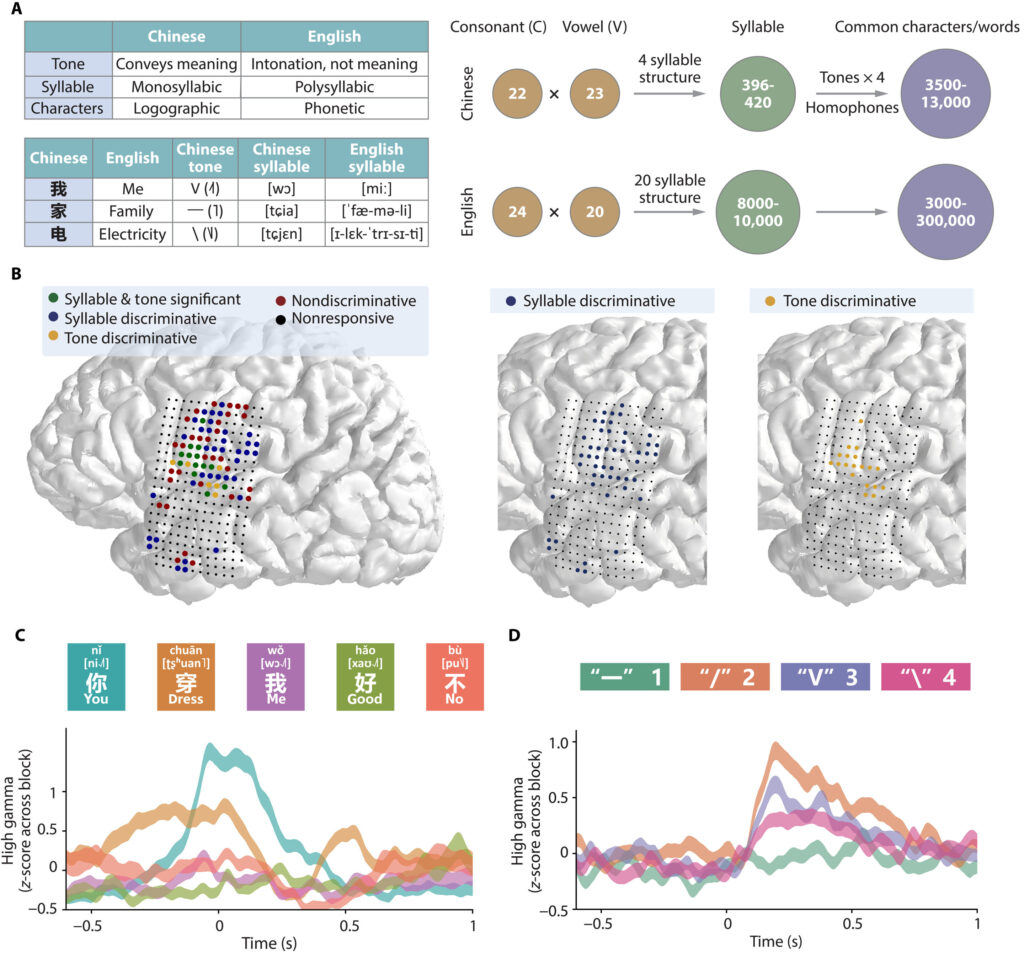

To appreciate the scale of the achievement, one must understand why Mandarin is so difficult for brain-computer interfaces. Unlike English, Mandarin is a tonal language in which each written character corresponds to a single syllable. The same syllable can carry different meanings depending on its tone: flat, rising, dipping, or falling. For example, the syllable “ma” can mean “mother,” “hemp,” “horse,” or “to scold,” respectively, depending solely on tone.
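The “ma” example can be written as a small lookup to show why a decoder needs both pieces of information. This is only an illustrative sketch: the table holds just the four meanings named above, the tone numbers follow the common pinyin numbering, and the function names are made up for this example.

```python
# Pinyin tone numbers: 1 = flat, 2 = rising, 3 = dipping, 4 = falling.
# Only the four "ma" meanings mentioned in the text are included.
MEANINGS = {
    ("ma", 1): "mother",
    ("ma", 2): "hemp",
    ("ma", 3): "horse",
    ("ma", 4): "to scold",
}

def meaning(syllable: str, tone: int) -> str:
    """Look up a meaning; both the syllable and the tone are required."""
    return MEANINGS.get((syllable, tone), "unknown")

def candidates(syllable: str) -> list:
    """All meanings that remain possible when the tone is not known."""
    return [m for (s, _), m in sorted(MEANINGS.items()) if s == syllable]

print(meaning("ma", 3))    # horse
print(candidates("ma"))    # ['mother', 'hemp', 'horse', 'to scold']
```

Without the tone, the syllable alone leaves four candidates, which is the ambiguity a Mandarin decoder has to resolve.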

This creates enormous complexity for decoding speech directly from brain activity. The neural signal must reveal not only which sound is being produced but also how its pitch is being modulated. Previous studies decoded only limited sets of Mandarin syllables, often in controlled settings and not in real time.

The Shanghai team changed that by developing a new approach—one that listens to the symphony of neural signals in unprecedented detail.

Inside the Experiment: The First Real-Time Mandarin Decoder

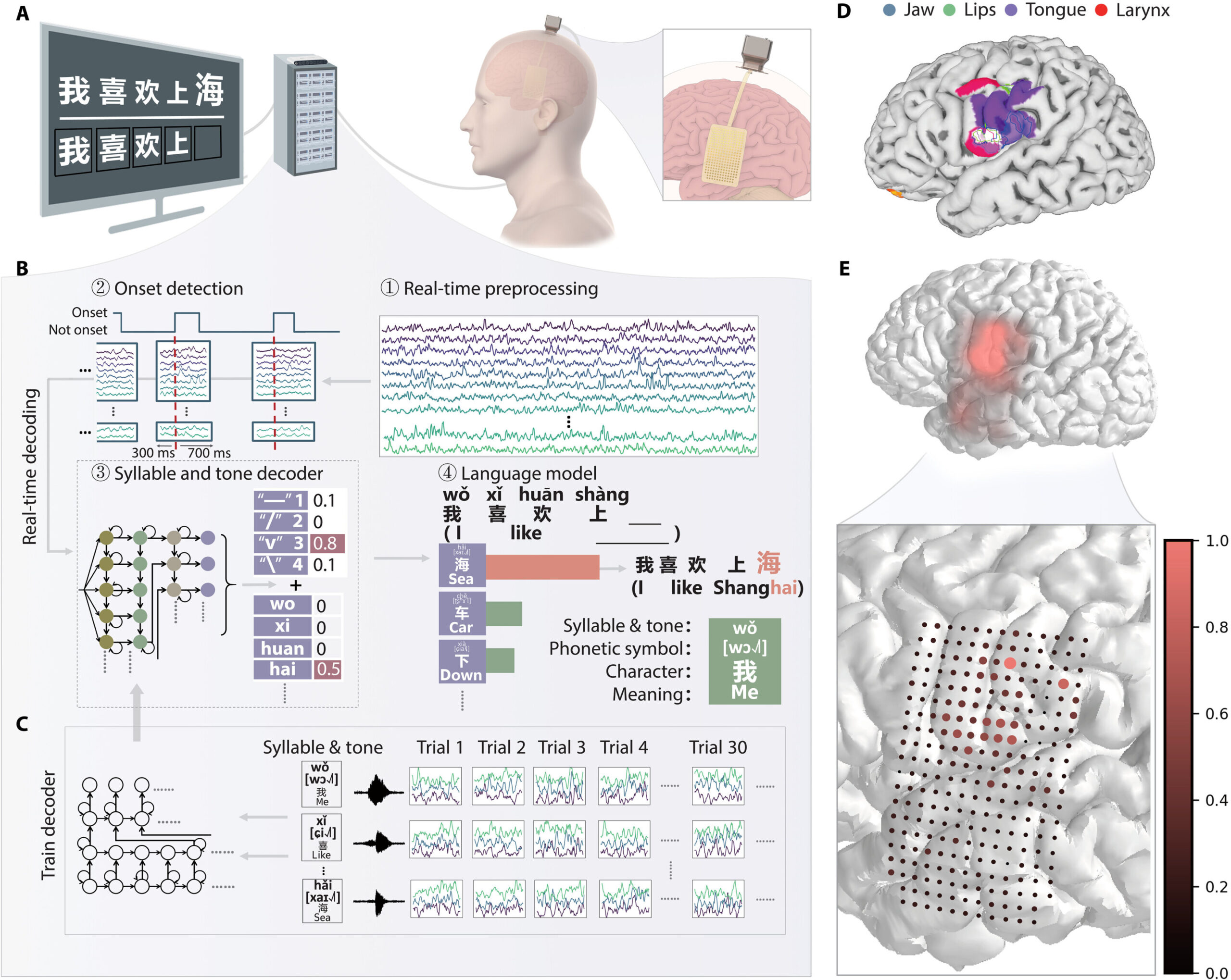

The study involved a 43-year-old woman with epilepsy who had undergone clinical monitoring using a 256-channel high-density electrocorticography (ECoG) array. This array, implanted on the surface of her brain, allowed researchers to record neural activity at extremely high resolution. Over the course of 11 days, the participant engaged in a series of tasks, reading both single characters and full sentences aloud while the BCI system recorded her brain signals.

The team then integrated these neural recordings with a 3-gram Mandarin language model, a statistical model that scores each candidate character given the two characters before it, to predict likely character sequences. What emerged was a framework that could translate brain activity into Mandarin text in real time.
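To make the role of a 3-gram model concrete, here is a minimal sketch of how such a model can correct a neural decoder's guesses. It is not the authors' pipeline: the candidate probabilities, the tiny trigram table, the floor value, and the beam search are all assumptions invented for illustration.

```python
import math

# Hypothetical decoder output: for each position, candidate characters with
# probabilities. All numbers here are invented for the example.
neural_probs = [
    {"我": 0.9, "窝": 0.1},
    {"想": 0.6, "像": 0.4},
    {"喝": 0.45, "和": 0.55},   # the decoder slightly prefers the wrong character
    {"水": 0.7, "睡": 0.3},
]

# Toy trigram table: P(character | previous two characters).
# Trigrams that are not listed fall back to a small floor probability.
TRIGRAM = {
    ("<s>", "<s>", "我"): 0.5,
    ("<s>", "我", "想"): 0.4,
    ("我", "想", "喝"): 0.3,
    ("我", "想", "和"): 0.05,
    ("想", "喝", "水"): 0.6,
}
FLOOR = 0.01

def lm_logprob(prev2, prev1, ch):
    """Log probability of ch given the two preceding characters."""
    return math.log(TRIGRAM.get((prev2, prev1, ch), FLOOR))

def decode(steps, lm_weight=1.0, beam_width=3):
    """Beam search that adds neural and language-model log probabilities."""
    beams = [(0.0, ["<s>", "<s>"])]
    for step in steps:
        expanded = []
        for score, hist in beams:
            for ch, p in step.items():
                lm = lm_logprob(hist[-2], hist[-1], ch)
                expanded.append((score + math.log(p) + lm_weight * lm, hist + [ch]))
        expanded.sort(key=lambda b: b[0], reverse=True)
        beams = expanded[:beam_width]
    return "".join(beams[0][1][2:])   # drop the two start markers

greedy = "".join(max(step, key=step.get) for step in neural_probs)
print(greedy)                 # 我想和水 (most likely character at each position)
print(decode(neural_probs))   # 我想喝水 (language model fixes the third character)
```

In this toy case the decoder alone picks 和 over 喝, but the trigram context 我想 makes 喝 far more plausible, so the combined score recovers the sentence "I want to drink water."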

The results were astonishing. In single-character tasks, the system achieved a median syllable identification accuracy of 71.2%. In real-time sentence decoding, it reached 73.1% character accuracy and a communication rate of 49.7 characters per minute.
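For readers who want to see how figures like these can be computed, the sketch below shows one common approach. It assumes that character accuracy is one minus the character error rate (edit distance divided by reference length); the study's exact scoring procedure may differ, and the function names and example sentences are hypothetical.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance between two character sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1]

def character_accuracy(ref: str, hyp: str) -> float:
    """Assumed definition: 1 - (edit distance / reference length)."""
    return 1.0 - edit_distance(ref, hyp) / len(ref)

def chars_per_minute(n_chars: int, seconds: float) -> float:
    """Communication rate in characters per minute."""
    return n_chars * 60.0 / seconds

# One wrong character out of four gives 75% accuracy.
print(character_accuracy("我想喝水", "我想和水"))   # 0.75
# Ten characters decoded in twelve seconds.
print(chars_per_minute(10, 12.0))                   # 50.0
```

At the reported 49.7 characters per minute, each character takes roughly 1.2 seconds (60 / 49.7) to decode.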

These numbers might sound technical, but they represent something profoundly human: a thought turned directly into language—without the need for speech, movement, or typing.

Understanding the Science Behind It

To decode speech from brain signals, the researchers focused on the ventral sensorimotor cortex, a region known to encode articulatory movements—the subtle muscle actions involved in speaking. Neural signals from this area were transformed into distinct linguistic units or articulatory gestures.

By combining these neural features with language models, the system could infer entire sentences. What made this approach remarkable was its ability to detect both syllable structure and tone, the twin pillars of Mandarin pronunciation. The ECoG array’s ultra-dense electrodes provided stable coverage over critical speech regions, enabling precise mapping of these neural signatures.
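One simple way to picture decoding syllable and tone together is to score each with its own classifier and then combine the two. The sketch below does this under a strong simplifying assumption, namely that the two predictions are independent. The probabilities and names are invented, and the article does not say the study combined them this way.

```python
from itertools import product

# Hypothetical classifier outputs for one spoken character (invented numbers).
syllable_probs = {"ma": 0.7, "ba": 0.2, "na": 0.1}
tone_probs = {1: 0.1, 2: 0.15, 3: 0.6, 4: 0.15}

def joint_ranking(syllables, tones):
    """Rank (syllable, tone) pairs by the product of their probabilities.

    Multiplying the two treats syllable and tone as independent, which is
    an assumption made only to keep the example small.
    """
    joint = {
        (s, t): ps * pt
        for (s, ps), (t, pt) in product(syllables.items(), tones.items())
    }
    return sorted(joint.items(), key=lambda kv: kv[1], reverse=True)

best, prob = joint_ranking(syllable_probs, tone_probs)[0]
print(best, round(prob, 2))   # ('ma', 3) 0.42
```

Here the top-ranked pair is "ma" with the dipping tone, which corresponds to "horse" in the earlier example.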

The team identified clear, distinct neural correlates for different tones and syllables, suggesting that the brain processes these elements in separable, yet interrelated ways. This understanding may prove key not only for Mandarin but for other tonal languages spoken by billions around the world.

What This Means for People with Speech Loss

For those who have lost their ability to speak, communication is often the greatest longing. Technologies like this offer a bridge back to the world—a way to express thoughts, emotions, and desires once trapped within the mind.

In practical terms, the Shanghai study demonstrates that brain-computer interfaces can now handle more complex linguistic systems. It opens the door for future devices that can restore fluent communication to people who speak not only English, but Mandarin, Thai, Vietnamese, or any other tonal language.

It also suggests that BCIs could eventually carry communication past the barriers of speech, movement, or even written language.

The Limitations and the Road Ahead

Despite its success, the study had important limitations. The experiment involved only one participant, meaning the results can’t yet be generalized. The electrodes, while powerful, were originally designed for epilepsy monitoring and didn’t cover every brain region involved in tone perception or higher-level language processing.

Future research will need to expand electrode coverage and involve more participants to refine the models. The authors also envision incorporating signals from higher-order language regions such as the middle temporal gyrus, inferior frontal gyrus, and supramarginal gyrus, areas associated with processing meaning and syntax.

By blending motor control (how we speak) with linguistic understanding (what we mean), future BCIs could achieve even greater accuracy, producing seamless, natural speech directly from thought.

The Promise of Thought-to-Speech Communication

Imagine a world where a person who has been silent for years can once again say “I love you” simply by thinking it. Where a paralyzed artist can describe a vision in their mind, and an AI assistant brings it to life on screen. This is not fantasy—it’s the trajectory of neurotechnology.

BCIs are evolving from experimental tools to potential life-changing medical devices. Each improvement brings us closer to restoring dignity and communication to those who have lost them. But the implications go even further.

As BCIs become more sophisticated, they may one day serve as a general-purpose way to interact with computers. Instead of typing or speaking, we could think commands, compose messages, or design creations with our minds. Such possibilities will raise ethical questions about privacy, autonomy, and the boundaries of thought, but they also carry immense promise.

The Ethical Dimension of Reading the Mind

With great technological power comes profound responsibility. Mind-reading BCIs inevitably raise ethical concerns: Who controls the data? How do we protect mental privacy? Could such systems be misused for surveillance or manipulation?

Researchers emphasize that medical applications—like restoring communication—must remain the primary focus. Strict ethical frameworks, consent protocols, and data protection standards will be essential. The mind is the last frontier of privacy, and safeguarding it must be at the core of every innovation.

Still, the dream of decoding thought is not inherently dystopian. Used wisely, it could enhance empathy, accessibility, and human connection in ways never before possible.

A Step Toward a Thought-Driven Future

The Shanghai team’s success represents more than a technical achievement—it’s a human one. It shows that the mind’s complexity can be understood, translated, and shared. It shows that silence, even the silence imposed by illness or injury, need not be permanent.

By decoding Mandarin in real time, the researchers have shown, in one participant so far, that speech BCIs need not be limited to non-tonal languages. It’s a step toward a world where thought itself becomes a form of communication: direct, immediate, and profoundly personal.

The Mind Speaks, and the Machine Listens

The participant in this groundbreaking study did more than just communicate. She reached across the divide between biology and technology, bridging the human brain and artificial intelligence. Through her, a new form of dialogue was born—one where neurons and algorithms converse in the same language.

In that moment, science became something poetic. A thought, once private and invisible, became sound, symbol, and meaning. The human mind, for the first time in a tonal language, was heard clearly by a machine.

It’s a reminder that technology, at its best, is not about replacing humanity—but amplifying it. Every neural signal decoded, every word restored, is an act of compassion disguised as innovation.

And so, in a lab in Shanghai, with the quiet hum of machines and the steady rhythm of neural activity, humanity took another step toward understanding the most mysterious language of all—the language of the mind.

More information: Youkun Qian et al, Real-time decoding of full-spectrum Chinese using brain-computer interface, Science Advances (2025). DOI: 10.1126/sciadv.adz9968