From the moment we open our eyes, our brains launch into a lifelong process of building an internal model of the world. It’s an astonishingly rapid and seamless operation: we look, we recognize, we understand. Yet behind this smooth interface lies a vast web of neural activity, constructing representations of objects, scenes, and motion—assembling the visual world from raw light. For decades, scientists believed they had a relatively clear understanding of how this process unfolded. But recent work from the lab of Charles D. Gilbert at The Rockefeller University is challenging that picture, revealing a far more dynamic and complex role for the brain’s visual processing system—one that includes feedback loops, moment-to-moment adaptability, and deep ties to memory and experience.

The Visual Pathway: More Than a One-Way Street

At the heart of vision is a network of specialized brain regions located along the ventral visual cortical pathway, which stretches from the primary visual cortex (V1) at the rear of the brain to the temporal lobes. For many years, researchers conceptualized this pathway as a one-way street: visual information traveled in a feedforward direction, building from simple to complex as it ascended the hierarchy of brain areas.

In this traditional view, early neurons in V1 were seen as specialists in simplicity—detecting edges, lines, and basic orientations. As signals moved forward to higher regions like V2 and beyond, the brain would incrementally construct more complex representations—shapes, objects, and ultimately, meaning.

But this feedforward-only model long left out something anatomists had documented: feedback connections, neural pathways running in the opposite direction, from higher brain areas back to earlier ones. Although these connections were well established anatomically, their function remained poorly understood until now.

Feedback in Focus: A New Model of Visual Perception

Gilbert’s research brings this overlooked feedback system into sharp relief. In a groundbreaking study published in Proceedings of the National Academy of Sciences (PNAS), his team shows that these top-down connections are not mere neural redundancies—they are essential to how we see and understand the world.

According to Gilbert, feedback carries prior knowledge and contextual information—what the brain already knows about an object—and delivers it to earlier stages of processing. This allows neurons in those early regions to respond in adaptive and unexpected ways. Rather than being fixed instruments that only handle low-level features, these neurons dynamically change their behavior based on the task at hand and the memory of past encounters.

“Even at the first stages of object perception,” Gilbert explains, “the neurons are sensitive to much more complex visual stimuli than had previously been believed, and that capability is informed by feedback from higher cortical areas.”

This marks a significant shift in how scientists think about visual processing. It suggests that perception is not a linear build-up of features, but a continual interaction between past and present—between what we’ve seen before and what we’re seeing now.

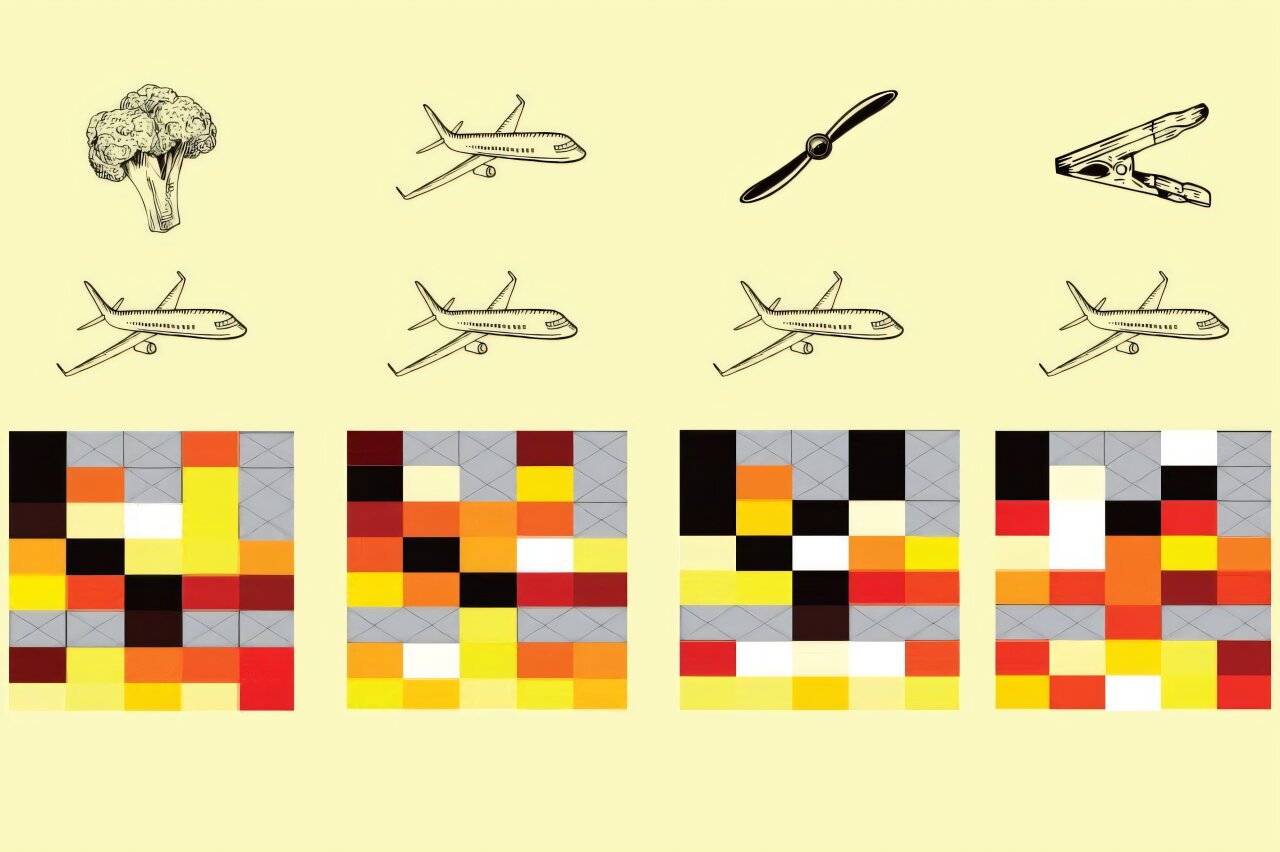

The Experiment: Training Monkeys to See

To probe this theory, Gilbert’s team spent several years working with macaque monkeys trained to recognize a wide range of objects—fruits, tools, machines, and other everyday items. Using state-of-the-art techniques developed at Rockefeller, such as fMRI brain imaging and implanted electrode arrays, they monitored neural activity as the animals performed visual tasks.

The experiment used a “delayed match-to-sample” format. First, the monkey was shown an image of an object. After a brief delay, it was presented with a second image and had to decide whether it matched the first. This required the monkey to hold the initial object in working memory, even as its visual system processed the new stimulus.

Importantly, the second image was often only a partial view or a cropped version of the original object—forcing the brain to draw on memory and contextual clues to make the match.

As the monkeys became proficient in the task, researchers saw dramatic changes in the way neurons responded to visual input. Individual neurons that once only fired in response to simple shapes began responding selectively to more complex features, especially when those features matched an object the monkey had learned to recognize.

“We learned these neurons are adaptive processors,” Gilbert says. “They change on a moment-to-moment basis, taking on different functions that are appropriate for the immediate behavioral context.”

Plasticity and the Power of Learning

This adaptability is part of what neuroscientists call plasticity—the brain’s ability to change its structure and function based on experience. Gilbert and his long-time collaborator, Nobel laureate Torsten N. Wiesel, have previously shown that neurons in the visual cortex can form long-range horizontal connections, allowing them to link information across large areas of the visual field.

In the new study, Gilbert’s team builds on this work by showing that neurons can also change which inputs they prioritize, switching from task-irrelevant to task-relevant signals as needed. This adds a new layer of flexibility to the brain’s perceptual machinery.

What’s more, the feedback connections appear to play a key role in this process. They don’t just echo what has already been processed; they shape how neurons respond in real time, modifying the function of early cortical areas based on high-level knowledge and expectations.

“In a sense,” Gilbert says, “the higher-order cortical areas send an instruction to the lower areas to perform a particular calculation, and the return signal—the feedforward signal—is the result of that calculation.”

This iterative loop between top-down and bottom-up processing allows the brain to integrate sensory input with stored knowledge, creating a coherent and adaptive perception of the world.

Not Just Seeing, but Understanding

One of the most profound implications of Gilbert’s work is that vision is not merely about detecting objects—it’s about understanding them. When we see a partially obscured car, or a friend’s face in shadow, our brains don’t passively receive information. Instead, they actively fill in the gaps, guided by memory and context.

This means that perception is subjective and deeply tied to experience. What we see is shaped not just by the light entering our eyes, but by everything we’ve seen before.

The findings also call into question the long-standing idea that early visual areas are hardwired and immutable. Instead, they suggest a brain that is constantly updating itself—a flexible and dynamic system, even in adulthood.

This has broad implications for fields ranging from education and artificial intelligence to clinical neuroscience.

Implications for Autism and Beyond

Perhaps most exciting are the potential applications in understanding neurodevelopmental disorders like autism. Gilbert’s lab is now investigating how differences in feedback processing may underlie the altered perceptual experiences seen in autism spectrum disorder (ASD).

Working with research specialist Will Snyder, the lab is using mouse models of autism to explore how cortical circuits operate differently during perception. By comparing these mice with their wild-type littermates and observing their brain activity during natural behaviors, the team hopes to uncover fundamental differences in how feedback and feedforward signals interact.

These studies are being conducted at the Elizabeth R. Miller Brain Observatory, a cutting-edge research facility on the Rockefeller campus equipped with advanced neuroimaging technologies. The goal is to identify whether disruptions in top-down processing may contribute to the sensory sensitivities and perceptual differences often reported in autistic individuals.

“If we can understand the cellular and circuit basis for these interactions,” Gilbert says, “it could expand our understanding of the mechanisms underlying brain disorders—not just in autism, but across a range of conditions.”

The Broader Picture: A Brain in Dialogue with Itself

Gilbert’s findings offer a compelling new view of the brain—not as a rigid hierarchy, but as a deeply interconnected system in constant dialogue with itself. Feedback isn’t an afterthought or a correction—it’s a fundamental part of how we perceive and interpret the world.

This new model doesn’t just redefine vision—it hints at broader principles of brain function. Similar feedback loops may operate in hearing, touch, motor control, language, and even abstract thinking. Anywhere the brain must interpret incomplete or ambiguous information, top-down influences may guide the process.

This helps explain why our perceptions are so richly informed by context: why we can recognize a melody in a noisy room or read a word despite a missing letter. It also sheds light on the brain’s remarkable ability to learn, adapt, and rewire itself throughout life.

Conclusion: Seeing with the Mind’s Eye

In the end, Charles Gilbert’s work illuminates a profound truth: to see is not just to look, but to know. Our brains don’t simply record the world—they interpret it, reshape it, and build it anew with each passing moment.

Vision is a conversation between memory and sensation, between past experience and present input. And it’s a conversation powered by feedback—a countercurrent stream of information flowing down the visual hierarchy, enriching and refining what we perceive.

By mapping these flows and understanding their mechanisms, researchers like Gilbert are bringing us closer to one of neuroscience’s greatest goals: to truly understand how the brain creates the experience of reality. In doing so, they remind us that seeing is not merely a mechanical act but a deeply human one, rooted in learning, context, and the shimmering interplay of mind and world.

Reference: Tiago S. Altavini et al., “Expectation-dependent stimulus selectivity in the ventral visual cortical pathway,” Proceedings of the National Academy of Sciences (2025). DOI: 10.1073/pnas.2406684122