It’s a crisp morning in Silicon Valley. Sunlight glints off sleek glass facades, where logos like Apple, Google, Amazon, Meta, and Microsoft shimmer like modern-day coats of arms. Inside these fortresses of technology, in conference rooms perfumed with artisanal coffee and electric anticipation, a new kind of arms race is unfolding. It’s not for search dominance or social networks, not for cloud supremacy or smartphone market share. Instead, it’s for the very beating hearts of modern machines:

Chips.

These silicon slivers, smaller than a postage stamp yet dense with billions of transistors, have become the lifeblood of the digital age. And in boardrooms and engineering labs, tech giants are increasingly asking a provocative question:

Why let someone else build our brains?

For decades, tech companies bought chips from outside specialists such as Intel, AMD, Nvidia, Broadcom, and Qualcomm. But over the last decade, an epic pivot has taken hold. The world’s most powerful companies have decided to design their own custom chips, even as they still rely on outside foundries to manufacture them. It’s a transformation reshaping not just technology but geopolitics, supply chains, and the very future of computing.

To understand why, we must journey into a world where tiny transistors carry trillion-dollar implications, where a few nanometers separate winners from laggards, and where the race for silicon supremacy may decide who rules the 21st century.

The Cathedral of Moore’s Law

In the early days of Silicon Valley, the digital economy was built atop a simple faith:

Moore’s Law.

Gordon Moore first made the prediction in 1965, three years before he co-founded Intel, and in 1975 he revised it into its famous form: the number of transistors on a chip would double roughly every two years, making computers exponentially faster, cheaper, and more efficient. For half a century, that prophecy delivered a miracle: machines that leapt from room-sized giants to pocket-sized supercomputers, each new generation crushing the last in raw power.
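To make the compounding concrete, here is a minimal Python sketch of an idealized Moore’s Law projection. It assumes a clean doubling every two years starting from the roughly 2,300 transistors of Intel’s 4004 microprocessor (1971); the function name and the fixed doubling period are illustrative assumptions, and real chips never tracked the curve this neatly.

```python
def projected_transistors(year: int,
                          base_year: int = 1971,
                          base_count: int = 2_300,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law: the count doubles every `doubling_period_years`.

    The defaults (Intel 4004 in 1971, two-year doubling) are assumptions
    chosen for illustration, not a fit to real product data.
    """
    doublings = (year - base_year) / doubling_period_years
    return base_count * 2 ** doublings

# Print one projection per decade.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(year):,.0f}")
```

Twenty-five doublings between 1971 and 2021 multiply the starting count by about 33 million, which lands in the tens of billions of transistors.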

Back then, tech companies relied on specialized semiconductor titans—Intel for CPUs, Nvidia for graphics chips, Qualcomm for mobile processors—to supply the brains behind their devices and services. It was a symbiotic ecosystem. Chipmakers handled the mind-bending complexity of silicon design and manufacturing. Tech giants focused on software, user experience, and global distribution.

But by the 2010s, something shifted. Moore’s Law began to sputter. Shrinking transistors further became fiendishly expensive and technically perilous. Progress slowed. And as chip improvements plateaued, software giants felt squeezed, constrained by generic chips ill-suited for their specialized needs.

They started to dream of chips crafted not for the masses, but tailored precisely for their own workloads. From AI to streaming video, from cloud computing to virtual reality, custom silicon promised to unlock performance, reduce costs, and seize competitive advantage.

The era of “Silicon as Commodity” was ending. The age of “Silicon as Strategy” had dawned.

Apple’s Great Silicon Escape

Perhaps no company embodies this transformation more vividly than Apple.

Starting in 2006, Apple’s Macs relied on Intel chips. The marriage was uneasy. While Apple’s designers envisioned laptops that were impossibly thin and fanless, Intel’s chips often ran hot and lagged behind schedule. When the iPhone launched in 2007, Apple depended on Samsung and other suppliers for its processors.

But Apple, driven by an obsession with control and perfection, began designing its own mobile chips. In 2010, it unveiled the A4 processor inside the iPad—a custom chip designed in-house. It was Apple’s first major foray into silicon, and it worked spectacularly.

Over the next decade, Apple’s custom A-series chips rocketed past competitors in performance and efficiency. By designing chips around exactly how iOS and apps worked, Apple created a synergy impossible for generic chipmakers to match. The iPhone became not just a smartphone but a technological tour de force.

Then, in 2020, Apple pulled off its most daring silicon coup. It announced that it would move the Mac away from Intel processors over roughly two years. Macs would instead run on chips Apple designed itself, starting with the M1 processor.

The M1’s debut stunned the industry. It delivered jaw-dropping speed, battery life, and thermal efficiency. Reviewers gushed. Developers raced to adapt software. And Apple secured full-stack control over its entire product line—from silicon to software to hardware design.

It wasn’t just a technological victory. It was a business revolution. By going custom, Apple cut its reliance on Intel, took control of its own product roadmap, increased profits, and differentiated itself in a brutally competitive market. The lesson to other tech giants was clear:

Design your own chips—or risk becoming obsolete.

The Cloud’s Hidden Furnace

Far from Cupertino’s gleaming headquarters, the world’s biggest chip transformation was brewing in massive concrete bunkers filled with blinking lights and whirring fans. These were the data centers—the hidden engines of the internet.

Amazon, Google, and Microsoft were building vast cloud empires, offering computing power to businesses, AI researchers, governments, and startups, while Meta built data centers on a similar scale to serve its billions of users. Billions of searches, emails, video streams, financial trades, and voice queries flowed through these server farms every day.

And those data centers were devouring power. Enormous arrays of standard chips (CPUs, GPUs, networking processors) churned through oceans of data. But the cost was staggering, both financially and environmentally. Energy bills soared. Generic chips, designed for general-purpose computing, often wasted power and added latency on specialized cloud workloads.

Cloud giants saw a tantalizing opportunity. By designing their own chips tailored for specific tasks—like AI inference, video transcoding, or storage optimization—they could achieve:

- Dramatically lower costs per operation.

- Lower power consumption.

- Competitive differentiation over rivals.
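The first two advantages come down to simple ratios. The Python sketch below compares energy per operation for two hypothetical chips; the wattages and throughputs are invented for illustration and are not measurements of any real product. Because electricity is billed per kilowatt-hour, a chip that does the same work on less energy per operation also lowers the energy portion of its cost per operation.

```python
from dataclasses import dataclass

JOULES_PER_KWH = 3.6e6  # 1 kilowatt-hour = 3.6 million joules

@dataclass
class Chip:
    name: str
    watts: float           # hypothetical average power draw under load
    ops_per_second: float  # hypothetical sustained throughput on the target workload

def joules_per_op(chip: Chip) -> float:
    """Energy per operation = power (joules/second) / throughput (ops/second)."""
    return chip.watts / chip.ops_per_second

def kwh_per_exa_ops(chip: Chip) -> float:
    """Energy needed to perform 10**18 operations, in kilowatt-hours."""
    return joules_per_op(chip) * 1e18 / JOULES_PER_KWH

# Hypothetical numbers: the custom chip draws half the power and, being
# specialized for this one workload, delivers twice the throughput.
general = Chip("general-purpose", watts=300.0, ops_per_second=1e12)
custom = Chip("custom accelerator", watts=150.0, ops_per_second=2e12)

for chip in (general, custom):
    print(f"{chip.name}: {kwh_per_exa_ops(chip):.1f} kWh per 10^18 ops")
print(f"energy advantage: {joules_per_op(general) / joules_per_op(custom):.1f}x")
```

With these made-up figures, halving power while doubling throughput yields a fourfold drop in energy per operation; multiplied across millions of servers, ratios like this are what make custom silicon attractive.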

Amazon blazed the trail with its Graviton line of custom Arm-based CPUs for AWS. Amazon says Graviton-based instances offer better price-performance than comparable Intel- or AMD-based instances, giving customers a reason to stay on Amazon’s cloud. Amazon also introduced Trainium and Inferentia, custom chips optimized for machine-learning training and inference, respectively.

Google unveiled its Tensor Processing Units (TPUs), custom-built for deep learning. In a 2017 analysis of its first-generation TPU, Google reported that the chip ran its production AI inference workloads 15 to 30 times faster than contemporary CPUs and GPUs, with 30 to 80 times better performance per watt. Google’s AI services, like language translation, photo recognition, and search ranking, came to run on TPUs.

Microsoft launched custom silicon initiatives for both cloud workloads and AI acceleration, from FPGAs deployed across its Azure data centers to its Azure Maia AI accelerator and Azure Cobalt Arm-based CPU. Meta, too, began designing chips for video processing and AI recommendation engines.

Suddenly, the chip landscape was no longer divided between semiconductor companies and tech companies. The cloud giants had become chip designers in their own right—wielding silicon as a strategic weapon to defend their territories and attack rivals.

AI’s Insatiable Appetite

If there’s one force turbocharging the chip arms race, it’s artificial intelligence.

AI workloads, particularly deep learning, are unlike traditional computing. Training a massive language model or an image classifier requires staggering amounts of computation: enormous matrix multiplications over models with billions of parameters, repeated across vast training datasets. Running a trained model to produce answers, known as inference, demands equally formidable hardware when it must serve millions of users in real time.
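The arithmetic behind that claim fits in a few lines of Python. Multiplying an m-by-k matrix by a k-by-n matrix takes m·n·k multiply-add pairs, or about 2·m·n·k floating-point operations (FLOPs); the naive function below counts them explicitly. The second function applies a widely used rule of thumb for dense neural networks such as transformers, roughly 6 FLOPs per parameter per training token for the forward and backward passes; the model size and token count in the usage line are hypothetical.

```python
Matrix = list[list[float]]

def matmul_with_flops(a: Matrix, b: Matrix) -> tuple[Matrix, int]:
    """Naive matrix multiply that also counts floating-point operations."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    out = [[0.0] * n for _ in range(m)]
    flops = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]
                flops += 2  # one multiply and one add
            out[i][j] = acc
    return out, flops

def training_flops_estimate(num_params: int, num_tokens: int) -> int:
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens

product, flops = matmul_with_flops([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]])
print(product, flops)  # [[19.0, 22.0], [43.0, 50.0]] 16

# Hypothetical model: 7 billion parameters trained on 1 trillion tokens.
print(f"{training_flops_estimate(7_000_000_000, 1_000_000_000_000):.1e} FLOPs")  # 4.2e+22 FLOPs
```

A 2-by-2 product needs only 16 FLOPs, but the same formula applied to billions of parameters and a trillion tokens lands around 10^22 operations, which is why hardware that does nothing but dense matrix math pays off.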

In the early days of deep learning, researchers relied on CPUs. But CPUs, built to run a few threads very quickly, proved too slow for the massive, repetitive arithmetic. The AI community pivoted to GPUs, whose thousands of parallel cores were well suited to the matrix math behind neural networks. Nvidia, which had built GPUs for gamers, became the unexpected king of AI hardware.

But as AI exploded, tech giants realized they didn’t want to remain hostage to Nvidia’s high prices or off-the-shelf designs. AI was becoming central to everything they did, from video recommendations to Alexa’s voice responses to autonomous vehicles interpreting the world around them.

Custom AI chips promised better performance, lower power consumption, and unique features tailored to each company’s proprietary algorithms. Google’s TPUs were an early triumph. Meta is investing in its MTIA chips for AI inference. Microsoft integrates AI acceleration into its Azure servers. Even Tesla designs custom chips to process the flood of camera data from its cars for its driver-assistance and self-driving software.

Every tech giant sees AI as existential—and custom silicon has become the secret sauce to keep them ahead.

Economics of Scale and Differentiation

Designing chips is mind-bendingly expensive. A single new chip design can cost hundreds of millions—or even billions—of dollars when you factor in R&D, software tools, engineering teams, and manufacturing runs at cutting-edge process nodes.

So why are tech giants diving in anyway?

Because the economics tip in favor of in-house chips once you reach massive scale. For a small company selling modest volumes of hardware, designing custom silicon is madness. But if you’re shipping tens of millions of iPhones or running millions of servers, the savings and performance gains can easily justify the investment.
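A back-of-the-envelope break-even calculation shows why volume matters. The Python sketch below assumes a one-time design cost and a fixed saving per unit compared with buying an off-the-shelf chip; both dollar figures are hypothetical, and the model ignores real-world complications such as yield, changing manufacturing costs, and the time value of money.

```python
import math

def break_even_units(design_cost_usd: float, savings_per_unit_usd: float) -> int:
    """Smallest number of units whose cumulative savings cover the design cost."""
    if savings_per_unit_usd <= 0:
        raise ValueError("a custom chip must save money per unit to ever break even")
    return math.ceil(design_cost_usd / savings_per_unit_usd)

def net_savings_usd(units: int, design_cost_usd: float, savings_per_unit_usd: float) -> float:
    """Total per-unit savings minus the one-time design cost."""
    return units * savings_per_unit_usd - design_cost_usd

# Hypothetical: $500 million to design the chip, $20 saved on every device shipped.
DESIGN_COST = 500_000_000
SAVING_PER_UNIT = 20

print(break_even_units(DESIGN_COST, SAVING_PER_UNIT))  # 25000000
for units in (1_000_000, 50_000_000):
    net = net_savings_usd(units, DESIGN_COST, SAVING_PER_UNIT)
    print(f"{units:>12,} units: net {net:+,.0f} USD")
```

Under these assumptions, a company shipping a million units ends up about $480 million in the hole, while one shipping fifty million comes out $500 million ahead.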

Moreover, custom chips let companies differentiate. Generic chips are available to everyone. But a custom chip can deliver unique capabilities:

- Apple can build iPhones that run faster, cooler, and longer than many Android rivals.

- Google’s AI services are tuned to run on TPUs, which outside customers can rent mainly through Google Cloud, drawing them into its ecosystem.

- Amazon’s Graviton CPUs make AWS more attractive and cost-efficient.

Custom silicon becomes not just an engineering triumph but a business moat.

The Great Semiconductor Squeeze

Yet as tech giants surged into chip design, they hit a brutal reality:

The semiconductor manufacturing bottleneck.

Even the mightiest of these tech giants don’t own the factories that make their chips, partly because building an advanced fab can cost $20 billion or more. The tools are staggeringly complex: the most advanced lithography machines pattern circuits using extreme ultraviolet light with a wavelength of just 13.5 nanometers. Only a handful of companies, chiefly Taiwan’s TSMC and South Korea’s Samsung, can manufacture chips at the bleeding edge.

This has created a precarious dependency. When COVID-19 swept the globe, chip manufacturing faltered. Demand for laptops, phones, and cloud services skyrocketed, but factories couldn’t keep up. Automakers idled plants. Game consoles vanished from shelves. Prices soared.

Tech giants designing their own chips found themselves competing with everyone else for precious wafer capacity. Apple, as TSMC’s biggest customer, fared relatively well. Smaller players struggled.

The geopolitical stakes grew terrifying. Taiwan sits just 100 miles off China’s coast, and tensions between Beijing and Washington have placed the global chip supply chain under existential threat. A military conflict over Taiwan could cripple the world’s access to advanced semiconductors, paralyzing industries from smartphones to AI.

Governments are now pouring billions into domestic chip manufacturing. The U.S. CHIPS and Science Act provides roughly $52 billion for semiconductor manufacturing and research. Europe, Japan, and China are racing to build local fabs. Yet building capacity takes years, and geopolitical uncertainty looms large.

Custom silicon offers tech giants a competitive edge—but no one can escape the geopolitical risk tied to the global semiconductor supply chain.

The Rise of RISC-V and the Open Silicon Revolution

While tech giants invest billions in proprietary chips, another revolution simmers quietly: the rise of open-source hardware.

For decades, most processors were based on proprietary instruction sets like Intel’s x86 or Arm’s architectures. But in recent years, an open alternative called RISC-V has emerged: a royalty-free instruction set architecture that anyone can use to design custom chips.
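Because the RISC-V specification is public, anyone can implement its instruction encodings without a license. As a small illustration, the Python sketch below packs a few register-to-register instructions from the RV32I base instruction set into their 32-bit R-type machine-code format. It covers only the handful of operations in its table and is an illustration, not an assembler.

```python
# Bit layout of a RISC-V R-type instruction (RV32I base integer ISA):
#   funct7[31:25] | rs2[24:20] | rs1[19:15] | funct3[14:12] | rd[11:7] | opcode[6:0]
OPCODE_OP = 0b0110011  # major opcode for register-register integer operations

# (funct3, funct7) fields that select the operation within OPCODE_OP
R_TYPE_FIELDS = {
    "add": (0b000, 0b0000000),
    "sub": (0b000, 0b0100000),
    "xor": (0b100, 0b0000000),
    "or":  (0b110, 0b0000000),
    "and": (0b111, 0b0000000),
}

def encode_r_type(mnemonic: str, rd: int, rs1: int, rs2: int) -> int:
    """Pack an R-type instruction into its 32-bit machine-code word."""
    funct3, funct7 = R_TYPE_FIELDS[mnemonic]
    for reg in (rd, rs1, rs2):
        if not 0 <= reg < 32:  # RV32I has 32 integer registers, x0..x31
            raise ValueError(f"register x{reg} does not exist")
    return (
        (funct7 << 25) | (rs2 << 20) | (rs1 << 15)
        | (funct3 << 12) | (rd << 7) | OPCODE_OP
    )

print(f"{encode_r_type('add', rd=1, rs1=2, rs2=3):#010x}")  # 0x003100b3
print(f"{encode_r_type('sub', rd=5, rs1=6, rs2=7):#010x}")  # 0x407302b3
```

Note how `add` and `sub` differ only in the funct7 field; a chip designer implementing RISC-V decodes exactly these publicly documented bit fields.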

Companies like SiFive, Alibaba, and Western Digital are exploring RISC-V to build everything from data center CPUs to microcontrollers. For tech giants, RISC-V promises freedom from licensing fees and legal entanglements. It’s still young and less mature than Arm or x86—but momentum is growing.

Custom chips built on open architectures could become the next frontier, lowering costs and democratizing chip innovation. The open-source software revolution transformed computing once. Could open silicon do it again?

Culture Clash: Hardware vs. Software

Inside tech giants, building custom chips has triggered a fascinating culture clash.

Software engineers thrive in a world of rapid iteration. Code can be written, tested, and shipped in days. Bugs can be patched overnight. But silicon is different. Designing a chip takes years. Fabricating it is slow and costly. A single mistake can doom a chip worth hundreds of millions.

This cultural divide has posed massive challenges. Tech companies famous for agile software development have had to adopt the painstaking discipline of hardware design:

- Verification teams labor over endless simulations to ensure chips work flawlessly.

- Engineers describe logic circuits in hardware description languages such as Verilog and VHDL, a world far removed from Python or JavaScript.

- Hardware roadmaps must be planned years in advance, with high stakes if a chip slips its schedule.
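Real verification teams simulate register-transfer-level designs in dedicated tools, but the core idea, checking a design against a trusted reference model, fits in a short Python sketch. Below, a hypothetical adder is built only from XOR, AND, and OR operations on individual bits, the way a circuit would be, and compared with ordinary integer addition: exhaustively for a small width, and with seeded random stimulus for a width too large to enumerate.

```python
import random

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder expressed with the gates a circuit would use."""
    total = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return total, carry_out

def ripple_carry_add(x: int, y: int, width: int) -> tuple[int, int]:
    """Design under test: chain `width` full adders, least significant bit first."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry

def reference_add(x: int, y: int, width: int) -> tuple[int, int]:
    """Golden reference model: integer addition split into sum and carry-out."""
    full = x + y
    return full & ((1 << width) - 1), full >> width

def check(x: int, y: int, width: int) -> None:
    """Raise on any mismatch between the design and the reference model."""
    got, want = ripple_carry_add(x, y, width), reference_add(x, y, width)
    if got != want:
        raise AssertionError(f"mismatch for {x} + {y}: design {got}, reference {want}")

def verify_exhaustive(width: int) -> int:
    """Check every input pair; feasible only for tiny widths."""
    for x in range(1 << width):
        for y in range(1 << width):
            check(x, y, width)
    return (1 << width) ** 2

def verify_random(width: int, trials: int, seed: int = 0) -> int:
    """Check seeded random input pairs, as large designs are simulated."""
    rng = random.Random(seed)
    for _ in range(trials):
        check(rng.getrandbits(width), rng.getrandbits(width), width)
    return trials

print(verify_exhaustive(8), "exhaustive 8-bit cases passed")
print(verify_random(64, 10_000), "random 64-bit cases passed")
```

Exhaustive checking already means 65,536 input pairs at 8 bits and is hopeless at 64 bits (2^128 pairs), which is why real teams rely on random and constrained-random simulation, plus formal methods, rather than trying every input.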

Yet tech giants are learning. Apple’s chip team has become one of the best in the world. Amazon, Google, Meta, and Microsoft have recruited veteran chip designers from Intel, AMD, and Nvidia. Slowly, software behemoths are transforming into hardware powerhouses.

Metaverse, AR, and the Next Silicon Frontier

The chip race is not slowing. Instead, it’s expanding into new frontiers.

The dream of immersive computing—augmented reality (AR), virtual reality (VR), and the so-called metaverse—demands chips that can process vast amounts of visual data with minimal power and heat. Tiny headsets require high-performance silicon that’s cool, efficient, and capable of rendering photorealistic worlds in real time.

Meta is pouring billions into custom chips for AR and VR. Apple designed its M2 chip and a dedicated R1 chip for the Vision Pro mixed-reality headset, which it unveiled in 2023. Qualcomm dominates current AR/VR silicon but faces fierce competition from in-house efforts.

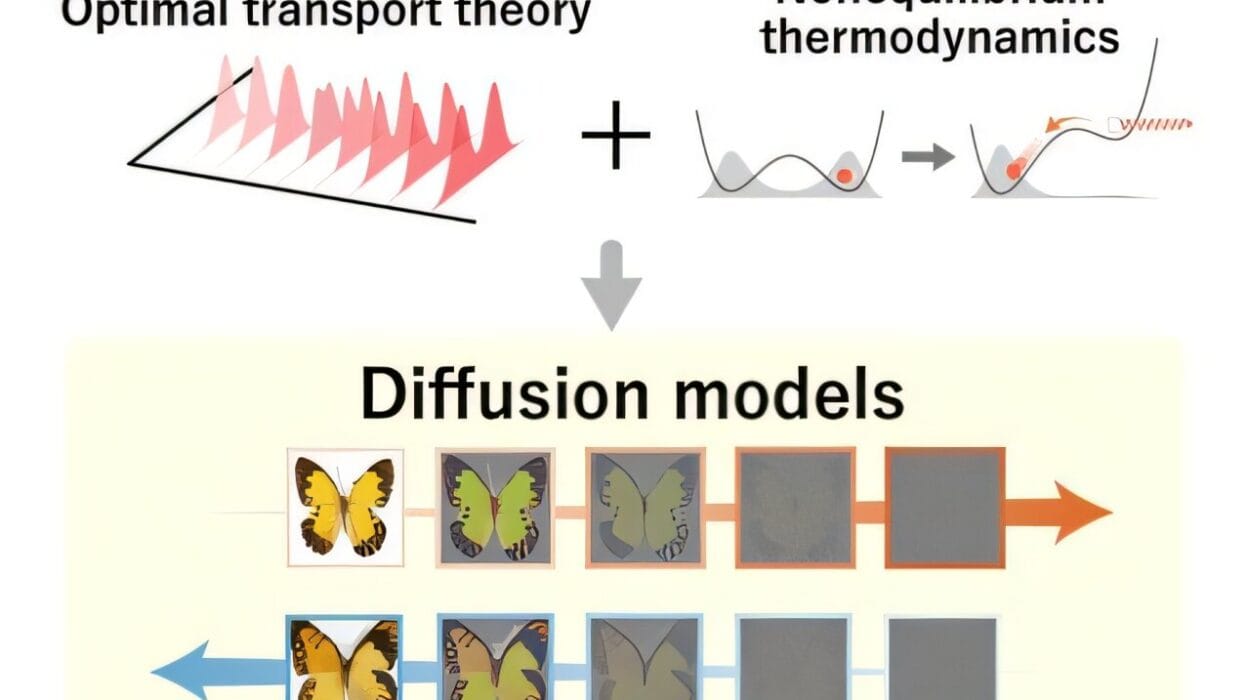

At the same time, AI is evolving rapidly. New AI architectures like transformers and diffusion models push hardware limits even further. Generative AI models like GPT-4 or DALL-E require specialized silicon to run efficiently. Each advance in AI fuels the need for new chips, new architectures, and relentless innovation.

As quantum computing edges closer to practical use, yet another layer of chip complexity beckons. Tech giants are funding quantum chip research, hoping to leap ahead in solving problems that are intractable for today’s classical computers.

The chip race, in other words, is far from over. It’s accelerating into realms we’re only beginning to imagine.

Beyond the Chip: The Battle for the Future

Custom silicon is not just about making phones faster or data centers cheaper. It’s about who defines the future of computing.

If you control the chips, you control:

- The economics of cloud services.

- The capabilities of AI models.

- The energy footprint of devices and data centers.

- The competitive moat against rivals.

Apple, Google, Amazon, Microsoft, and Meta are no longer merely software, services, and device companies. They are increasingly chip designers too. The line between software and silicon is blurring, creating a new industrial landscape where vertical integration is king.

This battle will shape:

- What devices we carry in our pockets.

- How intelligent our digital assistants become.

- How immersive virtual worlds feel.

- Who profits in the trillion-dollar digital economy.

The stakes are existential.

The Human Side of Silicon

Behind all the equations, fabs, and transistor counts lies something profoundly human. The engineers toiling over circuit layouts. The visionaries dreaming of machines that think and learn. The geopolitical strategists worrying about supply chains. The customers demanding longer battery life and instant AI responses.

And perhaps the deeper truth: humanity’s eternal drive to build tools that extend our minds.

In the transistor’s delicate lattice lies a story older than Silicon Valley itself—a story of ambition, creativity, rivalry, and relentless curiosity. A story where tiny slivers of silicon become vessels for our grandest hopes.

And so the giants keep racing, pouring billions into the atom’s secrets, each believing that somewhere in the shifting sands of silicon lies the power to shape the future.

As one chip engineer at Google once confessed, staring into his monitor filled with tangled logic gates:

“It’s more than just chips. We’re carving our destiny, one transistor at a time.”