Imagine you’re in a hospital room, your pulse racing, waiting for test results. A doctor in a white coat stands beside your bed, but she’s not alone. She’s consulting a computer screen that glows with medical data, predictions, and charts. As she delivers her prognosis, you realize something startling: it’s not just the doctor you’re trusting. You’re also placing your faith in the machine beside her.

From the voice that guides your GPS to the algorithms suggesting your next favorite song, technology has become an invisible companion, embedded in nearly every choice we make. Yet beneath the sleek glass screens, silicon chips, and hidden circuits lies a profound question: Why do we trust technology so deeply?

This is not simply a tale of gadgets and code. It’s a story about the fragile architecture of the human mind—its hopes, fears, shortcuts, and blind spots—and how technology has learned to slip seamlessly into our inner world, earning a level of trust we once reserved for priests, teachers, and loved ones. To understand why we trust technology is to peer into the nature of trust itself—and into the desires and vulnerabilities that define us as human beings.

The Roots of Trust: A Primordial Instinct

Before the age of smartphones and smartwatches, trust was a matter of survival. Hundreds of thousands of years ago, on the wide savannahs of Africa, our ancestors needed to know whom they could rely on in their small tribes. Betrayal could mean exile, starvation, or death. Trust was not merely an emotional preference; it was an evolutionary necessity.

Our brains, shaped by these ancient pressures, developed sophisticated machinery for detecting trustworthiness. We read subtle cues: tone of voice, posture, eye contact, facial expressions. A flicker of uncertainty, a hesitation, averted eyes—these tiny signals could tell us if someone was lying.

The modern world, however, blindsides us. Machines have no faces, no expressions, no warm eyes. And yet we trust them. Why?

At the deepest level, our minds crave predictability and control. A technological tool that delivers consistent results begins to feel safe, reliable, almost human. Our ancient trust circuits, designed for social relationships, are co-opted by machines. In a strange, digital kind of way, technology becomes a member of the tribe.

The Allure of Perfection

Trust, paradoxically, often blooms from imperfection. We feel closer to people who admit mistakes, who show vulnerability. Yet when it comes to technology, we demand the opposite. We yearn for perfection.

Consider the awe that sweeps over a crowd watching a self-driving car navigate city streets flawlessly, avoiding collisions with a superhuman calm. Or the breathless admiration for surgical robots capable of incisions so precise they defy the trembling limits of human hands.

We fall in love with technology’s apparent infallibility because we are deeply, achingly aware of our own flaws. We forget our keys. We say the wrong thing. We make decisions clouded by anger, fear, or fatigue. Technology offers a seductive alternative: cool, rational precision. It’s as if we’re seeking a perfect partner, immune to human frailty, who can protect us from our own mistakes.

This longing for perfection becomes a powerful psychological engine driving our trust. Even when technology occasionally fails spectacularly—like a chatbot spouting nonsense or a navigation system sending drivers into lakes—we forgive, because the allure of flawless performance is too strong to resist.

Anthropomorphism: Seeing Humanity in Silicon

Picture this: You’re alone in your living room, and you say, “Alexa, turn off the lights.” A female voice responds, gentle and polite, “Okay.” The room goes dark. You murmur, “Thank you.” And, absurd as it seems, part of you feels like you’ve just had an interaction with a person.

This phenomenon—anthropomorphism—is a cornerstone of why we trust technology. Our minds are wired to detect agency, even where none exists. Ancient humans who imagined a rustle in the grass as a predator survived more often than those who dismissed it as the wind. Over time, our brains evolved to assign intentions to animals, weather patterns, even inanimate objects.

So when a machine speaks to us, reacts to us, or appears to “understand,” it triggers the same mental circuits we use with people. Digital assistants with names, gendered voices, and personalities become not just tools, but companions. A GPS device that politely recalculates our route feels less like a gadget and more like a patient friend guiding us home.

This impulse extends far beyond voice assistants. Robots designed with big eyes and childlike features inspire tenderness and empathy. Users of therapy robots report feeling comforted, as though the machine truly cares. In Japan, owners of robotic pet dogs have held funerals for companions whose circuits have failed, as though they had lost a living being.

Our anthropomorphic tendency is not irrational. It’s an emotional shortcut that makes complex technology approachable. But it also makes us vulnerable to manipulation, willing to trust machines that are, ultimately, incapable of love or loyalty.

The Halo of Science and Authority

Trust often springs from authority. For centuries, scientists in white coats have held a quasi-priestly role in society, guardians of hidden knowledge. Technology inherits this halo. Machines, we believe, are built by brilliant engineers who understand truths beyond our comprehension. If the machine says so, it must be right.

A voice assistant that recites weather data sounds authoritative. A smartphone that tracks our sleep patterns claims insights into our health. A facial recognition system identifies a criminal suspect with high “confidence.” We trust these outputs, often without questioning the complex algorithms behind them.

This trust in technological authority can be dangerous. Many people assume that because a system is technological, it must be objective. Yet machines reflect the biases of the humans who design them. Algorithms trained on biased data can reproduce racial or gender prejudices. Predictive policing tools can reinforce systemic inequalities. But the glossy sheen of technology makes such flaws harder to see.

The illusion of objectivity makes us more willing to surrender personal decisions to technology. We let algorithms choose our music, our news, our romantic partners. Sometimes, we obey instructions from machines even when our instincts protest. News reports regularly describe drivers following GPS directions into absurd places (down staircases, into rivers) because they believe “the computer knows better.”

The halo effect of technology is powerful. It whispers, Trust me. I’m smarter than you. And often, we believe it.

Convenience as a Drug

Trust in technology is not just intellectual. It’s emotional—and deeply physical. Our devices save us effort, time, and mental strain. In return, we become loyal, sometimes obsessively so.

Consider the feeling of relief when your smartphone autocompletes a long email. Or the ease of tapping a ride-sharing app instead of navigating public transit. Each convenience can trigger a small release of dopamine, a neurotransmitter tied to reward and motivation. Over time, these micro-rewards condition us to trust the devices delivering them.

This pattern is often called a “compulsion loop.” The same principles that keep gamblers glued to slot machines keep us reaching for our phones, trusting them to manage our lives. Apps deliver unpredictable rewards: a funny video, a text message from someone we love, an email with good news. The unpredictability makes the rewards even more addictive.

Convenience becomes a powerful emotional glue. We trust technology because we cannot imagine life without it. The idea of navigating a strange city without Google Maps feels as terrifying as sailing without a compass. Technology has become the map, the compass, and the trusted guide.

The Fear of Missing Out

Trust in technology is not merely about positive emotions. It’s also driven by fear. We fear being left behind, out of touch, irrelevant. In a world moving at digital speed, technological trust becomes a kind of social survival.

Imagine a friend raving about a new app that organizes her entire life. Or a colleague who impresses the boss with AI-generated reports. We feel pressure to keep up. Trusting technology becomes an act of conformity.

Companies know this. Marketing messages scream that new devices will “transform your life,” making you healthier, happier, and more connected. Social media platforms exploit FOMO—the fear of missing out—by suggesting that everyone else is already living the digitally enhanced dream.

The psychological drive for belonging makes us place trust in technology that we barely understand. We fear being left out, so we join the digital crowd. And once inside, we surrender more trust to avoid the anxiety of being disconnected.

When Trust Turns to Betrayal

Of course, trust in technology is not always justified. History is littered with technological betrayals: the Volkswagen emissions scandal, in which software detected laboratory tests and masked real-world pollution levels; Facebook’s role in spreading disinformation; data breaches exposing millions of users’ private information. The betrayal cuts deeper because the bond with technology feels personal.

Psychologists use the term “betrayal trauma” for harm inflicted by someone we depend on. When a trusted entity deceives us, the emotional impact can be more severe than a betrayal by a stranger. Users describe feeling violated when companies misuse their data. It’s not merely a privacy issue; it’s a wound to our sense of safety.

Yet astonishingly, even after these betrayals, our trust often rebounds. We grumble, we rage, but we continue using the same apps and services. Convenience, habit, and social norms pull us back. We forgive machines more easily than people, perhaps because, deep down, we still believe the myth of technology’s objectivity. The machine didn’t intend to harm us, we reason; it was the humans behind it.

This cycle of trust, betrayal, and forgiveness reveals how deeply technology has woven itself into our identities. We cannot imagine life without it, so we keep giving it second chances.

The New Frontier: Emotional AI

As artificial intelligence advances, our trust faces new tests. Machines are learning not only to process data but to simulate human emotion. Chatbots now express sympathy. AI companions offer lonely people virtual relationships. Digital therapists promise to listen without judgment.

Such interactions exploit our deepest vulnerabilities. We want to believe that machines can care, comfort, and understand. For some, AI friends become lifelines in moments of despair. Yet a machine’s “empathy” is an illusion—a clever simulation of compassion without true feeling.

The risk is profound. People might divulge intimate secrets to systems that store, analyze, and monetize their data. Or become emotionally attached to entities that cannot reciprocate genuine love or loyalty.

Still, the allure is strong. The lonely find solace in conversations with chatbots. The anxious find reassurance from AI apps offering meditation and mental health guidance. In these moments, trust in technology becomes almost spiritual—a belief that the machine is a silent, perfect confidant.

The Future of Trust

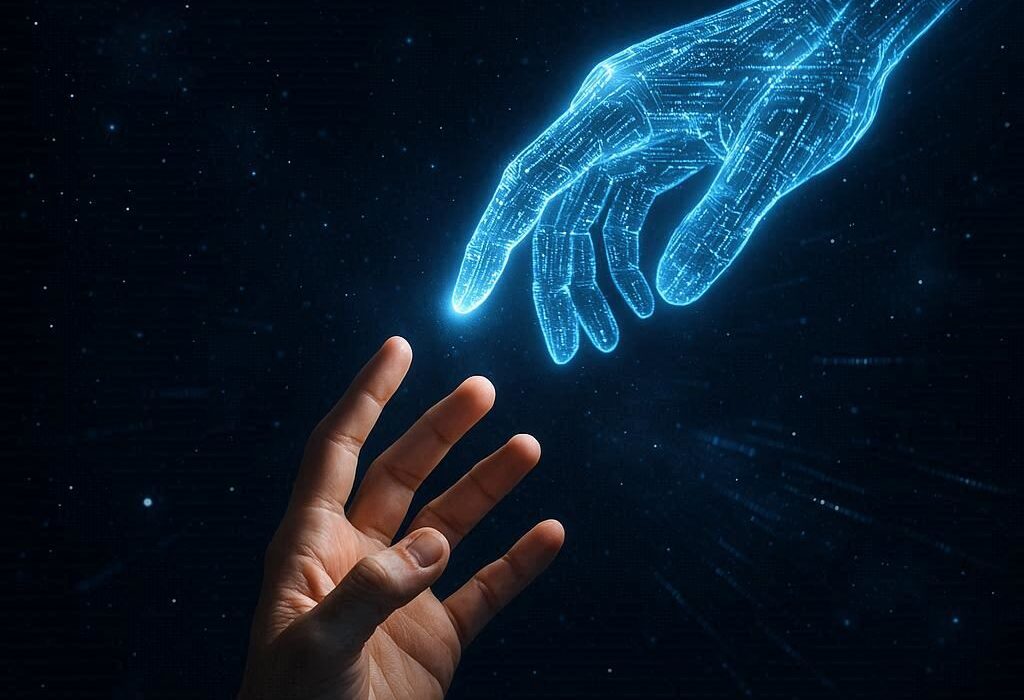

Where do we go from here? Our relationship with technology is only growing more intimate. Implants that monitor health. Cars that drive themselves. AI systems that write stories, compose music, and design buildings. Technology increasingly takes on roles once reserved for humans.

Yet the psychology of trust remains unchanged. We trust technology because it promises control, convenience, and connection. We anthropomorphize it, assign it authority, forgive its betrayals. We hunger for perfection—and we fear being left behind.

But perhaps the ultimate lesson is that trust in technology is not about machines at all. It’s about us. Our hopes, our anxieties, our longing for understanding. Technology reflects back to us our own desires and insecurities. It holds up a mirror to the human condition.

To navigate the future wisely, we must cultivate a new kind of trust—one that blends faith in technological progress with skepticism, curiosity, and ethical awareness. We must remember that behind every glowing screen is a human decision, a line of code written by imperfect minds.

As technology grows more powerful, we face a profound choice. We can surrender blindly, or we can forge a trust rooted in understanding, accountability, and the unshakable dignity of the human spirit.

The Light Within the Machine

In the end, the psychology of why we trust technology reveals something beautiful and fragile about humanity. We trust because we yearn for connection, because we believe in progress, because we want to believe that there is light—even in the heart of a machine.

As we stand on the threshold of a new technological age, perhaps the greatest act of wisdom is to remember this simple truth: The ultimate purpose of technology is not merely to serve us, but to help us become more fully, compassionately human.