When you glance at a busy street corner, you don’t just notice “a car,” “a dog,” or “a person.” Your mind instantly weaves these details into a bigger story: perhaps a man hurrying to work, a child chasing a ball, the faint drizzle on the pavement. This ability to grasp not just individual objects but the entire context—the who, what, where, and why—defines human perception.

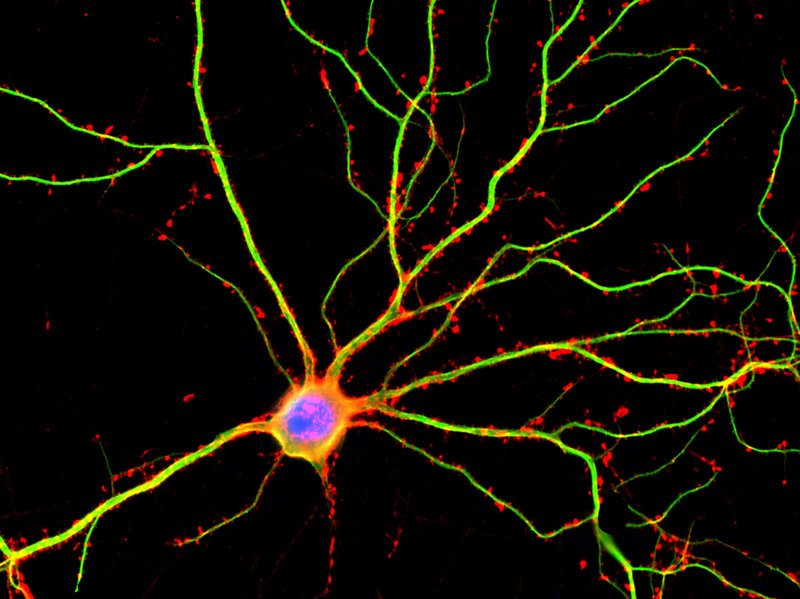

Yet for decades, scientists have struggled to measure this richer, more complex level of understanding in the brain. While researchers could map where we process shapes, colors, and movement, the elusive question remained: how does the brain capture meaning?

Now, a study published in Nature Machine Intelligence points to an answer. Using the same kind of large language models (LLMs) that power AI tools like ChatGPT, researchers have found a way to “fingerprint” the brain’s understanding of complex visual scenes.

Turning Words Into Brainwaves

Led by Ian Charest, an associate professor of psychology at the Université de Montréal and holder of the Courtois Chair in Fundamental Neurosciences, the international team designed a clever experiment. They showed participants a variety of natural scenes—from children playing in a park to sweeping urban skylines—while recording their brain activity in an MRI scanner.

Separately, they fed detailed descriptions of those same scenes into large language models. The models converted each description into a long list of numbers, known as an embedding, which serves as a kind of “language-based fingerprint” of the scene’s meaning.

The results were startling: these AI-generated fingerprints closely mirrored the brain activity patterns of participants viewing the same images.
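For readers who want a feel for what "mirroring" can mean in practice, here is a minimal sketch of one common way to compare two sets of patterns, often called representational similarity analysis. The article does not say which comparison the team used, so treat this as an illustration rather than their method. All data below is synthetic, and names such as `rdm` and `rsa_score` are made up for the example.

```python
import numpy as np

def rdm(patterns):
    """Dissimilarity matrix: 1 minus the Pearson correlation between every pair of rows."""
    return 1.0 - np.corrcoef(patterns)

def upper_triangle(matrix):
    """Return the entries above the diagonal, one per pair of scenes."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]

def rsa_score(embeddings, brain_patterns):
    """Correlate the pairwise dissimilarities of the two representations."""
    a = upper_triangle(rdm(embeddings))
    b = upper_triangle(rdm(brain_patterns))
    return float(np.corrcoef(a, b)[0, 1])

# Synthetic stand-ins (assumptions): one row per scene.
rng = np.random.default_rng(0)
n_scenes, n_dims, n_voxels = 40, 16, 100
embeddings = rng.normal(size=(n_scenes, n_dims))    # pretend LLM fingerprints
mixing = rng.normal(size=(n_dims, n_voxels))
# "Brain" responses built from the embeddings plus noise, so they should align.
brain = embeddings @ mixing + 0.5 * rng.normal(size=(n_scenes, n_voxels))
# Responses with no relation to the embeddings, as a baseline.
unrelated = rng.normal(size=(n_scenes, n_voxels))

aligned_score = rsa_score(embeddings, brain)
baseline_score = rsa_score(embeddings, unrelated)
print(f"aligned: {aligned_score:.2f}, baseline: {baseline_score:.2f}")
```

The idea is that two scenes with similar fingerprints should also evoke similar brain patterns. A high score for the aligned data and a score near zero for the unrelated data is the signature of that match.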

“In essence,” Charest explained, “we could use LLMs to translate what a person was seeing into a sentence. We could also predict how their brain would respond to entirely new scenes—whether of food, faces, or landscapes—by using the representations encoded in the language model.”
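Predicting brain responses to new scenes is typically framed as an "encoding model": learn a mapping from fingerprints to brain activity on some scenes, then test it on scenes the model has never seen. The sketch below uses ridge regression, a standard choice for this kind of problem, though the article does not specify the team's exact approach. The data is synthetic and the helper names `fit_ridge` and `columnwise_correlation` are assumptions for illustration.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: solve (X^T X + alpha I) W = X^T Y for W."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def columnwise_correlation(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    return (A * B).sum(axis=0) / (np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0))

# Synthetic stand-ins (assumptions): rows are scenes, columns are
# embedding dimensions or brain measurement sites ("voxels").
rng = np.random.default_rng(1)
n_train, n_test, n_dims, n_voxels = 200, 50, 16, 30
true_weights = rng.normal(size=(n_dims, n_voxels))
emb_train = rng.normal(size=(n_train, n_dims))
emb_test = rng.normal(size=(n_test, n_dims))
vox_train = emb_train @ true_weights + rng.normal(size=(n_train, n_voxels))
vox_test = emb_test @ true_weights + rng.normal(size=(n_test, n_voxels))

# Fit on the training scenes, then predict responses to held-out scenes.
weights = fit_ridge(emb_train, vox_train, alpha=10.0)
predicted = emb_test @ weights
held_out_r = columnwise_correlation(predicted, vox_test)
print(f"mean held-out correlation: {held_out_r.mean():.2f}")
```

Scoring only on held-out scenes matters here: it checks that the mapping generalizes to new images rather than memorizing the ones used for fitting.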

Beating the Best AI Vision Systems

But the researchers didn’t stop there. They trained artificial neural networks to take in raw images and predict these LLM fingerprints directly. Remarkably, these networks matched human brain responses better than many of the most advanced AI vision models in existence—even though they were trained with far less data.
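To make the training idea concrete, here is a deliberately tiny sketch: a one-hidden-layer network that learns to map "images" to target fingerprints by gradient descent. It is a toy under stated assumptions, not the architecture from the study. The images are random vectors, the targets are generated by a made-up `teacher` mapping, and the layer sizes and learning rate are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(2)
n_images, n_pixels, n_hidden, n_dims = 256, 64, 32, 8

# Toy stand-ins (assumptions): flattened "images" and the fingerprints to predict.
images = rng.normal(size=(n_images, n_pixels))
teacher = rng.normal(size=(n_pixels, n_dims)) / np.sqrt(n_pixels)
targets = np.tanh(images @ teacher)

# Randomly initialized weights of a one-hidden-layer network.
W1 = rng.normal(size=(n_pixels, n_hidden)) / np.sqrt(n_pixels)
W2 = rng.normal(size=(n_hidden, n_dims)) / np.sqrt(n_hidden)

def forward(x, w1, w2):
    """Return the hidden activations and the predicted fingerprints."""
    hidden = np.tanh(x @ w1)
    return hidden, hidden @ w2

def mse(pred, target):
    """Mean squared error over all entries."""
    return float(np.mean((pred - target) ** 2))

initial_loss = mse(forward(images, W1, W2)[1], targets)

learning_rate = 0.2
for step in range(500):
    hidden, pred = forward(images, W1, W2)
    grad_pred = 2.0 * (pred - targets) / pred.size        # gradient of the MSE
    grad_W2 = hidden.T @ grad_pred
    grad_hidden = grad_pred @ W2.T
    grad_W1 = images.T @ (grad_hidden * (1.0 - hidden ** 2))  # tanh derivative
    W1 = W1 - learning_rate * grad_W1
    W2 = W2 - learning_rate * grad_W2

final_loss = mse(forward(images, W1, W2)[1], targets)
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

The key design point carried over from the article is the training target: instead of learning category labels, the network is asked to reproduce a language model's description of the scene.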

This leap forward was supported by the expertise of Tim Kietzmann, a machine-learning professor at the University of Osnabrück, whose team helped design the neural networks. The study’s first author, Adrien Doerig of Freie Universität Berlin, coordinated the multi-institution collaboration, which also included scientists from the University of Minnesota.

A Glimpse Into the Brain’s Code

What’s most striking about the findings is what they imply about the brain itself. According to Charest, the results suggest that the human brain may represent complex scenes in ways surprisingly similar to how modern language models process text.

This connection between vision and language could transform both neuroscience and artificial intelligence. “If we can model the brain’s way of encoding meaning,” Charest said, “we open the door to decoding thoughts more accurately, building smarter AI systems, and even designing brain-computer interfaces that ‘speak’ the brain’s native language.”

From Science to Real-World Impact

The implications are far-reaching. Self-driving cars could one day “see” the world in a more human-like way, making better, safer decisions in unpredictable situations. Medical technologies could develop visual prostheses that tap directly into the brain’s understanding of the world, offering life-changing benefits for people with severe vision loss.

And for neuroscientists, this work is a step toward one of the field’s grandest ambitions: a complete map of how the brain turns raw sensory input into meaning.

“This is just the beginning,” Charest emphasized. “Understanding how our brain perceives meaning from vision doesn’t just teach us about ourselves—it could guide the next generation of AI to think, interpret, and decide more like we do.”

More information: Adrien Doerig et al., High-level visual representations in the human brain are aligned with large language models, Nature Machine Intelligence (2025). DOI: 10.1038/s42256-025-01072-0