Combining artificial intelligence with brain science, researchers at the University of Toronto Scarborough have uncovered new insights into a long-studied puzzle in human perception: the Other-Race Effect (ORE). This effect, in which people recognize faces of their own race better than faces of other races, has been studied for decades. Now, using EEG brainwave analysis and AI-generated visualizations, scientists are showing how early in perceptual processing this bias takes hold.

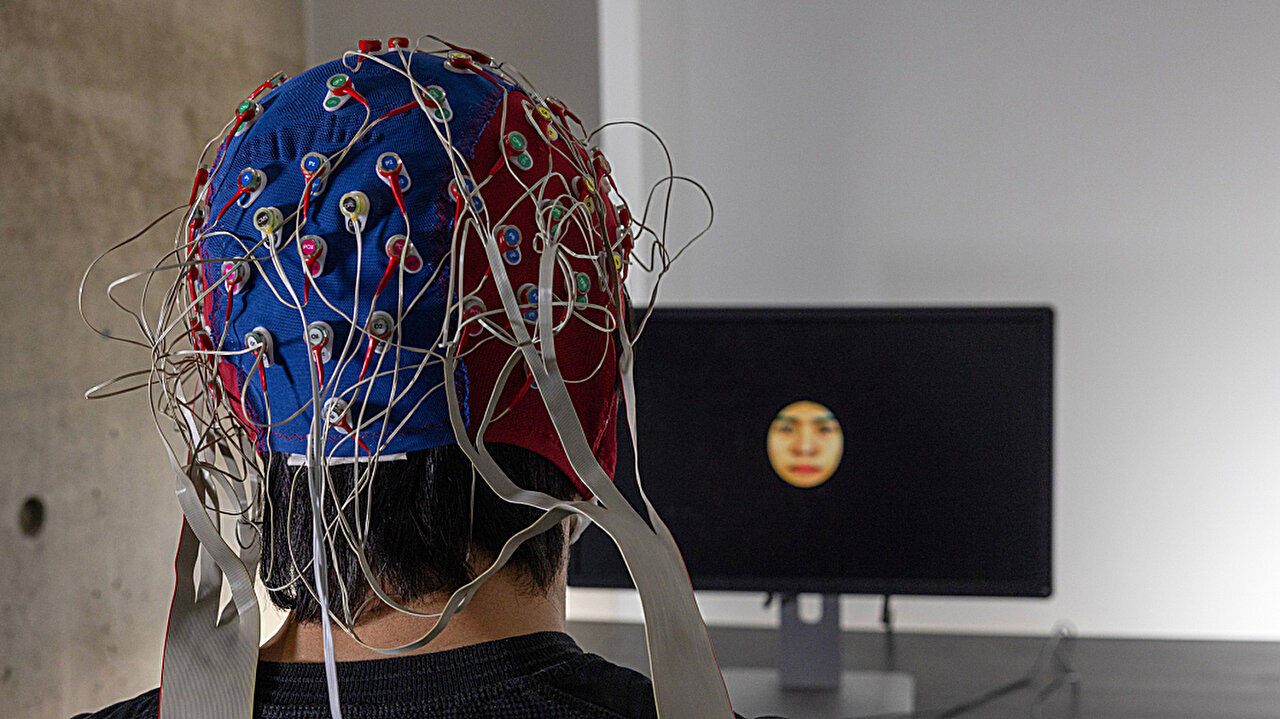

At the center of this innovative research is Adrian Nestor, an associate professor in the Department of Psychology, and his team. Using a combination of generative adversarial networks (GANs) and electroencephalography (EEG), they have delved into how our brains “see” and interpret faces. The findings suggest that the bias in face recognition isn’t just a result of social conditioning or lack of exposure—it is rooted in how our brain constructs and differentiates facial features at an unconscious level, within milliseconds of visual contact.

The Invisible Bias Behind the Eyes

It’s long been known that people have a harder time distinguishing individual features of faces from other racial groups, a psychological effect often linked to societal bias or cultural exposure. But Nestor’s team sought to ask a deeper question: What is the brain actually seeing—or failing to see—when it processes other-race faces?

In one of the studies, published in Behavior Research Methods, two groups of participants, one East Asian and one white, were shown a series of faces on a screen and asked to rate how similar the faces looked to one another. The researchers then used generative AI to visualize the participants’ internal perceptions: with a GAN trained on thousands of face images, they reconstructed what the participants “saw” in their minds.

The result? Faces from the same racial group were reconstructed with more accuracy, detail, and individuality. Other-race faces, on the other hand, were seen as more “average,” blending together and lacking distinctive detail. Even more intriguing was the consistent perception of other-race faces as appearing younger and more emotionally expressive, even when the original faces showed no such cues. This was no longer just a matter of visual unfamiliarity; it was a systematic generalization in how those faces were represented.
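To make the idea of faces looking more “average” concrete, here is a minimal sketch. It is not the researchers’ GAN pipeline: it assumes the similarity ratings have already been converted into a dissimilarity matrix, embeds the faces in a two-dimensional “face space” with classical multidimensional scaling, and measures how far each group’s faces sit from their own group center. The toy data, the group sizes, and the function names are all hypothetical.

```python
import numpy as np


def classical_mds(dissimilarity, n_dims=2):
    """Place items in n_dims so Euclidean distances approximate the dissimilarities."""
    d2 = np.asarray(dissimilarity, dtype=float) ** 2
    n = d2.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ d2 @ centering          # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(gram)           # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:n_dims]          # keep the largest n_dims
    return eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0.0, None))


def mean_spread(coords):
    """Average distance of each face from the centroid of its group."""
    return float(np.linalg.norm(coords - coords.mean(axis=0), axis=1).mean())


# Hypothetical toy data (not from the study): six same-race faces spread widely,
# six other-race faces packed tightly around their own center.
angles = np.arange(6) * np.pi / 3
ring = np.column_stack([np.cos(angles), np.sin(angles)])
same_race = 2.0 * ring
other_race = 0.5 * ring + np.array([5.0, 0.0])
faces = np.vstack([same_race, other_race])

# Stand-in for rated dissimilarity: plain distance between the toy faces.
dissimilarity = np.linalg.norm(faces[:, None] - faces[None, :], axis=-1)

space = classical_mds(dissimilarity)
same_spread = mean_spread(space[:6])
other_spread = mean_spread(space[6:])
print(f"same-race spread: {same_spread:.2f}, other-race spread: {other_spread:.2f}")
```

In this toy setup the other-race group has the smaller spread, which is one simple way to express faces “blending together” as a number. Real ratings would be noisier and would need more dimensions.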

Reading the Mind Through Millisecond Brainwaves

In a second study, published in Frontiers in Human Neuroscience, the researchers took things further. By analyzing EEG data, the electrical signals produced by the brain, they attempted what might be described as a limited form of mind-reading. What they found was equally revealing.

“When it comes to other-race faces, the brain responses were less distinct,” explains Moaz Shoura, a Ph.D. student in Nestor’s lab and co-author of the studies. “This suggests that these faces are processed more generally and with less detail.” The implications are clear: our brains seem to compress other-race faces into a less nuanced category, making subtle features harder to detect, and leading to poorer recognition.

Crucially, this generalized processing happens very quickly, within the first 600 milliseconds of seeing a face. The brain is not waiting for conscious reflection; it is making snap judgments based on ingrained perceptual models.

The EEG data revealed that the differentiation in brain activity was markedly stronger when participants viewed same-race faces. Neural patterns associated with detail recognition and emotional parsing were sharper and more distinct. In contrast, when observing other-race faces, those neural signatures were flatter, more generic, and less finely tuned.
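One way to picture what “less distinct” brain responses could mean is to compare the response patterns evoked by different faces and ask how much they differ from one another. The sketch below is an illustration under stated assumptions, not the analysis from the paper. It simulates response patterns as a shared component plus a face-specific component, then scores distinctness as the mean correlation distance between every pair of faces. The feature count, the amplitudes, and the function names are hypothetical.

```python
import numpy as np


def mean_pairwise_distinctness(patterns):
    """Mean correlation distance (1 - Pearson r) over all pairs of face patterns.

    patterns has shape (n_faces, n_features), one flattened response per face.
    """
    corr = np.corrcoef(patterns)
    upper = np.triu_indices(patterns.shape[0], k=1)   # each pair counted once
    return float((1.0 - corr[upper]).mean())


def simulate_patterns(rng, n_faces, n_features, face_specific_amplitude):
    """Synthetic responses: a component shared by all faces plus a per-face component."""
    shared = rng.normal(size=n_features)
    specific = rng.normal(size=(n_faces, n_features))
    return shared + face_specific_amplitude * specific


rng = np.random.default_rng(0)
n_features = 64 * 50  # hypothetical: 64 channels x 50 time points, flattened

# Assumption for illustration only: same-race faces carry a stronger
# face-specific signal than other-race faces.
same_race = simulate_patterns(rng, n_faces=10, n_features=n_features,
                              face_specific_amplitude=1.0)
other_race = simulate_patterns(rng, n_faces=10, n_features=n_features,
                               face_specific_amplitude=0.2)

same_distinct = mean_pairwise_distinctness(same_race)
other_distinct = mean_pairwise_distinctness(other_race)
print(f"same-race distinctness:  {same_distinct:.3f}")
print(f"other-race distinctness: {other_distinct:.3f}")
```

A lower score means the patterns for different faces look more alike, the kind of flatter, more generic signature described above. The difference here exists only because it was built into the simulated data.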

Why It Matters: From Eyewitness Testimony to AI Ethics

These findings may sound esoteric, but they have direct real-world relevance. They touch on criminal justice, job interviews, mental health, and machine learning ethics.

For instance, if human brains tend to flatten and homogenize other-race faces, then eyewitness testimony involving individuals of different races may be more prone to error than previously believed. Such testimony has already been under scrutiny for its fallibility—now neuroscience adds a stark layer of caution.

Similarly, facial recognition software—often trained on biased data sets—could benefit enormously from understanding these perceptual distortions. By incorporating insights from how human brains perceive facial identity and emotion across races, developers might build more accurate and fair recognition systems.

“This could open up possibilities for improving everything from forensic analysis to social AI applications,” says Nestor. “It’s also important for understanding how bias actually forms at a neural level.”

There are also implications for mental health. The ability to read and interpret emotional expressions accurately is a core feature of social cognition. In conditions such as schizophrenia or borderline personality disorder, misperceptions of others’ emotions can create significant challenges. By revealing how perceptual distortions arise in typical brains, the research could pave the way for better diagnostic tools and even treatments.

Nestor elaborates: “If we can see exactly what’s going on in a person’s mind when they’re misinterpreting emotional cues—like perceiving happiness as contempt or failing to see disgust—we can design more effective interventions.”

Unraveling Bias at the Neural Level

What’s particularly compelling about this research is how it bridges hard neuroscience with social psychology. Bias, typically discussed in terms of education, culture, or ideology, is here revealed to be partly a perceptual issue—deeply embedded in how our brains are wired to make sense of visual stimuli.

It’s not that people consciously choose to see other-race faces as more alike or more average; it’s that their neural circuits aren’t attuned to the unique details in those faces. And unless this is explicitly addressed—through exposure, training, or neurocognitive intervention—the distortion persists.

This raises important questions about how we can train our brains to overcome such biases. Is it possible, through deliberate practice or immersive experiences, to enhance the brain’s sensitivity to other-race facial features? Can VR simulations, for example, help rewire neural pathways to foster more accurate cross-race perception? These are the kinds of questions that Nestor’s lab hopes to explore in the future.

From Face to Face: Social Encounters and First Impressions

Imagine the typical job interview scenario. Two candidates walk in, one of the same racial background as the interviewer, the other from a different one. Even if both candidates smile, speak, and behave identically, the interviewer’s brain might still perceive the latter’s facial expressions as less distinct or emotionally legible. This isn’t necessarily racism in the overt sense—but it is a perceptual bias that could subtly shape decisions.

“If we can better understand how the brain processes faces, we can develop strategies to reduce the impact bias can have when we first meet face-to-face with someone from another race,” says Shoura.

Such strategies could include diversity training rooted not only in ethics and empathy but also in perceptual neuroscience. Rather than merely telling people to “try harder” to be fair, this approach would work on the actual brain patterns that mediate how we see and respond to others.

The Future of Face Perception Research

As AI becomes increasingly integrated into our daily lives through facial recognition systems, emotion-detecting interfaces, and social robots, accurate and unbiased face perception matters more and more. The University of Toronto Scarborough team’s research provides a blueprint for how we can merge neuroscience and AI to decode the mysteries of human perception and, in doing so, make our technologies and ourselves more just.

But perhaps the most enduring legacy of this work will be its challenge to how we think about thinking. It’s one thing to say we’re all biased. It’s another to see that bias show up in an EEG recording or take shape in a reconstructed image built from our own neural data.

In revealing these inner workings, Nestor and his team are giving us something more than scientific insight. They’re handing us a mirror—not of our faces, but of our minds—and asking us to take a closer look.

References:

Moaz Shoura et al., “Unraveling other-race face perception with GAN-based image reconstruction,” Behavior Research Methods (2025). DOI: 10.3758/s13428-025-02636-z

Moaz Shoura et al., “Revealing the neural representations underlying other-race face perception,” Frontiers in Human Neuroscience (2025). DOI: 10.3389/fnhum.2025.1543840