For decades, one of humanity’s greatest aspirations in technology has been to teach machines how to see the world as we do. Our eyes and brains interpret the smallest details with breathtaking efficiency—tracking a bird in flight, reading the faintest letters on a page, or instantly recognizing a friend’s face in a crowd. Replicating even a fraction of that ability in machines has been an extraordinary challenge.

Yet, little by little, scientists have been getting closer. Advances in sensors and machine learning have already given machines the ability to inspect electronics on assembly lines, sort produce at lightning speed, and even assist doctors in identifying early signs of disease. This field, known as machine vision, has grown into a cornerstone of modern industry. But until now, there has always been a gap: machines don’t quite see like us.

That gap may finally be narrowing, thanks to a groundbreaking retina-inspired device developed by researchers in China and Denmark. Their new creation doesn’t just mimic the human eye in theory—it replicates its structure in practice. And in doing so, it could redefine the future of machine vision.

Why Machine Vision Matters

Before diving into the innovation, it’s worth remembering just how transformative machine vision already is. Picture a factory floor: rows of bottled drinks pass down a conveyor belt at dizzying speeds. Instead of relying on human inspectors, a vision system equipped with high-speed cameras and algorithms can detect a single mislabeled bottle in a sea of thousands.

In the automotive industry, similar systems check for tiny defects in engine parts, imperfections invisible to the naked eye but critical for safety. In electronics, they verify the placement of minuscule components with near-perfect precision. Even in food production, machine vision ensures that bruised apples or misshapen candies never reach store shelves.

But here’s the catch: the eyes of these machines—the sensors themselves—remain limited. They capture images in ways that are fundamentally different from how our retinas process light. And those differences impose strict boundaries on what machine vision can achieve.

The Shortcomings of Current Sensors

Most current systems rely on either frame-based or event-based sensors. Frame-based sensors work like a traditional camera, capturing snapshots of the world at regular intervals—30, 60, or even hundreds of frames per second. Event-based sensors take a different approach: instead of recording complete images, they track changes in brightness at individual pixels.
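To make the contrast concrete, here is a minimal Python sketch of the two readout styles. It is a toy model, not the behavior of any particular camera: the function names, the 2x2 scene, and the 0.2 log-brightness threshold are all assumptions chosen for illustration.

```python
import math

def frame_based_readout(frames):
    """Return every pixel of every frame, as a conventional camera would."""
    return [(t, y, x, value)
            for t, frame in enumerate(frames)
            for y, row in enumerate(frame)
            for x, value in enumerate(row)]

def event_based_readout(frames, threshold=0.2):
    """Emit (t, y, x, polarity) only where log-brightness changes by more than threshold."""
    # Reference log-brightness per pixel, initialised from the first frame.
    reference = [[math.log(v) for v in row] for row in frames[0]]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = math.log(value) - reference[y][x]
                if abs(delta) > threshold:
                    events.append((t, y, x, 1 if delta > 0 else -1))
                    reference[y][x] = math.log(value)  # reset reference after firing
    return events

# Hypothetical static 2x2 scene in which one pixel brightens at t=2.
frames = [
    [[10.0, 10.0], [10.0, 10.0]],
    [[10.0, 10.0], [10.0, 10.0]],
    [[10.0, 20.0], [10.0, 10.0]],
]
samples = frame_based_readout(frames)
events = event_based_readout(frames)
print(len(samples), "frame samples;", len(events), "event(s):", events)
```

In this toy scene the frame-based readout reports all 12 pixel values, while the event-based readout reports a single event for the one pixel that changed.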

Each method has its strengths, but both fall short of the human retina. Our eyes don’t see the world in rigid frames, nor do they simply react to pixel-level brightness changes. Instead, the retina is a marvel of layered processing, adapting instantly to light, filtering noise, and sending rich streams of information to the brain in real time.

As a result, existing machine vision systems can struggle in challenging environments: glare from bright sunlight, shadows in dim warehouses, or rapidly shifting scenes. The sensors may record too much redundant data, miss subtle changes, or fail to adapt quickly.

What’s been missing is a sensor that doesn’t just take pictures but actually sees—adapting, filtering, and refining signals much like our own eyes do.

Learning From the Retina

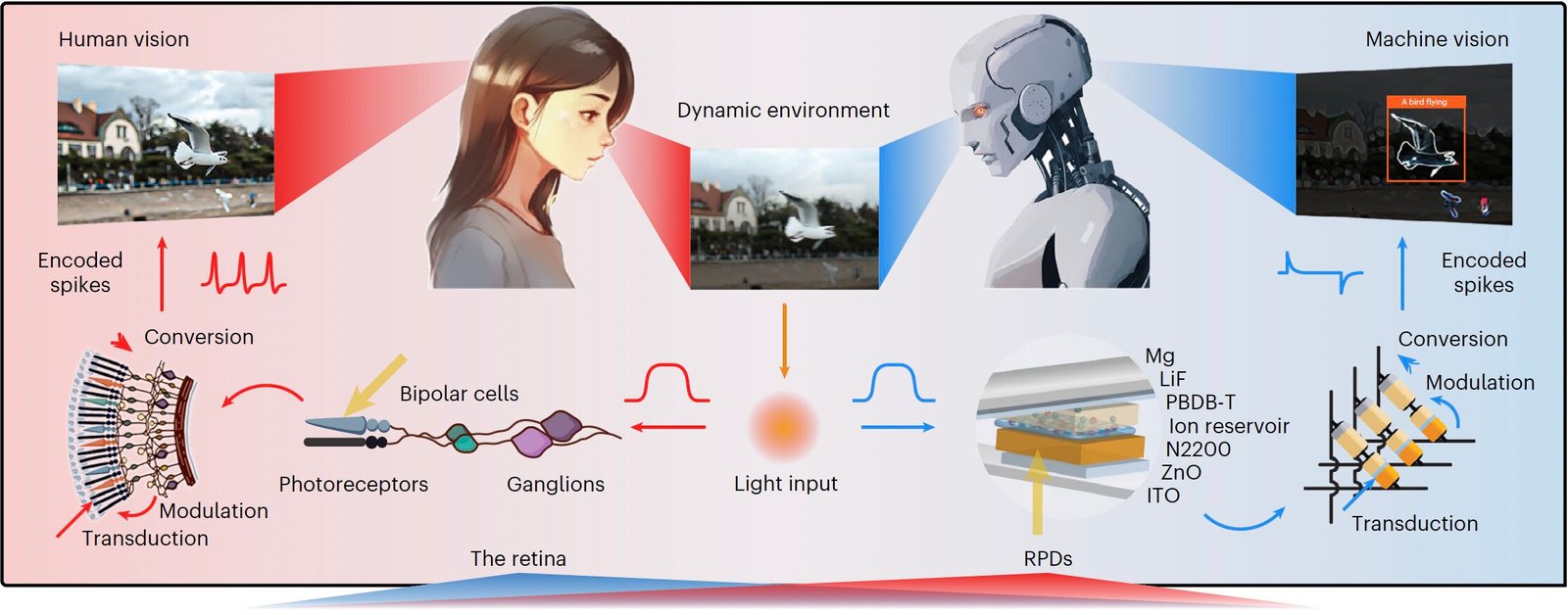

Enter the event-driven retinomorphic photodiode (RPD), developed by researchers at the Chinese Academy of Sciences, the Sino-Danish Center for Education and Research, and other collaborating institutes.

The device’s central idea is to mimic the retina’s layered structure. By engineering the sensor at the nanoscale, the team recreated in hardware how the retina separates, filters, and transmits light signals.

At the heart of this design are three key components:

- An organic donor–acceptor heterojunction, where absorbed light is converted into separated electrical charges that can be transferred efficiently, much like the first stage of retinal processing.

- An ion reservoir made of porous nanostructures, which acts like a sponge for storing and releasing ions, echoing how biological tissues carry signals.

- A Schottky junction, an interface between a metal and semiconductor, which ensures signals flow smoothly in one direction—similar to how neurons pass information forward.

Together, these layers don’t just record images. They interact dynamically, adapting to their environment the way human retinas adjust to shifting light and contrast.
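The idea of a sensor that adapts rather than simply records can be illustrated with a toy calculation. The sketch below is not a model of the RPD’s physics. It assumes a hypothetical pixel whose output is the incoming light level minus a baseline that drifts toward that level, so a sudden change produces a strong response that fades as the pixel adapts. The function name and the `rate` parameter are made up for this example.

```python
def adaptive_response(intensities, rate=0.5):
    """Toy adapting pixel: output is the input minus a slowly updated baseline."""
    baseline = intensities[0]
    outputs = []
    for value in intensities:
        outputs.append(value - baseline)
        baseline += rate * (value - baseline)  # baseline drifts toward current light level
    return outputs

# Hypothetical light level: steady at 1.0, then a sudden step up to 5.0.
signal = [1.0] * 3 + [5.0] * 5
response = adaptive_response(signal)
print(response)
```

The response stays at zero while the light is steady, jumps when the light steps up, and then decays as the baseline catches up. That is the general flavor of behavior the article describes: strong signals for change, little output for what stays the same.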

What Makes This Sensor Different

The reported results are striking. In early tests, the RPD achieved a dynamic range exceeding 200 decibels, far beyond the capabilities of most traditional sensors. Dynamic range describes the span between the dimmest and brightest light a sensor can register, so a wider range means the device can handle extreme lighting conditions, from glaring sunlight to near-total darkness, without being overwhelmed or losing detail.
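To get a feel for what a figure like 200 decibels means, the short Python sketch below converts decibels into a brightest-to-dimmest ratio. It assumes the 20·log10 convention commonly used for image-sensor dynamic range. The article does not say which convention the researchers used, and the 60 dB comparison value is purely illustrative, not a figure from the study.

```python
import math

def db_to_ratio(db, factor=20.0):
    """Convert decibels to a linear ratio (factor=20 is an assumed convention)."""
    return 10 ** (db / factor)

def ratio_to_db(ratio, factor=20.0):
    """Convert a linear ratio back to decibels under the same assumed convention."""
    return factor * math.log10(ratio)

rpd_ratio = db_to_ratio(200)         # figure quoted in the article
comparison_ratio = db_to_ratio(60)   # hypothetical comparison value
print(f"200 dB -> {rpd_ratio:.0e} : 1")
print(f" 60 dB -> {comparison_ratio:.0e} : 1")
```

Under that assumption, 200 dB corresponds to a ratio of about ten billion to one between the brightest and dimmest signals the sensor can distinguish, compared with a thousand to one for the illustrative 60 dB case.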

It also significantly reduces noise and redundant data, a problem that plagues conventional systems. Instead of flooding computer processors with unnecessary information, the RPD delivers cleaner, more meaningful signals. That efficiency could prove vital in industries where speed and accuracy are paramount.

Imagine a self-driving car equipped with this kind of vision. Instead of being blinded by oncoming headlights or struggling to distinguish shadows at night, it could interpret the road with near-human adaptability. Or picture a medical imaging system that spots anomalies in tissue with sharper clarity, helping doctors detect diseases earlier and more reliably.

Beyond the Factory Floor

The immediate applications for this technology are clear: smarter, faster, more reliable quality control in manufacturing, from food and beverages to electronics and automobiles. But the implications stretch far beyond assembly lines.

In robotics, such sensors could give machines the visual agility to navigate complex environments without stumbling over changes in lighting. In security, cameras equipped with RPDs could operate effectively in conditions that currently defeat conventional systems, such as dimly lit streets or areas of high glare. In space exploration, they could help rovers and probes adapt to the extreme lighting of alien worlds.

Even consumer devices could benefit. Cameras in smartphones, for example, might one day match the versatility of the human eye, capturing crisp, detailed images in virtually any condition.

A Glimpse of the Future

Of course, challenges remain. The RPD is still in its early stages, and scaling it up for mass production will require significant work. Researchers will need to refine its design, ensure long-term stability, and integrate it into existing systems. But the promise is undeniable.

As lead researchers Qijie Lin and Congqi Li put it, this work represents a “bottom-up approach to retinomorphic sensors.” By building the sensor’s architecture from the ground up to resemble biology, rather than tweaking existing designs, the team has opened the door to an entirely new class of machine vision.

It’s a reminder that some of the best ideas in technology come not from replacing nature but from learning from it. The human eye remains one of evolution’s greatest triumphs. And now, with tools like the RPD, machines may begin to share in that gift.

Seeing Machines, Seeing Ourselves

In the end, the story of machine vision is not just about machines. It’s about us—our relentless drive to understand and replicate the extraordinary abilities of life itself. Every time we succeed, we gain not only a new technology but also a deeper appreciation for the natural marvels we are trying to emulate.

With retina-inspired sensors, we may be witnessing the beginning of a new era where machines don’t just watch the world, but truly see it. And in that vision, perhaps, lies the next great leap for industry, medicine, and even our daily lives.

More information: Qijie Lin et al., Event-driven retinomorphic photodiode with bio-plausible temporal dynamics, Nature Nanotechnology (2025). DOI: 10.1038/s41565-025-01973-6