For more than half a century, the progress of computing technology has been driven by a remarkable observation known as Moore’s Law. Named after Gordon E. Moore, co-founder of Intel Corporation, this principle has shaped the modern digital age, influencing everything from the design of microprocessors to the pace of technological innovation itself. Moore’s Law is not merely a technical forecast; it has served as a guiding target that propelled the exponential growth of computational power, reduced the cost of electronics, and revolutionized industries across the globe. Understanding why computers keep getting faster and what Moore’s Law really means requires a journey through the history of semiconductor development, the physics of transistors, the economics of innovation, and the emerging challenges that threaten to end this long-standing trend.

The Origin of Moore’s Law

Moore’s Law began not as a law of nature but as an empirical observation. In 1965, Gordon Moore published a seminal article in Electronics magazine titled “Cramming More Components onto Integrated Circuits.” At that time, Moore was working at Fairchild Semiconductor, one of the pioneering companies in silicon-based electronics. He observed that the number of components on an integrated circuit had been doubling approximately every year since the first integrated circuits appeared around the end of the 1950s. Based on this pattern, he predicted that this exponential growth would continue for at least another decade.

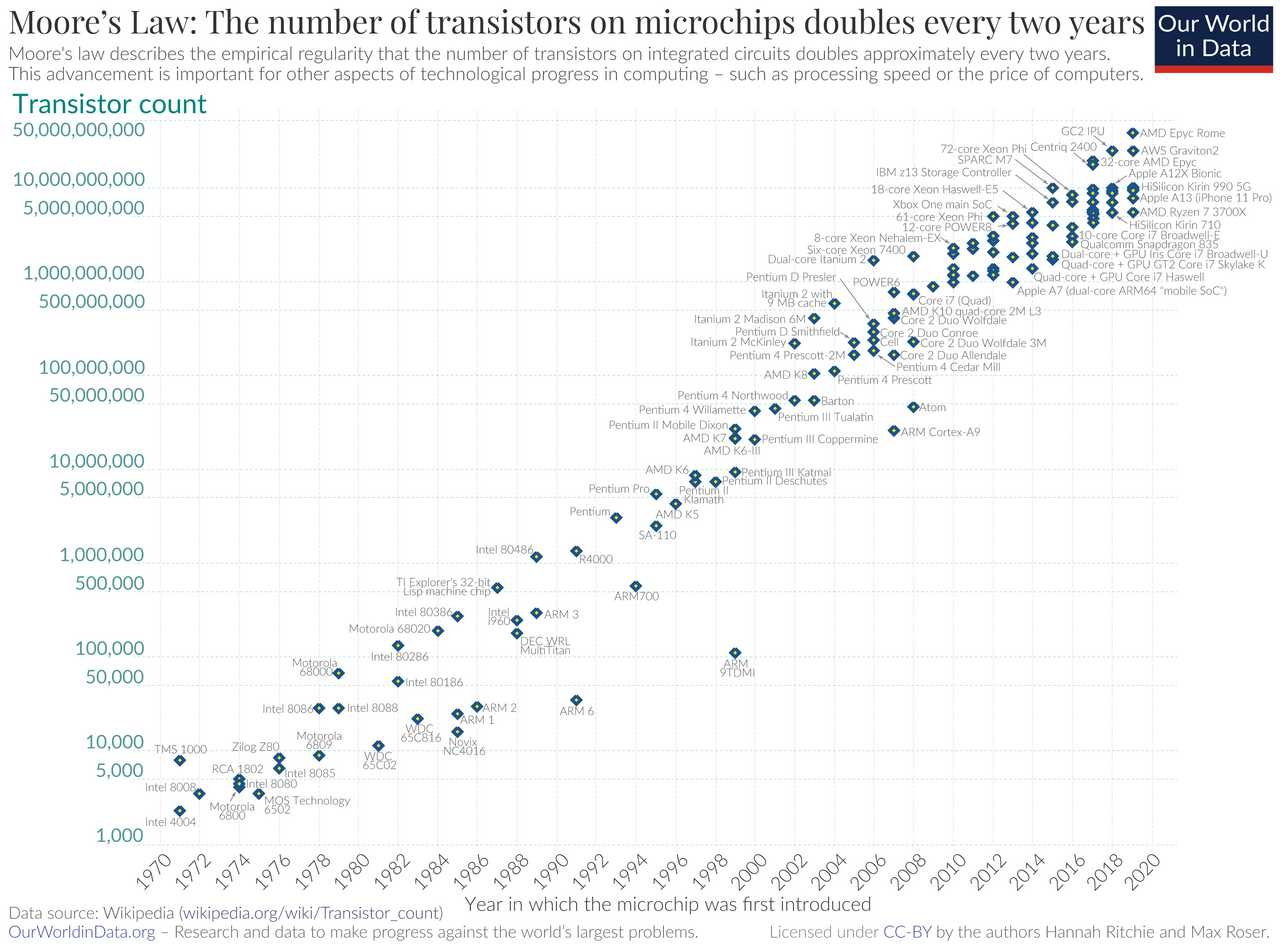

In 1975, Moore revisited his prediction and revised the doubling period to roughly every two years. This updated version became the standard interpretation of Moore’s Law: the number of transistors on a microchip doubles approximately every two years, with a corresponding decrease in cost per transistor. The often-quoted figure of 18 months is usually attributed to Intel executive David House and refers to chip performance rather than transistor count. Although this was not a physical law, its accuracy and persistence over decades made it one of the most powerful self-fulfilling prophecies in technological history.
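The difference between these doubling periods compounds quickly. The following minimal sketch compares a decade of growth under each commonly quoted period; the function name `growth_factor` is purely illustrative.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return the multiplicative growth after `years` of steady doubling."""
    return 2.0 ** (years / doubling_period_years)


# Compare one decade of growth under three commonly quoted doubling periods.
for period in (1.0, 1.5, 2.0):
    factor = growth_factor(10, period)
    print(f"doubling every {period} years -> about x{factor:,.0f} per decade")
```

Doubling every year gives a 1,024-fold increase in ten years, while doubling every two years gives a 32-fold increase, which is why the 1975 revision mattered so much for long-range forecasts.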

Moore’s observation was based on several intertwined trends. Improvements in photolithography, the process of patterning circuit features onto silicon wafers, allowed engineers to pack more transistors into the same area. Simultaneously, advances in materials science, process engineering, and design automation made it possible to shrink transistor sizes without sacrificing performance or reliability. The semiconductor industry’s collective adherence to Moore’s Law became a roadmap for progress, with companies planning their research and development cycles around this predictable rate of improvement.

The Transistor: The Building Block of Moore’s Law

To understand why Moore’s Law matters, one must first understand the transistor—the fundamental unit of modern electronics. A transistor acts as a switch or amplifier that controls the flow of electric current. It can be turned on or off, representing the binary states 1 and 0 that form the basis of digital computation. When millions or billions of these transistors are combined on a single chip, they perform logical operations, store data, and process information at incredible speeds.
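To make the idea of switches performing logic concrete, here is a small sketch that models a single gate type (NAND) as a pair of switches and builds other gates from it. The function names are illustrative, and real circuits involve electrical details that this truth-table model ignores.

```python
def nand(a: int, b: int) -> int:
    """Output is 0 only when both input switches are on."""
    return 0 if (a and b) else 1


def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def xor_(a: int, b: int) -> int:
    # Classic four-NAND construction of exclusive OR.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two one-bit numbers, returning (sum_bit, carry_bit)."""
    return xor_(a, b), and_(a, b)


for a in (0, 1):
    for b in (0, 1):
        print(a, "+", b, "->", half_adder(a, b))
```

Chaining adders like this one, bit by bit, is one way arithmetic on larger numbers can be assembled from nothing but switches.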

The first transistor, a point-contact device demonstrated in 1947 at Bell Labs by John Bardeen and Walter Brattain in a group led by William Shockley, was made of germanium and was much larger than those used today. Silicon later displaced germanium due to its superior thermal and electrical properties. The invention of the integrated circuit by Jack Kilby and Robert Noyce in the late 1950s allowed multiple transistors to be fabricated together on a single piece of semiconductor.

As engineers learned to make transistors smaller, they discovered that not only could more of them fit on a chip, but they could also operate faster and consume less power. This phenomenon is a key reason behind the exponential growth predicted by Moore’s Law. Shrinking transistor size reduces the distance electrons must travel, thus increasing speed and reducing energy loss. However, miniaturization also introduces new challenges, such as heat dissipation, quantum tunneling, and manufacturing complexity, which have become increasingly difficult to manage as transistor dimensions approach the atomic scale.

The Era of Exponential Growth

The period from the 1960s through the early 2000s is often regarded as the golden age of Moore’s Law. During these decades, the semiconductor industry achieved a consistent and predictable rate of improvement that transformed computing power at an exponential pace. The transistor count on commercial microprocessors grew from a few thousand in the early 1970s to billions by the 2010s.

Intel’s early processors illustrate this dramatic progression. The Intel 4004, released in 1971, contained 2,300 transistors and operated at a clock speed of 740 kilohertz. Seven years later, in 1978, the Intel 8086 contained 29,000 transistors and ran at 5 megahertz. By 1993, the Intel Pentium featured 3.1 million transistors, and by 2019, high-end processors such as the Intel Core i9 were estimated to contain several billion transistors. Over the same period, computing performance increased by a factor of millions while costs plummeted.
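As a quick sanity check, the Intel figures quoted above (2,300 transistors in 1971 and 3.1 million in 1993) can be turned into an implied doubling period. This is a back-of-the-envelope sketch with an illustrative function name, not a fit to the full historical record.

```python
import math


def implied_doubling_period(count_start: float, year_start: int,
                            count_end: float, year_end: int) -> float:
    """Years per doubling implied by two (year, transistor count) points."""
    doublings = math.log2(count_end / count_start)
    return (year_end - year_start) / doublings


# Intel 4004 (1971) to Intel Pentium (1993), using the counts quoted in the text.
period = implied_doubling_period(2_300, 1971, 3_100_000, 1993)
print(f"implied doubling period: {period:.2f} years")
```

The result comes out slightly above two years, in line with the revised form of Moore’s Law.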

This exponential scaling was supported by advances in photolithography, where ever-smaller patterns could be printed onto silicon wafers using shorter wavelengths of light. The transition from ultraviolet to deep ultraviolet (DUV) and, more recently, to extreme ultraviolet (EUV) lithography has allowed manufacturers to continue shrinking features into process nodes labeled below 10 nanometers. Other process improvements, such as chemical vapor deposition, ion implantation, and chemical-mechanical polishing, contributed to the steady miniaturization and performance enhancement of integrated circuits.

The Economics Behind Moore’s Law

While Moore’s Law describes a technological trend, it also encapsulates an economic principle. The semiconductor industry has operated under a strong feedback loop between innovation, cost reduction, and market expansion. As transistor density doubled, the cost per transistor decreased significantly. This made computing more affordable, which in turn spurred demand for faster, cheaper, and more capable devices—from personal computers to smartphones to data centers.

A companion observation, Rock’s Law (sometimes called Moore’s second law), holds that the cost of building a semiconductor fabrication plant doubles approximately every four years. Even as the cost of a leading-edge plant has soared into the tens of billions of dollars, the per-transistor cost has historically continued to fall due to improved yields and higher production volumes. The result has been a virtuous cycle: cheaper transistors enable more powerful devices, which create new applications and markets, which in turn justify the investment in further scaling.
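The apparent paradox of rising plant costs and falling transistor costs can be illustrated with a toy model. It assumes, purely for illustration, that plant cost doubles every four years while the number of transistors a plant produces doubles every two years; real economics also depend on yield, volume, and depreciation.

```python
def relative_cost_per_transistor(years: float,
                                 fab_cost_doubling: float = 4.0,
                                 output_doubling: float = 2.0) -> float:
    """Cost per transistor relative to year 0 under two steady doubling rates.

    Both doubling periods are illustrative assumptions, not measured data.
    """
    fab_cost = 2.0 ** (years / fab_cost_doubling)
    transistors_produced = 2.0 ** (years / output_doubling)
    return fab_cost / transistors_produced


for years in (0, 4, 8, 12):
    print(years, "years ->", relative_cost_per_transistor(years))
```

Under these assumptions the plant becomes four times more expensive after eight years, yet each transistor costs a quarter of what it did, because output grows faster than cost.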

This interplay between economics and technology has made Moore’s Law both a prediction and a roadmap. Semiconductor companies, consortia, and research institutions have used it as a target for long-term planning. For years, the International Technology Roadmap for Semiconductors (ITRS) coordinated global efforts to sustain the pace of innovation predicted by Moore’s observation. In many ways, Moore’s Law became a self-fulfilling prophecy because the industry collectively worked to make it true.

The Physics of Scaling: Why Smaller Means Faster

The success of Moore’s Law is rooted in the physical properties of transistors and how they scale. As transistor dimensions shrink, their electrical characteristics improve in several key ways. Shorter channel lengths between the source and drain reduce the time it takes for electrons to travel, leading to faster switching speeds. Smaller transistors also require less charge to operate, thereby reducing power consumption.

This principle, known as Dennard scaling, was formulated by Robert Dennard and his colleagues at IBM in 1974. Dennard scaling stated that as transistors are made smaller, their power density remains constant because voltage and current scale down proportionally. This allowed chip designers to increase transistor density without increasing overall power consumption. For decades, this relationship held true and was a key enabler of faster, more efficient microprocessors.
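The bookkeeping behind this claim can be sketched in a few lines. The model below assumes idealized scaling in which every linear dimension, the voltage, and the current all shrink by the same factor `k`; the function and key names are illustrative.

```python
def dennard_scale(k: float) -> dict[str, float]:
    """Relative changes when all linear dimensions shrink by a factor k > 1."""
    voltage = 1 / k
    current = 1 / k
    area = 1 / k**2                            # each transistor covers less area
    power_per_transistor = voltage * current   # P = V * I, so 1 / k^2
    return {
        "transistor_density": 1 / area,        # k^2 more transistors per unit area
        "power_per_transistor": power_per_transistor,
        "power_density": power_per_transistor / area,
    }


print(dennard_scale(2.0))
```

With `k = 2`, density quadruples and power per transistor falls to a quarter, so the power drawn per unit of chip area is unchanged.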

However, as transistors shrank below about 100 nanometers, Dennard scaling began to break down. Voltage could no longer be reduced proportionally due to leakage currents and other physical limitations. As a result, power density began to rise, leading to heat dissipation challenges. This marked a turning point in the evolution of computing performance, as manufacturers could no longer rely solely on shrinking transistors to improve speed and efficiency.
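A rough way to see the effect is the standard approximation for dynamic switching power, P ≈ C·V²·f, which is not stated in the text above and is used here as an assumption. The sketch compares power density when voltage shrinks with the transistor and when it stays fixed; it deliberately ignores leakage power, which makes the real situation worse.

```python
def power_density(k: float, voltage_scales: bool) -> float:
    """Relative power per unit area after shrinking dimensions by a factor k."""
    capacitance = 1 / k                          # smaller gates hold less charge
    frequency = k                                # shorter channels switch faster
    voltage = 1 / k if voltage_scales else 1.0   # fixed voltage models the breakdown
    power_per_transistor = capacitance * voltage**2 * frequency
    transistors_per_area = k**2
    return power_per_transistor * transistors_per_area


print("voltage scales:", power_density(2.0, voltage_scales=True))
print("voltage fixed: ", power_density(2.0, voltage_scales=False))
```

With the voltage held constant, halving the dimensions quadruples the power density in this model, which is the heat problem described above.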

The Multicore Revolution

When increasing clock speeds became impractical due to power and heat constraints, the semiconductor industry turned to parallelism as a new strategy for performance improvement. Instead of making individual transistors or processor cores faster, engineers began adding multiple cores to a single chip. Each core could handle a separate stream of instructions, allowing for simultaneous processing of multiple tasks.

This shift led to the rise of multicore processors in the mid-2000s. Dual-core and quad-core CPUs became standard in consumer devices, while high-performance servers and supercomputers adopted architectures with dozens or even hundreds of cores. The software ecosystem evolved in parallel, with developers learning to write code that could take advantage of parallel execution through multithreading and distributed computing frameworks.

Although multicore designs helped extend the practical lifespan of Moore’s Law, they introduced new challenges in software design and energy efficiency. Not all tasks can be parallelized efficiently, and adding more cores eventually leads to diminishing returns due to communication overhead and memory bottlenecks. Nevertheless, the multicore paradigm remains central to modern computing, complemented by specialized processors such as GPUs (graphics processing units) and TPUs (tensor processing units) that accelerate specific types of computations.
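The diminishing returns from adding cores are commonly modeled with Amdahl’s law, a standard formula not named in the text above. The sketch assumes a fixed fraction of the work can be parallelized perfectly and ignores communication overhead and memory bottlenecks, so real speedups are typically lower.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup when only `parallel_fraction` of the work can use all cores."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# A hypothetical program in which 90% of the work can run in parallel.
for cores in (1, 2, 4, 16, 64, 1024):
    print(f"{cores:5d} cores -> speedup {amdahl_speedup(0.9, cores):.2f}")
```

In this example the speedup can never reach 10, no matter how many cores are added, because the remaining 10% of the work still runs serially.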

Beyond Traditional Scaling: New Architectures and Materials

As transistor scaling approached the physical limits of silicon, researchers began exploring alternative materials, architectures, and design paradigms to sustain performance growth. Traditional planar transistors gave way to three-dimensional designs such as FinFETs (fin field-effect transistors), where a thin vertical fin of silicon forms the conduction channel. This 3D structure allows for better control of current flow and reduces leakage, enabling continued scaling below 10 nanometers.

In recent years, semiconductor manufacturers have introduced gate-all-around (GAA) transistors, a further evolution of FinFET technology that wraps the gate completely around the channel. This design offers even greater control and efficiency, paving the way for sub-3-nanometer nodes. Beyond silicon, researchers are investigating new materials such as graphene, carbon nanotubes, and transition metal dichalcogenides (TMDs) that could potentially surpass the limitations of silicon in speed and energy efficiency.

In addition to new materials, architectural innovations such as heterogeneous computing, chiplet-based design, and 3D stacking are redefining how performance scaling is achieved. Heterogeneous architectures combine different types of processing units—such as CPUs, GPUs, and AI accelerators—on a single chip, each optimized for specific workloads. Chiplet-based designs, championed by companies like AMD, allow multiple smaller dies to be interconnected within a single package, improving manufacturing yield and scalability. Meanwhile, 3D stacking integrates memory and logic layers vertically, reducing data transfer latency and increasing bandwidth.
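The yield advantage of chiplets can be illustrated with a simple Poisson defect model, a common textbook approximation. The die sizes and defect density below are hypothetical, and the sketch assumes each die is tested before packaging and ignores packaging and interconnect losses.

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


def silicon_per_good_product(die_area_cm2: float, dies_per_product: int,
                             defects_per_cm2: float) -> float:
    """Average wafer area (cm^2) consumed per working product.

    Assumes bad dies are discarded individually before assembly.
    """
    good_fraction = poisson_yield(die_area_cm2, defects_per_cm2)
    return dies_per_product * die_area_cm2 / good_fraction


DEFECTS = 0.1  # hypothetical defect density, in defects per cm^2
monolithic = silicon_per_good_product(6.0, 1, DEFECTS)  # one 6 cm^2 die
chiplets = silicon_per_good_product(1.5, 4, DEFECTS)    # four 1.5 cm^2 dies
print(f"monolithic: {monolithic:.2f} cm^2, chiplets: {chiplets:.2f} cm^2")
```

Under these assumptions roughly 55% of the large dies are defect-free, compared with about 86% of the small ones, so the chiplet design wastes noticeably less silicon for the same total area.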

The End of Moore’s Law? A Debate in the Industry

In recent years, the question of whether Moore’s Law is coming to an end has become a topic of intense debate. While transistor density continues to increase, the pace of improvement has slowed compared to the historical average. The physical and economic challenges of scaling below a few nanometers are formidable. Manufacturing costs have skyrocketed, quantum effects have become harder to control, and power efficiency gains have diminished.

Some experts argue that Moore’s Law, as originally defined, has already ended. The exponential growth in performance per cost has decelerated, and the doubling of transistor density now occurs over longer intervals. Others contend that Moore’s Law is not dead but evolving—its spirit lives on through alternative means of performance enhancement such as architectural innovation, software optimization, and new computing paradigms.

Companies like Intel, TSMC, and Samsung continue to invest heavily in next-generation manufacturing technologies. The advent of EUV lithography has enabled the production of chips at 3-nanometer nodes and below, albeit at enormous cost and complexity. These achievements demonstrate that while traditional scaling may be slowing, innovation in design and manufacturing continues to push the boundaries of what is possible.

Moore’s Law Beyond Silicon: Quantum and Neuromorphic Computing

The search for continued exponential growth in computing performance has led scientists to explore entirely new paradigms of computation. Quantum computing represents one of the most radical departures from classical digital architectures. Instead of using binary bits, quantum computers use quantum bits, or qubits, which can exist in a superposition of states and can be entangled with one another. For certain problems, such as factoring large integers, known quantum algorithms promise exponential speedups over the best known classical methods.
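One way to get a feel for the scale involved is to ask how much memory a classical computer would need just to store the full state of a group of qubits. The sketch below assumes a straightforward simulation that keeps one 16-byte complex amplitude for each of the 2^n basis states; it illustrates the size of the state space, not the speed of any quantum algorithm.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store every amplitude of an n-qubit state."""
    return (2 ** num_qubits) * bytes_per_amplitude


GIB = 2 ** 30
for n in (10, 20, 30, 40):
    print(f"{n} qubits -> {statevector_bytes(n) / GIB:,.6f} GiB")
```

Each additional qubit doubles the requirement: 30 qubits need 16 GiB under these assumptions, and 40 qubits need 1,024 times more.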

While practical, large-scale quantum computers remain in development, they hold the potential to revolutionize fields such as cryptography, materials science, and optimization. In a sense, quantum computing could represent a new phase of Moore’s Law, where exponential performance growth is achieved not through smaller transistors but through new physics.

Another emerging approach is neuromorphic computing, which seeks to mimic the structure and function of the human brain. Neuromorphic chips use networks of artificial neurons and synapses to perform computations in a highly parallel and energy-efficient manner. These systems are particularly suited for machine learning and pattern recognition tasks. By moving beyond the traditional von Neumann architecture, neuromorphic computing could unlock new forms of scalability that are not constrained by the same physical limits as silicon transistors.

The Role of Software and Algorithms

While Moore’s Law is often discussed in terms of hardware, software has played an equally crucial role in making computers faster and more capable. Advances in algorithms, compilers, and programming paradigms have amplified the impact of hardware improvements. For example, more efficient sorting algorithms, data structures, and numerical methods can achieve performance gains equivalent to several generations of hardware upgrades.
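To put the comparison with hardware generations in rough numbers, the sketch below contrasts an algorithm needing about n² steps with one needing about n·log₂(n) steps, then expresses the gap as an equivalent number of hardware doublings. Constant factors are ignored and the step counts are idealized, so the result is only indicative.

```python
import math


def equivalent_hardware_doublings(n: int) -> float:
    """Speed doublings needed for an n^2 method to match an n*log2(n) method."""
    quadratic_steps = n * n
    linearithmic_steps = n * math.log2(n)
    return math.log2(quadratic_steps / linearithmic_steps)


for n in (1_000, 1_000_000):
    print(f"n = {n:,}: about {equivalent_hardware_doublings(n):.1f} doublings")
```

For a million items the gap is between 15 and 16 doublings, which at one doubling every two years would correspond to about three decades of hardware progress.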

As the pace of hardware scaling slows, software optimization becomes increasingly important. Techniques such as just-in-time compilation, parallel processing, and machine learning-based optimization allow developers to extract maximum performance from existing hardware. The synergy between hardware and software innovation ensures that even if transistor scaling plateaus, overall system performance can continue to improve.

The Broader Impact of Moore’s Law

The effects of Moore’s Law extend far beyond the realm of computing. It has shaped entire industries and transformed modern life. The exponential improvement in computing power has enabled the rise of the Internet, mobile communication, artificial intelligence, and the digital economy. It has revolutionized fields such as medicine, finance, transportation, and entertainment. The smartphone in a person’s pocket today contains far more processing power than the Apollo Guidance Computer that helped steer the Apollo missions to the Moon.

Moore’s Law has also driven globalization and democratization of technology. As computing became cheaper and more accessible, billions of people gained access to digital tools and information. It has catalyzed scientific research by providing computational resources for simulations, data analysis, and modeling in physics, chemistry, and biology. In essence, the exponential growth predicted by Moore’s Law has become a central engine of human progress in the information age.

The Future of Computational Growth

As the traditional mechanisms of Moore’s Law face diminishing returns, the future of computing will likely depend on a combination of new materials, architectures, and paradigms. Technologies such as spintronics, optical computing, and molecular electronics aim to harness physical phenomena beyond conventional electronic charge transport. Optical or photonic computing, for instance, uses light instead of electrons to transmit information, offering the potential for ultra-high-speed and low-power data processing.

Moreover, the integration of artificial intelligence into chip design itself is accelerating the pace of innovation. Machine learning algorithms are now used to optimize semiconductor layouts, predict manufacturing defects, and design more efficient circuits. This meta-level application of computation to improve computation represents a new phase of technological evolution.

Even if the exponential growth of transistor density eventually slows, the spirit of Moore’s Law—continuous innovation and relentless improvement—will persist. The focus will shift from raw hardware scaling to system-level optimization, hybrid architectures, and new computing models that transcend the limitations of silicon.

Conclusion

Moore’s Law stands as one of the most influential ideas in the history of technology. It began as a simple observation about the increasing density of transistors on a chip, yet it evolved into a self-reinforcing principle that has guided decades of progress in electronics, computing, and digital innovation. The law’s endurance has been fueled by a combination of scientific ingenuity, economic forces, and human ambition.

While the physical scaling of transistors may eventually reach its limit, the pursuit of faster, smaller, and more efficient computation will continue. Whether through new materials, quantum mechanics, neuromorphic architectures, or entirely unforeseen discoveries, humanity’s quest to push the boundaries of computational power remains undiminished. Moore’s Law is more than a measure of progress—it is a symbol of our collective drive to understand, innovate, and transform the world through the power of technology.