The story of programming languages is the story of human ingenuity transforming logic into language, an ongoing journey from mechanical computation to artificial intelligence. Each era of computing has seen new languages emerge to overcome the limitations of the previous generation, gradually shaping how humans interact with machines. From the earliest binary codes punched into cards to today’s high-level languages that power global systems, the evolution of programming languages reflects both technological progress and the creative adaptation of human thought.

Programming languages serve as the bridge between human logic and machine execution. They translate abstract ideas into precise instructions that computers can interpret and execute. As computing technology evolved—from vacuum tubes to microprocessors, from standalone systems to interconnected networks—so too did the languages designed to command these machines. The progression of programming languages has not only advanced computer science but has also transformed industries, economies, and the very fabric of modern life.

The Origins of Programming: Before Computers

The roots of programming languages extend back long before electronic computers. The concept of giving a machine a sequence of instructions originated in the early 19th century. English mathematician Charles Babbage began designing the Analytical Engine, a mechanical general-purpose computer, in the 1830s. Although it was never built in his lifetime, the design was revolutionary: it included a memory (the “store”), a processing unit (the “mill”), and a mechanism for reading instructions from punched cards.

Ada Lovelace, often celebrated as the world’s first programmer, recognized that Babbage’s machine could be more than a calculator. In 1843, she wrote a detailed algorithm for calculating Bernoulli numbers using the Analytical Engine. Her notes on the engine described how instructions could be given to the machine using symbols, sequences, and loops—concepts fundamental to modern programming.

This was the birth of programming as an intellectual discipline, even if it took another century for technology to catch up. Punched cards themselves predated Babbage’s design. The Jacquard loom of the early 1800s used chains of punched cards to control its weaving patterns, and Babbage adapted the idea for the Analytical Engine. Later in the 19th century, player pianos read music from perforated paper rolls. These mechanical systems prefigured the way early computers would use physical media to represent and process instructions.

The Birth of Machine Language

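The first true programming languages appeared alongside the earliest digital computers in the 1940s. These machines, such as ENIAC, EDVAC, and EDSAC, were enormous and complex, built from thousands of vacuum tubes. ENIAC was originally programmed by setting switches and rewiring plugboards. Stored-program machines such as EDSAC instead held their instructions in memory alongside their data.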

At first, programs were written directly in machine code: sequences of binary digits (0s and 1s) representing the instructions and data to be processed. Machine language was specific to each computer’s architecture, so a program written for one machine could not run on another without being completely rewritten. Programming at this level was extremely laborious and error-prone. Even a simple calculation could require many instructions, each one a string of digits, and debugging meant scanning long sequences of numbers for a single wrong bit.

Machine language represented the first generation of programming languages. Although powerful, it was impractical for most humans to use efficiently. The need for a more understandable way to communicate with machines soon led to the invention of symbolic programming.

Assembly Language and the Rise of Symbolic Programming

The second generation of programming languages, assembly language, emerged in the late 1940s and early 1950s as a response to the difficulties of working directly with binary code. Assembly replaced numeric opcodes and memory addresses with symbolic names. For example, instead of writing a binary instruction to add two numbers, a programmer could use a mnemonic such as ADD.

Assemblers were programs that translated these symbolic instructions into machine code. This allowed programmers to work more intuitively, while still maintaining precise control over hardware. Assembly language was still machine-dependent, meaning each computer required its own variant. However, it marked a significant step toward abstraction and efficiency.
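To make the assembler's job concrete, here is a minimal sketch of a toy assembler in Python. It assumes an invented 8-bit machine in which each instruction word is a 4-bit opcode followed by a 4-bit memory address. The mnemonics and opcode values are hypothetical and do not correspond to any real architecture.

```python
# Hypothetical instruction set for an invented 8-bit machine.
# Each mnemonic maps to a 4-bit opcode; these values are illustrative only.
OPCODES = {
    "LOAD": 0b0001,   # load a value from memory into the accumulator
    "ADD": 0b0010,    # add a value from memory to the accumulator
    "STORE": 0b0011,  # store the accumulator back to memory
    "HALT": 0b1111,   # stop execution
}


def assemble(source: str) -> list[str]:
    """Translate symbolic instructions into 8-bit binary words.

    Each word is a 4-bit opcode followed by a 4-bit memory address.
    """
    words = []
    for line in source.strip().splitlines():
        parts = line.split()
        mnemonic = parts[0].upper()
        address = int(parts[1]) if len(parts) > 1 else 0  # HALT takes no operand
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        if not 0 <= address <= 15:
            raise ValueError(f"address out of range: {address}")
        word = (OPCODES[mnemonic] << 4) | address  # opcode in the high 4 bits
        words.append(format(word, "08b"))
    return words


program = """
LOAD 4
ADD 5
STORE 6
HALT
"""

for word in assemble(program):
    print(word)
```

Running it prints one 8-bit word per instruction, for example `00010100` for `LOAD 4`. Before assemblers existed, programmers had to write these bit patterns by hand.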

Assembly programming dominated early computing and was used to write the first operating systems and to control hardware at a low level. Even today, assembly remains essential where speed, code size, or direct hardware access are critical. Examples include microcontroller firmware, boot code, and performance-sensitive parts of device drivers and operating system kernels.

The Emergence of High-Level Languages

By the mid-1950s, as computers became more powerful and widely used in science, business, and engineering, it became clear that even assembly was too complex and time-consuming for large projects. The next step in programming evolution was the creation of high-level languages—programming languages that abstracted away the details of machine operations and allowed humans to express algorithms in a more natural form.

One of the earliest and most influential high-level languages was FORTRAN (Formula Translation), developed at IBM by a team led by John Backus and released in 1957. FORTRAN was designed for scientific and engineering calculations. It let programmers write formulas in familiar algebraic notation and provided the DO loop for repetition. Crucially, it came with an optimizing compiler that showed high-level source code could be translated into machine code nearly as efficient as hand-written assembly. FORTRAN dramatically increased programming productivity and made computers accessible to scientists who were not trained programmers.

Shortly after, COBOL (Common Business-Oriented Language) was designed in 1959 by CODASYL, a committee of industry and government representatives. The design drew heavily on Grace Hopper’s earlier FLOW-MATIC language. COBOL was tailored for business data processing and emphasized readability and English-like syntax. It played a central role in the expansion of computer use in government and corporate sectors, handling everything from payroll systems to banking databases.

In parallel, LISP (List Processing), invented by John McCarthy in 1958, was built around symbolic computation and recursive functions, and it represented both programs and data as lists. It became a foundation for artificial intelligence research and influenced later functional programming languages.
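LISP's core idea is that programs are nested lists that can be processed recursively. The minimal sketch below illustrates this in Python. It assumes a tiny, hypothetical prefix-notation language that supports only `+` and `*`, with Python lists standing in for LISP lists. It is not real LISP syntax or a LISP interpreter.

```python
import math

# Hypothetical mini-language: nested Python lists stand in for LISP lists,
# so ["+", 1, ["*", 2, 3]] plays the role of (+ 1 (* 2 3)).
# Only "+" and "*" are supported in this sketch.
OPERATORS = {
    "+": sum,        # sum of all arguments
    "*": math.prod,  # product of all arguments
}


def evaluate(expr):
    """Recursively evaluate a prefix expression written as nested lists."""
    if isinstance(expr, (int, float)):
        return expr  # a number evaluates to itself
    op, *args = expr
    # Recursive step: evaluate every sub-expression, then apply the operator.
    values = [evaluate(arg) for arg in args]
    return OPERATORS[op](values)


print(evaluate(["+", 1, ["*", 2, 3]]))  # → 7
```

The evaluator never needs to know how deeply an expression is nested, because recursion mirrors the structure of the data.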

These pioneering languages established the idea that programming could be about expressing intent rather than manipulating hardware directly. They represented the third generation of programming languages and laid the groundwork for all modern software development.

The Structured Programming Revolution

By the late 1960s and early 1970s, software development had become increasingly complex. Programs were larger, more interconnected, and prone to errors. Early high-level languages, while easier to use than assembly, often led to unstructured code—commonly called “spaghetti code”—that was difficult to maintain.

The structured programming movement emerged as a solution. It advocated clear, modular control structures such as loops (for, while) and conditionals (if, else), and it discouraged unrestricted jumps such as GOTO. Edsger Dijkstra’s 1968 letter “Go To Statement Considered Harmful” helped popularize this position. The approach emphasized top-down design and readability.
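Python has no GOTO, so the sketch below cannot show the contrast directly. Instead, it illustrates the structured style the movement advocated, using a hypothetical search task. Control enters at the top and leaves through the single `return` at the bottom, and only a loop and a conditional decide what happens in between.

```python
def first_negative_index(values: list[int]) -> int:
    """Return the index of the first negative value, or -1 if there is none.

    Structured style: a single entry and a single exit, with control flow
    expressed only through a loop and a conditional, and no jumps.
    """
    result = -1
    index = 0
    # The loop condition alone decides when to stop: no GOTO, no early return.
    while index < len(values) and result == -1:
        if values[index] < 0:
            result = index
        index += 1
    return result


print(first_negative_index([3, 5, -2, -7]))  # → 2
```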

ALGOL (Algorithmic Language) was first specified in 1958 and refined as ALGOL 60. It predated the structured programming movement but already embodied many of its principles. ALGOL introduced block structure and lexical scoping, features adopted by most later languages. It also provided the basis for Pascal, created by Niklaus Wirth in 1970, which became a popular teaching language and influenced later languages such as Modula-2 and Ada.

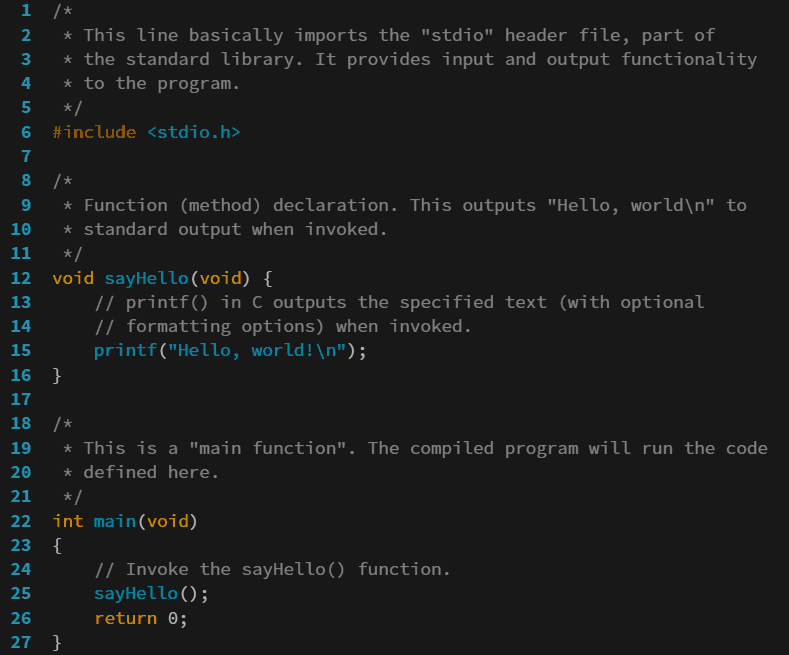

Around the same time, C was developed by Dennis Ritchie at Bell Labs in the early 1970s. C offered much of the efficiency of assembly together with the structure of a high-level language. In 1973 the Unix operating system, which revolutionized computing, was largely rewritten in C, making it far easier to port to new hardware. C’s balance of power, portability, and simplicity made it one of the most influential languages ever created. Even today, it underpins operating systems, compilers, and embedded software.

The structured programming era transformed programming into an engineering discipline, focusing on design, reusability, and maintainability.

The Advent of Object-Oriented Programming

The next major leap came with object-oriented programming (OOP), which moved into the mainstream in the 1980s as a response to growing software complexity. Object-oriented languages introduced a new way of thinking about programs: not as sequences of instructions, but as collections of interacting entities known as objects.

Each object typically represented a real-world concept, bundling data (attributes) with behavior (methods). This approach encouraged modularity, code reuse, and encapsulation, making large-scale software systems easier to design and manage.
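The minimal sketch below shows these ideas in Python, using a hypothetical bank-account class with amounts in whole currency units. Python enforces encapsulation mainly by convention (the leading underscore) and through properties. C++ and Java, by contrast, have explicit access modifiers such as `private`.

```python
class BankAccount:
    """Hypothetical example: an object bundling data (attributes) with behavior (methods)."""

    def __init__(self, owner: str, balance: int = 0) -> None:
        self.owner = owner        # public attribute
        self._balance = balance   # leading underscore: internal by convention

    def deposit(self, amount: int) -> None:
        """Add money; the object itself enforces its rules."""
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    def withdraw(self, amount: int) -> None:
        """Remove money, refusing to overdraw the account."""
        if amount <= 0:
            raise ValueError("withdrawal must be positive")
        if amount > self._balance:
            raise ValueError("insufficient funds")
        self._balance -= amount

    @property
    def balance(self) -> int:
        """Read-only view: callers can inspect the balance but not assign to it."""
        return self._balance


account = BankAccount("Ada", 100)
account.deposit(50)
account.withdraw(30)
print(account.owner, account.balance)  # → Ada 120
```

Because all changes to the balance go through `deposit` and `withdraw`, the rules live in one place rather than being scattered across the program.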

The origins of OOP trace back to Simula, developed in the 1960s by Ole-Johan Dahl and Kristen Nygaard for simulating complex systems. The ideas were developed further in Smalltalk, created in the 1970s at Xerox PARC. Smalltalk was a pure object-oriented language, in which everything is an object, and it emphasized message passing, dynamic typing, and an interactive programming environment.

The real explosion of OOP came with C++, created by Bjarne Stroustrup in the early 1980s. C++ extended C by adding classes, inheritance, and polymorphism, the key features of OOP, while remaining largely compatible with existing C code. It quickly became a dominant language for software development, powering operating systems, games, and large-scale applications.

Later, Java, released by Sun Microsystems in 1995, carried OOP principles into the internet era. Its slogan was “Write Once, Run Anywhere.” Java compiled programs to bytecode that ran on the Java Virtual Machine (JVM), so the same code could run on any platform with a compatible JVM. Java’s security features, garbage collection, and vast libraries made it a popular choice for enterprise software, mobile apps, and web applications.

OOP reshaped programming culture, encouraging developers to think in terms of objects and hierarchies rather than just functions and variables. It bridged the gap between design and implementation, influencing nearly every modern programming paradigm.

The Rise of Scripting and Web Languages

The 1990s marked a new era driven by the explosion of the World Wide Web. As the internet expanded, so did the need for languages that could handle interactivity, networking, and dynamic content. This gave rise to scripting languages—high-level, interpreted languages designed for rapid development and automation.

Perl, developed by Larry Wall in 1987, became a powerful tool for text processing and system administration. Its flexibility and integration with Unix made it the “duct tape” of the internet. Around the same time, Python, first released by Guido van Rossum in 1991, emphasized readability, simplicity, and an extensive standard library. Python’s philosophy that “there should be one obvious way to do it” made it a favorite for beginners and professionals alike.

In 1995, JavaScript, developed by Brendan Eich at Netscape, revolutionized the web. Originally designed to make web pages interactive, JavaScript evolved into one of the most important languages in computing. Combined with HTML and CSS, it forms the backbone of front-end web development. Later, with the introduction of Node.js, JavaScript moved to the server side, enabling full-stack development using a single language.

Meanwhile, PHP, created by Rasmus Lerdorf in 1994, became one of the most widely used languages for server-side scripting, powering sites such as Wikipedia and, in its early years, Facebook. Its integration with databases and ease of deployment made it essential during the early growth of the web.

These languages marked a shift toward accessibility and productivity. They allowed developers to build interactive, data-driven applications rapidly, democratizing software creation for millions.

The Modern Era: Multiparadigm and Open-Source Evolution

The 2000s and 2010s brought a new wave of languages designed to combine paradigms and address the challenges of modern computing: concurrency, scalability, and maintainability.

C#, developed by Microsoft as part of its .NET framework in 2000, merged the power of C++ with the simplicity of Java. It became a core language for enterprise and game development, especially with platforms like Unity.

Ruby, created by Yukihiro Matsumoto in 1995 but popularized in the 2000s through the Ruby on Rails framework, emphasized developer happiness and productivity. Its elegant syntax and convention-over-configuration philosophy influenced many modern frameworks.

Functional programming also saw a resurgence. Languages such as Haskell, Erlang, and Scala brought long-standing ideas like immutability and higher-order functions to wider audiences. They also offered concurrency models suited to distributed systems, such as Erlang’s lightweight processes that communicate by message passing. These ideas later influenced mainstream languages: modern JavaScript, Python, and C# all adopted functional features.
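Python is one of the mainstream languages that adopted these features. The sketch below shows both ideas using a hypothetical order-processing example with prices in cents:

- A frozen dataclass gives immutable records.
- `functools.reduce` and `map` take functions as arguments.
- `apply_discount` returns a new function.

```python
from dataclasses import dataclass, replace
from functools import reduce


@dataclass(frozen=True)  # frozen: instances cannot be modified after creation
class Order:
    item: str
    price: int      # unit price in cents
    quantity: int


def total(orders: tuple[Order, ...]) -> int:
    """Sum the order values; reduce is a higher-order function taking a lambda."""
    return reduce(lambda acc, order: acc + order.price * order.quantity, orders, 0)


def apply_discount(percent: int):
    """Return a new function that discounts an order by `percent`."""
    def discount(order: Order) -> Order:
        # replace() builds a new Order rather than mutating the original.
        return replace(order, price=order.price * (100 - percent) // 100)
    return discount


orders = (Order("pen", 150, 4), Order("notebook", 400, 2))
discounted = tuple(map(apply_discount(10), orders))

print(total(orders))      # → 1400
print(total(discounted))  # → 1260
print(orders[0].price)    # → 150 (the original order is unchanged)
```

Because no record is ever modified in place, the original and discounted orders can safely coexist. This property also makes such data easy to share between concurrent tasks.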

Go (Golang), developed at Google and announced in 2009, focused on simplicity, concurrency, and performance, making it well suited to large-scale distributed systems. Rust began as a Mozilla-sponsored project and reached version 1.0 in 2015. It prioritized memory safety and performance, enforcing safe memory use at compile time without a garbage collector. Both languages responded to the growing need for reliability in an increasingly networked world.

At the same time, the open-source movement reshaped how languages were developed and adopted. Community-driven projects like Python, Ruby, and JavaScript frameworks thrived on collaboration. Open repositories and package managers created ecosystems where developers could share tools and ideas globally.

The Influence of Artificial Intelligence and Domain-Specific Languages

As computing entered the age of data and intelligence, new languages and frameworks emerged to serve specialized purposes. Artificial intelligence, machine learning, and data science demanded languages optimized for statistics, mathematics, and massive computation.

Python became the dominant language in AI research due to its simplicity and vast ecosystem of libraries such as TensorFlow, PyTorch, and NumPy. R, designed for statistical computing, became essential for data analysis and visualization. Domain-specific languages (DSLs) also played a growing role, such as SQL for querying databases and MATLAB for numerical computation. Meanwhile Apple’s Swift, a general-purpose language, became the main language for iOS development.

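The increasing use of machine learning has also led to languages and tools that automate parts of reasoning and optimization. Probabilistic programming lets developers describe statistical models and leave inference to the language runtime. Differentiable programming automatically computes derivatives of entire programs, so that their parameters can be tuned by gradient-based optimization. This research suggests a future where code can adapt, learn, and optimize itself.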
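One building block of differentiable programming is automatic differentiation. The following minimal sketch implements forward-mode differentiation with dual numbers in Python. It supports only addition and multiplication, and the `Dual` class and `derivative` helper are hypothetical names chosen for illustration. Production frameworks such as TensorFlow and PyTorch use far more general machinery.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A dual number value + deriv*eps, where eps**2 == 0.

    Carrying `deriv` through each operation yields the derivative alongside the value.
    """
    value: float
    deriv: float = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)  # constants have derivative 0
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__  # addition is commutative, so 1 + x works too

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (f * g)' = f' * g + f * g'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__  # multiplication is commutative, so 3 * x works too


def derivative(f, x: float) -> float:
    """Evaluate df/dx at x by seeding the input's derivative with 1."""
    return f(Dual(x, 1.0)).deriv


def f(x):
    return 3 * x * x + 2 * x + 1  # analytically, f'(x) = 6x + 2


print(derivative(f, 2.0))  # → 14.0
```

The function `f` is ordinary code with no special differentiation logic. The derivative emerges from running it on `Dual` values, which is the central idea behind treating programs as differentiable.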

The Future of Programming Languages

The evolution of programming languages is far from over. As technology continues to advance, languages are evolving to meet new demands in cloud computing, artificial intelligence, quantum computing, and human-computer interaction.

One emerging direction is automation. Low-code and no-code platforms are reducing the need for manual programming, allowing non-technical users to build applications through visual interfaces. These platforms are themselves built on traditional programming languages, but they represent a shift toward greater accessibility.

Another frontier is quantum programming. Tools such as Microsoft’s Q# language and IBM’s Qiskit, a Python-based software development kit, are designed to express quantum algorithms that operate on qubits instead of classical bits. These tools may one day enable breakthroughs in cryptography, materials science, and optimization.

Additionally, artificial intelligence is beginning to influence how we write code. Tools powered by large language models can now assist programmers, suggesting solutions, generating code snippets, and even translating between languages. The boundary between human logic and machine intelligence is becoming increasingly blurred.

The Philosophy and Legacy of Programming Languages

The evolution of programming languages mirrors the evolution of human thought. Each generation of languages represents an attempt to express ideas more clearly, more efficiently, and more universally. Early programmers communicated directly with machines; today, programmers communicate through layers of abstraction that bring us closer to expressing logic as naturally as we speak.

Behind every programming language lies a philosophy—a vision of how humans should interact with machines. FORTRAN believed in efficiency and mathematical precision. COBOL believed in business logic and readability. C believed in control and universality. Python believes in simplicity and clarity. These philosophies have shaped not only the tools we use but the way we think about problem-solving itself.

The legacy of programming languages is not merely technological but cultural. Each language fosters its own community, traditions, and idioms. Together, they form the living ecosystem of modern computing—a global conversation between humans and machines.

Conclusion

The evolution of programming languages is one of the most remarkable stories in human history—a journey from mechanical logic to digital expression. It is a testament to humanity’s relentless pursuit of abstraction, efficiency, and understanding. From Ada Lovelace’s first algorithm to today’s AI-assisted programming environments, the trajectory of programming has always been about empowering people to shape the world through logic and creativity.

As we look toward a future filled with intelligent systems, quantum processors, and ever more complex networks, programming languages will continue to evolve. They will not merely serve as tools but as companions in thinking—bridging the gap between imagination and reality. The language of code, once spoken only by machines, has become the language of modern civilization—and its evolution continues to write the next chapter of human progress.