Supercomputers are among the most powerful tools ever built by humankind. They represent the pinnacle of computational engineering: machines designed to process massive amounts of data at very high speeds, performing calculations that would take ordinary computers decades or even centuries to complete. These systems are not simply faster computers; they differ in scale, architecture, and purpose. Supercomputers are built to tackle some of the hardest scientific, technological, and societal problems, those too large for unaided human analysis or conventional computers to handle.

From predicting the paths of hurricanes to simulating the origins of the universe, supercomputers are indispensable in modern science and technology. They allow researchers to explore questions that were once beyond human reach. As the world faces unprecedented challenges in climate change, energy production, healthcare, and national security, supercomputers have become the engines driving discovery, innovation, and decision-making at the highest levels.

The Nature and Purpose of Supercomputing

A supercomputer is a highly specialized computer system designed to perform complex calculations at extraordinary speeds. Unlike ordinary personal computers, which focus on single-user tasks such as word processing or browsing the internet, supercomputers are engineered to handle immense workloads that require parallel computation—the simultaneous processing of millions or even billions of mathematical operations.

The power of a supercomputer is measured in FLOPS (floating-point operations per second), a metric that quantifies how many arithmetic calculations on real-valued numbers the machine can perform in one second. Modern supercomputers operate at scales measured in petaflops (10^15, or quadrillions of operations per second) or exaflops (10^18, or quintillions of operations per second). For perspective, an exaflop machine performs as many calculations in one second as a person doing one calculation per second could complete in roughly 31.7 billion years.
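The comparison in the preceding paragraph can be checked with a few lines of arithmetic. The sketch below assumes a 365.25-day year and takes one exaflop to mean exactly 10^18 operations per second; the function and constant names are illustrative only.

```python
# Assumption: a Julian year of 365.25 days (31,557,600 seconds).
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

PETAFLOP = 1e15  # operations per second
EXAFLOP = 1e18   # operations per second


def human_years_to_match(machine_flops: float, human_ops_per_second: float = 1.0) -> float:
    """Years a person needs to match one second of work by the machine."""
    operations_in_one_second = machine_flops  # FLOPS x 1 second
    seconds_needed = operations_in_one_second / human_ops_per_second
    return seconds_needed / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(f"petaflop machine: {human_years_to_match(PETAFLOP):.3e} years")
    print(f"exaflop machine:  {human_years_to_match(EXAFLOP):.3e} years")
```

Under these assumptions the exaflop figure comes out at about 3.17 x 10^10 years, which is where the "over 30 billion years" comparison comes from.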

Supercomputers are not built for general use. They are designed to tackle grand challenge problems—those involving vast amounts of data, highly nonlinear systems, or intricate models that require precise simulation of real-world phenomena. These include modeling the Earth’s climate, understanding the behavior of atomic particles, designing new materials, decoding genetic information, and ensuring national defense.

The Evolution of Supercomputers

The history of supercomputing mirrors the evolution of computing itself. The CDC 6600, widely regarded as the first true supercomputer, was released in 1964 by Control Data Corporation and designed by Seymour Cray. It could perform up to three million floating-point operations per second, an astonishing speed at the time. Cray’s designs emphasized speed through innovation in processor design, parallelism, and efficient cooling, setting the standard for decades to come.

In the following decades, supercomputers grew exponentially in power. The Cray-1, introduced in 1976, became a symbol of technological prowess. It used a vector processing architecture in which a single instruction operates on a whole array of data elements, making it ideal for scientific calculations. By the early 2000s, supercomputers such as Japan’s Earth Simulator (2002) and IBM’s Blue Gene series (from 2004) had brought massive parallelism, with thousands of processors working together, to the forefront.

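Today’s supercomputers are vastly more powerful and complex. Systems like the U.S. Department of Energy’s Frontier and Japan’s Fugaku represent the current state of the art. Frontier was the first system on the TOP500 list to exceed one exaflop, more than a quintillion operations per second, on the standard High-Performance Linpack benchmark. Fugaku reaches roughly 0.44 exaflops on that benchmark and passes the exaflop mark only on reduced-precision workloads. Such machines consist of thousands to more than a hundred thousand compute nodes, built from CPUs and often GPUs, interconnected through high-speed networks and optimized for energy efficiency and scalability.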

The evolution of supercomputing reflects not only hardware advancements but also breakthroughs in algorithms, software, and network infrastructure. As technology continues to advance, supercomputers are moving toward architectures inspired by artificial intelligence, neuromorphic computing, and quantum simulation—blurring the lines between traditional computation and intelligent processing.

Architecture and Operation of a Supercomputer

The architecture of a supercomputer is fundamentally different from that of a conventional computer. Instead of relying on a single processor, a supercomputer employs a massively parallel system in which tens of thousands or even millions of processor cores work together on a single problem. Each core performs part of the computation, and the results are combined to produce the final outcome.

At the heart of this architecture lies the concept of parallel processing. Problems are divided into smaller subproblems that can be solved concurrently. This division allows supercomputers to handle extraordinarily complex calculations that would otherwise be impossible to complete within reasonable timeframes. Parallelism can occur at multiple levels—across processors, cores, threads, and even vector operations within a single processor.
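The divide, compute, and combine pattern described above can be sketched in a few lines. This is a toy illustration and not how production codes are written: it uses Python threads from the standard library, which show the decomposition but do not speed up CPU-bound work in standard CPython, and the function names are made up for the example. Real supercomputer applications distribute the chunks across nodes with tools such as MPI.

```python
from concurrent.futures import ThreadPoolExecutor


def split_range(n: int, parts: int) -> list[range]:
    """Divide the integers 0..n-1 into `parts` contiguous chunks of near-equal size."""
    base, extra = divmod(n, parts)
    chunks = []
    start = 0
    for i in range(parts):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first chunks
        chunks.append(range(start, start + size))
        start += size
    return chunks


def partial_sum_of_squares(chunk: range) -> int:
    """The subproblem: each worker handles only its own chunk."""
    return sum(i * i for i in chunk)


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Split the problem, solve the pieces concurrently, then combine the results."""
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)  # the combine (reduction) step


if __name__ == "__main__":
    print(parallel_sum_of_squares(1_000_000, workers=8))
```

The final `sum(partials)` plays the role of a reduction: on a real machine this step involves communication between nodes, which is why interconnect speed matters as much as raw processor speed.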

High-speed interconnects link the processors, enabling them to share data rapidly. Memory architecture plays a crucial role as well. Supercomputers employ hierarchical memory systems, from ultra-fast cache memory close to the processors to large-scale distributed memory spread across the nodes, where each node reaches data held elsewhere through the interconnect. Efficient communication between these levels of memory is vital to ensure that processors are not left idle waiting for data.

Cooling and power management are also central to supercomputer design. A top-tier supercomputer can consume tens of megawatts of power, equivalent to the energy needs of a small town. To manage the heat generated by so many active components, engineers use advanced liquid cooling systems, heat exchangers, and environmentally sustainable designs to improve energy efficiency.

The software stack is equally sophisticated. Operating systems for supercomputers are designed to manage thousands of simultaneous processes while minimizing latency. Specialized compilers, message-passing interfaces (such as MPI), and job scheduling systems coordinate the distribution of tasks across the hardware. The result is a seamless integration of hardware and software that allows the machine to function as a single, coherent computational entity.

Supercomputers in Climate and Environmental Science

One of the most critical applications of supercomputers lies in understanding and predicting the Earth’s climate. The climate system is extraordinarily complex, involving interactions between the atmosphere, oceans, land surfaces, and ice sheets. Simulating these interactions requires solving billions of equations that represent fluid dynamics, radiation transfer, and chemical processes on a global scale.
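To give a feel for what "solving equations on a grid" means, here is a deliberately tiny sketch: one-dimensional diffusion advanced with an explicit finite-difference scheme. It is not a climate model, and the function names and the parameter `alpha` are assumptions made for the illustration. What it shares with real models is the pattern of updating every grid cell from its neighbors at every time step, so the cost grows with the number of cells times the number of steps.

```python
def diffuse_step(u: list[float], alpha: float) -> list[float]:
    """One explicit time step of 1D diffusion with fixed boundary values.

    alpha stands for D * dt / dx**2 and must be <= 0.5 for this scheme to be stable.
    """
    new = u[:]  # boundary cells keep their values
    for i in range(1, len(u) - 1):
        # Each interior cell moves toward the average of its two neighbors.
        new[i] = u[i] + alpha * (u[i - 1] - 2.0 * u[i] + u[i + 1])
    return new


def simulate(u0: list[float], alpha: float, steps: int) -> list[float]:
    """Advance the initial profile u0 by the given number of time steps."""
    u = list(u0)
    for _ in range(steps):
        u = diffuse_step(u, alpha)
    return u


if __name__ == "__main__":
    # A single warm cell in the middle of a cold rod spreads out over time.
    initial = [0.0] * 10 + [1.0] + [0.0] * 10
    final = simulate(initial, alpha=0.25, steps=50)
    print(" ".join(f"{value:.3f}" for value in final))
```

A global model replaces this single line of cells with a three-dimensional grid of many millions of cells and couples many such equations together, which is what drives the demand for parallel hardware.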

Supercomputers allow scientists to build high-resolution climate models that capture fine details such as cloud formation, ocean currents, and atmospheric circulation. These simulations help researchers predict future climate scenarios, assess the impacts of greenhouse gas emissions, and guide policymakers in developing strategies for sustainability and adaptation.

For instance, the European Centre for Medium-Range Weather Forecasts (ECMWF) and the U.S. National Oceanic and Atmospheric Administration (NOAA) use supercomputers to produce accurate weather forecasts and long-term climate projections. By processing massive datasets from satellites, sensors, and ground observations, these systems can simulate weather patterns up to weeks in advance, improving disaster preparedness and resource management.

Environmental modeling extends beyond weather prediction. Supercomputers are used to study ocean circulation, deforestation, glacier melting, and the effects of pollution on ecosystems. They help scientists understand the dynamics of natural disasters such as hurricanes, tsunamis, and wildfires—providing vital information that can save lives and mitigate damage.

Advancing Medicine and Genomics Through Supercomputing

Supercomputers are revolutionizing healthcare and life sciences by enabling researchers to analyze the staggering complexity of biological systems. In genomics, for example, a single human genome contains about three billion DNA base pairs, and assembling and comparing genomes across large populations is a computationally demanding task that benefits greatly from supercomputing power.

Modern supercomputers allow scientists to compare and analyze genetic data from millions of individuals, revealing the genetic basis of diseases and guiding the development of targeted therapies. This capability is particularly valuable in personalized medicine, where treatments can be tailored to a patient’s genetic profile.

In addition to genomics, supercomputers are indispensable in drug discovery and molecular modeling. The process of developing a new drug involves simulating the interactions between millions of molecules and biological targets such as proteins or enzymes. Supercomputers can model these interactions at the atomic level, dramatically reducing the time and cost required to identify promising compounds.

During the COVID-19 pandemic, supercomputers played a pivotal role in modeling viral structures, analyzing protein folding, and screening potential treatments. Systems such as Summit, the IBM-built machine at Oak Ridge National Laboratory, were used to simulate how the SARS-CoV-2 virus binds to human cells, helping researchers prioritize candidate compounds and informing public health responses.

Medical imaging and diagnostics also benefit from supercomputing. Advanced algorithms can process massive amounts of imaging data to detect patterns invisible to the human eye. By integrating artificial intelligence with supercomputing, researchers can develop predictive models for disease progression and optimize treatment outcomes with unprecedented accuracy.

Supercomputing in Physics and Cosmology

Physics, perhaps more than any other field, relies on supercomputers to explore the deepest questions about the universe. The equations governing physical systems are often too complex to solve analytically, requiring numerical simulations that demand immense computational power.

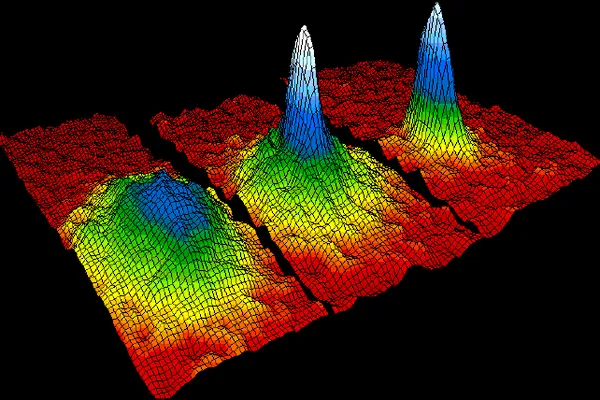

In particle physics, supercomputers simulate interactions within the subatomic realm to complement experiments at facilities like CERN’s Large Hadron Collider. Lattice quantum chromodynamics (QCD), for example, uses supercomputers to model how quarks and gluons, the constituents of protons and neutrons, interact under the strong nuclear force.

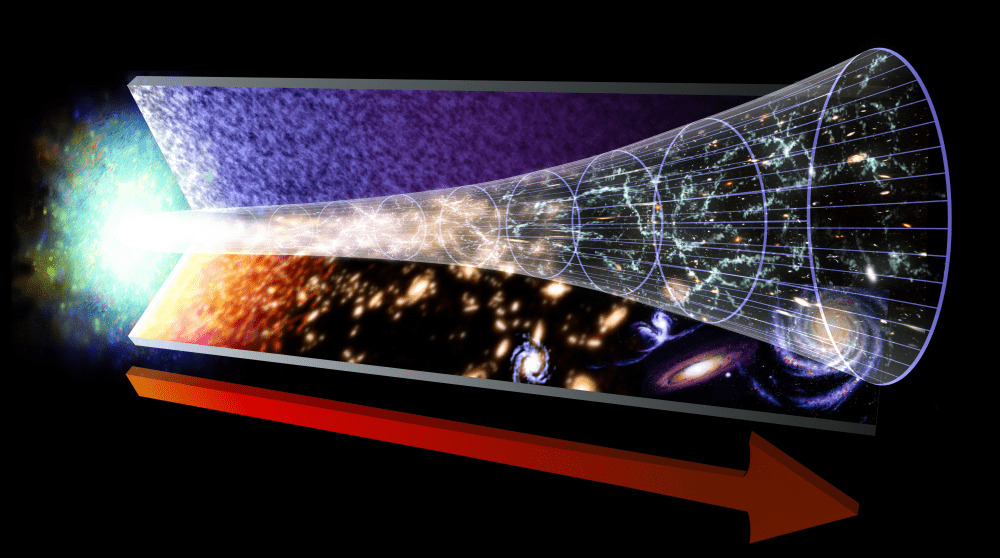

In astrophysics and cosmology, supercomputers enable scientists to simulate the evolution of the universe from the Big Bang to the present day. These simulations track billions of galaxies and dark matter particles across cosmic time, helping researchers understand the formation of cosmic structures, black holes, and gravitational waves.
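The core of such a simulation is a loop that computes the gravitational pull on every particle and then moves it forward in time. The sketch below shows the simplest version, direct summation over all pairs in two dimensions. The units, the softening length, and all names are assumptions made for the example. Because the work grows with the square of the particle count, production cosmology codes typically rely on tree or particle-mesh approximations instead of this brute-force loop.

```python
import math

G = 1.0           # assumption: gravitational constant in simulation units
SOFTENING = 1e-3  # assumption: small length that avoids division by zero at close range


def accelerations(positions: list[list[float]], masses: list[float]) -> list[list[float]]:
    """Gravitational acceleration on each body by direct summation over all pairs."""
    n = len(positions)
    acc = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            dx = positions[j][0] - positions[i][0]
            dy = positions[j][1] - positions[i][1]
            r2 = dx * dx + dy * dy + SOFTENING ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            acc[i][0] += G * masses[j] * dx * inv_r3
            acc[i][1] += G * masses[j] * dy * inv_r3
    return acc


def step(positions, velocities, masses, dt: float) -> None:
    """Advance all bodies in place: update velocities first, then positions."""
    acc = accelerations(positions, masses)
    for i in range(len(positions)):
        velocities[i][0] += acc[i][0] * dt
        velocities[i][1] += acc[i][1] * dt
        positions[i][0] += velocities[i][0] * dt
        positions[i][1] += velocities[i][1] * dt


def pair_interactions(n: int) -> int:
    """Number of force evaluations per time step for n bodies."""
    return n * (n - 1)


if __name__ == "__main__":
    print(f"{pair_interactions(1_000_000_000):.2e} force evaluations per step for a billion bodies")
```

Even at an exaflop, a billion-particle direct sum would need about 10^18 force evaluations per time step, each costing many floating-point operations, which is why both faster algorithms and faster machines are required.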

Gravitational wave research is another frontier where supercomputers have been indispensable. The first detection of gravitational waves, made by the LIGO detectors in 2015 and announced by the LIGO and Virgo collaborations in 2016, relied heavily on supercomputer simulations to match observed signals with theoretical predictions. Such work provides strong support for Einstein’s general theory of relativity and offers new insights into cataclysmic events like black hole mergers.

High-energy physics, plasma physics, and materials science also depend on supercomputing. Whether simulating the behavior of fusion reactors or exploring quantum materials for next-generation electronics, these computational experiments push the boundaries of human knowledge and technological potential.

National Security and Defense Applications

Supercomputers are critical to national security. Governments use them to maintain and modernize nuclear stockpiles, simulate defense systems, and develop cryptographic technologies. Because nuclear testing is restricted by international treaties, supercomputers allow scientists to simulate nuclear explosions virtually, ensuring the reliability and safety of nuclear arsenals without physical tests.

In cybersecurity, supercomputers analyze vast networks of data to detect potential threats and intrusions. Their ability to process massive information streams in real time allows agencies to identify vulnerabilities and defend against attacks more effectively. Supercomputers also play a role in encryption, helping develop secure communication protocols and analyzing cryptographic algorithms to assess their strength against potential quantum threats.

Defense applications extend into battlefield simulation, logistics optimization, and weapon design. Complex models of aircraft, submarines, and missiles are tested virtually before being built, reducing costs and improving performance. Supercomputers enable the military to understand scenarios that involve thousands of interacting factors, from troop movement to weather conditions, providing strategic advantages in both planning and execution.

Energy Research and Sustainable Development

Energy is another domain where supercomputers are driving transformation. The search for clean, sustainable, and efficient energy sources involves solving complex physical and chemical equations that describe fluid flow, combustion, and material behavior under extreme conditions.

In nuclear fusion research—the quest to replicate the Sun’s energy on Earth—supercomputers simulate the behavior of plasma, the high-temperature state of matter where fusion occurs. These simulations help scientists design reactors capable of containing and sustaining fusion reactions, such as those being developed in the ITER project in France.

In renewable energy, supercomputers optimize wind farm layouts, improve photovoltaic efficiency, and model energy grids to ensure stable power distribution. They also play a crucial role in materials science, enabling the design of new catalysts, superconductors, and battery materials that can revolutionize energy storage and transmission.

By modeling Earth’s natural resources, supercomputers assist in sustainable development and environmental protection. They can simulate groundwater flow, predict oil and gas reserves, and optimize carbon capture technologies—helping balance economic growth with ecological responsibility.

Artificial Intelligence and Supercomputing

Artificial intelligence (AI) and supercomputing are converging in ways that are reshaping the computational landscape. Supercomputers provide the raw power needed to train and refine large AI models, while AI helps optimize the performance of supercomputing systems themselves.

Deep learning models require immense datasets and trillions of calculations during training. Supercomputers, with their parallel architectures and high-speed interconnects, are uniquely suited for this task. The synergy between AI and supercomputing accelerates scientific discovery by allowing machines to analyze data autonomously, recognize patterns, and generate predictions in real time.

In fields such as drug discovery, materials design, and weather forecasting, AI-driven supercomputing has dramatically reduced the time required to process and interpret data. Moreover, AI algorithms are being integrated into the management of supercomputers, optimizing energy use, load balancing, and error detection to improve overall efficiency.

This relationship between AI and supercomputing marks the beginning of an era of intelligent computation, where machines not only perform calculations but also learn from them, continuously refining their performance.

Challenges in Supercomputing

Despite their remarkable power, supercomputers face numerous challenges. One of the most pressing is energy consumption. As performance increases, so does power demand. Designing energy-efficient architectures and cooling systems is critical to making supercomputing sustainable.

Another challenge is software scalability. Writing programs that can efficiently utilize millions of processor cores requires specialized knowledge and complex algorithms. The balance between hardware innovation and software optimization remains a constant pursuit.
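One standard way to see why scalability is hard is Amdahl's law, which bounds the speedup of a program when only part of it can run in parallel. The source text does not name this law; it is brought in here as a familiar illustration, and the function name is made up for the example.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when `parallel_fraction` of the work scales across `cores`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    for cores in (10, 1_000, 1_000_000):
        print(f"{cores:>9} cores, 99% parallel: speedup <= {amdahl_speedup(0.99, cores):.1f}")
```

With 99 percent of the work parallelized, the bound stays below 100 no matter how many cores are added, because the remaining 1 percent of serial work dominates. This is why so much effort goes into removing serial bottlenecks rather than simply adding hardware.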

Data management is also a growing concern. The output of modern supercomputers can reach petabytes or even exabytes, posing challenges in storage, transfer, and analysis. Scientists must develop new data compression and visualization techniques to make sense of this information.

Finally, the transition to exascale computing—the ability to perform a billion billion calculations per second—introduces new obstacles in hardware reliability, memory latency, and fault tolerance. Ensuring that these systems can operate continuously without interruption or data loss is a formidable task.
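A rough calculation shows why reliability becomes harder as systems grow. The sketch below assumes that nodes fail independently at a constant rate, so the mean time between failures (MTBF) of the whole system is the per-node MTBF divided by the node count. It also uses Young's first-order approximation for how often to save a checkpoint. The node MTBF, node count, and checkpoint cost in the example are invented numbers, not measurements from any real machine.

```python
import math

HOURS_PER_YEAR = 24 * 365.25


def system_mtbf_hours(node_mtbf_years: float, nodes: int) -> float:
    """System MTBF in hours, assuming independent node failures at a constant rate."""
    return node_mtbf_years * HOURS_PER_YEAR / nodes


def young_checkpoint_interval_hours(checkpoint_cost_hours: float, mtbf_hours: float) -> float:
    """Young's approximation for the compute time between checkpoints: sqrt(2 * C * MTBF)."""
    return math.sqrt(2.0 * checkpoint_cost_hours * mtbf_hours)


if __name__ == "__main__":
    # Hypothetical machine: 10,000 nodes, each failing once every 5 years on average.
    mtbf = system_mtbf_hours(node_mtbf_years=5.0, nodes=10_000)
    # Hypothetical checkpoint cost: 6 minutes (0.1 hours) to write state to storage.
    interval = young_checkpoint_interval_hours(checkpoint_cost_hours=0.1, mtbf_hours=mtbf)
    print(f"system MTBF:         {mtbf:.2f} hours")
    print(f"checkpoint interval: {interval:.2f} hours")
```

Under these assumed numbers, a machine built from individually reliable nodes still sees a failure every few hours, so long-running jobs must save their state regularly and be able to restart from it.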

The Future of Supercomputing

The next frontier in computing lies beyond exascale performance. Researchers are exploring new paradigms such as quantum computing, neuromorphic computing, and hybrid architectures that combine classical and quantum elements. Quantum computers, in particular, promise to solve certain problems exponentially faster than any classical supercomputer by leveraging the principles of quantum mechanics.

At the same time, the democratization of high-performance computing through cloud-based services is making supercomputing resources more accessible. Scientists, engineers, and businesses worldwide can now harness immense computational power without owning physical supercomputers, fostering innovation across disciplines.

Future supercomputers will likely be more energy-efficient, intelligent, and interconnected. They will work in tandem with artificial intelligence, distributed cloud systems, and quantum processors to solve increasingly complex global challenges—from pandemic prediction to planetary-scale environmental modeling.

Conclusion

Supercomputers stand among humanity’s most formidable intellectual and technological achievements. They are the engines of progress in science, engineering, medicine, and national security, allowing us to simulate, predict, and understand some of the most intricate systems known. These machines compress what would be centuries of hand calculation into seconds of computation, enabling discoveries that redefine our relationship with the universe.

As we continue to push the boundaries of what is computationally possible, supercomputers remind us of the profound power of human ingenuity. They are not merely tools of calculation but instruments of exploration, bridging the gap between the known and the unknown. In the age of global challenges and rapid technological change, supercomputers are—and will remain—among our greatest allies in solving the hardest problems on Earth.