In a world racing to build bigger and more complex artificial intelligence models, a quiet but powerful shift is underway. At Rensselaer Polytechnic Institute (RPI), researchers are flipping the script on how AI can and should grow. Instead of adding more data and deeper layers in a horizontal sprawl, they ask: what if AI could be designed to think upward, mimicking the intricate vertical and recursive structures of the human brain?

A new study, recently published in the journal Patterns, suggests just that. Led by Dr. Ge Wang, the Clark & Crossan Endowed Chair at RPI and director of its Biomedical Imaging Center, and Dr. Fenglei Fan, an assistant professor at City University of Hong Kong, this groundbreaking research introduces a next-generation framework for artificial neural networks, one that seeks not just to expand AI’s reach but to revolutionize its core intelligence.

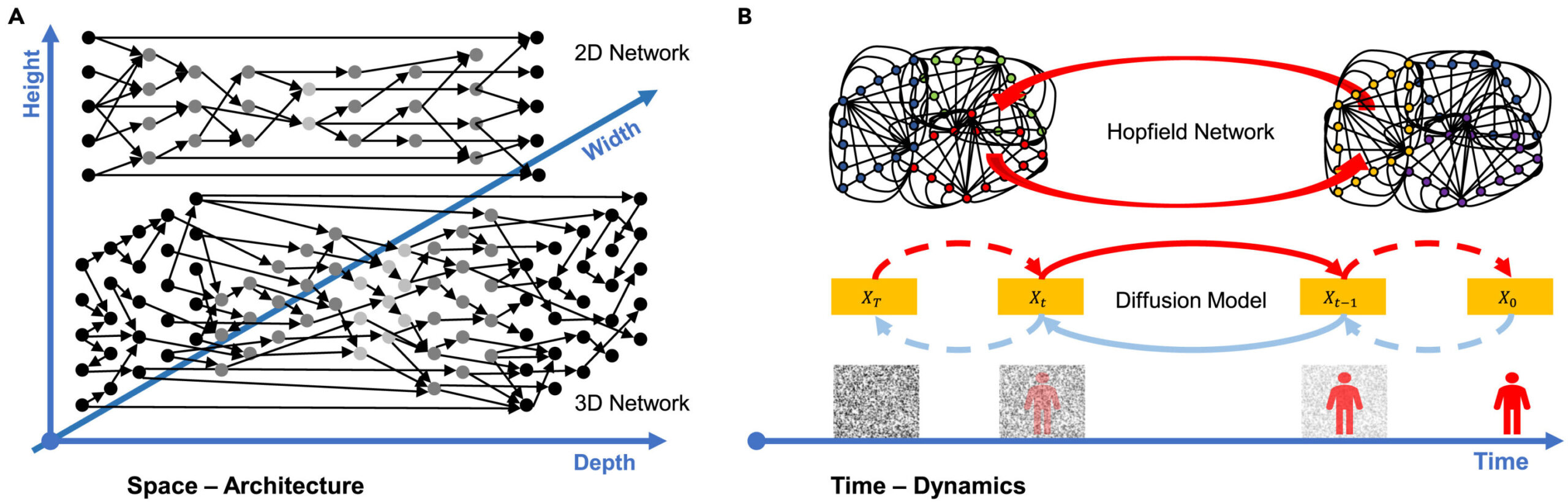

Their central idea? Add a “height” dimension and recursive loops to traditional AI architectures, allowing neural networks to process information in a richer, more self-reflective, and energy-efficient way—much like the neurons inside our own heads.

A Radical New Blueprint: Learning in Three Dimensions

For decades, artificial neural networks have been modeled loosely on the human brain. But those models have stayed relatively flat: layer upon layer stacked in just two dimensions, width (neurons per layer) and depth (number of layers), growing outward rather than inward. As a result, modern AI systems such as ChatGPT or image generators require immense resources, including supercomputers, energy-hungry data centers, and vast troves of data, to operate effectively.

The new research, titled “Dimensionality and dynamics for next-generation artificial neural networks”, challenges this flat paradigm. By introducing a vertical third dimension, akin to the brain’s depth, and integrating internal loops that mimic introspection, the team outlines an AI design that could refine its own outputs, adapt in real time, and perform at a higher level with far fewer resources.

“This new AI framework not only boosts efficiency but also unlocks practical opportunities,” said Dr. Wang. “It’s a crucial step toward smarter neural networks that are not just more powerful but more human in how they learn and evolve.”
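The article does not spell out how the “height” dimension is built. As an illustrative assumption only, the Python sketch below treats height as links between neurons inside the same layer (intra-layer links), so each neuron can also draw on its neighbor’s output before the layer passes anything on. The functions `dense_layer` and `layer_with_height` and the link weights `v` are hypothetical names for this example, not the authors’ code.

```python
def relu(a):
    """Rectified linear unit on a single value."""
    return max(0.0, a)


def dense_layer(x, w, b):
    """Conventional layer: each neuron sees only the previous layer's outputs x."""
    return [relu(sum(wi * xi for wi, xi in zip(row, x)) + bi) for row, bi in zip(w, b)]


def layer_with_height(x, w, b, v):
    """Hypothetical 'height' layer built from intra-layer links (an assumption).

    Neurons are evaluated in order, and neuron i also receives v[i] times the
    output of neuron i - 1 in the same layer, so information moves along the
    layer before the layer's outputs move on to the next one.
    """
    out = []
    for i, (row, bi) in enumerate(zip(w, b)):
        pre = sum(wi * xi for wi, xi in zip(row, x)) + bi
        if i > 0:
            pre += v[i] * out[i - 1]  # intra-layer link from the previous neuron
        out.append(relu(pre))
    return out


x = [1.0, -1.0]
w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [0.0, 0.0, 0.0]
v = [0.0, 2.0, 1.0]  # v[0] is unused: the first neuron has no predecessor

print(dense_layer(x, w, b))           # [1.0, 0.0, 0.0]
print(layer_with_height(x, w, b, v))  # [1.0, 1.0, 1.0]
```

With the same inputs and weights, the within-layer links let the second and third neurons fire where the conventional layer leaves them at zero. It is only a toy illustration of how extra wiring inside a layer can change what that layer computes without adding layers or neurons.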

From Brainwaves to Code: How Recursive Loops Change the Game

Recursive feedback loops—the kind the human brain uses constantly to reflect on decisions, correct errors, and refine behavior—are a central feature of this new model. Rather than simply passing data forward through layers like an assembly line, the network can circle back, adjust, and even reevaluate its internal state before producing a final decision.

This self-referential ability creates something closer to “cognitive awareness” in machines—not consciousness in the philosophical sense, but a new kind of flexibility and depth in processing information.

“These recursive loops allow the system to reflect, just as we humans second-guess and reconsider before speaking or acting,” said Wang. “It’s a model that doesn’t just move forward—it thinks vertically and loops inward.”
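The recursive loop described above can be illustrated with a small sketch. Here we assume a single layer whose output is fed back into itself, h_{t+1} = relu(W_in x + W_fb h_t + b), starting from the ordinary feed-forward answer and repeating until the state stops changing. The names `feedforward`, `recurrent_refine`, `w_fb`, and the stopping tolerance are hypothetical choices for illustration; the article does not describe the authors’ actual update rule.

```python
import math


def relu(v):
    """Elementwise rectified linear unit on a list of floats."""
    return [max(0.0, a) for a in v]


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in m]


def add(*vs):
    """Elementwise sum of equal-length vectors."""
    return [sum(parts) for parts in zip(*vs)]


def feedforward(x, w_in, b):
    """One pass with no feedback: h = relu(W_in x + b)."""
    return relu(add(matvec(w_in, x), b))


def recurrent_refine(x, w_in, w_fb, b, max_steps=20, tol=1e-6):
    """Loop the layer's state back into itself until it settles (assumed rule).

    Returns the refined state and the number of feedback steps taken.
    """
    h = feedforward(x, w_in, b)  # first guess from the plain forward pass
    for step in range(1, max_steps + 1):
        h_next = relu(add(matvec(w_in, x), matvec(w_fb, h), b))
        change = math.sqrt(sum((p - q) ** 2 for p, q in zip(h_next, h)))
        h = h_next
        if change < tol:  # the state has stopped moving: accept it
            return h, step
    return h, max_steps


x = [1.0, 2.0]
w_in = [[0.5, 0.0], [0.0, 0.5]]
w_fb = [[0.0, 0.2], [0.2, 0.0]]  # small feedback weights so the loop converges
b = [0.0, 0.0]

print(feedforward(x, w_in, b))  # [0.5, 1.0]
h, steps = recurrent_refine(x, w_in, w_fb, b)
print([round(a, 4) for a in h], steps)
```

The single forward pass stops at (0.5, 1.0), while the loop keeps revising its answer until it settles where h1 = 0.5 + 0.2·h2 and h2 = 1.0 + 0.2·h1, roughly (0.7292, 1.1458). The feedback weights are kept small so each update shrinks the remaining error and the loop is guaranteed to settle; with larger feedback weights a loop like this can oscillate or diverge, which is one reason recurrent designs need care.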

The implications could be far-reaching. AI models that learn faster, use less power, and require less data could make high-performance computing more accessible globally. Such models could also sharply reduce the environmental toll of training today’s largest systems, whose energy demands and carbon emissions have become a growing concern.

Toward Smarter, More Sustainable AI

As society increasingly relies on AI—from search engines and translation tools to robotics and health care diagnostics—the urgency to make these systems not just smarter but more sustainable is growing.

“The current trajectory of AI, where larger equals better, is not viable forever,” said Dr. Fan, Wang’s former student and now a co-leader on the project. “We need smarter architecture, not just bigger models.”

This new approach could pave the way for AI tools that are not only more environmentally friendly but also easier to deploy in real-time scenarios. That could mean better AI on your phone, in your car, in hospitals and classrooms, and even in remote areas without massive computing infrastructure.

And unlike today’s black-box models—powerful but opaque—recursive AI designs may also make systems more explainable. If an AI can show its thought process by retracing its steps, it becomes easier for humans to understand how and why it made a decision. That has major implications for ethics, trust, and safety in AI applications.

Unlocking the Mysteries of the Mind

The story doesn’t stop with machines. The team’s framework is also being explored as a tool for neuroscience—offering a potential window into how our own brains work, how we think, and what goes wrong when cognition falters.

By building brain-inspired AI, researchers are developing new ways to model memory, perception, and even neurological disorders. Wang’s team believes these recursive, vertically organized systems may help uncover patterns in diseases like Alzheimer’s, epilepsy, or schizophrenia by replicating and analyzing similar structures in silico.

“This isn’t just about smarter AI—it’s about understanding intelligence itself,” Wang said. “The closer we get to modeling the brain’s inner workings, the more we may discover about the root causes of mental and neurological conditions—and how to treat them.”

A Legacy of Innovation and the Road Ahead

This study builds on RPI’s long history at the forefront of artificial intelligence. Through initiatives like the Future of Computing Institute and a high-profile AI research collaboration with IBM, RPI continues to lead in developing technologies that stretch the boundaries of what machines—and humans—can do.

From ethics and accessibility to power consumption and cognitive modeling, the RPI approach isn’t just aiming for the next flashy AI breakthrough. It’s targeting foundational change—a smarter future where technology is designed to reflect and respect human needs.

And in a world increasingly shaped by algorithms, that future can’t come soon enough.

“This is a foundational shift,” said Wang. “If we can build machines that not only think but reflect, that learn faster and operate leaner, we’re not just advancing AI—we’re reinventing it.”

Reference: Ge Wang et al., “Dimensionality and dynamics for next-generation artificial neural networks,” Patterns (2025). DOI: 10.1016/j.patter.2025.101231