In a lab at the crossroads of Manhattan and Silicon Valley, a new kind of revolution is quietly taking shape, one powered not by massive machines or industrial noise, but by smart glasses, subtle gestures, and the silent transfer of human knowledge into robotic minds.

Robots have long been the poster children of the future. We’ve imagined them vacuuming our floors, chopping vegetables, and folding laundry with effortless precision. But despite the promise, the reality has been slower and clunkier. Real-world homes are messy, unpredictable, and full of nuances that throw off even the smartest machines. Now, a team of researchers from New York University and UC Berkeley believes it may have cracked one of the biggest barriers to household robotics, not with more complex robots, but with better human teaching.

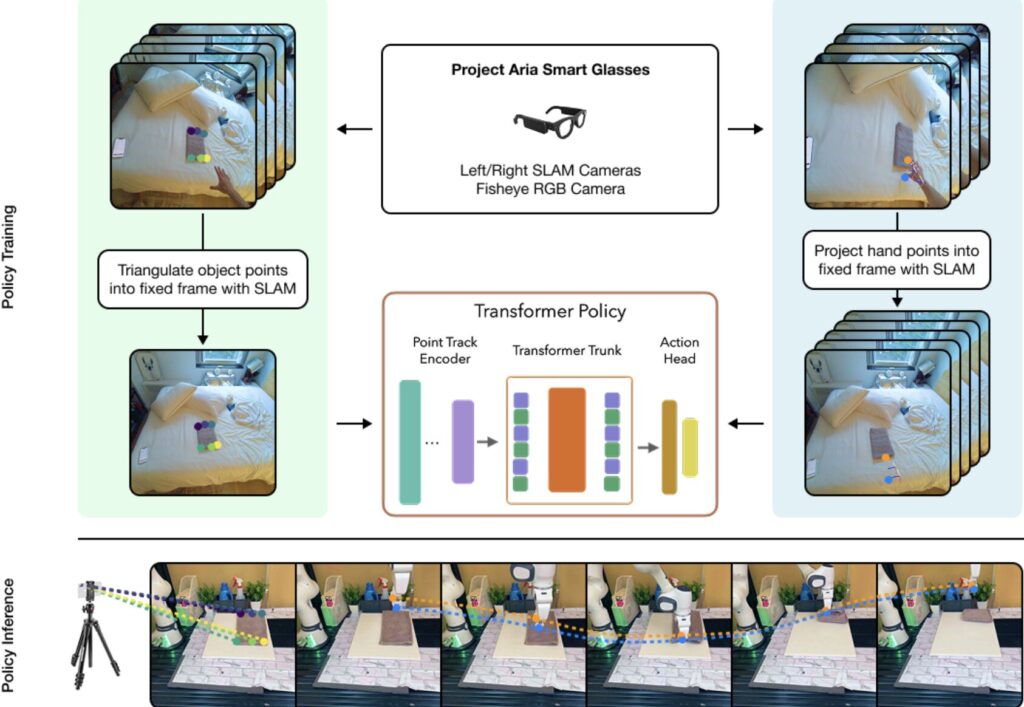

Their solution is called EgoZero, a new method for training robots using nothing more than first-person videos of people completing household tasks. And these videos aren’t shot with clunky GoPros or tethered motion-capture systems. They’re recorded through a sleek pair of Project Aria smart glasses, developed by Meta for augmented reality. The result is a lightweight, surprisingly elegant way to teach robots how humans do things, without collecting any training data on the robots themselves.

First-Person Teaching for a Robot World

Lerrel Pinto, a senior author on the study and one of the visionaries behind EgoZero, puts it plainly: “We believe that general-purpose robotics is bottlenecked by a lack of internet-scale data.” In other words, robots have fallen short not because they aren’t smart enough, but because they haven’t seen enough of the world the way we see it.

That’s where EgoZero steps in. The system captures videos from the human point of view: walking into the kitchen, opening an oven door, loading a dishwasher. But rather than just collecting footage, it extracts the actions the person performs and transforms them into data that a robot can use to reproduce the task.

It does this without bulky gear or multiple calibrated cameras. Instead, it uses the Project Aria smart glasses to create 3D representations of human movement, making the learning process for robots dramatically faster and more flexible.
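To make the idea of a "3D representation" concrete, here is a minimal sketch of the standard geometry for placing something seen by a head-worn camera into a fixed 3D frame. It is not EgoZero's actual pipeline, which the article does not describe. It assumes an idealized pinhole camera with a known depth for the pixel and a known pose for the glasses, and the function names and parameters (`backproject`, `camera_to_world`, `fx`, `fy`, `cx`, `cy`) are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Lift pixel (u, v) at a known depth into camera coordinates.

    Assumes an ideal pinhole camera (an illustrative simplification):
    fx, fy are focal lengths in pixels and (cx, cy) is the principal point.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def camera_to_world(p_cam: Sequence[float],
                    rotation: Sequence[Sequence[float]],
                    translation: Sequence[float]) -> Vec3:
    """Apply the rigid transform p_world = R * p_cam + t.

    rotation is a 3x3 matrix (rows of 3 numbers) and translation a 3-vector;
    together they describe the assumed pose of the glasses in the world frame.
    """
    x, y, z = (
        sum(rotation[i][j] * p_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


if __name__ == "__main__":
    # A pixel 100 px right of the image center, seen 2 m away.
    p_cam = backproject(420, 240, 2.0, fx=500, fy=500, cx=320, cy=240)
    # Glasses located at (1, 2, 3) in the world, with no rotation.
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(camera_to_world(p_cam, identity, (1.0, 2.0, 3.0)))
```

Because the wearer's head moves constantly, expressing points in a fixed world frame rather than in the camera's own frame is what allows observations from different moments to be compared.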

“Unlike prior works that require wrist wearables, motion-capture gloves, or lots of cameras,” said Ademi Adeniji, co-lead author of the paper, “EgoZero does it all with just smart glasses.”

Teaching a Robot in 20 Minutes

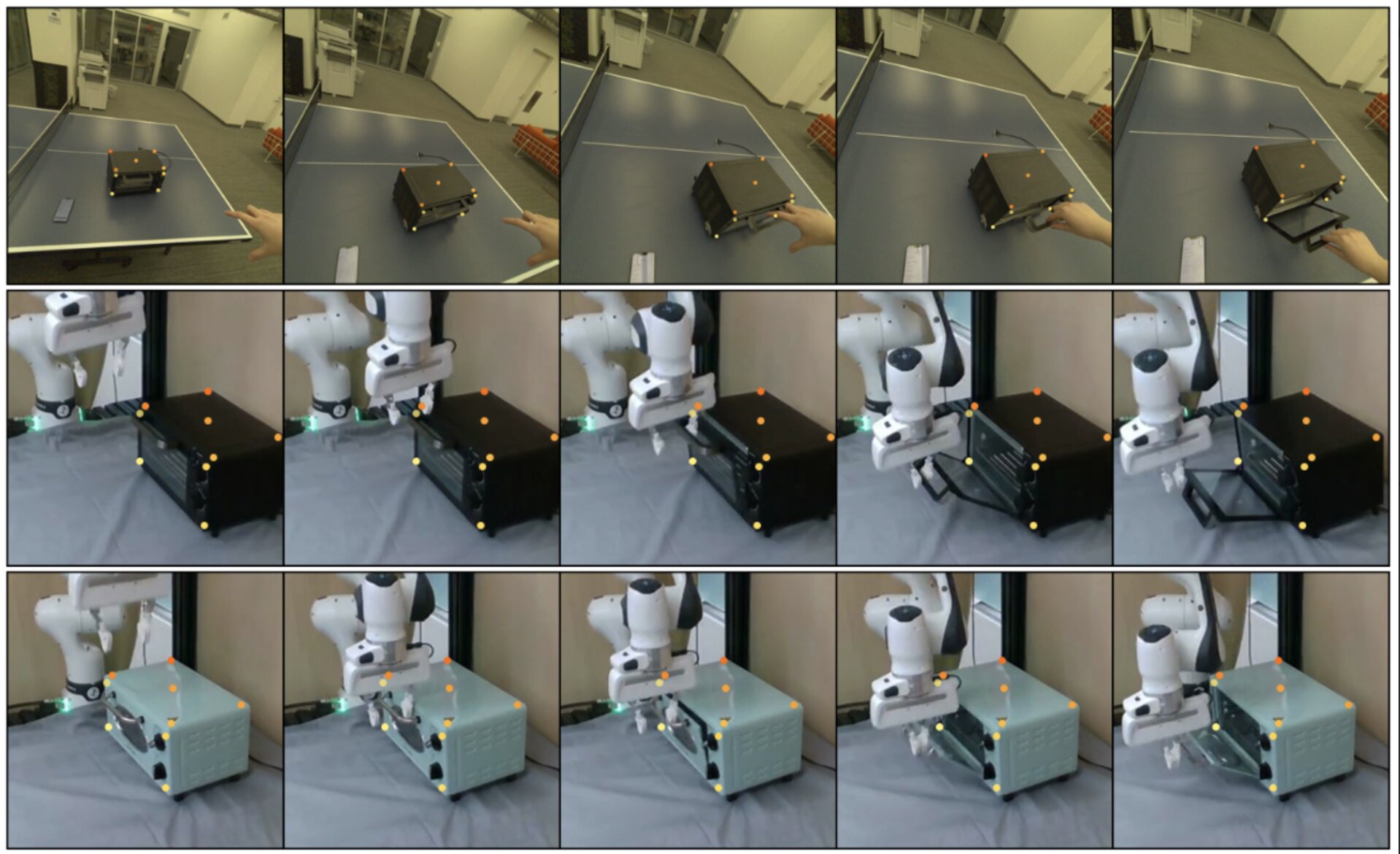

The researchers put EgoZero to the test by recording short videos of humans performing everyday tasks, such as opening cabinets, placing items into drawers, or operating appliances. Then, they used those videos to train a Franka Panda robotic arm.

The kicker? The robot learned many of these tasks from less than 20 minutes of human demonstration footage. It didn’t need to practice. It didn’t require teleoperation or trial-and-error testing. Once the videos were converted into robot-understandable data, the machine simply executed what it had “seen.”
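What might "robot-understandable data" look like? One plausible ingredient, sketched below purely as an illustration, is mapping a tracked human hand onto the two-finger gripper of a robot arm: the gripper target sits between the thumb and index fingertips, and the gripper closes when the fingers pinch together. The function name `hand_to_gripper_action` and the 3 cm threshold are assumptions made for this example, not details taken from the EgoZero paper.

```python
import math
from typing import Dict, Sequence, Tuple, Union

Vec3 = Tuple[float, float, float]


def hand_to_gripper_action(
    thumb_tip: Sequence[float],
    index_tip: Sequence[float],
    close_threshold_m: float = 0.03,  # assumed pinch threshold, in meters
) -> Dict[str, Union[Vec3, float, bool]]:
    """Turn two 3D fingertip positions into a simple gripper command.

    position: midpoint between the fingertips (where the gripper should go)
    aperture: distance between the fingertips, in meters
    closed:   True when the fingertips are closer than the threshold
    """
    position = (
        (thumb_tip[0] + index_tip[0]) / 2.0,
        (thumb_tip[1] + index_tip[1]) / 2.0,
        (thumb_tip[2] + index_tip[2]) / 2.0,
    )
    aperture = math.dist(thumb_tip, index_tip)
    return {
        "position": position,
        "aperture": aperture,
        "closed": aperture < close_threshold_m,
    }


if __name__ == "__main__":
    # Fingertips 2 cm apart: interpreted as a pinch, so the gripper closes.
    print(hand_to_gripper_action((0.0, 0.0, 0.0), (0.02, 0.0, 0.0)))
    # Fingertips 10 cm apart: the hand is open, so the gripper stays open.
    print(hand_to_gripper_action((0.0, 0.0, 0.0), (0.10, 0.0, 0.0)))
```

Applied to every frame of a demonstration, a mapping of this kind would yield a sequence of target positions and open-or-close commands that a policy could be trained to reproduce.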

And it worked. The robotic arm, equipped with a simple gripper, was able to open doors, pick up objects, and interact with its environment with a surprising degree of success—all without ever receiving direct instruction through a joystick or remote.

“EgoZero’s biggest contribution is that it can transfer human behaviors into robot policies with zero robot data,” said Pinto. “We’re showing that 3D representations enable efficient robot learning completely in the wild.”

A Leap Toward Everyday Robotics

The implications of this approach are massive. For decades, robotics researchers have been constrained by the painstaking need to gather annotated datasets, often in controlled lab settings, and then manually train each robot for specific tasks. EgoZero flips that model.

Now, any person wearing smart glasses could become a robotic teacher simply by doing household chores. The data could be uploaded, processed, and used to train multiple robot assistants, anywhere in the world.

It’s not hard to imagine a future in which robots are sent to people’s homes pre-trained on a library of human demonstrations collected from hundreds of different environments. A robot that knows how to load a dishwasher in a New York apartment might be able to generalize those skills to a condo in Tokyo or a bungalow in Nairobi—because it’s learned not from simulations, but from real human behavior, seen through real human eyes.

And because the EgoZero code is publicly available on GitHub, other researchers and companies can immediately begin contributing to and expanding this method.

Beyond Vision: The Next Frontier

Of course, the team isn’t stopping here. With EgoZero already proving its ability to teach robots in single-task scenarios, the researchers are now setting their sights on broader, more complex applications.

“We hope to explore the tradeoffs between 2D and 3D representations at a larger scale,” said Vincent Liu, another co-lead author on the paper. “We’ve only scratched the surface with single-task 3D policies. The next step is fine-tuning these models to learn multiple tasks—perhaps even using large language or vision-language models.”

Imagine combining EgoZero’s visual data with the reasoning capabilities of AI systems like ChatGPT or vision-capable models such as GPT-4V. A robot could not only understand what it’s seeing, but also interpret why an action is being taken, adapting on the fly to new environments or unexpected changes.

It’s a bold vision, but the building blocks are now in place.

The Human Behind the Machine

Perhaps the most poetic aspect of EgoZero is its reversal of roles. For decades, we’ve tried to teach robots by treating them like machines—through code, calibration, and endless debugging. But EgoZero reminds us that the most natural way to teach is the most human way: by showing.

The robot doesn’t need to understand every variable or equation. It simply needs to watch, to observe how we navigate our cluttered, imperfect world—and to learn by example.

With a simple pair of glasses, we become mentors to the machines. And maybe, just maybe, this is how the future finally arrives—not with a factory of robots learning in isolation, but with humans leading the way, one chore at a time.

Reference: Vincent Liu et al., EgoZero: Robot Learning from Smart Glasses, arXiv (2025). DOI: 10.48550/arXiv.2505.20290