In a quiet corner of a Manchester office in the mid-20th century, Alan Turing leaned over his desk, his mind alight with visions of thinking machines. His question, deceptively simple, would ignite decades of human curiosity: Can machines think?

The world he inhabited was recovering from the ruins of war. Computers were room-sized behemoths, all wires, vacuum tubes, and blinking lights. They could calculate artillery tables, break codes, and sort census data—but think? That seemed madness.

Yet Turing imagined something far more radical: machines that didn’t merely follow instructions, but learned. Machines that could change themselves, improve, adapt. Machines that could, in some flickering sense, be alive.

He planted a seed that would take decades to germinate—a seed that has now grown into a forest so vast that its branches touch nearly every part of human life. Today, machine learning is woven into the fabric of modern existence, quietly reshaping how we shop, work, love, and dream. It listens to our voices, filters our photos, predicts our desires, diagnoses our diseases, drives our cars, writes our poetry.

This is the story of how computers are learning on their own—and how their silent education is changing everything.

From Clockwork to Cognition

To appreciate the magic of machine learning, we must remember where computing began. Early computers were perfect servants: obedient, literal, tireless. Feed them instructions, and they performed exactly as told—no more, no less. They could not improvise. They could not generalize. They could not learn.

If you wanted a computer to sort numbers, you had to write out every step: compare these two numbers, swap if necessary, move on to the next pair. Programming was like drafting a legal contract for a fussy robot: do this, then that, unless this other thing happens. Every possibility had to be foreseen and scripted.
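To make that "legal contract" concrete, here is a minimal sketch of the compare-and-swap recipe described above, commonly known as bubble sort. The function name `bubble_sort` and the sample numbers are illustrative choices, not taken from any historical program.

```python
def bubble_sort(numbers):
    """Sort a list by repeatedly comparing neighbours and swapping them."""
    items = list(numbers)  # work on a copy so the input is left untouched
    for end in range(len(items) - 1, 0, -1):
        for i in range(end):
            # Compare these two numbers, swap if necessary...
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
            # ...then move on to the next pair.
    return items


print(bubble_sort([5, 2, 9, 1]))  # prints [1, 2, 5, 9]
```

Every step is spelled out by the programmer. Nothing in this code changes with experience, which is exactly the limitation that learning systems set out to overcome.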

But learning is different. Learning means drawing connections, spotting patterns, predicting outcomes even when the rules are not written down. Consider a child learning to recognize cats. No one tells them the precise angles of ears, the ratio of whiskers to nose. They simply see cats—at home, in books, on screens. Soon, they can spot a cat anywhere. That, in essence, is the dream of machine learning.

The term “machine learning” was coined in 1959 by Arthur Samuel, an IBM researcher who taught a computer to play checkers. Samuel’s program didn’t simply memorize board positions. It evaluated moves, learned which strategies led to victory, and improved through experience. The computer became, in Samuel’s words, “self-teaching.” It was a spark of something new, a hint that machines could go beyond mere calculation.

Yet it would take decades, and technological revolutions, for that spark to become a blaze.

Data: The New Oxygen

For much of computing history, machine learning was an academic curiosity, starved for data and processing power. Early computers lacked the memory to hold vast datasets. Scientists could craft elegant algorithms, but there simply wasn’t enough information—or affordable hardware—to make them useful.

All that changed with the rise of the digital age. Suddenly, humanity was swimming in data. Every email, every tweet, every credit card swipe, every GPS ping added to an invisible ocean of information. Sensors tracked the hum of engines, the flicker of heartbeats, the swirl of weather systems. Storage became cheap, networks fast, processors powerful.

Machine learning thrives on data the way plants thrive on sunlight. The more examples a model sees, the sharper its predictions become. A machine learning system is a creature of experience: it trains on historical data to predict the future, whether that means guessing tomorrow’s stock prices or suggesting your next favorite song.

This marriage—vast data and cheap computation—was the alchemy that transformed machine learning from a curiosity into a revolution.

The Core Idea: Learning From Patterns

At its heart, machine learning is staggeringly simple: it’s about finding patterns in data. Imagine plotting dots on graph paper: height on one axis, weight on the other. A human might notice that taller people tend to weigh more. Machine learning formalizes that intuition into mathematical relationships.

Consider linear regression, one of the simplest learning algorithms. It draws a line through data points, capturing the trend that best fits them. If a model learns that “each inch of height adds 5 pounds of weight,” it can predict someone’s weight from their height. That’s machine learning in miniature.
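As a rough illustration of the idea, the sketch below fits a least-squares line to four made-up height and weight pairs. The numbers are invented and deliberately lie on a perfect line so the result is easy to check; real data would be noisier. The names `fit_line` and `predict_weight` are hypothetical helpers for this example.

```python
# Made-up heights (inches) and weights (pounds), chosen to lie on a line.
heights = [60, 64, 68, 72]
weights = [110, 130, 150, 170]


def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(heights, weights)


def predict_weight(height):
    """Use the learned line to estimate weight for any height."""
    return slope * height + intercept


print(slope)               # prints 5.0: pounds added per extra inch
print(predict_weight(70))  # prints 160.0: a height the model never saw
```

The "learning" here is nothing more than computing two numbers, a slope and an intercept, from examples. Once they are known, the model can answer questions about heights that never appeared in its data.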

But the power of machine learning lies in scaling up this simplicity to staggering complexity. What if you’re not dealing with just two variables—but two thousand? What if your data isn’t neat numbers, but pixel values in an image or waveforms in a sound file?

The magic comes from algorithms that can sift through massive data sets, identify subtle patterns, and make predictions no human could articulate. A machine learning model might discover that certain pixels arranged in a particular way signify the presence of a dog. Or that an odd rhythm in a heartbeat suggests the onset of atrial fibrillation.

Machine learning is not about writing rules. It’s about letting machines discover rules for themselves.

Supervised Learning: Learning From Examples

The most intuitive form of machine learning is supervised learning. Here, machines learn from labeled examples. You give the model inputs and the correct outputs, and it gradually learns the relationship between them.

Picture a box of fruit. Each fruit is tagged: “apple,” “banana,” “orange.” You feed images of these fruits into the computer, telling it what each one is. Over time, the model learns the visual signatures—colors, shapes, textures—that distinguish a banana from an apple. Later, you show it an unlabeled picture, and it predicts “banana.”
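The fruit example can be sketched in a few lines, under heavy simplifying assumptions. Instead of raw images, each fruit is reduced to two invented measurements (how yellow it is, and how elongated), and the model is a nearest-centroid classifier, one of the simplest supervised methods. The feature values and the `train` and `predict` helpers are illustrative, not a description of any production system.

```python
import math
from collections import defaultdict

# Labeled training examples: (yellowness 0..1, length/width ratio) -> fruit name.
training_data = [
    ((0.10, 1.00), "apple"),
    ((0.20, 1.10), "apple"),
    ((0.90, 4.00), "banana"),
    ((0.80, 3.50), "banana"),
    ((0.50, 1.00), "orange"),
    ((0.60, 1.05), "orange"),
]


def train(examples):
    """Learn one average feature vector (centroid) per label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(model, features):
    """Return the label whose centroid is closest to the new example."""
    return min(model, key=lambda label: math.dist(model[label], features))


model = train(training_data)
print(predict(model, (0.85, 3.80)))  # prints banana
```

The classifier was never told what makes a banana a banana. It only averaged the labeled examples and measured which average a new fruit most resembles.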

Supervised learning powers spam filters, fraud detection, facial recognition, and medical diagnostics. The model doesn’t “understand” spam or cancer the way humans do. It simply learns statistical patterns that distinguish one category from another.

Unsupervised Learning: Finding Hidden Structure

But what if you have no labels? Suppose you have millions of shopping transactions but no tags saying which customers belong to which demographic. Enter unsupervised learning.

Unsupervised learning seeks hidden structure in unlabeled data. It might cluster similar customers together based on buying habits, revealing unexpected segments—late-night impulse shoppers, bargain hunters, loyal brand devotees. It can detect anomalies, flagging credit card charges that deviate from normal patterns.
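One common way to do this kind of grouping is k-means clustering. The sketch below is a bare-bones version run on invented customer data (visits per month, average spend per visit). Starting the centroids at the first `k` points is a simplification made here for repeatability; practical implementations usually choose starting points more carefully.

```python
import math


def k_means(points, k, iterations=10):
    """Group points into k clusters; returns (centroids, cluster index per point)."""
    centroids = list(points[:k])  # simplification: start from the first k points
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Step 1: assign each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(point, centroids[c]))
            for point in points
        ]
        # Step 2: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # leave the centroid in place if its cluster is empty
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return centroids, assignments


# Made-up customers: (visits per month, average spend per visit). No labels.
customers = [(20, 5), (2, 80), (22, 6), (3, 90), (19, 4), (1, 85)]
centroids, assignments = k_means(customers, k=2)
print(assignments)  # prints [0, 1, 0, 1, 0, 1]
```

No one told the algorithm that frequent small spenders and occasional big spenders exist. The two groups emerge from the distances between the points alone.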

In genetics, unsupervised learning has uncovered surprising subtypes of diseases, leading to new avenues for treatment. In marketing, it discovers new customer personas. It’s like giving a machine a pile of puzzle pieces and letting it discover how they fit together.

Reinforcement Learning: Learning Through Trial and Error

Beyond supervised and unsupervised learning lies the realm of reinforcement learning—a method inspired by the way animals learn. Here, machines learn not from static data but through interaction.

A reinforcement learning agent explores an environment, taking actions and receiving feedback in the form of rewards or penalties. Like a dog learning to sit for a treat, the agent gradually figures out which actions maximize its long-term reward.

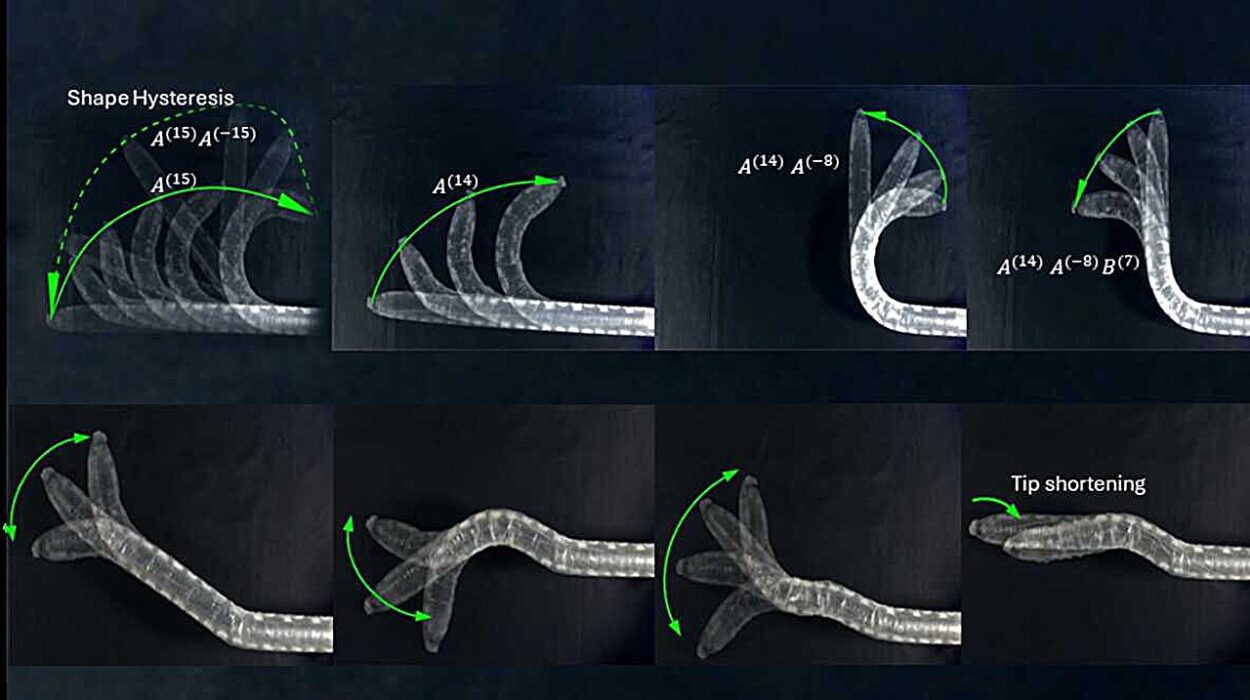
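The reward-driven loop described above can be sketched with tabular Q-learning, a classic reinforcement learning method. The environment is a toy invented for this example: a corridor of five cells where the agent starts at the left end and earns a reward only on reaching the right end. The constants `ALPHA`, `GAMMA`, and `EPSILON` are arbitrary illustrative settings.

```python
import random

N_STATES = 5          # corridor cells 0..4; the reward sits in the last cell
ACTIONS = (0, 1)      # 0 = step left, 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2

rng = random.Random(0)                      # fixed seed so runs are repeatable
q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action] = estimated value


def step(state, action):
    """Environment: move one cell, get reward 1 on reaching the last cell."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def choose_action(state):
    """Mostly exploit the best known action, sometimes explore at random."""
    if rng.random() < EPSILON or q[state][0] == q[state][1]:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[state][a])


for _ in range(200):                        # 200 practice episodes
    state = 0
    while state != N_STATES - 1:
        action = choose_action(state)
        next_state, reward = step(state, action)
        # Nudge the estimate toward reward + discounted best future value.
        target = reward + GAMMA * max(q[next_state])
        q[state][action] += ALPHA * (target - q[state][action])
        state = next_state

policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)  # prints ['right', 'right', 'right', 'right']
```

The agent is never told that the treat lies to the right. It wanders, stumbles on the reward, and the value of that discovery gradually propagates back along the corridor until every cell "knows" which way to go.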

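This approach has led to spectacular achievements. Reinforcement learning was central to AlphaGo, which defeated top professional Lee Sedol at Go in 2016, and to AlphaZero, which taught itself chess through self-play and went on to beat a leading chess engine. Related self-play methods have produced programs that beat top professionals at poker. Reinforcement learning is also used to train robots to walk, to help autonomous vehicles make driving decisions, and to build software agents that trade stocks.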

It’s a field full of drama and discovery—machines stumbling, failing, and finally succeeding in feats that once seemed the exclusive domain of human intelligence.

Deep Learning: The Neural Revolution

If machine learning is the broad concept of computers learning from data, deep learning is its most dazzling subfield. Deep learning uses artificial neural networks—layer upon layer of interconnected “neurons”—to detect complex patterns in data.

The inspiration comes from the human brain, with its billions of neurons communicating through electrical signals. A single artificial neuron is a simple mathematical function, but stack enough of them together, and they become astonishingly powerful.
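A small sketch can show both halves of that claim. The `neuron` function below is a single artificial neuron: a weighted sum passed through a sigmoid. On its own it cannot compute "one input or the other, but not both" (the XOR function), yet three such neurons wired in two layers can. The weights here are hand-picked for illustration rather than learned, which is the part a real training procedure would automate.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs, squashed by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def tiny_network(a, b):
    """Three neurons in two layers; weights are hand-picked, not learned."""
    either = neuron([a, b], [10.0, 10.0], -5.0)    # fires if a OR b is on
    both = neuron([a, b], [10.0, 10.0], -15.0)     # fires only if a AND b are on
    # Output neuron: fires when "either" is on but "both" is off.
    return neuron([either, both], [10.0, -10.0], -5.0)


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, round(tiny_network(a, b)))
```

Rounded to 0 or 1, the network outputs 0, 1, 1, 0 for the four input pairs. Deep networks apply the same principle at vastly larger scale, with millions or billions of weights adjusted automatically from data.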

Deep learning has exploded since the early 2010s, driven by massive data sets and high-performance GPUs. Its results have often seemed magical.

These networks can look at a photograph and identify faces, flowers, or street signs. They can transcribe spoken words into text, translate languages in real time, even generate stunningly realistic images and videos. Deep learning systems compose music, write poetry, and create paintings reminiscent of Van Gogh.

One of the breakthroughs was the 2012 ImageNet competition, where a deep convolutional neural network known as AlexNet beat the runner-up by a wide margin in image recognition accuracy. Suddenly, machines could see.

Natural Language and the Dawn of Conversational AI

Among deep learning’s triumphs is natural language processing (NLP)—the art of teaching machines to read, understand, and generate human language.

A decade ago, voice assistants were laughably bad, mishearing names and bungling simple commands. Today, systems like ChatGPT and its successors hold conversations that feel eerily human. They write essays, summarize articles, answer questions, and even crack jokes.

These models don’t “understand” language as humans do. They’re statistical engines trained on massive text corpora, predicting which words are likely to follow others. Yet the results are often uncanny.
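The core idea of predicting the next word can be illustrated with a drastically simplified stand-in: a bigram model that just counts which word follows which. Modern language models use large neural networks rather than raw counts, so this is only a sketch of the principle. The corpus and the helper names `train_bigrams` and `next_word_probabilities` are made up for the example.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus; real models train on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish ."


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def next_word_probabilities(model, word):
    """Turn the follow-up counts for one word into probabilities."""
    counts = model[word]
    total = sum(counts.values())
    return {candidate: n / total for candidate, n in counts.items()}


model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))
# prints {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

Even this toy model has "learned" that "cat" is the likeliest word after "the" in its tiny world, without any notion of what a cat is.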

NLP is revolutionizing everything from customer support to creative writing. Doctors use AI tools to draft clinical notes. Journalists rely on AI for research and summarization. Language barriers crumble as translation models improve.

It’s a field still filled with challenges—bias, misinformation, context limitations—but it’s one of the clearest signs that machines are learning skills once thought uniquely human.

The Invisible Hand of Machine Learning

Many people imagine machine learning as distant science fiction, but it’s already deeply woven into everyday life—often invisibly.

When you unlock your phone with your face, machine learning recognizes your features. When you shop online, recommendations spring forth, guessing your desires. Social media feeds shape themselves to your habits. Your emails get filtered for spam. Your photos get labeled and sorted automatically.

Credit card companies scan for fraud using machine learning models. Hospitals deploy algorithms to flag patients at risk of sepsis. Banks assess creditworthiness with predictive models. Netflix suggests what you’ll love next. Google Maps forecasts traffic. The list is endless.

Machine learning doesn’t merely automate tasks—it augments human capability. It helps radiologists spot tumors, helps lawyers sift through documents, helps scientists discover new molecules.

Yet its silent presence raises profound questions about how much we trust algorithms to make decisions that affect our lives.

Bias, Ethics, and the Ghost in the Machine

As machine learning systems grow more powerful, they inherit the biases of the data they’re trained on. Facial recognition systems have struggled with racial disparities. Hiring algorithms have reflected gender biases. Predictive policing tools have raised civil rights concerns.

An algorithm is only as fair as the data—and society—that shaped it. Machines don’t think like humans, but they can absorb our prejudices.

There’s also the question of transparency. Many deep learning models operate as “black boxes,” producing predictions without easily explainable reasoning. This is acceptable for recommending movies—but deeply problematic when deciding who receives bail, who gets a loan, or who is flagged for medical treatment.

Researchers are working feverishly on “explainable AI” to open these black boxes. Regulators are stepping in, crafting laws to govern how AI can be deployed, demanding fairness, accountability, and transparency.

The question Turing posed echoes more urgently than ever: Can machines think? And if so… how should we treat them—and each other?

The Creativity of Machines

Perhaps the most astonishing turn in machine learning has been its leap into creativity. AI now paints pictures, writes music, crafts poetry, and even generates stories. Systems like DALL-E conjure fantastical images from text descriptions. Music models compose melodies that listeners can struggle to tell apart from human works.

Some artists fear replacement. Others see new tools for creative expression. The line between human imagination and machine generation is blurring, challenging age-old notions of authorship and originality.

Are these machines truly creative? Or are they mimicking patterns learned from human art? Perhaps both truths coexist. What’s undeniable is that machines are now participants in the creative dialogue of human culture.

The Road Ahead: Intelligence Unbound

We stand at an extraordinary threshold. Machine learning has given computers astonishing new senses: sight, hearing, speech, language. It has armed them with the ability to learn from experience, to navigate complexity, to create.

Yet we are only at the beginning. Researchers dream of machines that understand causality rather than mere correlation. Models that learn with less data, more efficiently. Systems that explain themselves, adapt in real time, and collaborate with humans as partners rather than tools.

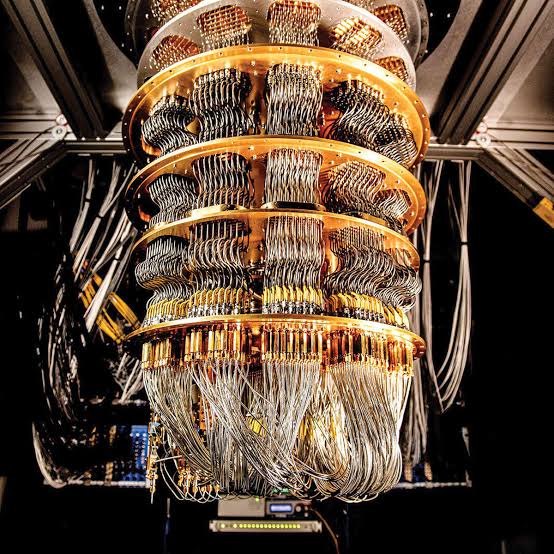

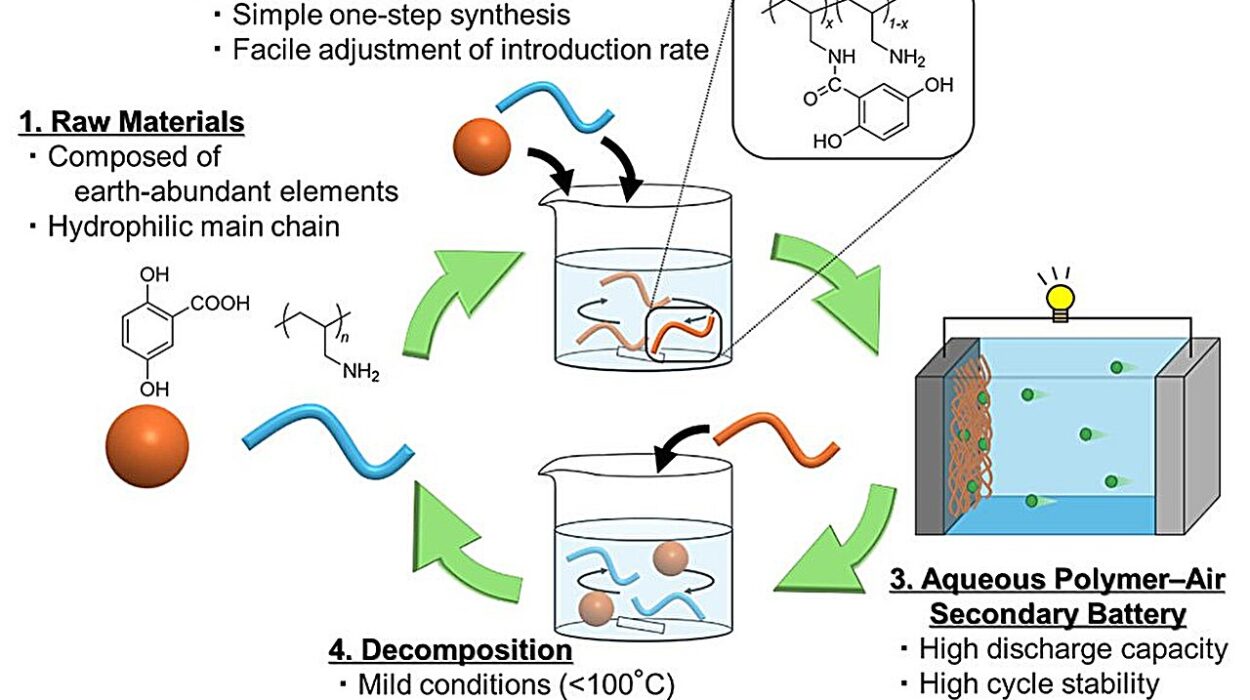

Quantum computing may one day speed up certain machine learning tasks, though practical advantages over classical machines have yet to be demonstrated. Neuromorphic chips aim to mimic the architecture of the human brain, bringing energy-efficient learning to devices we carry in our pockets.

The future glimmers with possibility—and with risk. Machine learning could unlock cures for diseases, solutions for climate change, pathways to a more just and sustainable world. It could also deepen inequalities, magnify misinformation, and place unprecedented power in the hands of the few.

The story of machine learning is not merely technological. It’s human. It’s a mirror reflecting our aspirations and our flaws. We are teaching machines to learn—and in the process, we are learning about ourselves.

A World Forever Changed

Alan Turing’s question still resonates like a distant bell. Can machines think? The answer may depend on how we define thinking, on what we demand of intelligence. Machines do not dream. They do not feel love, fear, or wonder. Yet they learn. They surprise us. They reveal truths we could never find alone.

The true miracle of machine learning is not that machines learn—it’s that they teach us new ways of seeing the world. They whisper patterns hidden in chaos. They illuminate connections invisible to the naked eye.

We have become co-authors of a new chapter in the story of intelligence, one where silicon and carbon learn side by side. The questions ahead are vast, the stakes high. How much will we delegate to these new minds? How will we ensure they serve humanity rather than the reverse?

The journey of machine learning is still young. And if there’s one certainty, it’s that the machines are not done learning—and neither are we.