Imagine this: you describe a friend planting a flag on Mars or diving into a black hole, and a computer instantly conjures the image. No human artist involved. No camera. Just words becoming pixels. This seemingly magical feat—instant AI-generated images from text—is no longer science fiction. It’s science fact, already powered by advanced neural networks and sprawling datasets. And it’s set to become a billion-dollar industry by the end of the decade.

But beneath the polished outputs of platforms like DALL·E or Midjourney lies a deeply resource-intensive process. Training these generative models often takes weeks, sometimes months, requiring millions of images, high-end GPUs, and massive energy consumption. It’s a hidden cost in the race toward artificial creativity. What if there were a way to skip some of that complexity—and still generate images with astonishing precision?

That’s exactly what a group of MIT researchers has done. In a breakthrough that challenges long-held assumptions about how AI systems generate images, the team has shown that it’s possible to generate, manipulate, and reconstruct images without using a traditional generator at all. Their findings, presented at the International Conference on Machine Learning (ICML 2025) in Vancouver, may signal a quieter, leaner revolution in artificial intelligence.

Rethinking the Building Blocks of Images

The foundation of this groundbreaking research began with a deceptively simple question: How do we represent an image using the fewest possible data points without losing its essence?

Traditionally, image tokenizers would slice a 256×256 image into a grid of 16×16 regions. Each region—or token—encodes localized visual information. But in a 2024 paper from the Technical University of Munich and ByteDance, a new concept was introduced: the 1D tokenizer. This powerful neural network doesn’t just break an image into square tiles—it condenses the entire image into just 32 tokens, each represented as a 12-digit binary number.

Think of these tokens as an alien language—a kind of abstract shorthand that tells a machine everything it needs to know about an image. Each 12-digit string can take on 4,096 distinct values. The compression is almost beyond belief. How can just 32 numbers describe an entire robin, a tiger, or a red panda?

That mystery was exactly what Lukas Lao Beyer, a graduate researcher at MIT’s Laboratory for Information and Decision Systems (LIDS), set out to unravel.

The Language of Tokens

Lao Beyer’s curiosity began during a seminar on deep generative models taught by Professor Kaiming He. While others moved on after final grades were issued, he saw the project’s true potential. Teaming up with researchers from MIT and Facebook AI Research, including Tianhong Li, Xinlei Chen, Sertac Karaman, and He himself, the idea grew from a class assignment into a landmark research effort.

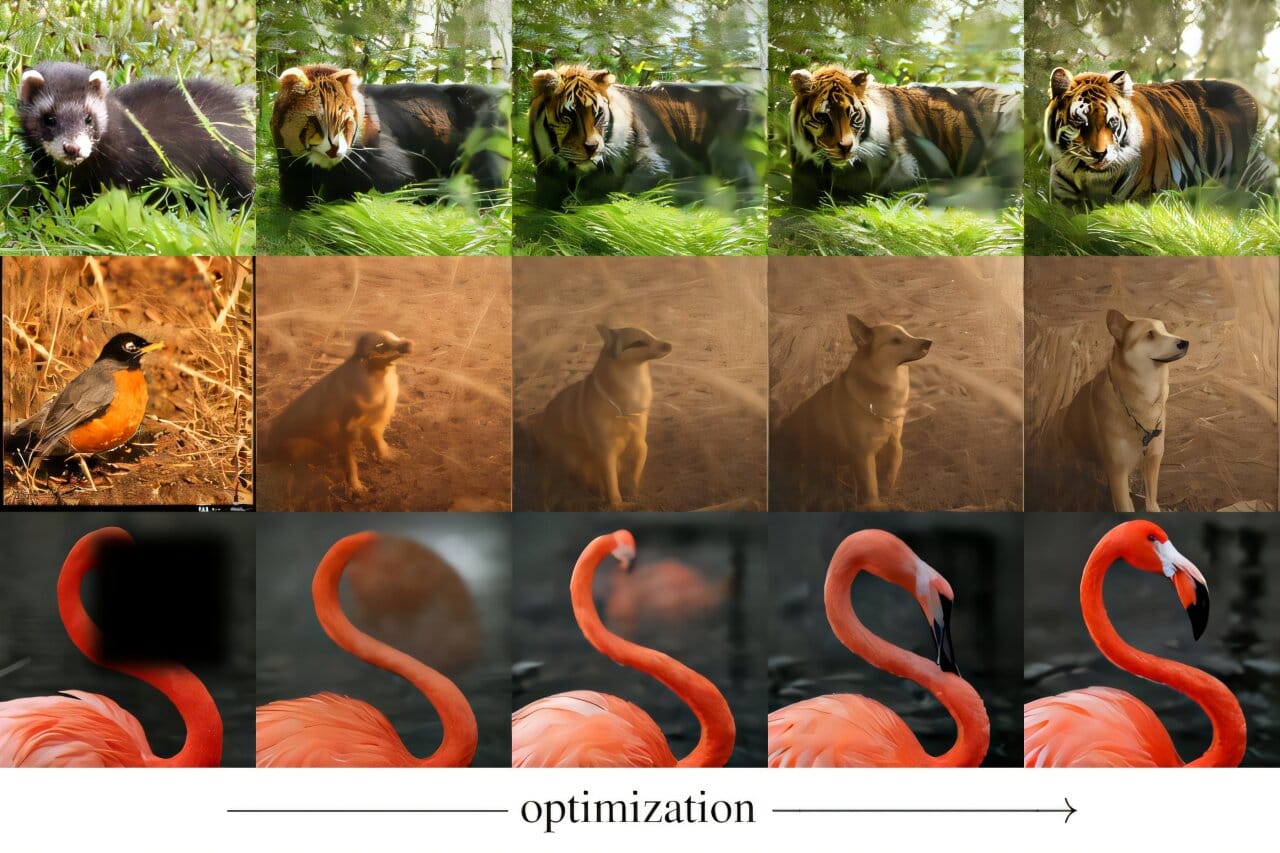

To understand what the tokens were doing, Lao Beyer tried something radical: he started manipulating individual tokens to see how the output image would change. Replace one? The resolution shifted. Swap another? The background blurred. A third altered the orientation of a bird’s head.

No one had ever seen such clear, interpretable transformations from individual token changes before.

“This was a never-before-seen result,” Lao Beyer says, “as no one had observed visually identifiable changes from manipulating tokens.”

The revelation suggested something profound. These compressed tokens weren’t just placeholders. They encoded high-level concepts like sharpness, posture, brightness—even style. And they could be edited.

Goodbye Generator: A New Approach Emerges

Here’s where things got even more astonishing. Conventional image generation requires a tokenizer to compress an image and a generator to turn those tokens into new images. The generator learns to interpret the tokens and imagine new content. Training it is slow, expensive, and cumbersome.

But what if the generator wasn’t needed?

The MIT group discovered they could skip the generator altogether. Instead, they used a detokenizer (essentially a decoder that reverses the compression) and paired it with CLIP, an off-the-shelf neural network developed by OpenAI. CLIP can’t generate images—it simply evaluates how well an image matches a given text prompt.

Using CLIP as a guide, the team started with random tokens—gibberish—and iteratively tweaked them until the resulting image matched a desired prompt. Want a red panda? CLIP nudges the tokens toward panda-ness. A tiger? Same process. The images emerged as the token values converged toward visual alignment with the prompt.

This leap—from raw noise to coherent image, without a traditional generator—could drastically reduce the cost and complexity of image generation systems.

From Red Pandas to Robins: Editing with Precision

The implications of token-level control are staggering. Consider “inpainting”, the act of filling in missing parts of an image. Instead of relying on a trained model to guess what goes where, the team used their token-editing process to reconstruct deleted sections—like patching missing pixels in a photo from memory.

And since each token can be tweaked individually, image editing becomes almost surgical. Change a token here, and suddenly the bird turns its head. Shift one there, and the lighting transforms. It’s like using Photoshop, but without any of the tools—just numbers and smart inference.

“This work redefines the role of tokenizers,” says Saining Xie, a computer scientist at NYU. “It shows that image tokenizers—tools usually used just to compress images—can actually do a lot more.”

The Bigger Picture: Applications Beyond Images

What makes this work so tantalizing is how generalizable it may be. Tokens don’t have to represent images. They could encode actions, behaviors, decisions—any kind of data that can be compressed.

MIT Professor Sertac Karaman, one of the study’s senior authors, suggests that in autonomous robotics, for instance, you could use tokens to represent the possible routes a self-driving car might take. Instead of analyzing images, a system could “tokenize” movement, strategy, or response.

Lao Beyer echoes this vision. “The extreme amount of compression afforded by 1D tokenizers allows you to do some amazing things,” he says. “It opens up doors in fields like robotics, planning, even human-computer interaction.”

In other words, this isn’t just a story about prettier pictures. It’s about smarter machines, faster systems, and simpler tools that could change how we interact with technology.

A Symphony of Existing Tools, Played Differently

One of the most fascinating aspects of this project is that nothing new was invented—at least not in the traditional sense. The team didn’t build a new neural network from scratch. They didn’t invent tokenizers, decoders, or CLIP. Instead, they combined existing tools in a novel way, creating an elegant synergy that yielded unexpected capabilities.

“This work shows that new capabilities can arise when you put all the right pieces together,” Professor He notes. It’s a reminder that in the world of science, innovation doesn’t always mean building something new. Sometimes it means seeing familiar things from a different angle.

The Next Evolution of AI Creativity

AI-generated images already amaze us. They paint dreamscapes, restore ancient ruins, and imagine worlds never seen. But the behind-the-scenes cost—computationally and environmentally—is high. By bypassing the generator and rethinking what tokens can do, the MIT researchers have charted a new course toward efficiency and control.

This approach may one day allow everyone—not just researchers or artists—to build and manipulate digital visuals with nothing more than words and curiosity. Imagine a classroom where children describe a scene and watch it unfold in real time. A surgeon previewing different anatomical layouts. A director reimagining scenes without ever touching a mouse.

The future of image generation, it turns out, might not lie in bigger and more powerful generators—but in leaner, smarter, more interpretable tokens.

And it all started with a class project and a single, burning question: What does a token really mean?

Reference: L. Lao Beyer et al, Highly Compressed Tokenizer Can Generate Without Training, arXiv (2025). DOI: 10.48550/arxiv.2506.08257