In the modern digital world, artificial intelligence is no longer a futuristic dream; it is the silent engine behind everyday miracles. From the voice in your smartphone that understands your casual questions, to the car that navigates city streets without a driver, to the medical system that spots diseases hidden in scans — AI has woven itself into the fabric of human life. But behind every breakthrough, behind every algorithm that learns and adapts, there is a physical foundation: the infrastructure that makes AI possible.

At the center of this foundation are the processing units and the platforms that carry their power to the masses. It is here, in the humming server rooms and vast data centers, that the battles for speed, efficiency, and scalability are fought. Two champions stand out in this race: GPUs and TPUs. And above them looms the vast, seemingly infinite realm of the cloud — the stage upon which modern AI performs.

Understanding AI infrastructure is not just about knowing what hardware does what; it is about grasping the delicate interplay between raw computation, energy, architecture, and accessibility. It is about the unsung engineering feats that allow a model to transform from lines of code into living, breathing intelligence.

The Birth of the GPU Era

For much of computing history, the CPU — the central processing unit — was the undisputed king. Designed for flexibility, CPUs handled everything from running spreadsheets to processing text documents. But when the world’s appetite for graphics exploded in the 1990s, a new breed of processor emerged: the graphics processing unit, or GPU.

Originally, GPUs were created to accelerate the rendering of complex visuals in games and simulations. Unlike CPUs, which have a handful of powerful cores optimized for sequential tasks, GPUs contain thousands of smaller, efficient cores designed to process many operations in parallel. A CPU might excel at solving one problem quickly; a GPU thrives on breaking a problem into a million tiny pieces and solving them all at once.

This parallelism turned out to be more than just a boon for gamers. Around the mid-2000s, researchers realized that the same qualities that made GPUs perfect for rendering shadows and explosions also made them ideal for training machine learning models. Deep learning — with its sprawling networks of interconnected neurons — requires massive amounts of matrix multiplication and vector operations. GPUs were tailor-made for such workloads.
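To see why this kind of arithmetic suits a parallel processor, consider a minimal sketch of one neural-network layer written as a matrix multiplication. The function name `matmul` and the tiny `inputs` and `weights` values are made up for illustration. Real frameworks run the same idea on optimized GPU kernels rather than Python loops.

```python
# Illustrative sketch only: a tiny dense layer computed as a matrix product.
# matmul, inputs and weights are hypothetical names, not part of any library.

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as nested lists."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            # Each output cell needs only row i of a and column j of b.
            # No cell depends on another, so all n * m cells could be
            # computed at the same time on parallel hardware.
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out

inputs = [[1.0, 2.0], [3.0, 4.0]]               # 2 examples, 2 features each
weights = [[0.5, -1.0, 0.0], [1.5, 2.0, 1.0]]   # 2 features feeding 3 neurons
activations = matmul(inputs, weights)
print(activations)
```

Here the two loops visit six independent cells one after another. A GPU would hand each cell, or each tile of cells, to a different group of cores.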

When NVIDIA introduced its CUDA platform in 2006, allowing developers to program GPUs for general-purpose computing, the floodgates opened. The GPU became the workhorse of AI research, accelerating progress at a pace that CPUs could no longer match.

TPUs: A New Contender Emerges

As AI workloads grew, even GPUs began to show their limitations. The complexity and size of deep neural networks were escalating at a rate that strained even the most advanced parallel processors. In response, Google built the TPU, or Tensor Processing Unit, which it announced publicly in 2016 after first deploying it in its own data centers.

Unlike GPUs, which were born in the world of graphics and adapted for AI, TPUs were designed from the ground up specifically for machine learning. Their architecture is optimized for tensor operations — the mathematical backbone of deep learning models. By focusing solely on the needs of AI, TPUs can deliver exceptional performance per watt, enabling faster training and inference with lower energy consumption.

One of the most remarkable aspects of TPUs is their integration into Google’s cloud infrastructure. Rather than selling TPUs as standalone hardware, Google first used them in its own data centers and then offered them to outside users as a cloud service. This meant that researchers and developers could tap into TPU power without ever touching the physical hardware, accessing it instead through APIs and cloud platforms.

This move was both technological and strategic. It blurred the line between hardware and service, making AI infrastructure not just a product to be bought, but a utility to be consumed on demand.

Why Parallelism Matters

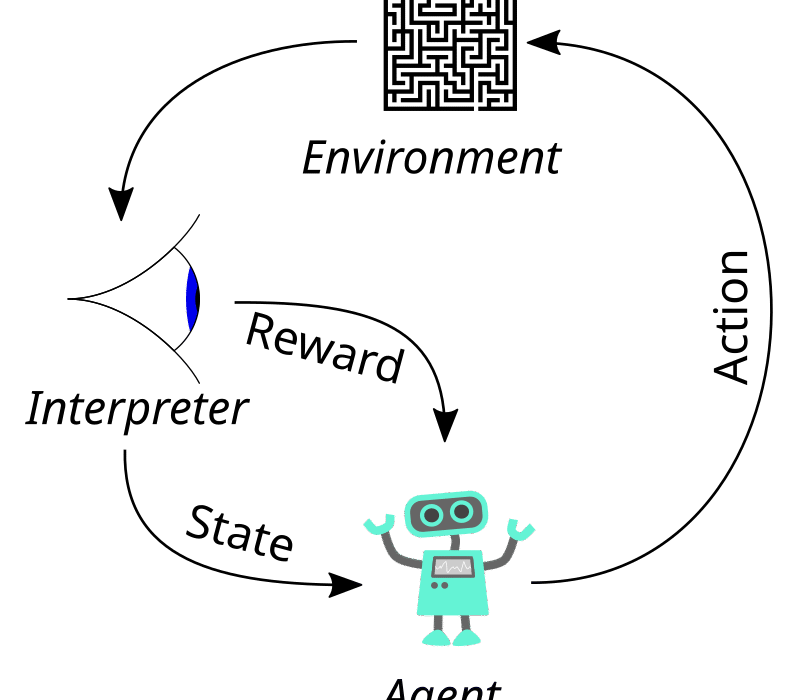

To understand why GPUs and TPUs are so critical, it helps to step into the shoes of a neural network during training. Imagine a model with billions of parameters — tiny knobs that must be adjusted just so to produce accurate predictions. Each adjustment requires calculating gradients, updating weights, and running through enormous datasets.
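The knob-turning described above can be sketched with a single knob. The example below assumes a toy model `y = w * x` and a mean-squared-error loss, both chosen only for illustration. The names `gradient_step`, `xs`, and `ys` are hypothetical. A real network repeats the same calculate-gradient, update-weight cycle across billions of parameters.

```python
# Illustrative sketch only: gradient descent on a one-parameter model.

def gradient_step(w, xs, ys, learning_rate):
    """Return the weight after one gradient-descent update for y = w * x."""
    n = len(xs)
    # Derivative of mean((w*x - y)^2) with respect to w is mean(2*(w*x - y)*x).
    grad = sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n
    return w - learning_rate * grad

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]   # produced by a "true" weight of 2.0
w = 0.0                # start with the knob in the wrong position
for _ in range(50):
    w = gradient_step(w, xs, ys, learning_rate=0.05)
print(w)               # ends up very close to 2.0
```

Each pass over the data nudges `w` toward the value that fits it. The per-example terms inside the sum are independent of one another, which is the part an accelerator spreads across its cores.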

If a CPU were to handle this task alone, it would be like trying to build a skyscraper brick by brick with a single worker. A GPU or TPU, by contrast, is like having thousands of workers, each laying bricks at the same time, each perfectly coordinated.

Parallelism is not just about speed; it’s about feasibility. Some modern AI models — think of GPT-scale language models or large-scale image recognition systems — would take years to train on CPUs alone. With GPU or TPU acceleration, that time shrinks to weeks or even days.
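A rough calculation shows how years can turn into days. Every number in the sketch below is a made-up assumption, not a measurement of any real model or chip: a training run needing 1e21 floating-point operations, a CPU sustaining 1e12 operations per second, and 64 accelerators each peaking at 1e14 operations per second but running at 40 percent utilization.

```python
# Illustrative sketch only: training time = total work / sustained throughput.
# All figures are hypothetical placeholders chosen to make the arithmetic clear.

SECONDS_PER_DAY = 86_400

def training_days(total_flops, device_flops_per_sec, num_devices, utilization=1.0):
    """Estimate wall-clock days to perform total_flops of work."""
    effective_flops_per_sec = device_flops_per_sec * num_devices * utilization
    return total_flops / effective_flops_per_sec / SECONDS_PER_DAY

total_flops = 1e21                                    # hypothetical training budget
cpu_days = training_days(total_flops, 1e12, 1)        # one hypothetical CPU
accel_days = training_days(total_flops, 1e14, 64, utilization=0.4)

print(f"CPU alone: about {cpu_days / 365:.1f} years")
print(f"64 accelerators: about {accel_days:.1f} days")
```

Under these assumptions the single CPU needs roughly three decades, while the accelerator cluster finishes in under a week. The utilization factor matters: real clusters rarely sustain their peak rate.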

This compression of time changes the rhythm of AI development. Researchers can experiment more, iterate faster, and refine models in a fraction of the time. Innovation accelerates, and with it, the possibilities for AI in the real world.

The Cloud as the Great Equalizer

In the early days of AI, high-performance computing was the domain of elite institutions and tech giants. Training a deep learning model required racks of expensive hardware, specialized cooling systems, and a team of engineers to maintain it all. For startups, independent researchers, or students, these resources were out of reach.

The rise of cloud computing changed everything. Cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud offered access to GPU and TPU resources over the internet, billed by the hour or even by the minute. Suddenly, anyone with a credit card and an idea could spin up a cluster of GPUs, train a model, and shut it down when finished — no hardware ownership required.

The cloud not only democratized access but also introduced flexibility. Need to train a model overnight? Scale up to dozens of GPUs in minutes. Done training and just need to serve predictions? Scale back to a single lightweight instance. This elasticity transformed AI from a capital-intensive endeavor into something agile, adaptable, and global.
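The economics of elasticity come down to simple multiplication. The sketch below uses invented hourly rates (`GPU_RATE` and `SMALL_RATE` are placeholders, not any provider's prices) to compare renting a cluster only for an overnight run against keeping the same cluster switched on all month.

```python
# Illustrative sketch only: pay-per-use cost under hypothetical hourly rates.

def rental_cost(instances, hours, hourly_rate):
    """Cost of renting a number of identical instances for a number of hours."""
    return instances * hours * hourly_rate

GPU_RATE = 3.00      # hypothetical price per GPU instance per hour
SMALL_RATE = 0.10    # hypothetical price per lightweight instance per hour
HOURS_PER_MONTH = 24 * 30

overnight_training = rental_cost(32, 8, GPU_RATE)             # 32 GPUs for 8 hours
monthly_serving = rental_cost(1, HOURS_PER_MONTH, SMALL_RATE) # 1 small instance
always_on_cluster = rental_cost(32, HOURS_PER_MONTH, GPU_RATE)

print(f"Elastic: {overnight_training + monthly_serving:.2f}")
print(f"Always on: {always_on_cluster:.2f}")
```

With these placeholder rates, scaling up for one night and then back down costs a small fraction of running the full cluster around the clock.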

The Hidden Costs of Speed

But while the cloud and specialized processors have fueled a golden age of AI, they have also introduced new challenges. High-performance computing consumes enormous amounts of energy. By one widely cited 2019 estimate, training a single large language model together with an extensive architecture search emitted roughly as much carbon as five cars do over their lifetimes.

This has sparked an urgent conversation about sustainable AI. Data centers are investing in renewable energy, more efficient cooling systems, and chip designs that maximize performance per watt. Researchers are exploring model architectures that achieve the same accuracy with fewer parameters, reducing computational load.
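The energy question can be framed as back-of-the-envelope arithmetic. The sketch below multiplies device count, power draw, and run time, then applies a power usage effectiveness (PUE) factor for cooling and other facility overhead, and finally a grid carbon intensity. Every input value is a hypothetical placeholder, and `training_footprint` is a name invented for this example.

```python
# Illustrative sketch only: rough energy and carbon estimate for a training run.
# All inputs are hypothetical; real values vary widely by chip, site and grid.

def training_footprint(devices, watts_per_device, hours, pue, kg_co2_per_kwh):
    """Return (facility energy in kWh, emissions in kg CO2) for one run."""
    it_energy_kwh = devices * watts_per_device * hours / 1000.0
    facility_energy_kwh = it_energy_kwh * pue   # adds cooling and overhead
    return facility_energy_kwh, facility_energy_kwh * kg_co2_per_kwh

energy_kwh, co2_kg = training_footprint(
    devices=64,
    watts_per_device=400.0,   # hypothetical average draw per accelerator
    hours=240.0,              # a ten-day run
    pue=1.2,                  # hypothetical data-center overhead factor
    kg_co2_per_kwh=0.4,       # hypothetical grid carbon intensity
)
print(f"{energy_kwh:.0f} kWh, {co2_kg:.0f} kg CO2")
```

The formula makes the levers visible: better performance per watt shortens `hours`, better cooling lowers `pue`, and renewable power lowers the carbon intensity.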

There is also the matter of cost — not just in dollars, but in accessibility. While the cloud has opened doors, the price of large-scale GPU or TPU usage can still be prohibitive for many, creating a gap between those who can afford to experiment at scale and those who cannot. Balancing innovation with equity remains one of the defining challenges of AI infrastructure.

Scaling Beyond Hardware

AI infrastructure is not just about the chips themselves; it’s about the orchestration of entire systems. Training a large model might require hundreds or even thousands of GPUs working together. Coordinating their work involves sophisticated software frameworks, distributed training algorithms, and high-speed networking.
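One common coordination pattern is data parallelism: each processor computes gradients on its own slice of the data, and the results are averaged before every weight update. The sketch below simulates two workers in plain Python, again assuming a toy model `y = w * x`. The names `local_gradient` and `all_reduce_mean` are invented here as stand-ins for what a real framework and network would provide.

```python
# Illustrative sketch only: simulated data-parallel gradient averaging.

def local_gradient(w, shard):
    """Mean-squared-error gradient for y = w * x on one worker's data shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for the collective operation that averages across workers."""
    return sum(values) / len(values)

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
shards = [data[0:2], data[2:4]]   # two simulated workers, equal-sized shards
w = 0.0

worker_grads = [local_gradient(w, shard) for shard in shards]
synced_grad = all_reduce_mean(worker_grads)
full_batch_grad = local_gradient(w, data)

print(worker_grads, synced_grad, full_batch_grad)
```

With equal-sized shards, the averaged gradient matches what a single machine would compute on the whole batch. The averaging step is the part that leans on high-speed networking, since every worker must exchange its gradients at every update.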

Frameworks like TensorFlow and PyTorch have evolved to handle this complexity, abstracting away much of the pain of parallelization. Meanwhile, high-speed interconnects such as NVIDIA’s NVLink and the dedicated links between chips in Google’s TPU pods move data between processors quickly, so that communication is less likely to become the bottleneck.

At the cutting edge, researchers are exploring specialized hardware accelerators beyond GPUs and TPUs — custom ASICs, neuromorphic chips, and even quantum processors. The future of AI infrastructure will likely be a diverse ecosystem of specialized tools, each tuned for different tasks.

The Human Side of Infrastructure

It is easy to think of AI infrastructure in purely technical terms — racks of machines, heat maps of processing loads, charts of performance benchmarks. But behind every watt of power, every petaflop of computation, there are human stories.

There is the startup founder who trains a breakthrough model on rented cloud GPUs, turning a weekend hackathon project into a world-changing product. There is the scientist in a developing country who uses cloud TPUs to analyze climate data, bringing global-scale tools to local problems. There is the engineer who spends months shaving milliseconds off inference times, knowing that those milliseconds mean a better experience for millions of users.

AI infrastructure is not just a cold assembly of metal and silicon; it is a living bridge between human imagination and machine capability. It is the invisible scaffolding that holds up the great cathedral of modern artificial intelligence.

A Future Written in Silicon and Clouds

Looking forward, the trajectory of AI infrastructure is both thrilling and uncertain. The hunger for more computational power shows no sign of slowing. Models grow larger, datasets expand, and new applications demand ever-faster inference.

At the same time, there is growing awareness that raw power is not enough. Efficiency, sustainability, and accessibility must guide the next generation of infrastructure. Cloud platforms will continue to evolve, perhaps becoming more decentralized, perhaps integrating with edge computing to bring AI closer to where it is needed.

GPUs will grow faster, TPUs will grow smarter, and entirely new paradigms may emerge — processors inspired by the human brain, or architectures built to run quantum algorithms. But whatever form the future takes, the core truth will remain: AI is only as powerful as the infrastructure that supports it.

And in that infrastructure lies a story not just of engineering, but of human ambition — our relentless drive to build machines that learn, adapt, and understand, and to give them the stage upon which to change the world.