It begins in flickers and hums. In the darkness of arcades, phosphorescent dots chase each other across curved glass screens. In living rooms, square shapes tumble over each other on buzzing television sets. It’s the 1970s, and video games are still a curiosity, their graphics a language of blocks and lines, abstract and primitive.

Yet behind those blocks, a promise whispers—a promise that one day, games will look as real as life.

In those early days, no one mistakes a Pong paddle for a tennis racket or a Space Invader for an alien lifeform. But it doesn’t matter. Players feel the thrill anyway, filling the gaps with imagination. The first games are simple, raw experiences, but their magic lies not in how they look but in how they feel.

Still, the dream persists. Somewhere, in every developer’s mind, a single question glows like neon: What if the worlds we build could look real?

That question will drive an entire industry forward for the next fifty years.

Silicon and Visionaries

In 1972, a young electrical engineer named Nolan Bushnell co-founds Atari with Ted Dabney and releases Pong, a square ball bouncing between two paddles. It’s crude, yet people crowd arcades, mesmerized. As the 1970s become the 1980s, game developers push hardware to conjure more color, more motion, more detail. They squeeze every bit of power from silicon chips, chasing realism in a medium built on abstractions.

But technology moves slowly. Early consoles like the Atari 2600 and Intellivision can barely draw recognizable humans, let alone simulate reality. Sprites—tiny 2D images—stand in for characters. Backgrounds are flat tapestries scrolling past in loops.

Yet even here, the seeds of photorealism are planted. Visionaries believe games could someday rival film. They dream of polygons and perspective, of textures and lighting that mimic the real world.

Meanwhile, in university labs and tech companies, researchers experiment with computer graphics. They create wireframe landscapes and 3D models, imagining a future when computing power might be fast enough to bring such scenes into games.

Polygons Arrive

The 1980s come alive with bold colors and pixel art that feels magical despite its simplicity. Games like Super Mario Bros. and The Legend of Zelda craft entire worlds from tiny pixels, proof that good art direction transcends technical limits.

But beneath the colorful surfaces, the industry edges closer to 3D. In 1982, Sega’s SubRoc-3D uses stereoscopic effects to give depth to flat images. It’s a novelty rather than true 3D, but players taste the future.

True 3D demands a new kind of rendering: polygons. Instead of painting with pixels, developers begin to build models from triangles, calculating how light and perspective transform each shape. It’s math-heavy, resource-hungry work. The earliest polygon games look skeletal—angular wireframes rotating in space.
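To make “calculating how perspective transforms each shape” concrete, here is a minimal sketch of perspective projection. It assumes a simple pinhole camera at the origin looking down the +z axis; real engines use 4×4 projection matrices, clipping, and rasterization, all omitted here. Dividing by depth is what makes the same triangle shrink as it moves away.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def project_point(p: Vec3, focal_length: float = 1.0) -> Vec2:
    """Project a camera-space point onto the image plane (pinhole model).

    Assumes the camera sits at the origin looking down +z. Dividing x and y
    by depth z is what makes distant objects appear smaller.
    """
    x, y, z = p
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


def project_triangle(tri: List[Vec3], focal_length: float = 1.0) -> List[Vec2]:
    """Project each vertex; a rasterizer would then fill the 2D triangle."""
    return [project_point(v, focal_length) for v in tri]


near = [(-1.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 1.0, 2.0)]
far = [(x, y, z * 2) for x, y, z in near]  # same triangle, twice as far away

print(project_triangle(near))  # [(-0.5, 0.0), (0.5, 0.0), (0.0, 0.5)]
print(project_triangle(far))   # [(-0.25, 0.0), (0.25, 0.0), (0.0, 0.25)]
```

Doubling the distance halves the triangle’s on-screen size, which is the basic cue early polygon games relied on to suggest depth.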

Titles like Battlezone (1980) and I, Robot (1983) show off the possibilities. Battlezone drops arcade players into wireframe vector landscapes that hint at solid worlds, and I, Robot goes further with filled, flat-shaded polygons. Even in their crude forms, polygons signal a coming revolution.

The Fifth Generation: Stepping Into Depth

By the mid-1990s, the gaming industry is ready for a leap. Silicon is getting faster, cheaper, and more powerful. Sony’s PlayStation and Sega’s Saturn, soon joined by the Nintendo 64, usher in what fans later call the “fifth generation” of gaming.

Here, 3D graphics break free from lab experiments and enter living rooms. Instead of flat sprites, characters and environments become sculpted shapes, textured and shaded. For many players, it’s their first experience navigating fully three-dimensional space.

Yet photorealism remains distant. Early 3D games look blocky, angular, and sometimes grotesque. Faces are flat, textures are muddy, and motion is stiff. But the industry refuses to turn back. Developers pour time and money into mastering 3D tools, learning to build digital models and animate them convincingly.

Games like Tomb Raider and Final Fantasy VII become icons of the era. Despite low polygon counts and blurry textures, they evoke vast worlds and emotional storytelling. Players glimpse realism just beyond the jagged edges.

PC Gaming and the Rise of GPUs

Meanwhile, on personal computers, a silent revolution is brewing. In the mid-1990s, companies like 3dfx and NVIDIA introduce dedicated 3D graphics accelerators, the forerunners of what NVIDIA would market in 1999 as the first GPU, or graphics processing unit. These chips take the heavy lifting of rendering away from the CPU, allowing for smoother, faster visuals.

The impact is seismic. Suddenly, games like Quake, especially in its hardware-accelerated GLQuake release, can render complex 3D environments in real time. Light sources cast shadows, filtered textures wrap seamlessly around models, and movement feels fluid.

Gamers marvel at effects like dynamic lighting, colored fog, and reflections. Although the results are far from photorealistic, they’re a giant step forward. For the first time, developers can chase realism without sacrificing speed.

With each new GPU generation, graphics improve. In Unreal, architecture soars to gothic heights, bathed in shimmering light. In Half-Life, players wander industrial corridors, where steam hisses from pipes and enemies ambush from shadows. The line between game worlds and reality begins to blur.

Shaders and Artistic Alchemy

At the dawn of the new millennium, another leap transforms the landscape: programmable shaders. Instead of relying solely on the GPU’s fixed-function pipeline, developers can now write small programs that run for every vertex and pixel, controlling how surfaces react to light.

It’s a quiet revolution, one that empowers artistry. Shaders bring rippling water that refracts what lies beneath, metal that gleams with sharp reflections, and skin that scatters light like flesh. Games no longer settle for flat surfaces; they can mimic the physical properties of real materials.

Titles like Far Cry and Doom 3 showcase normal mapping, where intricate textures appear to have depth even on simple geometry. Rocks look rough. Brick walls feel tangible. Gamers walk through digital worlds that feel alive.
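Normal mapping can be sketched in a few lines. The functions below are the kind of per-pixel calculation a programmable shader performs: decode a normal-map texel into a direction, then apply simple Lambertian (diffuse) lighting. This is a minimal sketch assuming a tangent-space normal map in which a flat surface is stored as roughly (128, 128, 255); the texel values and light direction are illustrative, and a real shader would also transform the normal by the surface’s tangent frame.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def decode_normal(rgb: Tuple[int, int, int]) -> Vec3:
    """Map an 8-bit tangent-space normal-map texel from [0, 255] to a unit vector."""
    r, g, b = (c / 255.0 * 2.0 - 1.0 for c in rgb)
    return normalize((r, g, b))


def lambert(normal: Vec3, light_dir: Vec3) -> float:
    """Diffuse brightness: cosine of the angle between surface normal and light."""
    n = normalize(normal)
    l = normalize(light_dir)
    return max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2])


# The geometry is flat everywhere; only the normal-map texels differ.
light = (1.0, 0.0, 1.0)  # direction toward the light, 45 degrees off the surface normal
for name, texel in [("flat", (128, 128, 255)),
                    ("bump facing light", (218, 128, 218)),
                    ("bump facing away", (38, 128, 218))]:
    print(name, round(lambert(decode_normal(texel), light), 3))
```

The flat texel comes out moderately lit, the bump tilted toward the light is almost fully lit, and the one tilted away is nearly dark, even though the underlying polygon never changes shape.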

Yet the dream of photorealism still teases from the horizon. Characters, despite better models, remain wooden. Animations repeat like clockwork. Eyes, in particular, betray the illusion. They appear lifeless, glassy—an uncanny valley separating virtual humans from the real.

The High-Definition Era

In late 2005, Microsoft launches the Xbox 360, and Sony follows with the PlayStation 3 a year later. These machines usher in high-definition gaming, capable of displaying graphics at 720p and beyond. Suddenly, players see sharper textures, better lighting, and more detailed character models.

Games like Gears of War dazzle with gritty realism, each brick and droplet of water rendered in stunning detail. Motion capture becomes commonplace, giving characters more natural movement. Facial animation improves, though many digital faces still fall short of true humanity.

Developers now obsess over lighting. Techniques like ambient occlusion approximate how nearby geometry blocks ambient light, darkening creases and corners so objects sit convincingly in their surroundings. Post-processing effects like bloom, depth of field, and motion blur create a cinematic feel.

Still, true photorealism remains elusive. Textures may look real in still frames, but animation and physics reveal the seams. Hair behaves like plastic. Fabrics clip through bodies. But players sense they are approaching a threshold.

The Power of Physically Based Rendering

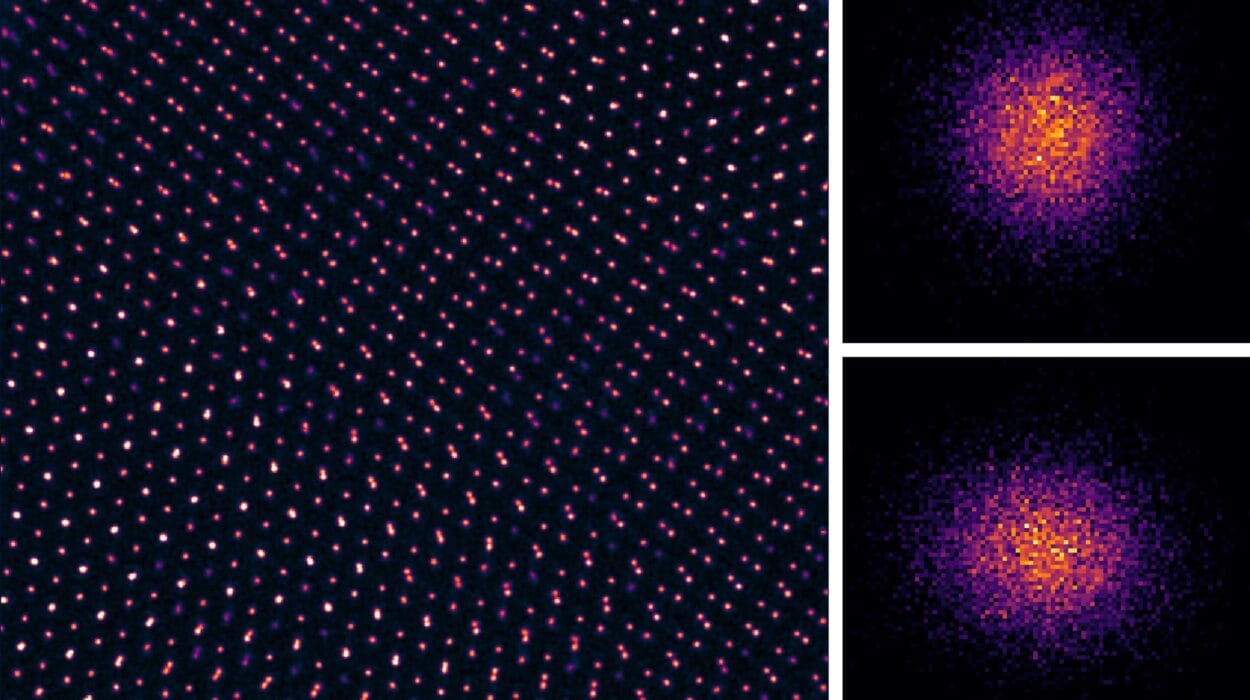

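As the 2010s dawn, a new philosophy takes hold in game development: physically based rendering (PBR). Instead of hand-tuning each material to look right under one particular lighting setup, artists describe surfaces by their physical properties, and the renderer models how light interacts with them.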

With PBR, metal reflects light differently than wood or fabric. Surfaces are described by measurable properties such as roughness, reflectivity, and base color. Because those properties stay fixed while the lighting changes, materials look consistent and believable across lighting conditions. Artists build assets to real-world scales, ensuring that objects react naturally to the game’s illumination.
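One concrete way PBR makes metal and wood respond differently is the Fresnel term, which controls how much light a surface reflects depending on viewing angle. The sketch below assumes the metallic/roughness convention used by glTF 2.0 and many engines, where non-metals get a head-on reflectance (F0) of about 0.04 and metals take F0 from their base color, and it uses Schlick’s approximation. The color values are illustrative, not measured data, and a full PBR shader would also model roughness with a microfacet distribution.

```python
from typing import Tuple

RGB = Tuple[float, float, float]

# Assumed metallic/roughness convention: non-metals (wood, stone, plastic)
# reflect roughly 4% of light head-on, regardless of their color.
DIELECTRIC_F0 = 0.04


def base_reflectance(base_color: RGB, metallic: float) -> RGB:
    """Head-on reflectance F0: blend from 0.04 toward the base color as metallic rises."""
    return tuple(DIELECTRIC_F0 * (1.0 - metallic) + c * metallic for c in base_color)


def fresnel_schlick(f0: RGB, cos_theta: float) -> RGB:
    """Schlick's approximation: reflectance climbs toward 1.0 at grazing angles."""
    factor = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * factor for f in f0)


# Illustrative base colors (linear RGB), not measured data.
wood = base_reflectance((0.45, 0.30, 0.15), metallic=0.0)
brass = base_reflectance((0.91, 0.78, 0.42), metallic=1.0)

print("wood, head-on: ", fresnel_schlick(wood, cos_theta=1.0))
print("brass, head-on:", fresnel_schlick(brass, cos_theta=1.0))
print("wood, grazing: ", fresnel_schlick(wood, cos_theta=0.0))
```

Seen head-on, wood reflects only a faint, colorless 4%, while brass reflects most of the light tinted by its own color; at grazing angles both approach full reflection. The same parameters produce these behaviors under any light, which is the consistency PBR aims for.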

Games like The Order: 1886 and Star Wars Battlefront showcase PBR’s promise. Players explore environments where brass gleams under gas lamps, leather jackets absorb diffuse glow, and polished marble mirrors the figures crossing it.

Meanwhile, photogrammetry gains popularity. Developers scan real-world objects and environments, transforming photos into detailed 3D models. Forests, cities, and even ancient ruins become stunningly lifelike.

Lighting the Digital World

Throughout gaming history, lighting has been both the greatest challenge and the secret weapon of realism. Early 3D games relied on baked lighting: light maps computed ahead of time and stored as textures on static surfaces. But real life is dynamic. Light bounces, scatters, and changes with the time of day.

In the 2010s, developers explore global illumination techniques that simulate how light reflects and diffuses. Games like Crysis 3 and Metro Exodus push hardware to its limits, calculating how light bounces around rooms, softening shadows and coloring environments.

Yet the true breakthrough comes with real-time ray tracing. For decades, ray tracing has been the holy grail of graphics: tracing the paths of individual light rays as they reflect, refract, and scatter through a scene. But it has always been too slow for games, which must finish each frame in about 16 milliseconds to reach 60 frames per second… until now.
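At its core, ray tracing asks one geometric question over and over: where does this ray first hit something? The sketch below answers it for spheres, then fires a “shadow ray” from a surface point toward a light to decide whether that point is lit. It is a minimal illustration with hypothetical helper names, not how RTX hardware or any engine is organized, and it skips reflection, refraction, and the acceleration structures that make real scenes tractable.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Sphere = Tuple[Vec3, float]  # (center, radius)


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_sphere(origin: Vec3, direction: Vec3, sphere: Sphere) -> Optional[float]:
    """Distance t to the nearest hit along a unit-length ray, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2, a quadratic in t.
    """
    center, radius = sphere
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits behind the origin (and self-hits)
            return t
    return None


def in_shadow(point: Vec3, light: Vec3, spheres: List[Sphere]) -> bool:
    """Fire a shadow ray toward the light; any hit closer than the light blocks it."""
    to_light = sub(light, point)
    dist = math.sqrt(dot(to_light, to_light))
    direction = (to_light[0] / dist, to_light[1] / dist, to_light[2] / dist)
    for s in spheres:
        t = hit_sphere(point, direction, s)
        if t is not None and t < dist:
            return True
    return False


blocker = ((0.0, 5.0, 0.0), 1.0)
floor_point = (0.0, 0.0, 0.0)
print(hit_sphere((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), ((0.0, 0.0, 5.0), 1.0)))  # 4.0
print(in_shadow(floor_point, (0.0, 10.0, 0.0), [blocker]))   # True: light directly above
print(in_shadow(floor_point, (10.0, 10.0, 0.0), [blocker]))  # False: light off to the side
```

A full ray tracer repeats this for millions of pixels and for every bounce, which is why the technique long stayed out of reach for real-time games.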

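In 2018, NVIDIA unveils its GeForce RTX GPUs, with dedicated hardware for accelerating ray tracing in real time. Suddenly, digital scenes fill with reflections, soft shadows, and translucent materials. Glass behaves like glass. Puddles reflect neon signs with mirror-like clarity. At their best, these worlds rival live-action cinematography. Behind the scenes, with only a handful of rays per pixel to spend each frame, games blend ray-traced effects with traditional rasterization and lean on denoising to clean up the result.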

Games like Control and Cyberpunk 2077 become showcases for ray tracing. In Control, reflections shimmer across polished floors and glass partitions; in Cyberpunk 2077, players wander neon-lit streets where light dances across wet asphalt. Even the smallest detail, a glint of sunlight on a gun barrel, screams realism.

Photoreal Faces and Digital Souls

While environments reach staggering realism, human characters remain the final frontier. Players instinctively know when a face is fake. The uncanny valley yawns wide, its depths littered with lifeless digital eyes and robotic smiles.

In the 2020s, developers attack the problem with new tools. Epic Games’ MetaHuman Creator lets artists generate ultra-realistic human models in minutes, complete with skin pores, subtle wrinkles, and facial rigs ready for animation.

Motion capture evolves, capturing not just body movements but tiny facial muscle twitches. Actors don marker-dotted suits and facial rigs, translating real emotion into digital expression. Games like The Last of Us Part II and Hellblade: Senua’s Sacrifice showcase characters who feel alive, their eyes flickering with vulnerability, rage, or sorrow.

Still, realism is not merely technical. It demands artistry. Developers study acting, psychology, and cinematography, learning how subtle gestures bring characters to life. A furrowed brow, a trembling lip—these small details transform pixels into people.

AI and the Future of Graphics

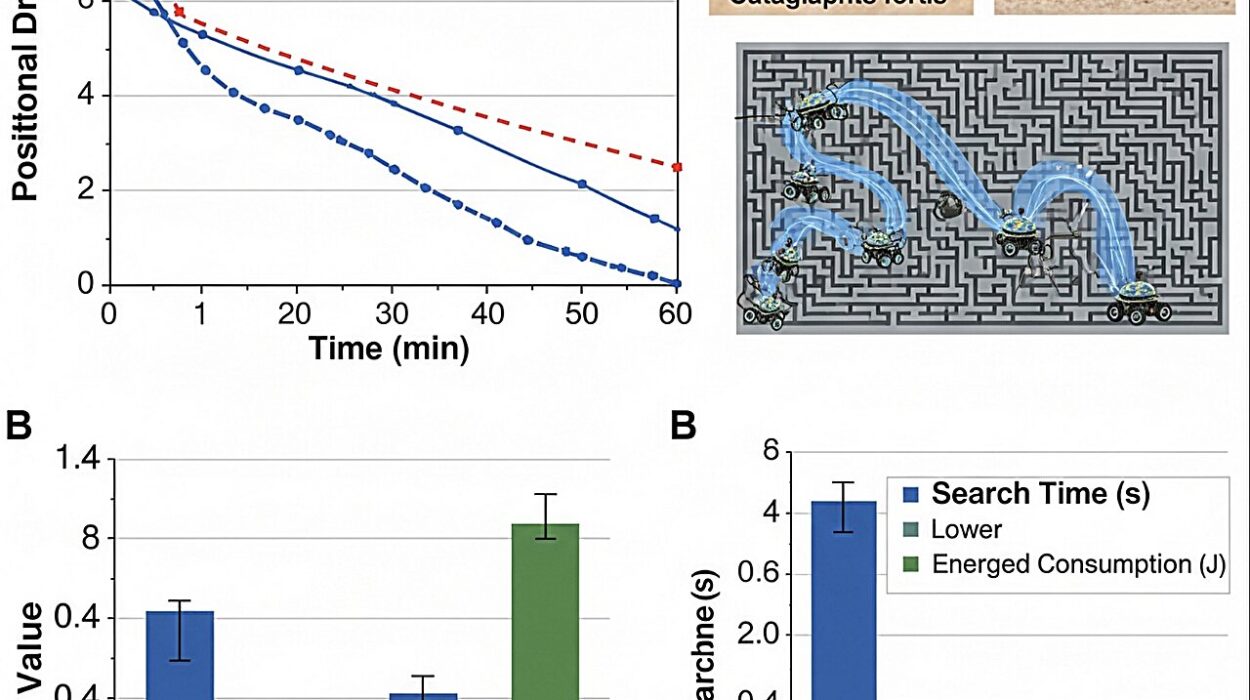

Even as hardware grows more powerful, photorealism increasingly relies on artificial intelligence. Deep learning algorithms denoise ray-traced images, upscale textures, and generate realistic facial animations from voice recordings.

Neural networks can reconstruct 3D scenes from collections of photographs and turn rough sketches into photoreal landscapes. Tools like NVIDIA’s DLSS (Deep Learning Super Sampling) let games render at a lower internal resolution and then reconstruct a sharper, higher-resolution image, so they run faster while still appearing crisp and lifelike.
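The saving behind rendering at a lower internal resolution is simple arithmetic: the GPU shades only a fraction of the output pixels and leaves the upscaler to produce the final image. The sketch below computes that fraction. It does not model DLSS’s neural reconstruction, and the 2560×1440 internal resolution is an assumed example rather than a claim about any particular DLSS mode.

```python
from typing import Tuple

Resolution = Tuple[int, int]


def shaded_fraction(internal: Resolution, output: Resolution) -> float:
    """Share of the output's pixel count that is actually rendered each frame."""
    return (internal[0] * internal[1]) / (output[0] * output[1])


output_4k = (3840, 2160)
internal = (2560, 1440)  # assumed internal resolution: two-thirds of 4K per axis

frac = shaded_fraction(internal, output_4k)
print(f"{frac:.1%} of the output pixel count is rendered before upscaling")
# 44.4% of the output pixel count is rendered before upscaling
```

Because pixel count scales with the square of the per-axis factor, rendering at two-thirds of the width and height cuts the shading work to four-ninths, and rendering at 1920×1080 cuts it to a quarter.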

The future promises generative AI that might design entire worlds on the fly. Imagine a game where each tree, each building, each cloud forms in real-time, procedurally crafted yet indistinguishable from reality.

But AI raises questions. If an algorithm creates photoreal assets, who is the artist? What happens to creative intent in a world of infinite, AI-generated variations? As graphics approach lifelike perfection, developers grapple with the ethics of simulation.

Beyond Realism

In this relentless march toward photorealism, an ironic truth emerges: perfect realism is not always the goal. Some developers deliberately step back from photorealistic fidelity to embrace stylization, abstraction, or artistry.

Games like The Legend of Zelda: Breath of the Wild and Hades choose vibrant palettes and painterly textures, proving that beauty lies in interpretation, not just imitation. Even as technology enables photorealism, artistic direction remains the soul of visual storytelling.

Yet when realism is desired, modern games can deliver scenes so convincing they leave players breathless. Consider the opening of Red Dead Redemption 2: sunlight slants through snow-laden trees, each crystal sparkling. Horses steam in frigid air. Snow compresses beneath boots. The illusion is complete.

Players pause not to fight, but to watch the sun rise.

The Emotional Power of Photorealism

Why do we crave photorealism? It’s not simply for visual spectacle. Realism allows games to evoke deeper emotions. When characters look real, players invest emotionally. When environments mimic life, stories feel urgent, impactful, true.

Photorealism bridges the gap between virtual and physical experience. It blurs the line between game and memory. Players walk away from digital worlds with memories that feel as vivid as those of real places.

Yet photorealism also raises philosophical questions. If virtual worlds look—and feel—indistinguishable from reality, what distinguishes the two? Can digital experiences evoke genuine memories, real empathy, true trauma?

These questions will shape gaming’s next chapters. Graphics have grown photoreal, but the true journey lies in exploring what it means to feel alive in a world of zeros and ones.

From Pixels to Infinity

Fifty years ago, a paddle knocked a block back and forth on a glowing screen. Today, games offer worlds of staggering beauty, where light bounces between surfaces, where skin glows with subsurface scattering, where digital eyes glisten with tears.

Photorealism is no longer a distant dream. It is here, a living reality etched in millions of pixels. But the story isn’t finished. New frontiers wait—virtual reality, neural rendering, AI-driven simulations that might one day birth realities we can scarcely imagine.

And through it all, the same question pulses beneath every pixel, every triangle, every ray of light: What if the worlds we build could look real?

Now, they do. And beyond the horizon, new dreams await.