Imagine building a bridge. You pour months into its design, source the best materials, and assemble a team of experts. The structure looks flawless — elegant lines, gleaming surfaces, everything in place. But here’s the catch: no one tests whether it can hold a truck’s weight before opening it to traffic. The first vehicle drives over, and the bridge collapses.

In machine learning, failing to validate a model is like building that bridge without stress testing it. A model may look great when trained — producing dazzling accuracy on the data it has already seen — but this is only a reflection of its memory, not its intelligence. True intelligence, the kind we want in a predictive system, lies in its ability to generalize to unseen situations.

Model validation is the process of making sure the bridge doesn’t collapse. It’s the heartbeat of machine learning, pumping reliability into every prediction. Without it, even the most advanced algorithms risk being hollow shells — superficially impressive but brittle in the real world.

The Problem of Overfitting and the Mirage of Performance

A model that performs perfectly on its training data may, ironically, be the most dangerous model of all. The danger comes from overfitting, which happens when a model learns the exact details, quirks, and noise of the training set instead of the broader patterns.

Think of a student who memorizes answers to practice questions but never learns the concepts behind them. They ace the practice test but fail miserably on the real exam. Overfitting works the same way: the model has learned the “answers” to the training data rather than the logic of the problem.

Validation steps in to protect us from this illusion. By holding back some data that the model never sees during training, we can estimate how well it generalizes to new information. That estimate is a truer measure of its intelligence than any training score.

The Essence of Model Validation

At its core, validation is about asking a single question: “If I give this model something it’s never seen before, how well will it do?”

To answer this, data scientists split their dataset into at least two parts: one for training and one for testing. The training set teaches the model. The testing set evaluates it, simulating the moment when the model faces real-world, unseen examples.
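The train/test split described above can be sketched in a few lines of plain Python. This is a minimal illustration that assumes the data fits in a list. `holdout_split`, the 80/20 ratio, and the fixed seed are illustrative choices rather than a standard API; libraries such as scikit-learn provide their own `train_test_split`.

```python
import random

def holdout_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of `rows` and hold back `test_fraction` of it for testing.

    `holdout_split` is a hypothetical helper written for this illustration.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_test = round(len(shuffled) * test_fraction)
    # The first n_test shuffled rows form the test set; training never sees them.
    return shuffled[n_test:], shuffled[:n_test]

data = list(range(100))
train, test = holdout_split(data, test_fraction=0.2)
print(len(train), len(test))  # 80 20
```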

But simple train-test splits can be deceptive, especially when datasets are small or imbalanced. This is where cross-validation enters the stage — not as an optional upgrade, but as a foundational method that transforms evaluation from a single snapshot into a panoramic view.

Cross-Validation: The Master Key to Reliable Performance

Cross-validation is like testing a chef’s skills by asking them to cook different meals in different kitchens with slightly different ingredients. You don’t judge them on one dish alone; you judge them on how consistently they can adapt and deliver.

The idea is simple yet profound: instead of making one fixed train-test split, you make several. Each time, you use a different portion of the data for testing, and the rest for training. In the end, you average the results. This way, every data point gets a turn in the testing seat.

This approach doesn’t just give you a number. It gives you confidence. It tells you whether your model’s performance is an accident of the particular data split, or a true reflection of its abilities.

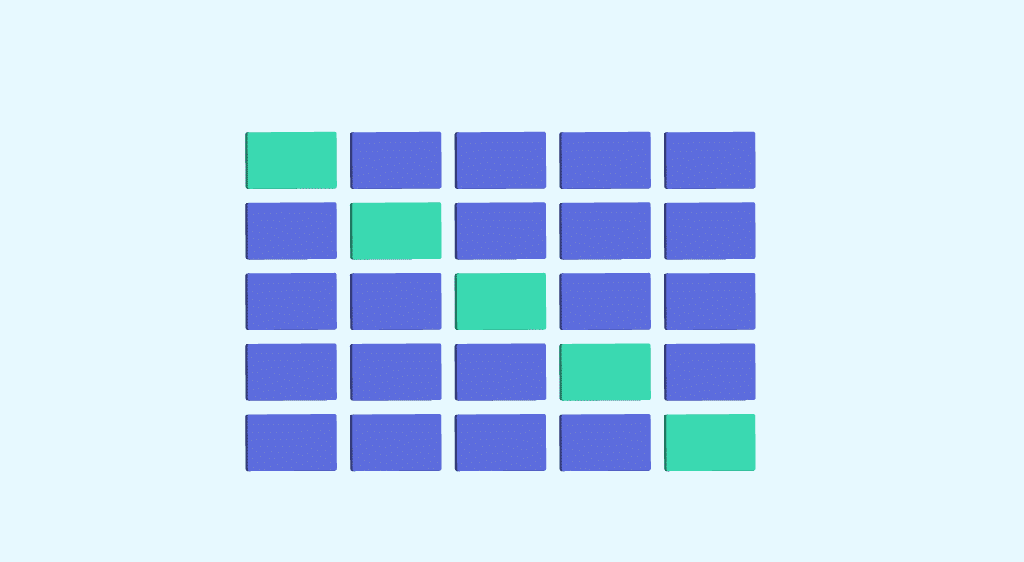

The Anatomy of K-Fold Cross-Validation

In k-fold cross-validation, the dataset is divided into k equally (or nearly equally) sized folds. The model is trained on k-1 folds and tested on the remaining fold. This process repeats k times, with each fold taking its turn as the test set. The results are averaged to produce a single performance metric.

What makes k-fold powerful is its efficiency: every observation is used for testing exactly once and for training in the other k-1 rounds, and no observation sits in both the training and test sets of the same round. This makes it particularly valuable when data is scarce.

For example, with k=5, the data is split into five parts. The model trains on four and tests on the fifth, cycling through until each part has been the test set exactly once. The final score is the mean of the five individual scores — a balanced, comprehensive view of model performance.
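A minimal k-fold sketch in plain Python follows. It assumes a toy "model" that simply predicts the mean of the training targets and scores each fold by mean absolute error. `k_fold_indices`, `cross_validate`, and the other helpers are hypothetical names chosen for illustration.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) for k contiguous folds of nearly equal size."""
    # The first n_samples % k folds get one extra sample.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size

def cross_validate(xs, ys, fit, score, k=5):
    """Train on k-1 folds, score on the held-out fold, and collect k scores."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx]))
    return scores

# Toy model for illustration: always predict the mean of the training targets.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)

def mean_absolute_error(model, xs, ys):
    return sum(abs(y - model) for y in ys) / len(ys)

xs = list(range(10))
ys = [2.0 * x for x in xs]
scores = cross_validate(xs, ys, fit_mean, mean_absolute_error, k=5)
print(scores)                     # [10.0, 5.0, 1.0, 5.0, 10.0]
print(sum(scores) / len(scores))  # 6.2
```

The folds here are contiguous and unshuffled, which is why the scores swing so much on this ordered toy data: the edge folds are the hardest to predict from the rest. In practice the indices are usually shuffled before being cut into folds (unless the data is time-ordered), and the spread of the fold scores is worth reporting next to their mean.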

Stratification: Fairness in Sampling

In many real-world problems, data isn’t evenly distributed among classes. Imagine trying to classify medical images where only 10% show signs of a rare disease. If you randomly split the data without care, you might end up with a test set that has no diseased images at all. A model that never predicts the disease would then score 100% accuracy on that test set, so the number would tell you nothing about the cases that matter most.

Stratified cross-validation ensures that each fold has roughly the same class proportions as the whole dataset. It preserves the underlying distribution, making evaluation fair and representative.
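One simple way to build stratified folds is to group indices by class and deal each class across the folds in turn. The sketch below assumes integer class labels and reuses the 10% rare-disease ratio from the example above. `stratified_folds` is a hypothetical helper, and production splitters typically also shuffle within each class.

```python
from collections import defaultdict

def stratified_folds(labels, k):
    """Split sample indices into k folds that each keep roughly the overall
    class proportions (a simplified sketch with no shuffling)."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    counter = 0
    for label in sorted(by_class):
        # Deal each class's samples round-robin across the folds, like cards,
        # continuing the rotation so fold sizes stay balanced too.
        for idx in by_class[label]:
            folds[counter % k].append(idx)
            counter += 1
    return folds

# Hypothetical data: 100 images, 10 of them (10%) showing the rare disease.
labels = [1] * 10 + [0] * 90
for fold in stratified_folds(labels, k=5):
    positives = sum(labels[i] for i in fold)
    print(len(fold), positives)  # each fold: 20 samples, 2 positives (10%)
```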

When Time Matters: Time Series Validation

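In most cross-validation, we shuffle the data randomly before splitting it into folds. But what if the order of the data carries meaning, as in time series forecasting? If we shuffle financial market data or climate records, we break the temporal structure we’re trying to predict and let the model train on later observations while being tested on earlier ones, borrowing information from a future that no deployed model would have.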

Time series cross-validation respects temporal order: the training set always contains earlier time points, and the test set contains later ones. It’s like forecasting tomorrow using only what was known up to today. In the common expanding-window variant, the training window grows with each split and the model is tested on the block that immediately follows it, simulating how the model would behave in real deployment.
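A growing training window can be sketched as a generator of index ranges. It assumes rows are already sorted by time and that every test block has the same size. `expanding_window_splits` is a hypothetical name, though scikit-learn’s `TimeSeriesSplit` follows a similar pattern by default.

```python
def expanding_window_splits(n_samples, n_splits):
    """Yield (train_idx, test_idx) pairs in which every training index
    precedes every test index, with the training window growing each round.

    Assumes rows are sorted by time; any remainder rows are added to the
    first training window.
    """
    test_size = n_samples // (n_splits + 1)
    for i in range(n_splits):
        test_start = n_samples - (n_splits - i) * test_size
        train_idx = list(range(0, test_start))  # everything before the test block
        test_idx = list(range(test_start, test_start + test_size))
        yield train_idx, test_idx

for train_idx, test_idx in expanding_window_splits(12, n_splits=3):
    print(train_idx, "->", test_idx)
# [0, 1, 2] -> [3, 4, 5]
# [0, 1, 2, 3, 4, 5] -> [6, 7, 8]
# [0, 1, 2, 3, 4, 5, 6, 7, 8] -> [9, 10, 11]
```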

Leave-One-Out Cross-Validation and Its Extremes

When the dataset is tiny, every data point becomes precious. Leave-one-out cross-validation (LOOCV) takes this to the extreme: each fold contains exactly one observation as the test set, and all the rest are used for training. This repeats for every observation.

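While LOOCV maximizes the data available for training (each model sees all but one observation), it requires one model fit per observation, which becomes expensive for large datasets. Its performance estimate can also have high variance: the training sets are nearly identical, so the individual fits are highly correlated and their errors average out less than those from fewer, more distinct folds. Yet, for small and critical datasets, like rare disease studies, it can be invaluable.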
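Below is a minimal LOOCV sketch for a one-variable least-squares line. The data points and the `leave_one_out_errors` helper are hypothetical; the point is the structure, with one fit per observation and that observation held out.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def leave_one_out_errors(xs, ys):
    """Fit n models, each on all points but one, and record the squared
    error on the single held-out point."""
    errors = []
    for i in range(len(xs)):
        slope, intercept = fit_line(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        prediction = slope * xs[i] + intercept
        errors.append((prediction - ys[i]) ** 2)
    return errors

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.2, 2.9, 5.1, 7.0, 8.8, 11.1]  # hypothetical, roughly y = 2x + 1
errors = leave_one_out_errors(xs, ys)
print(len(errors))                # 6 fits, one per observation
print(sum(errors) / len(errors))  # LOOCV estimate of mean squared error
```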

The Bias-Variance Dance

Every model validation strategy lives in the tension between bias and variance. Bias is the error from overly simplistic assumptions — like trying to predict the stock market with a straight line. Variance is the error from overreacting to noise — like chasing every tiny fluctuation in data.

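Cross-validation helps strike a balance by exposing both failure modes: comparing training scores with cross-validated scores reveals a high-bias model (poor on both) and a high-variance model (strong in training, weak and inconsistent on held-out folds). The number of folds involves a trade-off of its own. With few folds, each model trains on a smaller share of the data, so the estimate tends to be pessimistically biased; with many folds, up to LOOCV, that bias shrinks but the variance of the estimate and the computational cost grow. Values of k=5 or k=10 are common compromises, but finding the sweet spot is both science and art.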
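For squared-error loss, the two error sources described in this section appear in a standard decomposition. Assume data are generated as $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$ and $\operatorname{Var}(\varepsilon) = \sigma^2$, with the noise at the test point independent of the training data, and take expectations over training sets and noise at a fixed input $x$:

$$
\mathbb{E}\!\left[\big(y - \hat{f}(x)\big)^2\right]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\!\left[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Cross-validation does not separate these terms; it estimates their total, averaged over inputs, on data the model has not seen.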

Cross-Validation in Hyperparameter Tuning

Choosing a model is one step; tuning it is another. Hyperparameters, the knobs and levers set before training rather than learned from the data, control things like tree depth in random forests or regularization strength in linear models. Setting them blindly risks underfitting or overfitting.

Cross-validation acts as the referee in hyperparameter tuning. By evaluating performance across folds for each candidate setting, you can select parameters that generalize well, not just those that excel on a single lucky split.
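A grid search with cross-validation can be sketched as follows: score each candidate setting by its cross-validated error, then keep the lowest. This example assumes a 1-D nearest-neighbor regressor, where the number of neighbors is the hyperparameter, and synthetic data with deliberately alternating noise. All function names are hypothetical.

```python
def knn_predict(train_x, train_y, x, n_neighbors):
    """1-D nearest-neighbor regression: average the targets of the
    n_neighbors training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:n_neighbors]
    return sum(train_y[i] for i in nearest) / len(nearest)

def cv_mse(xs, ys, n_neighbors, k=5):
    """Mean squared error over all held-out points, using k interleaved folds."""
    n, total = len(xs), 0.0
    for fold in range(k):
        train_idx = [i for i in range(n) if i % k != fold]
        tx, ty = [xs[i] for i in train_idx], [ys[i] for i in train_idx]
        for i in range(fold, n, k):  # indices in this test fold
            total += (knn_predict(tx, ty, xs[i], n_neighbors) - ys[i]) ** 2
    return total / n

def grid_search(xs, ys, candidates, k=5):
    """Score every candidate with cross-validation and keep the best one."""
    scores = {c: cv_mse(xs, ys, c, k) for c in candidates}
    best = min(scores, key=scores.get)
    return best, scores

# Hypothetical data: a straight line plus alternating +/-0.5 "noise".
xs = [i / 10 for i in range(40)]
ys = [x + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]
best, scores = grid_search(xs, ys, candidates=[1, 4, 8])
print(scores)
print("best n_neighbors:", best)
```

With a single neighbor the model copies the noise of the adjacent point, so its cross-validated error is far higher than with 4 or 8 neighbors, which average the noise away. Because each candidate is judged on all five folds, the winner is less likely to reflect one lucky split.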

Nested Cross-Validation for Honest Evaluation

Tuning can also contaminate evaluation. If the same folds are used both to choose hyperparameters and to report performance, the chosen setting is, by construction, the one that happened to score best on those folds, so the reported score is overly optimistic. Nested cross-validation solves this by having two layers of CV: the inner loop tunes hyperparameters, and the outer loop measures generalization on folds the tuning never touched.

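It is widely treated as the gold standard for honest evaluation when model selection is part of the pipeline, especially when the dataset is small and the tuning space is large. The price is computation: the number of model fits is roughly the number of outer folds times the number of inner folds times the number of candidate settings.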
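Nested cross-validation can be sketched by wrapping an inner tuning loop inside an outer evaluation loop. This sketch assumes a toy 1-D nearest-neighbor regressor whose number of neighbors is tuned over a small hypothetical candidate list; `nested_cv`, the data, and the fold counts are illustrative assumptions.

```python
def knn_predict(train_x, train_y, x, n_neighbors):
    """Average the targets of the n_neighbors closest training points."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:n_neighbors]
    return sum(train_y[i] for i in nearest) / len(nearest)

def split(n, k, fold):
    """Interleaved fold assignment: index i belongs to fold i % k."""
    train = [i for i in range(n) if i % k != fold]
    test = [i for i in range(n) if i % k == fold]
    return train, test

def mse(train_x, train_y, test_x, test_y, n_neighbors):
    errors = [(knn_predict(train_x, train_y, x, n_neighbors) - y) ** 2
              for x, y in zip(test_x, test_y)]
    return sum(errors) / len(errors)

def cv_mse(xs, ys, n_neighbors, k):
    """Inner loop: average held-out MSE across k folds of (xs, ys)."""
    total = 0.0
    for fold in range(k):
        tr, te = split(len(xs), k, fold)
        total += mse([xs[i] for i in tr], [ys[i] for i in tr],
                     [xs[i] for i in te], [ys[i] for i in te], n_neighbors)
    return total / k

def nested_cv(xs, ys, candidates, outer_k=5, inner_k=4):
    outer_scores, chosen = [], []
    for fold in range(outer_k):
        tr, te = split(len(xs), outer_k, fold)
        tx, ty = [xs[i] for i in tr], [ys[i] for i in tr]
        # Inner loop sees only the outer-training data, never the outer test fold.
        best = min(candidates, key=lambda c: cv_mse(tx, ty, c, inner_k))
        chosen.append(best)
        # Outer loop: score the tuned choice on data untouched by tuning.
        outer_scores.append(mse(tx, ty, [xs[i] for i in te], [ys[i] for i in te], best))
    return outer_scores, chosen

xs = [i / 10 for i in range(40)]
ys = [x + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]
outer_scores, chosen = nested_cv(xs, ys, candidates=[1, 4, 8])
print(chosen)                                 # setting picked in each outer fold
print(sum(outer_scores) / len(outer_scores))  # estimate for the whole tuned pipeline
```

Different outer folds may pick different settings. That is expected: the outer score estimates how well the whole tune-then-train procedure generalizes, not the score of one fixed setting.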

Practical Pitfalls in Validation

Even the most rigorous validation can fail if certain traps aren’t avoided. Data leakage, when information from the test set sneaks into training, is a silent killer of reliability. It often happens when preprocessing steps like scaling or encoding are fitted on the full dataset before splitting: if the mean and standard deviation used for scaling are computed over every row, the test rows have already shaped the features the model trains on.
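Scaling makes this kind of leakage easy to see. In this sketch, assuming a single numeric feature and hypothetical helpers `fit_scaler` and `transform`, the correct version learns the mean and standard deviation from the training rows only, while the leaky version lets one unusual test value move the mean from 2.5 to 22.

```python
def fit_scaler(values):
    """Learn the mean and standard deviation used for standardization."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, (variance ** 0.5) or 1.0  # guard against a zero spread

def transform(values, scaler):
    mean, std = scaler
    return [(v - mean) / std for v in values]

train = [1.0, 2.0, 3.0, 4.0]
test = [100.0]  # a hypothetical unusual value in the held-out data

# Correct: statistics come from the training rows only, then apply to both sets.
scaler = fit_scaler(train)
train_scaled, test_scaled = transform(train, scaler), transform(test, scaler)

# Leaky: fitting on all rows lets the test value shift the training features.
leaky_scaler = fit_scaler(train + test)
print(scaler[0], leaky_scaler[0])  # 2.5 22.0
```

In cross-validation the same rule applies inside every fold: refit the scaler on that fold’s training portion. Wrapping preprocessing and model together in a scikit-learn `Pipeline` is one common way to enforce this.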

Another pitfall is using the same validation strategy for wildly different algorithms without considering their specific needs. Some models are more sensitive to certain sampling strategies than others. Understanding the data and model together is crucial for designing meaningful validation.

The Human Element in Model Validation

It’s easy to treat validation as a purely mechanical task — split, train, score, repeat. But behind the metrics lie human decisions: what constitutes a “good” model, what trade-offs are acceptable, what kinds of errors are tolerable. In a medical model, a false negative might be far worse than a false positive. In a spam filter, the opposite might be true.
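A cost-weighted score makes the trade-off between error types concrete. In this sketch the labels, predictions, and the 10-to-1 cost ratio are hypothetical; the helpers show two models with identical accuracy whose costs differ tenfold once a missed diagnosis is weighted more heavily than a false alarm.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def accuracy(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / len(y_true)

def average_cost(y_true, y_pred, cost_fn, cost_fp):
    """Average misclassification cost per sample; the costs are set by people."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (cost_fn * fn + cost_fp * fp) / len(y_true)

y_true  = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_a = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]  # misses both sick patients
model_b = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]  # catches both, plus two false alarms

print(accuracy(y_true, model_a), accuracy(y_true, model_b))  # 0.8 0.8
# Hypothetical medical costs: a missed case is 10x worse than a false alarm.
print(average_cost(y_true, model_a, cost_fn=10, cost_fp=1))  # 2.0
print(average_cost(y_true, model_b, cost_fn=10, cost_fp=1))  # 0.2
```

For a spam filter, where wrongly discarding a legitimate email is usually the worse mistake, the weights would be swapped, and the ranking of these two models would flip.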

Validation is not just about numbers; it’s about aligning the model’s behavior with human priorities and values.

Cross-Validation in the Age of Big Data

As datasets grow to millions or billions of samples, traditional k-fold cross-validation can become computationally impractical. But the principles remain. In massive-scale systems, validation often uses holdout sets drawn from different times, geographies, or user segments to mimic the variety the model will face.
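A holdout drawn from a different segment can be as simple as filtering on a group column. This sketch assumes each record is a dict with a hypothetical `region` field; every row from the held-out region goes to the test set, so no region appears on both sides.

```python
def holdout_by_group(rows, key, held_out):
    """Send every row whose `key` value is in `held_out` to the test set,
    so the model is evaluated on segments it never saw in training."""
    train = [r for r in rows if r[key] not in held_out]
    test = [r for r in rows if r[key] in held_out]
    return train, test

# Hypothetical records tagged with a region; hold out one region entirely.
rows = [
    {"region": "north", "x": 1.0}, {"region": "south", "x": 2.0},
    {"region": "east", "x": 3.0}, {"region": "north", "x": 4.0},
    {"region": "east", "x": 5.0},
]
train, test = holdout_by_group(rows, key="region", held_out={"east"})
print(len(train), len(test))  # 3 2
```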

Because each fold can be trained and scored independently, distributed computing frameworks can run cross-validation in parallel, bringing back some of the rigor that might otherwise be lost in the rush for speed.
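Since folds do not depend on each other, they can be dispatched to workers concurrently. This sketch uses Python’s standard `concurrent.futures.ThreadPoolExecutor` with a toy mean-predicting model as a stand-in for a distributed framework; `score_fold` and `parallel_cross_validation` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

def score_fold(ys, fold, k):
    """Train and score one fold; depends only on its own inputs."""
    train = [y for i, y in enumerate(ys) if i % k != fold]
    test = [y for i, y in enumerate(ys) if i % k == fold]
    prediction = sum(train) / len(train)  # toy model: predict the training mean
    return sum(abs(y - prediction) for y in test) / len(test)

def parallel_cross_validation(ys, k=5, workers=4):
    # Folds are independent, so each one is submitted as its own task.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(score_fold, ys, fold, k) for fold in range(k)]
        return [f.result() for f in futures]  # results stay in fold order

ys = [2.0 * i for i in range(10)]
print(parallel_cross_validation(ys, k=5))
```

Threads keep the example self-contained, but pure-Python training gains little from them under CPython’s global interpreter lock; real systems typically use process pools or cluster frameworks with the same one-task-per-fold structure.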

Real-World Impact of Sound Validation

From fraud detection systems that guard financial transactions to medical diagnostic models that save lives, the real-world consequences of validation choices are immense. A poorly validated model might misclassify a critical tumor scan or let a cyberattack slip through undetected.

Conversely, well-validated models build trust — not just among engineers, but among the people whose lives they touch. In industries like aviation, medicine, and finance, trust is the currency that allows technology to take bold steps forward.

The Philosophy Behind Cross-Validation

At a philosophical level, cross-validation reflects a deep humility in science and engineering. It acknowledges that no model should be judged solely by the data it has already seen. It accepts uncertainty, embraces multiple perspectives, and insists that true skill is proven only in unfamiliar territory.

This humility mirrors the scientific method itself: form a hypothesis, test it under varied conditions, and revise it in light of new evidence.

Where We Go from Here

Model validation and cross-validation are not static checkboxes on a data science to-do list; they are evolving practices. As data grows more complex — multimodal, streaming, privacy-constrained — new validation strategies are emerging. Adaptive validation, federated cross-validation, and synthetic data generation are pushing the boundaries of how we test our algorithms.

But one truth will remain: without validation, there is no trust, and without trust, there is no true intelligence. The bridge may look beautiful, but only through rigorous testing will we know if it can stand the weight of the world.