Artificial intelligence often feels like magic. We type a question into a chatbot and receive thoughtful answers. We ask a phone to recognize a song, and it responds in seconds. Cars can now recognize pedestrians, drones navigate crowded skies, and medical software identifies cancer cells with precision rivaling human doctors. Yet behind all of these miracles lies something profoundly material—chips and hardware that give AI its heartbeat.

AI, for all its elegance in code and algorithms, would remain a dream without the silicon and circuitry designed to handle its demands. The story of artificial intelligence is not only about neural networks and software innovations; it is equally the story of the machines built to run them. AI chips are the hidden engines of intelligence, powering the next wave of transformation in technology, society, and human life.

From General Purpose to Specialized Power

In the early days, artificial intelligence ran on traditional central processing units (CPUs), the same kind that powered word processors, spreadsheets, and web browsers. CPUs are versatile, but they were never designed for the unique workload of AI. Training a deep neural network requires billions, sometimes trillions, of mathematical operations, most of which can run in parallel. CPUs, built around a small number of powerful cores, struggled to keep up.

Enter the graphics processing unit (GPU). Originally designed to render images for video games and visual effects, GPUs excelled at parallel processing—breaking down massive tasks into smaller pieces and computing them simultaneously. Researchers quickly realized that this architecture was perfectly suited for the linear algebra that underpins machine learning. Suddenly, models that would have taken months to train on CPUs could be trained in days or even hours on GPUs.
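To make the idea of parallel linear algebra concrete, here is a minimal sketch in plain Python. It is not how a GPU is programmed; the function names (`dot`, `matmul_row`, `parallel_matmul`) are made up for illustration, and Python threads will not deliver a real speedup here. The point is only the structure: every row of the output depends on nothing but its own inputs, so the rows can be handed to independent workers.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(row, col))


def matmul_row(row, b_columns):
    """Compute one output row; it needs no other output row."""
    return [dot(row, col) for col in b_columns]


def parallel_matmul(a, b, workers=4):
    """Multiply matrices a and b, handing each output row to a worker."""
    b_columns = list(zip(*b))  # transpose b once so columns are easy to reach
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: matmul_row(row, b_columns), a))


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(parallel_matmul(a, b))  # [[19, 22], [43, 50]]
```

A GPU applies the same decomposition across thousands of hardware lanes at once, which is why workloads dominated by matrix multiplication map onto it so well.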

This realization triggered a revolution. GPUs, once the domain of gamers and designers, became the backbone of artificial intelligence. But as AI scaled, new challenges emerged. Researchers wanted larger models, faster training, and more energy-efficient systems. That demand ushered in the era of specialized AI chips.

The Rise of Custom AI Hardware

When the scale of AI exceeded even the capacities of GPUs, companies began designing chips tailored specifically for machine learning. Google was among the pioneers, unveiling its Tensor Processing Unit (TPU), optimized for tensor operations at the heart of deep learning. TPUs didn’t just improve performance; they slashed the power consumption needed to run AI workloads, making cloud-based AI more accessible.

Other tech giants followed suit. Apple designed its Neural Engine to accelerate AI on iPhones and iPads, enabling features like facial recognition and real-time language translation directly on devices. Tesla built custom chips for its self-driving cars, capable of processing high-volume camera and sensor streams in real time while operating under strict power and heat constraints.

The message was clear: the future of AI demanded hardware tailored to its needs. The age of one-size-fits-all computing was ending, replaced by a landscape of specialized processors each designed for different domains—cloud AI, edge AI, mobile AI, and autonomous systems.

Architectures of Intelligence

What makes AI chips different from traditional processors is their architecture. CPUs are built for flexibility, handling a wide range of tasks efficiently. GPUs and AI accelerators, however, are designed for throughput: they prioritize processing many small tasks simultaneously rather than one big task quickly.

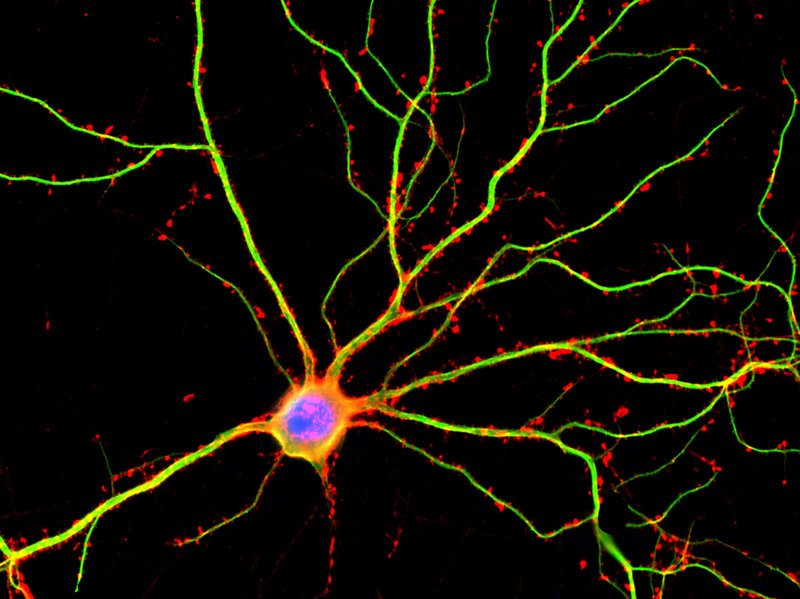

Deep learning relies heavily on matrix multiplication and vector operations: repeatedly multiplying arrays of numbers and adjusting weights. AI chips are optimized to handle these operations at scale, often using simplified arithmetic (such as reduced-precision floating-point formats) to speed up computation with little loss of accuracy.
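The effect of reduced precision can be seen with a small experiment. The sketch below uses Python's standard `struct` module to round values to IEEE 754 half precision (16 bits) and compares a dot product computed that way against ordinary double precision. The helper names and the sample numbers are illustrative assumptions, and real accelerators use a variety of formats and accumulation strategies that this does not model.

```python
import struct


def to_float16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def dot(xs, ys, rounder=lambda v: v):
    """Dot product where every input, product, and partial sum is rounded."""
    total = 0.0
    for x, y in zip(xs, ys):
        product = rounder(rounder(x) * rounder(y))
        total = rounder(total + product)
    return total


# Illustrative values only, not taken from any real model.
weights = [0.1 * (i + 1) for i in range(8)]
inputs = [0.25, -0.5, 0.75, 1.0, -1.25, 1.5, -1.75, 2.0]

full = dot(weights, inputs)                      # double precision
half = dot(weights, inputs, rounder=to_float16)  # simulated half precision

print("full precision:", full)
print("half precision:", half)
print("absolute error:", abs(full - half))
```

The half-precision result lands close to the full-precision one while each number takes a quarter of the storage of a 64-bit float. That trade is what lets hardware fit more arithmetic units and move less data per operation.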

Beyond raw power, AI chips also focus on efficiency. Training a large model like GPT or a vision transformer consumes enormous energy, by some estimates comparable to the electricity that hundreds of homes use in a year. AI hardware designers are in a constant race to maximize performance per watt, balancing speed with sustainability.
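Performance per watt is simple arithmetic, and a short sketch shows why it matters so much. The two chips below are hypothetical, as are all of their numbers; they exist only to show how energy for a fixed training job scales with efficiency.

```python
def perf_per_watt(ops_per_second, watts):
    """Operations per second delivered for each watt drawn."""
    return ops_per_second / watts


def training_energy_kwh(total_ops, ops_per_second, watts):
    """Energy needed to finish a job of total_ops operations, in kWh."""
    seconds = total_ops / ops_per_second
    joules = watts * seconds
    return joules / 3_600_000  # 1 kWh = 3.6 million joules


# Hypothetical job and chips; none of these figures describe real hardware.
JOB_OPS = 1e21
chip_a = {"ops_per_second": 100e12, "watts": 400}
chip_b = {"ops_per_second": 100e12, "watts": 200}

for name, chip in (("A", chip_a), ("B", chip_b)):
    efficiency = perf_per_watt(**chip)
    energy = training_energy_kwh(JOB_OPS, **chip)
    print(f"chip {name}: {efficiency:.2e} ops/s per watt, {energy:.1f} kWh")
```

Both hypothetical chips finish the job in the same time, but the one with twice the performance per watt uses half the energy.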

Some chips embrace neuromorphic design, mimicking the brain’s architecture. Instead of treating computation and memory as separate, these chips interweave them, which cuts the time and energy spent moving data back and forth. Others explore optical computing, using photons instead of electrons to carry data, with the potential for large gains in speed and energy use if the technology matures.

Edge AI: Intelligence at the Source

Not all AI runs in the cloud. Increasingly, intelligence is moving closer to where data is generated—our phones, cameras, drones, and even household appliances. This shift, known as edge AI, reduces reliance on constant internet connectivity, minimizes latency, and protects privacy by processing sensitive data locally.

Edge AI requires chips that are not only powerful but also compact and energy-efficient. A smartphone cannot carry the same cooling systems or battery reserves as a data center. That’s why companies design specialized chips like Apple’s Neural Engine or Qualcomm’s Hexagon processors, bringing AI directly into consumer devices.

The rise of edge AI is not just about convenience; it’s about democratization. By embedding intelligence everywhere—from medical devices in rural clinics to agricultural sensors in remote fields—AI chips extend the reach of technology beyond the cloud, into the everyday world.

The Data Center Race

While edge devices bring intelligence to our pockets, the heavy lifting still happens in sprawling data centers filled with racks of GPUs, TPUs, and custom accelerators. These centers are the training grounds for massive models that require colossal amounts of data and computing power.

The competition is fierce. Nvidia dominates with its GPUs, but challengers like AMD, Intel, and startups such as Graphcore and Cerebras are pushing boundaries. Cerebras, for instance, created the largest chip ever built—the size of a dinner plate—designed to accelerate AI training at unprecedented speeds. Each innovation in this space reduces training times, lowers costs, and enables models of staggering complexity.

But the data center race is also an environmental one. The energy demands of AI are staggering, and hardware innovation is essential to make the future sustainable. Companies are investing in liquid cooling systems, renewable-powered data centers, and chips that squeeze more computations out of fewer electrons.

Quantum Horizons

Beyond silicon lies an even stranger frontier: quantum computing. Unlike traditional bits, which are either 0 or 1, quantum bits (qubits) can exist in a superposition of both states at once. This property, combined with entanglement and interference, allows quantum computers to perform certain calculations far faster than classical machines, in some cases exponentially so.
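A single qubit can be simulated in a few lines, which helps make superposition less mysterious. The sketch below represents a qubit as a pair of amplitudes and applies the Hadamard gate, a standard operation that turns a definite 0 into an equal mix of 0 and 1. The function names are illustrative, and this is a classical simulation, not a claim about how quantum hardware is built.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a qubit given as (amplitude_0, amplitude_1)."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state):
    """Chance of measuring 0 and of measuring 1."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


zero = (1.0, 0.0)      # a qubit that is definitely 0
plus = hadamard(zero)  # equal superposition of 0 and 1
back = hadamard(plus)  # the amplitudes for 1 cancel out

print(probabilities(plus))  # approximately (0.5, 0.5)
print(probabilities(back))  # approximately (1.0, 0.0)
```

Applying the gate a second time returns the qubit to 0 because the two contributions to the 1 amplitude cancel. Quantum algorithms are designed around this kind of cancellation, steering amplitude toward correct answers and away from wrong ones.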

While still in its early stages, quantum hardware could one day accelerate parts of AI. Researchers are exploring whether certain training and optimization tasks that strain classical machines could run dramatically faster, though such speedups remain unproven at practical scale. Companies like IBM, Google, and Rigetti are racing to make quantum hardware stable, scalable, and commercially viable, with AI as one of its most promising applications.

Hardware as the Bottleneck and the Breakthrough

As AI models grow larger, sometimes exceeding hundreds of billions of parameters, the bottleneck is increasingly hardware rather than software. The limits of chip manufacturing, energy efficiency, and data transfer rates define how far AI can advance. Moore’s Law, the decades-old observation that the number of transistors on a chip doubles roughly every two years, is slowing. Transistors are approaching atomic scales, and traditional silicon may soon reach its physical limits.
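It is worth seeing how quickly a two-year doubling compounds, since that is what the industry stands to lose as the trend slows. The sketch below is plain exponential growth; the starting count of one million is an arbitrary illustrative figure, not a reference to any particular chip.

```python
def projected_count(initial_count, years, doubling_period_years=2.0):
    """Value of a quantity that doubles every doubling_period_years."""
    return initial_count * 2 ** (years / doubling_period_years)


# Illustrative starting point: one million, projected over twenty years.
print(projected_count(1_000_000, 20))  # 1024000000.0, a 1024-fold increase

# The same span with a slower three-year doubling period.
print(projected_count(1_000_000, 20, doubling_period_years=3.0))
```

Ten doublings in twenty years multiply the starting figure by 1,024. Stretching the doubling period to three years cuts that to roughly a hundredfold, which shows how much even a modest slowdown costs.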

This looming ceiling is both a challenge and an opportunity. It forces innovation into new realms: three-dimensional chip stacking, advanced materials like graphene, and hybrid architectures combining classical, neuromorphic, and quantum computing. The chips of the future may look nothing like those of today, but they will carry the torch of progress into the next era of AI.

AI Chips and Society

It is easy to think of AI chips as technical curiosities relevant only to engineers and scientists. In reality, they shape the trajectory of society. The speed and accessibility of AI hardware determine who can build AI systems and who cannot. Nations with advanced chip industries wield power not only in economics but also in geopolitics.

The global chip supply chain has become a focal point of tension, with countries investing billions in semiconductor independence. Taiwan, home to TSMC, plays a central role in manufacturing cutting-edge chips, making it strategically vital. The race for AI hardware supremacy is no less important than the space race of the 20th century—it defines who leads in innovation, defense, and global influence.

For individuals, AI chips are quietly transforming daily life. They make our phones smarter, our cars safer, our homes more responsive. They enable medical breakthroughs, agricultural efficiency, climate modeling, and scientific discovery. Each advance in AI hardware ripples outward, shaping industries, cultures, and possibilities.

The Emotional Dimension of Machines

There is something deeply human about our relationship with AI chips. They are small, silent slabs of silicon whose working parts are invisible to the naked eye, yet they carry within them the capacity to create art, solve mysteries, and even converse as if alive. They embody our ingenuity, compressing human knowledge of physics, materials, and engineering into microscopic structures that pulse with possibility.

When we marvel at AI’s achievements, from generating music to decoding proteins, we are also marveling at the chips that make it possible. They are the unsung heroes, the invisible scaffolding of the digital age. Their story is one of patience and progress, of countless engineers and scientists laboring over nanometers and electrons to give form to dreams once thought impossible.

The Road Ahead

The future of AI hardware is both exhilarating and uncertain. Will neuromorphic chips unlock brain-like efficiency? Will quantum processors become practical tools rather than experimental curiosities? Will optical computing revolutionize speed and energy consumption? Each path holds promise, and perhaps the ultimate future will combine them all.

One thing is certain: the next wave of artificial intelligence will be defined not only by clever algorithms but by the chips that run them. The pace of progress will depend on how fast and how sustainably we can push the boundaries of hardware.

The journey is far from over. In fact, it is only beginning. Just as the steam engine powered the Industrial Revolution and electricity powered the modern age, AI chips and hardware are powering the next wave—a wave that will reshape how we live, work, and imagine the future.

Conclusion: Powering Intelligence, Empowering Humanity

At its heart, the story of AI hardware is the story of human ambition. We seek to build machines that think, learn, and create, and in doing so, we stretch the limits of what matter itself can do. Every transistor etched on silicon, every innovation in design, every leap in performance brings us closer to a world where intelligence is woven into the fabric of life.

AI chips are not just components; they are catalysts of transformation. They hold the power to cure diseases, combat climate change, explore the stars, and perhaps redefine what it means to be human. As we stand at the dawn of this new wave, one truth shines clear: the future of artificial intelligence is inseparable from the future of its hardware.

And in those quiet, microscopic circuits lies not just the future of technology, but the promise of humanity itself.