Every second of every day, information flows around us—in the digital networks that power our communication, in the neurons that fire in our brains, and even within the microscopic chemical pathways that sustain life. This constant exchange forms invisible webs known as networks. From social media interactions to global ecosystems, from computer chips to human consciousness, everything is connected.

Understanding how these networks share and process information is one of science’s greatest challenges. How does a brain turn electrical signals into thoughts? How does a financial system respond to sudden shocks? How does a cell interpret chemical signals to make life-or-death decisions? The answers lie in tracing the movement of information—the unseen current that flows between connected nodes.

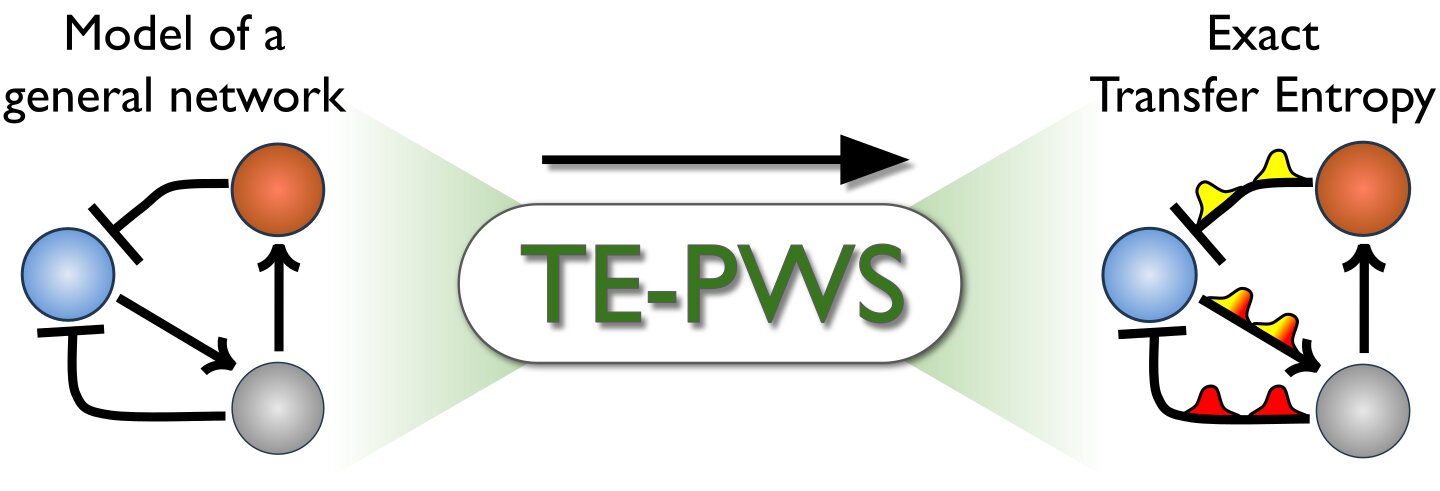

Researchers at AMOLF, a renowned physics institute in the Netherlands, have taken a groundbreaking step toward revealing these hidden pathways. By developing a powerful new algorithm called TE-PWS, they have found a way to measure the directional flow of information in complex systems with unprecedented precision. Their work, published in Physical Review Letters, could revolutionize how scientists study everything from artificial intelligence to biological processes.

Understanding the Language of Networks

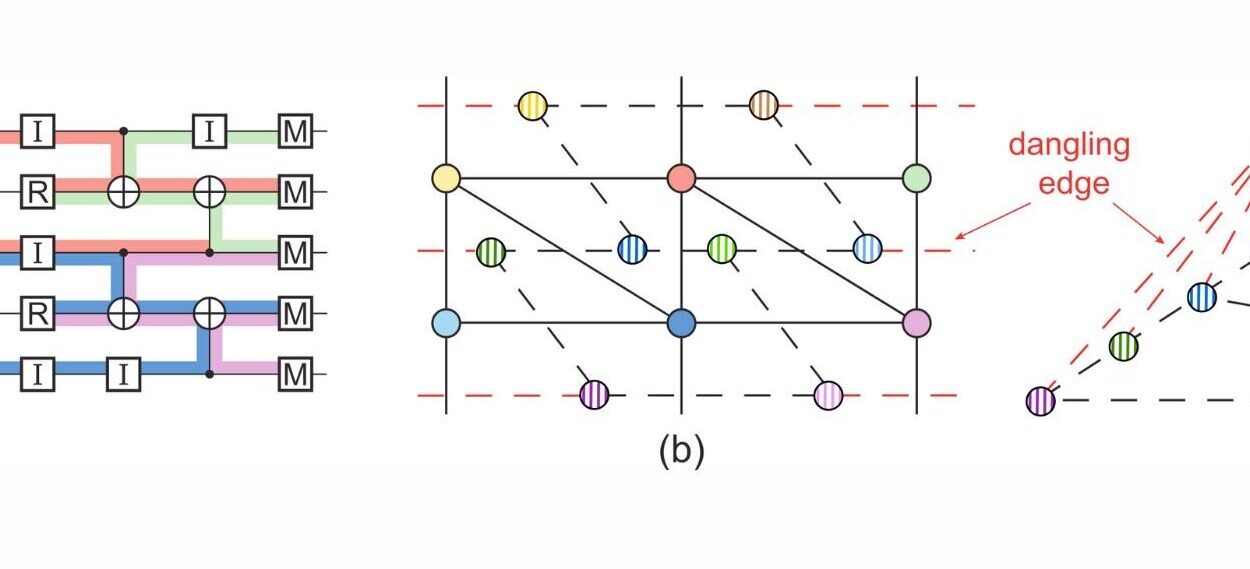

At its core, a network is a system of connected parts—known as nodes—that exchange something between them. In a social network, the nodes are people sharing messages. In a neural network, they are brain cells firing electrical impulses. In a biological cell, they might be molecules sending chemical signals.

What ties all these examples together is the movement of information. Just as rivers carry water across landscapes, networks carry information from one node to another, shaping behavior, decisions, and responses.

To study this movement, scientists use a concept called transfer entropy. It is a mathematical tool that measures how much information one node provides about the future state of another, beyond what that second node's own past already reveals. Imagine watching two friends texting. If knowing one friend's previous message helps you predict what the other will say next, better than you could from the second friend's own earlier messages alone, there is a flow of information between them. Transfer entropy captures that relationship numerically.
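For readers who want to see the idea in numbers, here is a minimal Python sketch of a simple counting ("plug-in") estimate of transfer entropy between two discrete time series, looking back one step. It is an illustration only and is not the TE-PWS algorithm; the function name `transfer_entropy` and the toy signals are assumptions made for this example.

```python
import math
import random
from collections import Counter

def transfer_entropy(source, target):
    """Plug-in estimate of transfer entropy source -> target, in bits.

    Uses a history length of one step (an assumption of this sketch).
    """
    # Each sample is (target's next value, target's current value, source's current value).
    triples = list(zip(target[1:], target[:-1], source[:-1]))
    n = len(triples)
    c_full = Counter(triples)
    c_past = Counter((yp, xp) for _, yp, xp in triples)   # (target past, source past)
    c_self = Counter((yn, yp) for yn, yp, _ in triples)   # (target next, target past)
    c_prev = Counter(yp for _, yp, _ in triples)          # target past only

    te = 0.0
    for (yn, yp, xp), count in c_full.items():
        p_with_source = count / c_past[(yp, xp)]          # p(next | own past, source past)
        p_without = c_self[(yn, yp)] / c_prev[yp]         # p(next | own past)
        te += (count / n) * math.log2(p_with_source / p_without)
    return te

# Toy signals (hypothetical): x is a fair coin, y copies x one step later.
rng = random.Random(0)
x = [rng.randint(0, 1) for _ in range(20000)]
y = [0] + x[:-1]

te_xy = transfer_entropy(x, y)
te_yx = transfer_entropy(y, x)
print(f"X -> Y: {te_xy:.3f} bits, Y -> X: {te_yx:.3f} bits")
```

In this toy case the estimate from X to Y comes out close to one bit, because each value of Y is fully determined by the previous value of X, while the estimate in the reverse direction stays near zero. Simple counting like this needs rapidly growing amounts of data as histories get longer or signals become continuous, which is part of why more careful methods are needed for realistic systems.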

But until now, measuring transfer entropy accurately has been nearly impossible in large, nonlinear, or feedback-heavy systems—exactly the kind of systems that dominate both nature and technology.

The Challenge of Capturing Information Flow

For decades, scientists relied on approximations to estimate how information moves through networks. These methods were good enough for simple models, but they often broke down in the face of real-world complexity. Biological systems, for instance, are full of feedback loops, randomness, and rare events that are difficult to simulate.

“Until now, the directional flow of information in general network models could not be measured without unpredictable errors,” explained physicist Avishek Das, co-author of the new study. “Our paper introduces a computational algorithm, TE-PWS, to quantify it exactly for the first time.”

What makes this such a breakthrough is precision. TE-PWS doesn’t guess; it measures. It replaces estimation with exactness, offering what researchers call ground-truth results: values that are exact for the model under study rather than approximations of it.

A Clever Borrowing from Physics

The key to TE-PWS’s success lies in a clever borrowing from statistical physics, a field that deals with systems of countless interacting particles. Das and his colleague Pieter Rein ten Wolde used a method known as importance sampling, a technique that makes rare fluctuations occur more frequently in simulations and then reweights them so that the final statistics remain correct.

In biological and engineered systems alike, meaningful events can be extremely rare. Think of a neuron firing at just the right moment to trigger a memory, or a small chemical fluctuation inside a cell that leads to a major metabolic shift. Traditional methods struggle to capture such events because they happen so infrequently. Importance sampling allows TE-PWS to “magnify” these rare moments, recording them with remarkable accuracy.
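The general idea of importance sampling can be sketched in a few lines of Python. The example below is a generic textbook illustration, not the TE-PWS algorithm itself: it estimates the small probability that a standard normal variable exceeds 4 by sampling from a shifted distribution where such values are common, then reweighting each sample. The function names and the choice of threshold are assumptions made for this example.

```python
import math
import random

def tail_prob_importance(threshold, n, rng):
    """Estimate P(Z > threshold) for standard normal Z by importance sampling.

    Samples come from a normal centred on the threshold, so the rare region
    is hit about half the time; each hit is reweighted by p(z) / q(z).
    """
    total = 0.0
    for _ in range(n):
        z = rng.gauss(threshold, 1.0)   # biased proposal q = N(threshold, 1)
        if z > threshold:
            # Likelihood ratio p(z)/q(z) with p = N(0, 1) and q = N(threshold, 1).
            total += math.exp(-threshold * z + 0.5 * threshold ** 2)
    return total / n

def tail_prob_naive(threshold, n, rng):
    """Estimate the same probability by direct sampling, for comparison."""
    hits = sum(1 for _ in range(n) if rng.gauss(0.0, 1.0) > threshold)
    return hits / n

rng = random.Random(1)
exact = 0.5 * math.erfc(4.0 / math.sqrt(2.0))   # closed-form reference value
est_is = tail_prob_importance(4.0, 100_000, rng)
est_naive = tail_prob_naive(4.0, 100_000, rng)
print(f"exact {exact:.3e}, importance sampling {est_is:.3e}, naive {est_naive:.3e}")
```

With the same number of samples, direct sampling sees only a handful of qualifying events, so its estimate is very noisy, while the importance-sampling estimate lands close to the closed-form value. Making rare events common and then correcting for the bias is the essence of importance sampling.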

“TE-PWS uses this to count rare fluctuations accurately, giving the exact transfer entropy for any model,” said Das.

This approach allows scientists to measure how information travels through networks where earlier methods break down: systems with feedback, nonlinearity, or extreme complexity.

When Feedback Boosts Communication

One of the most fascinating findings from Das and ten Wolde’s research is that feedback—often thought to slow or complicate information flow—can sometimes enhance it.

Using their new algorithm, they discovered that strong feedback can actually amplify the feedforward transfer of information to distant parts of a network. In simpler terms, when a system “talks back” to itself, it can sometimes communicate even more effectively.

This insight has profound implications. It suggests that feedback mechanisms in nature—like those found in neural or biochemical networks—might be key to their efficiency and adaptability. In human-designed systems like AI, incorporating such feedback could lead to more robust and intelligent machines.

A Tool for Every Network

Another striking advantage of TE-PWS is its versatility. The algorithm can be applied to virtually any network—digital, biological, or social. It works even when systems are noisy, chaotic, or contain loops that confound traditional analyses.

Das and ten Wolde tested their algorithm across various models and found it to be not only accurate but also fast. “We also found that TE-PWS uses either comparable or less computer time than other methods, making it both accurate and cheap,” said Das.

In an age when data analysis often requires massive computational power, a technique that achieves precision without excessive resource consumption is invaluable.

Measuring the Heartbeat of Living Systems

The potential applications of TE-PWS stretch far beyond physics. Das and his team are already planning to use it to study information processing inside bacterial cells. Though bacteria are simple organisms, their internal signaling networks perform surprisingly complex computations—analyzing chemical gradients, integrating signals, and even optimizing responses to environmental changes.

“Even though bacteria are simple organisms, they perform sophisticated computations like taking integrals and derivatives and finding optima,” said Das. “TE-PWS will help us understand how their signaling networks can do this efficiently.”

This insight could have ripple effects across multiple fields, from medicine to synthetic biology. If we can understand how nature’s simplest forms of life compute information so efficiently, we might learn to design smarter, more sustainable systems ourselves.

From Brain to Machine

The implications for neuroscience and artificial intelligence are equally profound. The human brain is a vast network of roughly 86 billion neurons, constantly exchanging information through electrical and chemical signals. Measuring the exact flow of information between neurons is crucial for understanding how thought, memory, and consciousness arise.

Similarly, artificial neural networks—used in AI systems—mimic this biological information flow. TE-PWS could offer a new way to evaluate and optimize how these networks learn and adapt, making them not only more powerful but also more interpretable.

Financial markets, communication networks, and ecological systems could also benefit. Wherever information flows and feedback loops exist, TE-PWS can provide a clearer, more reliable map of how influence moves through the system.

The Future of Network Science

The introduction of TE-PWS marks a turning point in the study of complex systems. By providing an exact, efficient, and general method for measuring transfer entropy, Das and ten Wolde have given scientists a new lens to look at the interconnected world.

Their work bridges disciplines—combining physics, mathematics, biology, and computer science—to reveal a universal principle: information is the lifeblood of complexity. Whether it flows through a silicon chip or a living cell, information shapes behavior, evolution, and intelligence.

As researchers begin applying TE-PWS to diverse systems, we may soon uncover hidden laws that govern the dynamics of life and technology alike. We might understand not just how networks work, but why they organize themselves the way they do—and how we can design them to be more resilient, adaptive, and intelligent.

More information: Avishek Das et al., Exact Computation of Transfer Entropy with Path Weight Sampling, Physical Review Letters (2025). DOI: 10.1103/t8z9-ylvg. arXiv DOI: 10.48550/arxiv.2409.01650