Modern computing relies heavily on the efficient storage, retrieval, and manipulation of data. From smartphones and laptops to supercomputers and data centers, the ability to process information rapidly and store it securely defines performance and capability. Central to this process is the concept of computer memory, which encompasses various components that temporarily or permanently hold data. Among these, Random Access Memory (RAM), cache memory, and storage form the core of how computers operate, interact, and perform tasks.

Computer memory is not a single entity but a layered hierarchy, each level optimized for speed, capacity, and cost. Understanding the science behind RAM, cache, and storage requires exploring how each works, how they interact with the central processing unit (CPU), and how their design affects overall system performance. These components collectively determine how quickly a computer can boot, run applications, handle multitasking, and access stored data.

The Concept of Memory in Computing

In computing, memory refers to any physical device capable of temporarily or permanently storing data and instructions. It is a bridge between the central processing unit and data storage systems. Memory allows computers to perform computations without having to repeatedly access slower devices like hard drives.

The concept of computer memory is inspired by the human brain’s ability to store and recall information. Just as the brain has short-term and long-term memory, computers also have volatile and non-volatile memory. Volatile memory, such as RAM and cache, loses its data when power is turned off. Non-volatile memory, such as hard drives or solid-state drives (SSDs), retains information even after shutdown.

The design of computer memory follows a memory hierarchy, structured according to access speed, cost, and capacity. At the top are the fastest but smallest and most expensive types of memory—registers and cache. In the middle lies main memory (RAM), and at the bottom are storage devices like SSDs and hard drives, which are slower but can hold much larger amounts of data. The closer a memory component is to the CPU, the faster it can transfer data, but it typically stores less.

How Memory Interacts with the CPU

Every computational task begins and ends with data movement between memory and the CPU. The CPU executes instructions stored in memory and uses memory to hold intermediate results and final outputs. However, the CPU operates at extremely high speeds, often measured in gigahertz (billions of cycles per second), while most memory types are slower. This creates a performance gap known as the memory bottleneck.

To mitigate this, computer architectures use multiple layers of memory to balance speed and efficiency. Instructions operate directly on values held in the CPU’s internal registers. When data must be fetched from memory, the hardware looks first in the cache and then in RAM; if the data is not in RAM at all, the operating system loads it from secondary storage. Each successive step takes longer, so minimizing the need to access slower memory is crucial for performance.

This hierarchical structure allows computers to achieve a balance between speed, cost, and storage capacity. It also ensures that frequently used data can be accessed quickly, while less-used data can be stored more economically in slower memory.
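The lookup order described above can be sketched as a small model. This is only an illustration: the latency figures are assumed order-of-magnitude values rather than measurements, registers are left out for brevity, and the names `LEVELS`, `lookup`, and `contents` are hypothetical.

```python
# Illustrative, order-of-magnitude latencies in nanoseconds.
# These are assumed values for the sketch, not measurements.
LEVELS = [("cache", 1), ("ram", 100), ("storage", 100_000)]


def lookup(address, contents):
    """Search each level in order; return (level_name, total_latency_ns)."""
    total_ns = 0
    for name, latency_ns in LEVELS:
        total_ns += latency_ns          # pay the cost of checking this level
        if address in contents[name]:
            return name, total_ns
    raise KeyError(f"address {address} not found at any level")


# Hypothetical contents: each lower level holds everything above it and more.
contents = {"cache": {1}, "ram": {1, 2}, "storage": {1, 2, 3}}
for address in (1, 2, 3):
    print(address, lookup(address, contents))
```

Under these assumed numbers, a request that falls through to storage costs about a thousand times more than one served from RAM, which is why keeping frequently used data near the top of the hierarchy matters so much.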

Random Access Memory (RAM): The Computer’s Working Memory

The Nature and Function of RAM

Random Access Memory, commonly known as RAM, serves as a computer’s primary working memory. It is where the system temporarily holds data and instructions that the CPU needs to access quickly. Unlike storage, which holds files and applications permanently, RAM is designed for speed and efficiency in processing active tasks.

When a computer is powered on and an application is opened, the operating system loads the relevant data from storage into RAM. This allows the CPU to access it rapidly without the delays of reading from slower storage devices. The term “random access” refers to the fact that any memory location can be accessed directly, without having to read data sequentially.

RAM plays a vital role in multitasking. Each running program occupies a portion of RAM, and switching between tasks involves the CPU retrieving data from different memory addresses. When RAM is insufficient, the operating system’s virtual memory system moves less-used data to a reserved area of the storage device (a swap file or page file). Because storage is far slower than RAM, relying on it heavily can significantly reduce performance.

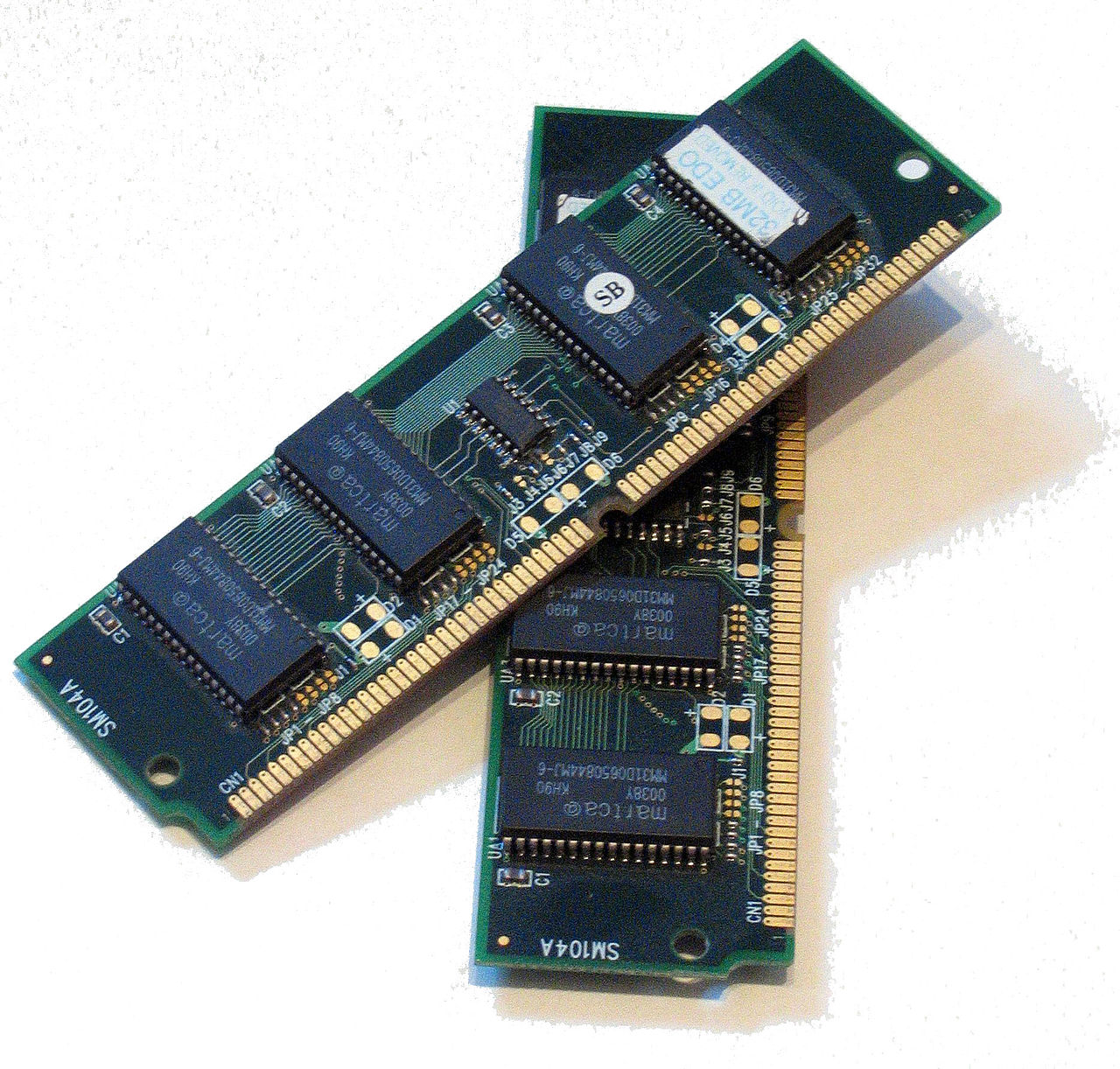

Types of RAM

There are two main types of RAM: Dynamic RAM (DRAM) and Static RAM (SRAM).

Dynamic RAM is the most common form of main memory in modern computers. It stores each bit of data in a tiny capacitor within an integrated circuit. However, because capacitors leak charge, every DRAM cell must be refreshed periodically, typically once every few tens of milliseconds, to retain its data. This makes DRAM slower than SRAM but much cheaper and denser, allowing for larger memory capacities.

Static RAM, on the other hand, stores each bit in a flip-flop circuit built from several transistors (typically six). It does not require refreshing, which makes it faster and more reliable. However, SRAM consumes more power and space per bit, making it more expensive. For this reason, SRAM is typically used in cache memory rather than main system memory.

DRAM Technologies and Evolution

Over the decades, DRAM has evolved to deliver higher speeds, lower power consumption, and greater efficiency. Modern DRAM types include SDRAM (Synchronous DRAM), DDR (Double Data Rate), and its successive generations—DDR2, DDR3, DDR4, and DDR5.

DDR technology allows data to be transferred on both the rising and falling edges of the clock signal, effectively doubling the bandwidth compared with single data rate SDRAM running at the same clock frequency. Each new generation of DDR memory increases data rates and reduces power consumption. DDR5, for instance, offers higher capacities per module and improved performance efficiency, making it ideal for high-end computing systems.
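The effect of transferring on both clock edges can be worked through with a short calculation. This is a sketch of theoretical peak bandwidth only; the function name is hypothetical, and the 64-bit channel width is an assumption that matches typical DDR4 modules.

```python
def peak_bandwidth_mb_s(clock_mhz, bus_width_bits=64, transfers_per_clock=2):
    """Theoretical peak transfer rate of one memory channel in MB/s.

    transfers_per_clock is 2 for DDR (both clock edges) and 1 for
    single data rate SDRAM. bus_width_bits=64 is an assumed channel width.
    """
    mega_transfers_per_second = clock_mhz * transfers_per_clock
    return mega_transfers_per_second * bus_width_bits // 8


# A 1600 MHz memory clock, as used by modules sold as DDR4-3200.
print(peak_bandwidth_mb_s(1600))                         # 25600
print(peak_bandwidth_mb_s(1600, transfers_per_clock=1))  # 12800
```

Real-world throughput is lower than this peak, since refresh cycles, row activations, and controller scheduling all consume time on the bus.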

RAM Performance and Latency

Two key metrics define RAM performance: speed and latency. Speed is often quoted in megahertz (MHz), although for DDR memory the advertised figure is really the transfer rate in megatransfers per second (MT/s), which is twice the actual clock frequency. Latency refers to the delay between a request for data and its delivery. Lower latency results in faster response times.

However, the effective performance of RAM depends not only on raw speed but also on its interaction with the CPU and memory controller. Faster RAM can improve performance in tasks such as gaming, video editing, and scientific computation, but the benefits diminish if the CPU cannot utilize the increased bandwidth efficiently.
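Module latency is usually quoted as CAS latency (CL), a count of clock cycles rather than a time, so it has to be combined with the clock frequency to compare modules. The sketch below does that conversion; the function name is hypothetical and the module figures are example values, not recommendations.

```python
def cas_latency_ns(data_rate_mt_s, cas_cycles):
    """Convert CAS latency in clock cycles to nanoseconds for DDR memory."""
    clock_mhz = data_rate_mt_s / 2           # DDR: two transfers per clock cycle
    return cas_cycles * 1000 / clock_mhz     # cycles / MHz gives microseconds


# Example modules (assumed figures for illustration).
for name, rate, cl in [("DDR4-2400 CL15", 2400, 15),
                       ("DDR4-3200 CL16", 3200, 16),
                       ("DDR5-6000 CL30", 6000, 30)]:
    print(f"{name}: {cas_latency_ns(rate, cl):.1f} ns")
```

In this example the DDR4-3200 CL16 and DDR5-6000 CL30 modules come out at the same latency in nanoseconds even though one CL number is nearly double the other, which shows why cycle counts alone can be misleading.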

Cache Memory: Bridging the Speed Gap

The Purpose and Role of Cache

While RAM is fast, it is still much slower than the CPU, which can complete many clock cycles in the time a single RAM access takes. To overcome this gap, computers use cache memory, a small but extremely fast type of memory located close to the CPU cores. Cache memory temporarily stores copies of frequently accessed data and instructions, allowing the processor to retrieve them almost instantly.

Cache memory acts as a high-speed intermediary between the CPU and main memory. When the CPU needs data, it first checks whether it is available in the cache. If it finds it there—a cache hit—the CPU proceeds immediately. If not—a cache miss—it retrieves the data from RAM, which takes longer, and stores a copy in the cache for future use.

This mechanism significantly improves processing speed, especially in repetitive or predictable operations. For example, during complex calculations or program loops, the same data may be used repeatedly. Having that data in cache reduces the need for slower memory access, thereby enhancing efficiency.
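The hit and miss behaviour of a loop can be illustrated with a toy simulation. The sketch below assumes a direct-mapped cache, which is one simple organization chosen here for illustration; the article does not say which organization a given CPU uses, and the sizes and function name are hypothetical.

```python
def simulate_direct_mapped(addresses, num_lines=4, block_size=4):
    """Count hits and misses for a tiny direct-mapped cache."""
    lines = [None] * num_lines      # each entry holds the block number cached there
    hits = misses = 0
    for addr in addresses:
        block = addr // block_size  # neighbouring addresses share a block
        index = block % num_lines   # each block maps to exactly one line
        if lines[index] == block:
            hits += 1
        else:
            misses += 1
            lines[index] = block    # fetch from RAM and keep a copy
    return hits, misses


# A program loop that reads the same eight addresses three times.
loop_accesses = list(range(8)) * 3
print(simulate_direct_mapped(loop_accesses))  # (22, 2)
```

Only the first touch of each block misses. Every later access, including the whole second and third passes of the loop, is served from the cache.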

Structure and Levels of Cache

Modern processors use a multi-level cache hierarchy, typically designated as L1, L2, and L3 caches.

L1 cache is the smallest and fastest, located directly within the CPU core. It usually stores the most frequently accessed instructions and data. L1 caches are small, with sizes typically measured in kilobytes, but they provide access times on the order of a nanosecond.

L2 cache is slightly larger and slower but still much faster than RAM. It serves as an intermediary buffer between L1 and main memory. Depending on the CPU design, each core may have its own L2 cache or share one across multiple cores.

L3 cache is typically shared among all CPU cores and is much larger, measured in megabytes. Although slower than L1 and L2, it still offers much faster access than RAM. The presence of multiple cache levels ensures that the CPU can find needed data at the fastest possible level before resorting to slower memory.
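The benefit of stacking several cache levels can be estimated with the standard average memory access time calculation, in which each level's cost is its own hit time plus its miss rate multiplied by the cost of the next level. The sketch below applies that idea; the function name is hypothetical and every number is an assumed value for illustration.

```python
def average_access_time_ns(levels, memory_ns):
    """Average access time for a cache hierarchy.

    levels: list of (hit_time_ns, miss_rate) ordered from L1 outward.
    memory_ns: access time of main memory behind the last cache level.
    """
    cost = memory_ns
    # Work inward from RAM: a miss at one level pays the cost of the next.
    for hit_time_ns, miss_rate in reversed(levels):
        cost = hit_time_ns + miss_rate * cost
    return cost


# Assumed figures: (hit time in ns, local miss rate) for L1, L2, L3.
hierarchy = [(1, 0.1), (4, 0.5), (20, 0.5)]
print(round(average_access_time_ns(hierarchy, memory_ns=100), 2))
print(round(average_access_time_ns(hierarchy[:1], memory_ns=100), 2))
```

With these assumed numbers, the three-level hierarchy averages roughly 5 ns per access, while a lone L1 cache backed directly by RAM averages roughly 11 ns.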

Cache Algorithms and Data Management

The effectiveness of cache memory depends on sophisticated management algorithms that determine which data to store and which to replace. Common strategies include Least Recently Used (LRU), First In, First Out (FIFO), and Random Replacement.

These algorithms aim to keep the most relevant and frequently accessed data in the cache, minimizing misses and optimizing performance. Moreover, modern CPUs employ prefetching techniques, where the processor predicts which data will be needed next and loads it into the cache preemptively.
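The Least Recently Used policy mentioned above can be sketched in a few lines of software. This is a conceptual model only: the class name is hypothetical, and hardware caches generally use cheaper approximations of LRU rather than an exact ordering like this one.

```python
from collections import OrderedDict


class LRUCache:
    """A minimal software model of Least Recently Used replacement."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()   # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def access(self, key):
        """Record an access to key; return True on a hit, False on a miss."""
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)       # mark as most recently used
            return True
        self.misses += 1
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)    # evict the least recently used
        self.entries[key] = True
        return False


cache = LRUCache(capacity=2)
for key in ["A", "B", "A", "C", "B"]:
    cache.access(key)
print(cache.hits, cache.misses, list(cache.entries))  # 1 4 ['C', 'B']
```

When "C" arrives, "B" is evicted rather than "A", because "A" was touched more recently. A FIFO policy would have evicted "A" instead, since it entered the cache first.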

The Physics and Technology of Cache Memory

Cache memory is typically built from Static RAM (SRAM) due to its speed and stability. Unlike DRAM, SRAM does not require periodic refreshing, allowing for extremely low latency. However, because SRAM cells use more transistors, they are more expensive and less dense, limiting cache size.

The design of cache circuits involves balancing trade-offs among speed, size, power consumption, and cost. As transistor sizes shrink with each generation of semiconductor fabrication, cache memory continues to grow in capacity and speed, keeping pace with advances in processor architecture.

Storage: The Long-Term Memory of Computers

The Nature of Storage

While RAM and cache handle short-term, high-speed data operations, storage devices provide long-term retention of information. Storage retains data even when the computer is powered off, encompassing everything from the operating system and applications to user files and multimedia content.

Storage technology has evolved dramatically, from mechanical hard drives (HDDs) with spinning platters to electronic solid-state drives (SSDs) that use flash memory. The fundamental purpose remains the same: to store vast amounts of data permanently while providing reasonably fast access.

Hard Disk Drives (HDDs)

Hard disk drives have been the primary form of storage for decades. They store data magnetically on rotating disks, known as platters. A read/write head moves across the surface of the platters to access or modify data.

The speed of an HDD is largely determined by the rotation speed of its platters, measured in revolutions per minute (RPM). Typical consumer drives spin at 5400 or 7200 RPM, while enterprise drives may reach 15,000 RPM. The faster the platters spin, the less time the head waits for the requested sector to rotate beneath it, and the faster data can be read once it arrives.
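The link between RPM and access time can be made concrete. On average, the requested sector is half a revolution away from the head, so the average rotational latency is half the time of one full rotation. The sketch below computes that figure; the function name is hypothetical, and the result excludes seek time (moving the head to the right track) and transfer time.

```python
def avg_rotational_latency_ms(rpm):
    """Average wait for the target sector: half of one full revolution."""
    ms_per_revolution = 60_000 / rpm    # 60,000 ms in a minute
    return ms_per_revolution / 2


for rpm in (5400, 7200, 15000):
    print(f"{rpm} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

Even at 15,000 RPM the wait is measured in milliseconds, several orders of magnitude longer than a RAM access.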

HDDs offer large capacities at relatively low cost, but their mechanical nature introduces limitations. Moving parts make them prone to wear and physical damage, and they cannot match the speed of purely electronic memory. Nonetheless, HDDs remain valuable for bulk data storage and archival purposes.

Solid-State Drives (SSDs)

Solid-state drives represent a revolution in storage technology. Unlike HDDs, SSDs have no moving parts. They store data in NAND flash memory cells, where electrical charges represent bits of information.

The lack of mechanical motion allows SSDs to deliver dramatically faster read and write speeds, lower latency, and greater durability. SSDs are also quieter, consume less power, and generate less heat, making them ideal for modern laptops and servers.

However, SSDs have limitations as well. Flash memory cells can endure only a finite number of write cycles before they degrade, though wear-leveling algorithms and advanced manufacturing techniques have greatly extended their lifespan.
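The idea behind wear leveling can be shown with a toy model. This is a deliberately simplified sketch, not how any particular SSD controller works: it assumes every write erases one block, and it always picks the block with the lowest erase count. All names are hypothetical.

```python
def simulate_writes(num_blocks, num_writes, wear_leveling):
    """Return per-block erase counts after a series of rewrites."""
    erase_counts = [0] * num_blocks
    for _ in range(num_writes):
        if wear_leveling:
            # Steer the write to the least-worn block.
            target = erase_counts.index(min(erase_counts))
        else:
            # Naive: always rewrite the same physical block.
            target = 0
        erase_counts[target] += 1
    return erase_counts


print(simulate_writes(4, 100, wear_leveling=False))  # [100, 0, 0, 0]
print(simulate_writes(4, 100, wear_leveling=True))   # [25, 25, 25, 25]
```

Without wear leveling, one block absorbs all the wear and would fail first. With it, the same workload is spread evenly, so no block reaches its cycle limit early.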

The Science of NAND Flash

NAND flash memory, the foundation of SSDs, stores data using floating-gate transistors that trap electrons. In the simplest design, Single-Level Cell (SLC) flash, the presence or absence of an electric charge in the gate represents one bit, 0 or 1. Denser designs distinguish several charge levels to store more bits per cell: Multi-Level Cell (MLC) flash stores two bits, Triple-Level Cell (TLC) three, and Quad-Level Cell (QLC) four. This increases capacity but reduces endurance and performance, because the levels sit closer together and are harder to tell apart.
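The trade-off between capacity and endurance follows from simple arithmetic: storing n bits in one cell requires telling apart 2^n distinct charge levels. The short sketch below tabulates this, using the standard bits-per-cell figures for each cell type (SLC, with one bit per cell, is included as the baseline); the names are hypothetical.

```python
# Bits stored per cell for each flash type.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits_per_cell):
    """Number of distinct charge levels a cell must hold to store the bits."""
    return 2 ** bits_per_cell


for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s) per cell, {charge_levels(bits)} charge levels")
```

Each extra bit doubles the number of levels squeezed into the same voltage range, so the margin between neighbouring levels shrinks and the cell tolerates less wear before reads become unreliable.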

Recent innovations like 3D NAND stack memory cells vertically, allowing for much higher densities without increasing chip area. This design improves performance and reduces cost per gigabyte, making high-capacity SSDs more affordable.

Storage Interfaces and Protocols

The interface between the storage device and the computer’s motherboard significantly affects performance. Traditional HDDs and early SSDs used the SATA (Serial ATA) interface, whose final revision (SATA III, 6 Gb/s) limits data transfer speeds to around 600 MB/s.

Modern SSDs now utilize the NVMe (Non-Volatile Memory Express) protocol over the PCI Express (PCIe) interface. NVMe is designed specifically for flash memory and provides much lower latency and higher parallelism through many concurrent command queues. Combined with the higher bandwidth of PCIe lanes, this allows NVMe drives to achieve speeds several times faster than SATA drives.
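The gap between the two interfaces can be estimated from their line rates and encoding schemes. The sketch below uses the published figures for SATA III (6 Gb/s with 8b/10b encoding) and PCIe 3.0 (8 GT/s per lane with 128b/130b encoding). The function name is hypothetical, the four-lane link is an assumed but common configuration for NVMe drives, and protocol overhead beyond line encoding is ignored.

```python
def usable_mb_s(line_rate_gbit_s, payload_bits, encoded_bits, lanes=1):
    """Upper bound on throughput in MB/s after line-encoding overhead."""
    # Only payload_bits out of every encoded_bits on the wire carry data.
    data_bits_per_second = line_rate_gbit_s * 1e9 * payload_bits / encoded_bits
    return data_bits_per_second / 8 / 1e6 * lanes


sata3 = usable_mb_s(6, 8, 10)                 # 8b/10b encoding
pcie3_x4 = usable_mb_s(8, 128, 130, lanes=4)  # 128b/130b encoding, 4 lanes
print(f"SATA III:    {sata3:.0f} MB/s")
print(f"PCIe 3.0 x4: {pcie3_x4:.0f} MB/s")
```

Under these assumptions a four-lane PCIe 3.0 link offers more than six times the ceiling of SATA III, and newer PCIe generations roughly double the per-lane rate again.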

Emerging Storage Technologies

Beyond NAND flash, several emerging technologies promise to redefine storage. 3D XPoint, developed by Intel and Micron and sold for a time as Intel Optane, offers performance between that of DRAM and NAND flash, with non-volatility and endurance advantages, although both companies have since ended its production. Magnetoresistive RAM (MRAM) and Resistive RAM (ReRAM) are also being explored as potential future replacements for traditional storage, combining speed, durability, and persistence.

The Memory Hierarchy: Balancing Speed, Cost, and Capacity

The entire memory system in a computer functions as a hierarchy, from the fastest but smallest registers inside the CPU to the slowest but largest storage drives. This structure ensures that data can be accessed efficiently according to its frequency of use.

At the top are registers, small storage locations inside the CPU that hold immediate operands for execution. Just below registers are cache levels, followed by main memory (RAM), secondary storage (SSDs/HDDs), and finally tertiary storage like magnetic tapes or cloud archives.

Moving up the hierarchy, speed and cost per bit increase while capacity decreases. Designers carefully balance these factors to optimize system performance without making computers prohibitively expensive. This hierarchy allows high-speed computing for frequently accessed data while maintaining cost-effective bulk storage for less critical information.

How RAM, Cache, and Storage Work Together

During typical computer operations, all three memory components—RAM, cache, and storage—work in seamless coordination. When a program is launched, its executable code and necessary data are loaded from storage into RAM. As the CPU executes instructions, it retrieves data from RAM into cache memory for even faster access.

If the CPU requires data not found in cache (a cache miss), it retrieves it from RAM. If the data is not in RAM either, for example because the operating system’s virtual memory system moved it to disk to compensate for limited RAM capacity, a page fault occurs and the operating system fetches it from storage. This interplay keeps each piece of data at the fastest level that currently has room for it.

Efficient data flow between these components determines how smoothly applications run. Optimizing cache algorithms, increasing RAM capacity, and using faster storage all contribute to reducing latency and improving throughput.

The Physics of Memory Devices

At the microscopic level, memory technology is a triumph of applied physics and material science. In DRAM, capacitors store charge that represents binary states, while transistors control access to each cell. As technology scales down to nanometer dimensions, quantum effects and leakage currents become major design challenges.

In NAND flash, floating-gate transistors rely on precise electron tunneling mechanisms to trap and release charges. The reliability of these operations depends on the thickness of insulating layers and the endurance of materials under repeated stress.

Emerging non-volatile memories explore quantum tunneling, spintronics, and resistive switching phenomena. These approaches exploit electron spin, magnetic states, or resistance changes to store data with higher speed and durability than current technologies allow.

The Future of Computer Memory

The future of computer memory lies in overcoming the limitations of current architectures. As CPUs become faster and data-intensive applications multiply, memory systems must evolve to keep pace. The memory wall, a term describing the growing performance gap between CPU speed and memory speed, remains a key challenge.

Innovations such as unified memory architectures, persistent memory modules, and optical storage aim to bridge this divide. Persistent memory technologies, for example, aim to combine near-DRAM speed with the non-volatility of storage, so that systems can recover their in-memory state after power loss instead of rebuilding it from disk.

In addition, the integration of artificial intelligence and machine learning into memory management is allowing computers to predict usage patterns, optimize caching, and dynamically allocate resources for maximum efficiency.

Quantum computing introduces yet another frontier, where quantum memory could one day store qubits (quantum bits), which can exist in superpositions of states and may allow certain problems to be solved far faster than on classical machines.

Conclusion

The science behind computer memory—RAM, cache, and storage—forms the backbone of modern computing. Each component plays a specific and interconnected role in balancing speed, capacity, and permanence. RAM provides the high-speed workspace needed for active processes, cache bridges the gap between RAM and the CPU for lightning-fast data access, and storage ensures long-term retention of information.

Together, these layers of memory form a dynamic ecosystem that enables everything from simple calculations to complex artificial intelligence systems. As technology continues to evolve, memory systems will grow faster, more efficient, and more intelligent, reshaping what computers can achieve.

Understanding how these components work not only deepens our appreciation of computer design but also highlights the extraordinary interplay of physics, engineering, and innovation that defines the digital age. The journey from bits stored in silicon to the vast interconnected world of data is, at its core, a story of memory—a story that continues to unfold as we push the boundaries of computation.