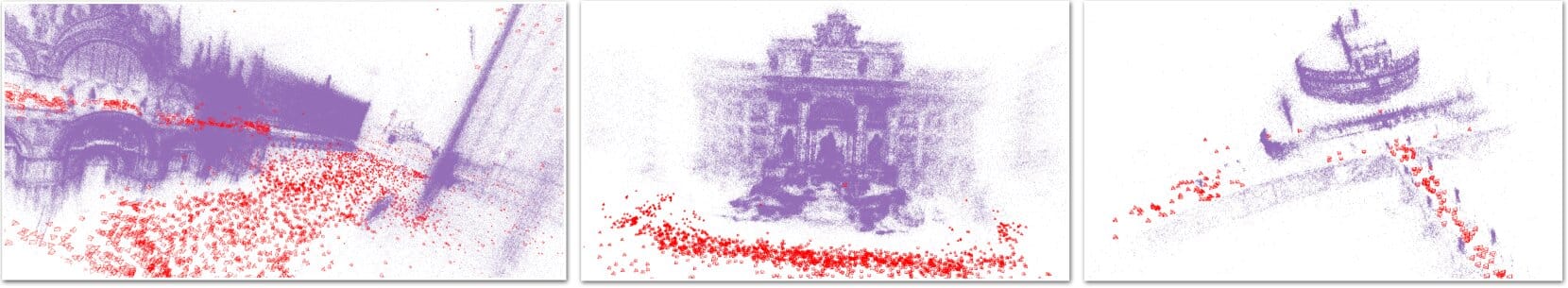

Imagine standing in the middle of a city and trying to create a perfect 3D map of it—except you can only look at photographs taken from different angles, and you have no idea where the cameras were or how far they were from each building. Most humans could use experience, instinct, and a bit of imagination to piece together a mental picture. Computers, however, struggle with that kind of spatial puzzle.

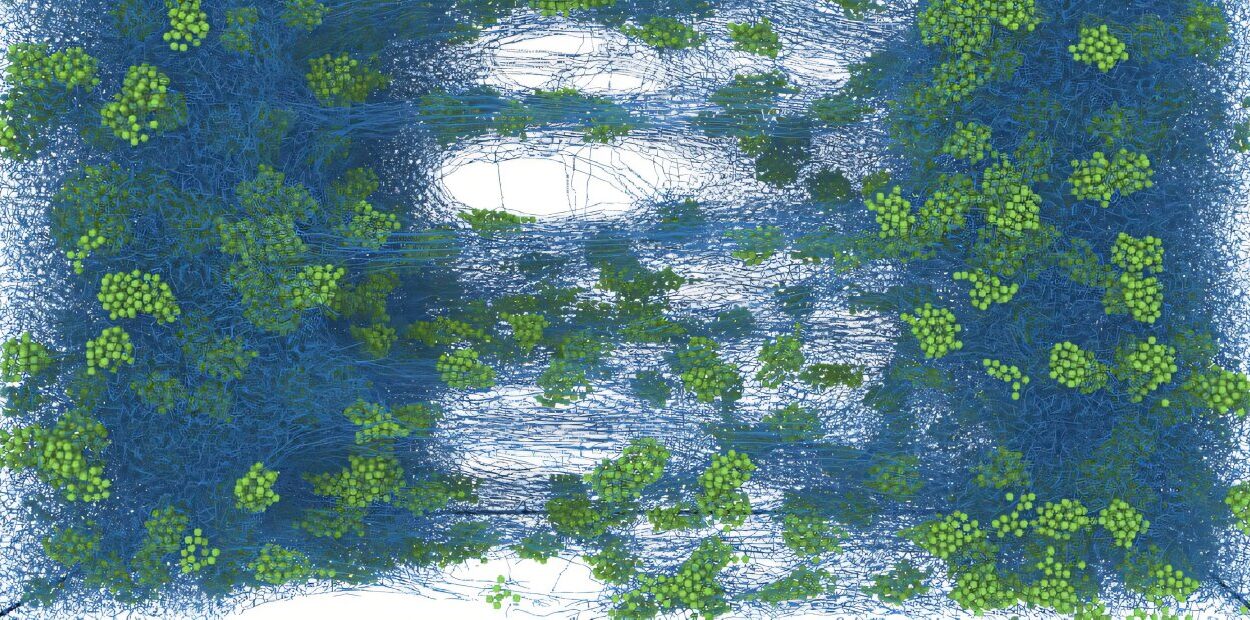

This problem—reconstructing accurate 3D scenes from ordinary 2D images—is one of the grand challenges in computer vision and robotics. It’s crucial for everything from self-driving cars navigating city streets to drones exploring disaster zones. To “see” in three dimensions, robots often build something called a 3D point cloud: a massive set of coordinates that represent the shape and position of every visible surface. But for machines, generating these point clouds from photos is a slow, error-prone process.

Traditional methods try to estimate depth one point at a time, adjusting and guessing until the picture makes sense. If the computer’s initial assumptions are wrong, or if the photos contain distortions, errors can snowball, producing warped or incomplete reconstructions. It’s like assembling a jigsaw puzzle piece by piece without knowing what the finished image looks like, hoping it all comes together in the end.

A Leap Forward in Robot Vision

Now, computer scientists at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have unveiled a groundbreaking approach that could change how machines perceive the world. Their new algorithm can reconstruct high-quality 3D scenes faster, more accurately, and without the fragile step-by-step guesswork that slows down older methods.

The research—described in the paper “Building Rome with Convex Optimization”—earned the Best Systems Paper Award in Memory of Seth Teller at the prestigious Robotics: Science and Systems Conference. It was authored by graduate student Haoyu Han and Heng Yang, assistant professor of electrical engineering at SEAS. The findings are available on the open-access platform arXiv.

All at Once, Not Bit by Bit

At the heart of their breakthrough is a clever union of two powerful tools: state-of-the-art AI depth prediction and convex numerical optimization.

Depth prediction algorithms can take a 2D image and make an educated guess about how far away each pixel is. But such predictions are still imperfect, like a sketch of a landscape that captures the overall scene but misses fine details. Convex optimization, on the other hand, is a mathematical technique for problems in which any locally best solution is also the globally best one, so a solver cannot get stuck in a misleading local minimum.
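To make the first ingredient concrete: once every pixel has a depth estimate, a photo can be lifted into 3D points. The sketch below assumes an ideal pinhole camera with known focal lengths `fx`, `fy` and principal point `cx`, `cy`. These parameters, the tiny 2×2 depth map, and the function name `backproject` are illustrative assumptions, not details taken from the paper.

```python
def backproject(depth, fx, fy, cx, cy):
    """Lift a per-pixel depth map into 3D points in the camera frame.

    Assumes an ideal pinhole camera: pixel (u, v) at depth z maps to
    x = (u - cx) * z / fx and y = (v - cy) * z / fy.
    """
    points = []
    for v, row in enumerate(depth):       # v indexes image rows
        for u, z in enumerate(row):       # u indexes image columns
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map (in meters): near surface on top, far below.
depth = [[2.0, 2.0],
         [4.0, 4.0]]
cloud = backproject(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)

for point in cloud:
    print(point)
```

If the predicted depths are slightly off, every point in this cloud is slightly off too, which is why a prediction alone is not enough for an accurate reconstruction.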

By merging these two techniques, Han and Yang’s method can estimate the positions of all points in a scene simultaneously, eliminating the need for incremental corrections. In other words, rather than painstakingly building a 3D model one point at a time, the algorithm solves the whole puzzle in one go.
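The difference between "bit by bit" and "all at once" can be illustrated with a deliberately tiny example. This is not the paper's formulation. It is a one-dimensional toy in which four points lie on a line, each measurement says roughly how far apart two points are, and the joint estimate is an ordinary linear least-squares problem (which is convex). The function names and the measurement values are made up for illustration.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def incremental_estimate(n_points, measurements):
    """Chain consecutive measurements one step at a time (errors add up)."""
    x = [0.0] * n_points
    for i, j, d in measurements:
        if j == i + 1:                 # only uses neighbor-to-neighbor links
            x[j] = x[i] + d
    return x


def joint_estimate(n_points, measurements):
    """Least-squares fit of all positions at once (point 0 fixed at 0)."""
    n = n_points - 1
    H = [[0.0] * n for _ in range(n)]  # normal-equation matrix
    g = [0.0] * n                      # normal-equation right-hand side
    for i, j, d in measurements:       # each measurement: x_j - x_i ~ d
        coeffs = {}
        if j > 0:
            coeffs[j - 1] = 1.0
        if i > 0:
            coeffs[i - 1] = -1.0
        for a, ca in coeffs.items():
            g[a] += ca * d
            for b, cb in coeffs.items():
                H[a][b] += ca * cb
    return [0.0] + solve_linear(H, g)


# True positions are 0, 1, 2, 3. Neighbor measurements are each 10% too
# long; one extra measurement links the first and last point directly.
measurements = [(0, 1, 1.1), (1, 2, 1.1), (2, 3, 1.1), (0, 3, 3.0)]

chained = incremental_estimate(4, measurements)
joint = joint_estimate(4, measurements)
print("incremental:", chained)
print("joint:      ", joint)
```

In this toy, the chained estimate places the last point about 0.3 units from its true position, while the joint solve, which weighs every measurement together, lands about 0.075 units away.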

“By combining AI depth prediction with a powerful new approach in convex numerical optimization, the method can estimate the positions of all points in a scene at once, with no need for step-by-step guesswork,” Han explained. “The reconstruction process is not only faster and more robust, but also free from the need for initial guesses by the computer.”

Speed, Accuracy, and Reliability

Early tests show that the Harvard method outperforms existing algorithms on both speed and quality. For robotics, this is more than just an efficiency upgrade—it’s a potential game-changer. In autonomous navigation, even a small boost in processing speed can mean the difference between a safe maneuver and a collision. In mapping and surveying, better accuracy means fewer costly mistakes in measurements.

The robustness of the method is also key. Because the algorithm doesn’t rely on an initial approximation, it is far less likely to spiral into the wrong solution when data is imperfect or incomplete—a common problem in real-world conditions where cameras might be obstructed, lighting is poor, or images are captured at awkward angles.
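Why a convex formulation removes the dependence on a starting guess can be seen in a one-variable toy. The two functions below are arbitrary illustrations, not anything from the paper: `(x - 3)**2` is convex and has a single minimum, while `(x**2 - 1)**2` is non-convex and has two. Plain gradient descent is used here only as a stand-in for an iterative solver.

```python
def gradient_descent(grad, x0, lr, steps):
    """Repeatedly step downhill from the starting guess x0."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x


def convex_grad(x):
    """Gradient of the convex function (x - 3)**2."""
    return 2.0 * (x - 3.0)


def nonconvex_grad(x):
    """Gradient of the non-convex function (x**2 - 1)**2."""
    return 4.0 * x * (x * x - 1.0)


# Convex case: two very different starting guesses, same answer.
convex_a = gradient_descent(convex_grad, x0=-10.0, lr=0.1, steps=200)
convex_b = gradient_descent(convex_grad, x0=10.0, lr=0.1, steps=200)

# Non-convex case: the answer depends on where the solver starts.
nonconvex_a = gradient_descent(nonconvex_grad, x0=-2.0, lr=0.01, steps=2000)
nonconvex_b = gradient_descent(nonconvex_grad, x0=2.0, lr=0.01, steps=2000)

print("convex:    ", round(convex_a, 6), round(convex_b, 6))
print("non-convex:", round(nonconvex_a, 6), round(nonconvex_b, 6))
```

Both convex runs settle at the same point near 3, whereas the non-convex runs end near -1 and +1 depending on the starting guess. A bad initial guess in a non-convex reconstruction problem can mislead the solver in the same way.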

A Future of Sharper Robot Vision

The potential applications are wide-ranging. Self-driving cars could use the technique to instantly model the streets around them. Search-and-rescue drones could build reliable 3D maps of collapsed buildings in seconds. Archaeologists could digitally reconstruct ancient ruins from just a few photographs taken on site. Even consumer devices, like smartphones and VR headsets, could gain sharper and more realistic 3D imaging capabilities.

While the work is still in the research stage, the recognition it has received suggests it’s already making waves in the robotics community. By enabling machines to “see” and understand their surroundings with greater clarity and speed, Han and Yang’s approach could open new frontiers for artificial intelligence, navigation, and spatial computing.

As the paper’s title playfully hints, building Rome, at least digitally, may soon take less than a day.

More information: Haoyu Han et al., Building Rome with Convex Optimization, arXiv (2025). DOI: 10.48550/arxiv.2502.04640