In the quiet hum of a laboratory, a robotic hand lies still on a bench, waiting. A gentle tap lands on its fingers, and they twitch ever so slightly. Another tap follows, then another, identical in pressure and rhythm. Soon the fingers stop reacting entirely. The stimulus has become familiar, unthreatening. The hand has learned not to care.

But then, something changes. A jolt of electricity accompanies the touch. Suddenly, the robotic fingers jerk back. A warning signal has been introduced, and now the same hand that ignored harmless sensations snaps to attention. It has “felt” pain. And what’s more—it has remembered.

This is not science fiction. This is the edge of neuroscience and robotics, and Korean researchers have just crossed a historic threshold in artificial intelligence.

Beyond Code: Giving Robots a Nervous System

While much of modern robotics has focused on making machines smarter through raw computation (faster processors, advanced algorithms, machine learning), there’s a limit to how much code can teach. At some point, true intelligence must go deeper. It must feel.

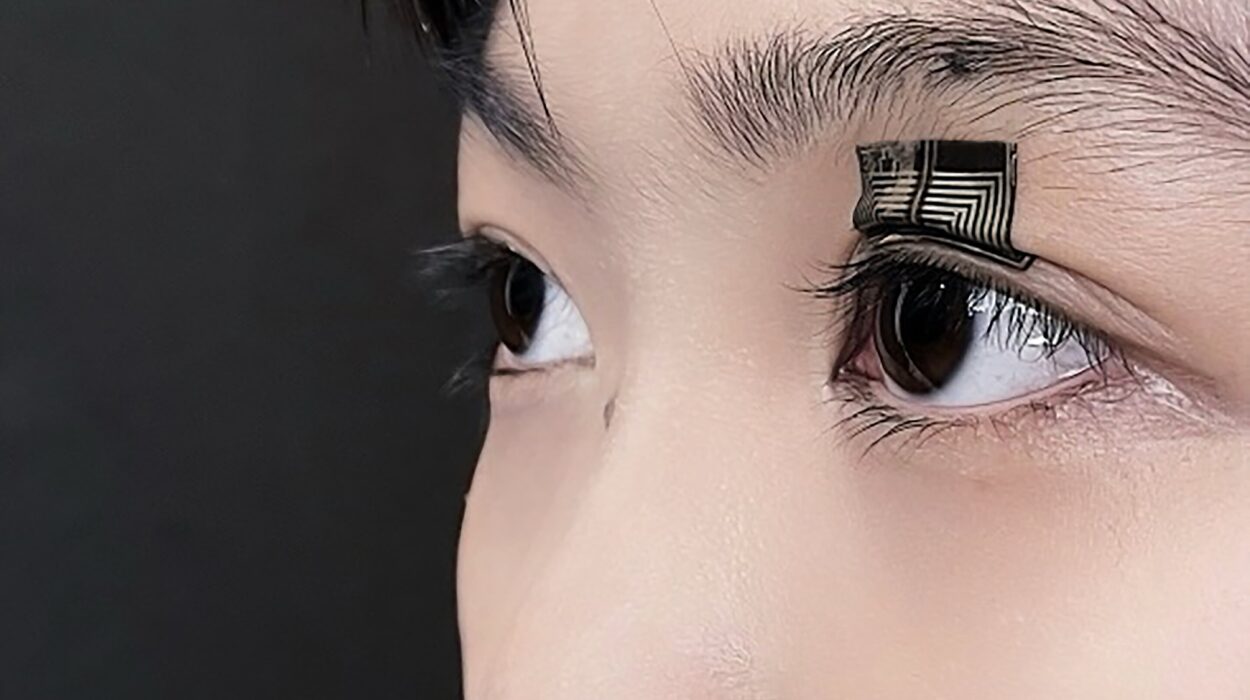

That’s exactly what researchers at KAIST (Korea Advanced Institute of Science and Technology) and Chungnam National University have achieved. Led by Endowed Chair Professor Shinhyun Choi and Professor Jongwon Lee, the joint team has developed a neuromorphic, semiconductor-based artificial sensory nervous system. It’s the first of its kind in Korea—and one of the first in the world to mimic the dynamic, self-regulating behaviors of biological nervous systems in such a compact and energy-efficient way.

Published in Nature Communications, this work doesn’t just introduce a new tool for robotics. It proposes a new way of thinking about how machines interact with the world.

Why Sensory Intelligence Matters

Think about how you experience the world. You don’t notice the clothes on your skin after a few minutes, or the hum of a ceiling fan. But your mind is always scanning, alert for new or threatening sensations—a scream, a burn, the sudden sting of cold. This selective awareness is what keeps us from sensory overload, while still keeping us safe.

Biologists call these two behaviors habituation and sensitization. They’re foundational to how living creatures—mice, birds, humans—manage energy and attention. They allow organisms to ignore what’s safe and predictable, and to sharply focus on what’s important.

For decades, roboticists have dreamed of giving machines this kind of intelligent responsiveness. The idea is simple: create robots that react like we do. The problem? The complexity of our nervous systems has made it almost impossible to replicate—especially in ways that are miniaturized, efficient, and fast.

A New Brain in a Single Device

Enter the memristor.

Often hailed as the building block of future brain-like computers, a memristor is a tiny electrical component that can “remember” the amount of charge that has passed through it. It can store analog information—like the strength of a signal—much like how synapses in our brains adjust based on experience.

But until now, memristors had a limitation. Their conductivity could only change monotonically, either steadily increasing or steadily decreasing. That meant they could simulate simple memory, but not complex adaptive behaviors like becoming desensitized to safe stimuli or becoming hyperaware of danger.

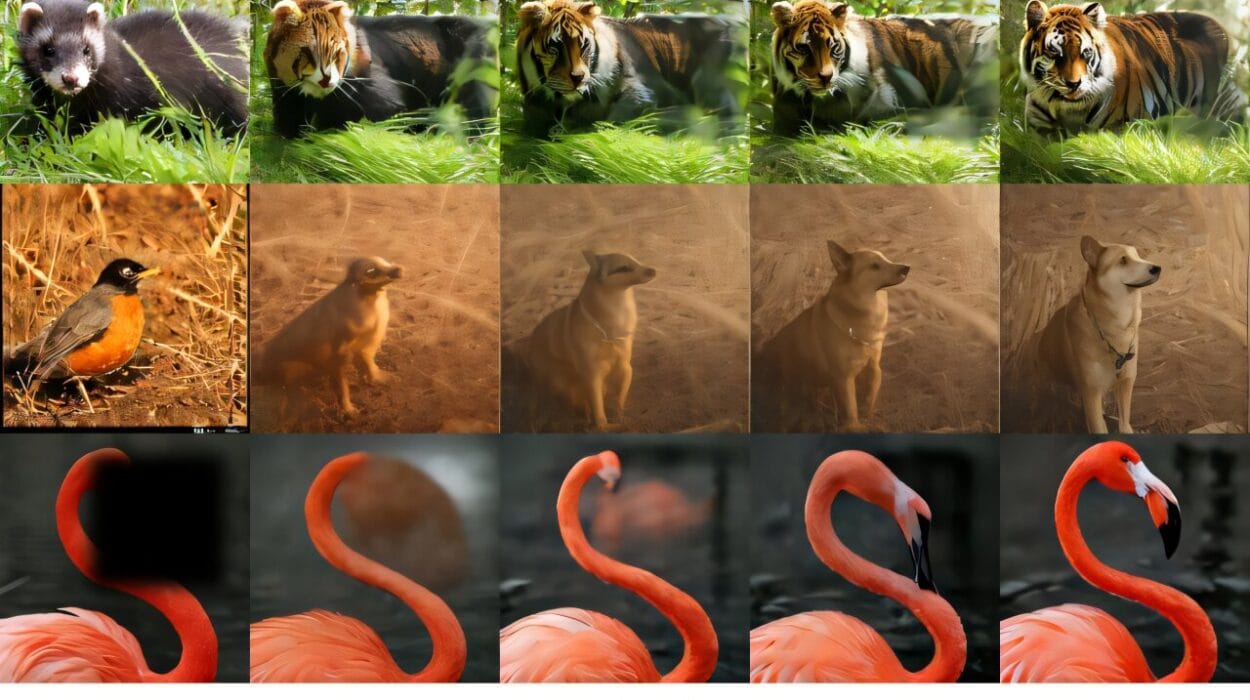

The Korean research team solved this by engineering a dual-layered memristor, in which conductivity could change in opposite directions depending on the nature of the input. When repeated safe stimuli were delivered, the memristor adapted by reducing its response—mimicking habituation. But when an electric “danger” signal was introduced, the same device could suddenly increase its responsiveness, mimicking sensitization.

In essence, a single tiny component was behaving like part of a living nervous system.
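
To make the two behaviors concrete, here is a minimal toy simulation in Python. It is not the team's device model: the class name, the parameters, and the update rule are all illustrative assumptions, chosen only to show a response that fades under repeated safe touches and jumps when a danger signal arrives.

```python
from dataclasses import dataclass


@dataclass
class ToySensoryUnit:
    """Toy stand-in for one adaptive sensing element.

    Every name and number here is a hypothetical illustration,
    not a parameter from the published device.
    """
    habituation: float = 0.0          # 0 = fresh, approaches 1 with repeated touches
    sensitization: float = 0.0        # extra response added after a danger signal
    habituation_rate: float = 0.3     # assumed: how fast repeated touches are tuned out
    danger_boost: float = 1.5         # assumed: response added by one danger signal
    sensitization_decay: float = 0.8  # assumed: fraction of the boost kept per touch

    def touch(self, danger: bool = False) -> float:
        """Apply one touch and return the response strength."""
        if danger:
            self.sensitization += self.danger_boost
        response = (1.0 - self.habituation) + self.sensitization
        # Each touch moves the unit a step closer to fully habituated ...
        self.habituation += self.habituation_rate * (1.0 - self.habituation)
        # ... while any sensitization slowly fades.
        self.sensitization *= self.sensitization_decay
        return response


def run_trial() -> list[float]:
    """Five harmless touches, one touch paired with danger, two harmless touches."""
    unit = ToySensoryUnit()
    pattern = [False] * 5 + [True] + [False] * 2
    return [unit.touch(danger=d) for d in pattern]


if __name__ == "__main__":
    for i, r in enumerate(run_trial(), start=1):
        print(f"touch {i}: response {r:.2f}")
```

In this sketch the response shrinks over the first five touches, jumps above its starting level on the sixth, and stays elevated for the touches that follow, even though those are harmless again. In the real device, by contrast, this behavior arises from the physics of the memristor rather than from code.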

Touch and Pain—In a Robot’s World

To test their innovation, the team integrated the artificial nervous system into a robotic hand. Then they began to apply tactile stimuli.

At first, when the hand was touched, it responded quickly, its electronic nerves firing in recognition of the new sensation. But with repetition, the hand began to “ignore” the same touch. Like human skin adapting to pressure, it learned to tune out familiar sensations. This wasn’t due to software reprogramming: the behavior was physically embedded in the neuromorphic hardware itself.

Then the researchers added a twist—an electric jolt paired with the touch.

The response was immediate and dramatic. The hand’s reactions spiked. The system was now “aware” that the same location, once harmless, could become dangerous. It had adapted again. This transition, from calm to alert, from desensitized to hyper-aware, closely mirrored how real nervous systems behave.

And all of it happened without traditional processors, complex coding, or layered software instructions.

Revolution on the Smallest Scale

Why does this matter?

Because it opens the door to ultra-small, ultra-efficient intelligent robots that can feel, respond, and adapt—all on their own. For fields like medical robotics, where robotic prosthetics need to interact intimately with human skin and muscle, this breakthrough is transformative. A robotic arm that can stop reacting to a shirt sleeve but immediately detect a pinprick? That’s no longer fantasy.

For military robotics, it means machines that can ignore environmental noise until something abnormal—a sudden movement, a sharp sound—triggers alertness. For space and rescue missions, where robots must rely on minimal power and make decisions quickly without human guidance, these systems offer a survival advantage.

And perhaps most profoundly, it brings us closer to developing robotic limbs and prosthetics that truly feel—devices that don’t just detect pressure, but know when to care about it.

Intelligence Without a Brain

What’s truly groundbreaking is that all of this is happening at the hardware level. These are machines that don’t need to “think” in the way traditional computers do. They feel, and then they react, all through physical processes in synthetic neurons.

Researcher See-On Park of KAIST summarized the vision simply but powerfully: “By mimicking the human sensory nervous system with next-generation semiconductors, we have opened up the possibility of implementing a new concept of robots that are smarter and more energy-efficient in responding to external environments.”

This represents a shift not just in robotics, but in how we understand intelligence itself. Intelligence, this research suggests, doesn’t always require high-level reasoning or massive computational power. Sometimes, it’s the ability to ignore. To focus. To remember danger. To forget what doesn’t matter. And to do it all in real time, without being told.

From Machines That Compute to Machines That Feel

The evolution of robotics has always followed a dream: to create machines that live and move among us, not as tools, but as companions, caregivers, explorers—extensions of ourselves. That dream has often stumbled on the cold reality that most machines can’t understand context. They can see, but not interpret. They can move, but not adapt.

But what KAIST and Chungnam National University have developed changes the paradigm. These are not robots that respond because they are told to. These are robots that respond because they’ve learned to.

And in that shift—from instruction to adaptation—we glimpse a future where machines don’t just follow orders, but sense meaning in the world around them.

Touch is no longer just a signal. It’s a story. And at long last, machines are learning how to read it.

Reference: See-On Park et al., “Experimental demonstration of third-order memristor-based artificial sensory nervous system for neuro-inspired robotics,” Nature Communications (2025). DOI: 10.1038/s41467-025-60818-x