Imagine sitting at a table and reaching for your morning cup of coffee. You don’t think twice—you see the cup, you sense its distance, and the moment your fingers brush against it, you instantly adjust your grip. Multiple senses—sight, touch, and even balance—work together seamlessly to guide this seemingly simple action. For humans, this is effortless. For machines, however, it has been a monumental challenge.

In the world of artificial intelligence (AI) and robotics, replicating human-like perception is one of the most elusive frontiers. Robots can see through cameras, and they can move with mechanical precision, but integrating senses the way humans do—especially the critical sense of touch—has remained largely out of reach. Until now.

When Robots Learn to Combine Sight and Touch

An international team of researchers has developed a new approach that allows robotic systems to integrate both visual and tactile information when manipulating objects. This marks a significant shift in physical AI, where robots don’t just “see” but can also “feel” their environment, responding adaptively in real time.

Unlike conventional vision-based systems, which rely almost entirely on cameras and image recognition, this multimodal method merges sight with tactile sensing. By doing so, robots can understand more about the texture, shape, and usability of objects, qualities that are difficult to judge from sight alone. According to the researchers, the result is a higher success rate on complex manipulation tasks than vision-only approaches achieve, bringing machines one step closer to moving and interacting with the world as intuitively as humans do.

The team’s findings were published in 2025 in IEEE Robotics and Automation Letters, a peer-reviewed robotics journal.

Building on Human Movement

To give robots more human-like dexterity, researchers have long turned to machine learning. By analyzing and learning from human movement patterns, robots can begin to mimic our ability to carry out everyday tasks—cooking, cleaning, organizing, and even assisting with healthcare.

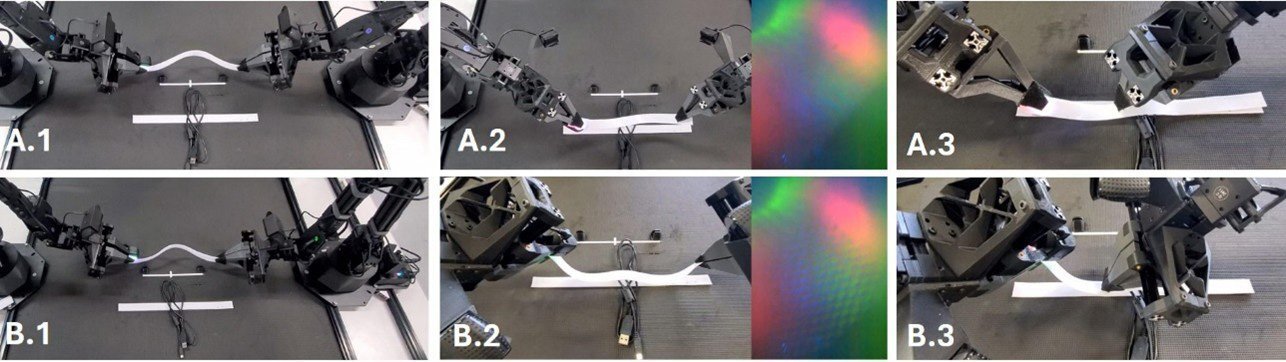

A key example is ALOHA (A Low-cost Open-source Hardware System for Bimanual Teleoperation), developed by Stanford University. This system enables remote operation and learning for dual-arm robots, making it affordable and adaptable for different purposes. Both its hardware and software are open source, giving researchers worldwide a foundation on which to innovate further.

But systems like ALOHA still faced a glaring limitation: they depended almost entirely on visual information. Vision is powerful, but it can be misleading or insufficient. Consider Velcro—without touch, distinguishing its front from its back is nearly impossible. The same is true for zip ties, fabrics, or any object where texture defines function. Humans instantly recognize these differences through touch, but robots without tactile input are left guessing.

Introducing TactileAloha

To address this challenge, Professor Mitsuhiro Hayashibe and his colleagues at Tohoku University, alongside collaborators in Hong Kong, created a system that marries tactile sensing with visual processing. They called it TactileAloha.

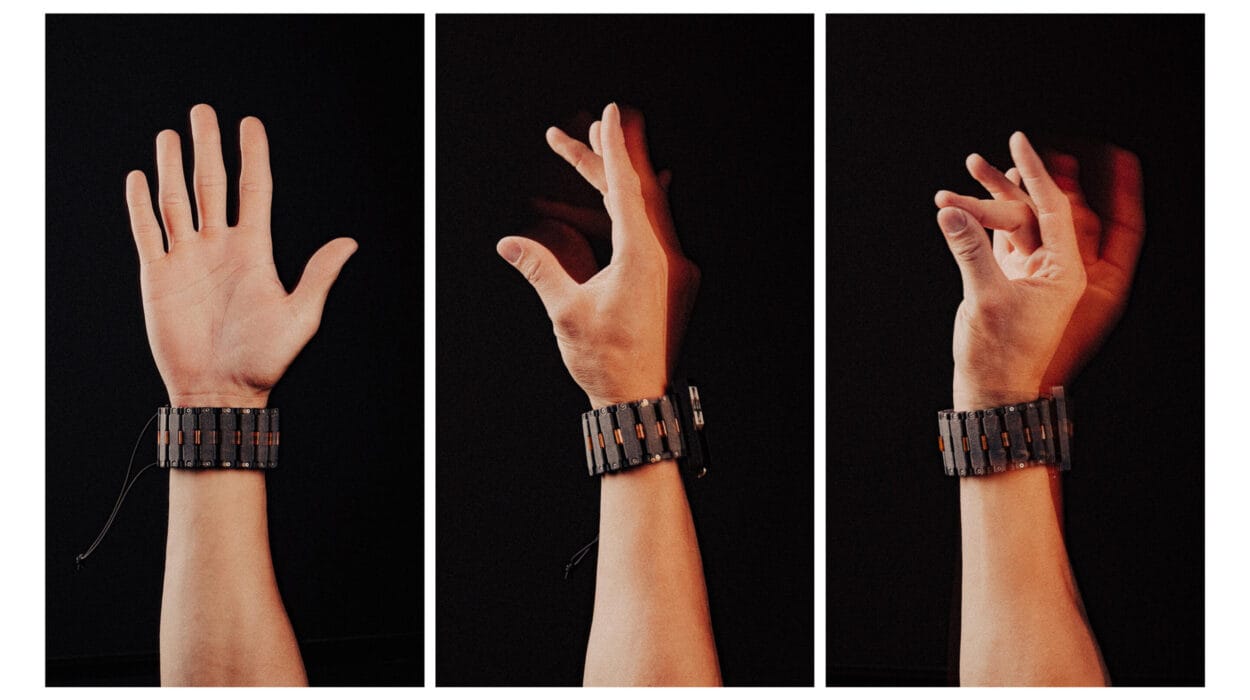

TactileAloha enhances dual-arm robots with the ability not only to see objects but also to feel them, interpreting textures and material properties that are invisible to the eye. The researchers applied a vision-tactile transformer, an AI model designed to integrate and interpret multiple sensory streams simultaneously. This allowed the robot to adapt its movements in ways that purely vision-based systems could not.
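To make the idea of integrating sensory streams more concrete, here is a minimal sketch of one common way a transformer can combine modalities: vision and tactile features are tagged by modality, joined into a single token sequence, and passed through self-attention so every token can draw on every other. This is an illustration under stated assumptions, not the TactileAloha implementation. The feature values, the `fuse` and `self_attention` helpers, and the identity projections (no learned weights) are all hypothetical simplifications.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(tokens):
    """Single-head self-attention with identity projections.

    A real model would learn query/key/value weights; they are
    omitted here to keep the sketch short (an assumption).
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    outputs, weights = [], []
    for query in tokens:
        row = softmax([dot(query, key) / scale for key in tokens])
        mixed = [sum(w * tok[d] for w, tok in zip(row, tokens)) for d in range(dim)]
        outputs.append(mixed)
        weights.append(row)
    return outputs, weights


def fuse(vision_tokens, tactile_tokens):
    """Tag each token with its modality, join both streams, attend across them."""
    tagged = [tok + [1.0, 0.0] for tok in vision_tokens]
    tagged += [tok + [0.0, 1.0] for tok in tactile_tokens]
    return self_attention(tagged)


# Hypothetical feature vectors, e.g. outputs of separate image and touch encoders.
vision_tokens = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
tactile_tokens = [[0.1, 0.9, 0.7]]

fused, weights = fuse(vision_tokens, tactile_tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the joint sequence, so a vision token's output can be shaped by the tactile reading and vice versa. In a full system, fused features like these would feed a policy that outputs arm motions.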

When tested on tasks requiring fine tactile judgment—such as handling Velcro or fastening zip ties—the robot demonstrated remarkable adaptability. It could distinguish front from back, recognize adhesiveness, and adjust its grip accordingly. These results highlight the promise of multimodal physical AI in solving real-world problems where sight alone falls short.

Beyond Vision: Toward a Truly Multisensory AI

The implications of this research are profound. Just as humans rely on a symphony of senses—sight, touch, hearing, even smell—future robots may need to do the same to function naturally in human environments. Professor Hayashibe explains, “This achievement represents an important step toward realizing a multimodal physical AI that integrates and processes multiple senses such as vision, hearing, and touch—just like we do.”

This shift from single-sense to multisensory AI represents a fundamental change in robotics. It moves machines from rigid, pre-programmed motions toward adaptive, intuitive behaviors. With tactile input, robots can better handle uncertainty, adjust to unpredictable environments, and collaborate more effectively with humans.

Practical Applications on the Horizon

The possibilities unlocked by TactileAloha and similar innovations are vast. In the near future, robots equipped with multimodal physical AI could support caregiving by helping elderly individuals without causing harm, and could handle fragile objects such as glassware with care. They could work in kitchens, feeling the texture of food to prepare meals more accurately. In manufacturing, they could manipulate complex materials, improving both efficiency and safety.

Beyond domestic and industrial settings, such robots could play critical roles in healthcare, disaster response, and space exploration—fields where adaptability and precision are essential. Imagine a robot surgeon that not only sees the tissue but feels its resistance, or a rescue robot that navigates debris by sensing surfaces, much like a human firefighter would.

A Step Toward Seamless Integration

The development of TactileAloha demonstrates that combining vision and touch is not just possible but highly effective. The research, carried out by teams from Tohoku University’s Graduate School of Engineering, the Center for Transformative Garment Production at Hong Kong Science Park, and the University of Hong Kong, shows what can be achieved when interdisciplinary expertise comes together.

As robotics progresses, these multimodal systems bring us closer to a future where robots integrate seamlessly into daily life—not as cold machines, but as responsive, adaptable helpers. They will not only follow commands but also interpret and respond to their surroundings with nuance, much like a human companion.

Toward Robots That Truly Understand

What began as a thought experiment about reaching for a cup of coffee leads to a scientific milestone. The leap from sight-only systems to vision-and-touch integration represents a turning point in physical AI. It shifts robotics from simple mechanical execution to embodied perception: machines that can see, feel, and respond in harmony.

As we look to the future, the dream of robots that truly understand their environment is no longer confined to science fiction. Thanks to breakthroughs like TactileAloha, that dream is rapidly becoming reality. And perhaps one day soon, when a robot hands you your morning coffee, it will do so with the same effortless precision—and human-like grace—that you take for granted.

More information: Ningquan Gu et al., TactileAloha: Learning Bimanual Manipulation With Tactile Sensing, IEEE Robotics and Automation Letters (2025). DOI: 10.1109/LRA.2025.3585396