Artificial intelligence is no longer a futuristic dream—it is woven into the very fabric of modern life. From diagnosing diseases in hospitals to stopping fraudulent transactions in banks, AI has become a trusted partner in decision-making. But what if the very machines powering these systems could be tricked into self-destruction, collapsing in accuracy from sharp brilliance to near-total confusion?

That chilling possibility has been uncovered by a team of computer scientists at the University of Toronto, who revealed that a well-known memory attack once thought to threaten only the memory attached to traditional processors can also corrupt the memory on the graphics processing units (GPUs) that drive today’s AI revolution. Their discovery, presented at the prestigious USENIX Security Symposium 2025, has shaken assumptions about the safety of the hardware running our most powerful models.

From CPUs to GPUs: An Attack Evolves

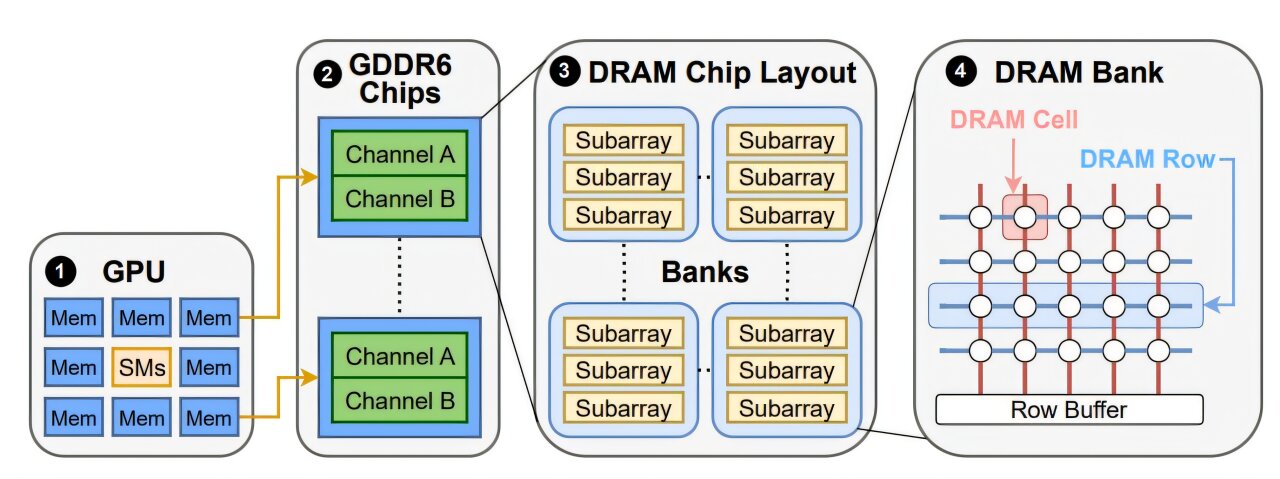

The vulnerability stems from an attack called Rowhammer. For years, security researchers have known that by repeatedly hammering—rapidly accessing—specific rows of memory cells, they could cause electrical disturbances in adjacent cells. These disturbances, in turn, flip bits: microscopic units of data representing 0s and 1s. Even a single flipped bit can rewrite information in ways that bypass software defenses, opening doors to manipulation or takeover of a system.

Until recently, Rowhammer was thought to be a problem only for the memory attached to central processing units (CPUs). But the Toronto researchers, led by assistant professor Gururaj Saileshwar, have shown that GPUs, which are equipped with high-speed graphics double data rate (GDDR) memory, are not immune. GDDR memory, prized for its ability to shuttle massive amounts of data quickly, is standard in the graphics cards that fuel both gaming and machine learning. Yet in the wrong hands, this strength may also be its Achilles’ heel.

“Catastrophic Brain Damage” for AI

The team demonstrated that a carefully executed Rowhammer-style attack on GPUs could devastate AI models. By flipping just a single bit in the exponent of a neural network weight (the part of a floating-point number that sets its magnitude), they reduced a model’s accuracy from a respectable 80% to a disastrous 0.1%.
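To see why one exponent bit matters so much, consider a minimal Python sketch. It assumes the weight is stored as a 32-bit IEEE 754 float (the article does not say which number format the attacked models used), and the helper name `flip_bit` is purely illustrative, not something taken from the researchers' code.

```python
import struct


def flip_bit(value: float, bit: int) -> float:
    """Return `value`, stored as a float32, with one bit inverted.

    Bit 0 is the least significant mantissa bit, bits 23-30 hold the
    exponent, and bit 31 is the sign (standard IEEE 754 single precision).
    """
    # Reinterpret the float32 bytes as an unsigned 32-bit integer.
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    # Invert the chosen bit, then reinterpret the result as a float32.
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


weight = 0.5  # hypothetical weight, chosen only for illustration

# A flip in the lowest mantissa bit changes the weight by about 6e-8.
print(flip_bit(weight, 0))

# A flip in the top exponent bit turns 0.5 into 2**127, roughly 1.7e38.
print(flip_bit(weight, 30))
```

A mantissa flip nudges the weight by a negligible amount, while a flip in the highest exponent bit inflates it by dozens of orders of magnitude. A value that large can swamp every other term in the calculations that depend on it, which is consistent with the kind of accuracy collapse the team reported.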

Saileshwar vividly described this collapse as “catastrophic brain damage” for AI. The phrase captures the stakes clearly: an AI system trained to spot tumors in X-rays or detect suspicious banking activity could suddenly become useless, even dangerous, if compromised at the hardware level.

The implications stretch far beyond academic curiosity. Hospitals, financial institutions, and governments are increasingly relying on GPU-powered AI. If attackers were able to quietly corrupt these models, the consequences could ripple across industries, eroding trust and exposing vulnerabilities in systems once thought robust.

Hammering in the Dark

Executing the attack on GPUs was no easy feat. Unlike CPUs, where researchers can observe how instructions flow between processor and memory, GPUs are far more opaque. Their memory chips are soldered directly onto the board, leaving little room for inspection.

“Hammering on GPUs is like hammering blind,” Saileshwar admitted. The team, which included Ph.D. student Chris (Shaopeng) Lin and undergraduate researcher Joyce Qu, nearly abandoned the project after repeated failures to trigger bit flips.

But persistence paid off. By exploiting the natural parallelism of GPUs—their ability to run thousands of operations at once—the researchers optimized their hammering patterns. This breakthrough finally triggered the elusive flips, proving that GPU memory could indeed be corrupted by the Rowhammer technique.

NVIDIA Responds

Earlier this year, the researchers privately disclosed their findings to NVIDIA, the world’s most valuable semiconductor company and a dominant force in GPU design. By July, NVIDIA issued a security notice to its customers.

The company recommended enabling error correction code (ECC), a protective feature that can catch and correct bit flips. However, there’s a trade-off: ECC reduces performance, slowing down demanding machine learning tasks by up to 10%. For AI developers chasing speed and efficiency, that performance hit could be painful.

Worse still, the Toronto team warned that ECC might not be a silver bullet. While it can handle isolated bit flips, more sophisticated attacks generating multiple flips could overwhelm its defenses. The specter of future attacks remains.

Cloud Computing: The Weakest Link

Who should worry most about GPUHammer, as the team has named its attack? According to the researchers, the risk is greatest in cloud environments. Unlike home users running games or small models on personal graphics cards, cloud providers host GPUs that serve multiple users simultaneously.

This multi-tenant setup creates a dangerous opportunity: one user could deliberately trigger bit flips that corrupt the computations of another, tampering with AI models without ever touching their code or data directly. In a world where companies rent GPU power for everything from drug discovery to financial forecasting, such an attack could undermine entire industries.

A New Frontier of Hardware Security

The GPUHammer discovery is a sobering reminder that security cannot be confined to software alone. “Traditionally, security has been thought of at the software layer,” Saileshwar explained, “but we’re increasingly seeing physical effects at the hardware layer that can be leveraged as vulnerabilities.”

It’s a shift that demands a new mindset. As AI workloads grow in complexity and value, adversaries will search deeper, probing not only for flaws in algorithms but for cracks in the silicon itself. Hardware once treated as reliable and invisible may now be the battlefield where the future of AI security is decided.

Beyond the Bit Flips

The work by Saileshwar and his students underscores an essential truth: AI is only as trustworthy as the systems it runs on. A single flipped bit in a neural network weight is not just a technical curiosity—it is a reminder that intelligence, whether biological or artificial, is fragile.

For now, enabling ECC and strengthening memory protections are the best available defenses. But the researchers emphasize that their work is only the beginning. As GPUs evolve and new architectures emerge, further investigations are needed to expose and mitigate vulnerabilities before malicious actors exploit them.

“More investigation will probably reveal more issues,” Saileshwar warned. “And that’s important, because we’re running incredibly valuable workloads on GPUs. AI models are being used in real-world settings like health care, finance and cybersecurity. If there are vulnerabilities that allow attackers to tamper with those models at the hardware level, we need to find them before they’re exploited.”

The Fragility of Trust

The Toronto team’s findings remind us that technology, for all its power, rests on delicate foundations. In the rush to build ever-faster AI, the silent risks embedded in hardware can be overlooked. But trust in AI depends not only on clever algorithms and vast data but also on the stability of the machines that carry them.

A single rogue bit, flipped in the right place, can reduce intelligence to nonsense. The discovery of GPUHammer is a warning flare for an AI-driven world: the deeper we depend on machines to think for us, the more carefully we must guard the hidden layers that make that thinking possible.

More information: GPUHammer: Rowhammer Attacks on GPU Memories are Practical. gururaj-s.github.io/assets/pdf/SEC25_GPUHammer.pdf