In a quiet radiology suite at the Mayo Clinic, the hum of machines merges with the soft rustle of white coats. The room smells faintly of antiseptic and warm electronics. On a glowing monitor, the black-and-white image of a woman’s lungs spreads like wings across the screen. Tiny threads of tissue and airways swirl in delicate fractals.

To the naked eye, even the well-trained eye of a seasoned radiologist, the scan appears largely unremarkable. No glaring tumors. No dramatic shadows. But the AI model sees differently. Like a digital oracle, it parses pixel patterns too subtle for humans to notice, its algorithms trained on millions of images. After a brief pause, the software flashes a quiet alert:

Early stage adenocarcinoma suspected.

The tumor, no larger than a grain of rice, hides in a forest of healthy tissue. Without the AI’s subtle pattern recognition, it might have evaded detection for months—until it grew into something monstrous.

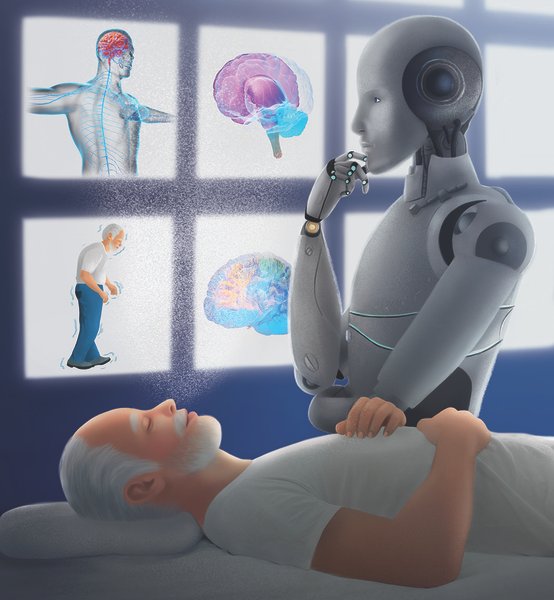

This is the age we live in now: a world where machines with no heartbeat or soul are saving lives in ways that feel almost magical. Artificial intelligence is stepping into the clinical spotlight—not to replace doctors, but to reveal secrets the human eye cannot see.

The Limits of Human Eyes

Throughout history, medicine has depended on human observation. The physician’s gaze, trained over decades, has been the ultimate diagnostic tool. Hippocrates, the father of medicine, wrote about studying a patient’s complexion and pulse. William Osler, a titan of modern medicine, declared:

“Listen to your patient; he is telling you the diagnosis.”

Yet human perception is fallible. Doctors grow tired after twelve-hour shifts. Their eyes blur from reading hundreds of scans. Cognitive biases can creep in—a doctor might dismiss a subtle finding because the patient seems healthy, or anchor on an initial impression and overlook new clues.

Even the best radiologists miss small cancers on chest X-rays an estimated 20 to 30 percent of the time, particularly when tumors overlap with bones or blood vessels. Dermatologists disagree on whether a mole is benign or malignant in about one in five cases. Pathologists examining biopsies can diverge in their interpretations, especially with borderline findings.

These are not failures of intelligence, but of biology. The human brain, marvelous as it is, was sculpted by evolution to hunt game on the savannah, not decode radiographic noise. Fatigue, stress, distraction—these human factors open dangerous gaps where disease can slip through unseen.

And in those gaps, lives hang in the balance.

The Digital Apprentices

Into this fragile ecosystem steps artificial intelligence. It is not a single technology but a constellation of methods—deep learning, neural networks, natural language processing—all designed to recognize patterns and extract meaning from oceans of data.

Imagine an AI model fed with millions of mammograms. It learns to distinguish benign lumps from malignant tumors, finding pixel patterns so subtle even expert eyes miss them. Or an AI that scans electronic health records, flagging patients at risk for sepsis hours before symptoms become obvious.

These machines become digital apprentices to doctors—tireless, objective, unfazed by fatigue or cognitive bias. They do not daydream. They do not argue with their spouses the night before a shift. Their only loyalty is to the truth hidden in the data.

Yet this is not the vision of robots replacing physicians. The future of medicine lies in collaboration—a powerful synergy where human intuition and empathy blend with machine precision.

Learning to See the Invisible

At Stanford University, a team of scientists embarked on a radical project. They trained a deep learning algorithm on more than 129,000 skin images spanning over 2,000 diseases. The question was deceptively simple: Could a machine match or exceed dermatologists in diagnosing skin cancer?

When tested against board-certified dermatologists, the AI matched them almost exactly in accuracy. The machine could distinguish between harmless moles and deadly melanomas, even on ambiguous images. In many cases, it caught subtle features the human eye overlooked.

Consider a patient with a flat, reddish patch on his arm. To an untrained observer, it looks harmless—perhaps a rash or insect bite. But the AI picks up asymmetrical borders and a pattern of colors invisible to human perception. Its diagnosis: melanoma in situ, the earliest stage of skin cancer. Thanks to early detection, the patient’s life trajectory changes. A simple excision removes the cancer before it spreads.

This is not an isolated case. Across medicine, AI is learning to detect the invisible:

- Tiny lung nodules in CT scans that might be the earliest sign of lung cancer.

- Microscopic blood vessel changes in retinal photos that hint at diabetes or hypertension.

- Abnormal heart rhythms hiding within the chaos of EKG signals.

- Molecular signatures in pathology slides predicting how aggressive a tumor might be.

Each revelation carries profound consequences. An early diagnosis can mean the difference between a minor procedure and a terminal disease. Between a long life and a eulogy.

The Music of Data

At the heart of AI’s power lies data—the raw material from which machines learn. Data in medicine is abundant yet chaotic. Scans, lab tests, doctor’s notes, genetic sequences, vital signs—all captured across fragmented systems.

For centuries, medical knowledge resided in human minds and paper charts. Now, in the digital era, data flows like music. Hospitals generate terabytes of images each year. Genomic sequencing spills out billions of DNA letters. Electronic health records collect every heartbeat, every prescription, every allergy.

But human beings cannot absorb data at this scale. No doctor can memorize millions of images or parse gigabytes of genetic code while juggling patients and paperwork. Here, AI finds its niche.

Consider pathology slides scanned at high resolution. A single slide can be a gigabyte of data. A pathologist might examine a few sections under the microscope, scanning fields of view one at a time. AI can analyze every pixel, searching for hidden clusters of malignant cells.

In radiology, AI compares a patient’s current scan with thousands of prior scans from similar patients, spotting subtle changes invisible to human perception.

In genomics, AI finds patterns linking DNA mutations to treatment outcomes. It predicts which patients might respond to immunotherapy, sparing them toxic treatments that might fail.

The music of data becomes the language of prediction. And in that song, diseases whisper their secrets.

A Second Set of Eyes

In a busy emergency department in London, a man arrives short of breath. His chest X-ray looks mostly normal. The junior doctor, glancing quickly, sees no obvious crisis. Yet the hospital’s AI system quietly flags the scan: “possible pneumothorax.”

A senior radiologist reviews the case and realizes the AI is correct. A thin sliver of air has leaked between the lung and chest wall—a potentially life-threatening condition. The patient receives immediate treatment.

This is the future unfolding in real time: AI as a second set of eyes. It’s not that doctors are incompetent. They’re overwhelmed. Emergency rooms overflow. Radiologists read hundreds of scans daily. In this tide of information, even skilled doctors miss subtle clues.

AI does not replace human judgment. It augments it. Several studies suggest that doctors paired with AI can perform better than either alone. The machine offers relentless vigilance; the human brings empathy, context, and experience.

Yet the relationship is complex. Doctors sometimes mistrust AI suggestions, especially when the reasoning is opaque. AI systems can produce false positives, leading to unnecessary biopsies or anxiety. The challenge lies in forging a partnership where human and machine respect each other’s strengths—and cover each other’s blind spots.
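Why do false positives pile up even when a tool is accurate? A little arithmetic makes the point. The sketch below applies Bayes' rule to a hypothetical screening tool; the sensitivity, specificity, and prevalence figures are invented for illustration and do not describe any real system.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that a flagged scan truly shows disease (Bayes' rule)."""
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# Hypothetical numbers, chosen only to illustrate the effect of low prevalence.
ppv = positive_predictive_value(sensitivity=0.95, specificity=0.90, prevalence=0.01)
print(f"Share of alerts that are real: {ppv:.1%}")
```

Under these assumed numbers, fewer than one alert in ten points to real disease, because healthy patients vastly outnumber sick ones. That is why a flagged scan is a prompt for a second look rather than a verdict.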

The Black Box Problem

One of the great paradoxes of AI is that its brilliance comes paired with opacity. Machine learning models, especially deep neural networks, are often “black boxes.” They deliver predictions but cannot easily explain how they arrived at them.

This frustrates doctors trained to demand reasoning. A radiologist might ask:

“Why does the AI say this lung scan shows early cancer? What features did it see?”

In many cases, the AI cannot answer in human terms. It recognizes complex pixel patterns but cannot translate them into anatomical landmarks or medical language.

This “black box problem” creates tension. Doctors are legally and ethically responsible for diagnoses. If an AI makes an error, who bears accountability? The manufacturer? The hospital? The physician who accepted the AI’s recommendation?

Regulators like the FDA are grappling with these questions. New AI tools are increasingly expected to offer “explainability”: ways to show doctors which features influenced the machine’s decision. Heatmaps highlight suspicious areas on images. Charts show which lab results swayed the prediction.
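One common way to build such a heatmap is occlusion sensitivity: cover one patch of the image at a time and watch how much the model's score falls. The sketch below is a minimal illustration of that idea, not the method of any particular product. The `toy_score` function is a hypothetical stand-in for a trained model, and the 4-by-4 "scan" is made up.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_heatmap(image: Image, score: Callable[[Image], float], patch: int = 2) -> Image:
    """Mask one patch at a time and record how far the model's score drops."""
    height, width = len(image), len(image[0])
    baseline = score(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            masked = [row[:] for row in image]  # copy so the original is untouched
            for r in rows:
                for c in cols:
                    masked[r][c] = 0.0
            drop = baseline - score(masked)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop  # a large drop means the patch mattered
    return heat


def toy_score(image: Image) -> float:
    """Hypothetical stand-in for a trained model: reacts to the brightest pixel."""
    return max(max(row) for row in image)


# Made-up 4x4 "scan" with a single bright spot.
scan = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
heat = occlusion_heatmap(scan, toy_score)
for row in heat:
    print(["%.1f" % value for value in row])
```

Only the patch containing the bright spot lights up, which is the kind of visual cue a radiologist can check against the anatomy. Real explainability tools are considerably more elaborate, but the underlying question is the same: which part of the image did the prediction depend on?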

Trust must be earned. No doctor wants to gamble a patient’s life on a digital oracle they cannot question.

The Pandemic Catalyst

In 2020, as COVID-19 swept across the globe, hospitals teetered under a tsunami of patients. ICUs overflowed. Ventilators grew scarce. Doctors and nurses worked grueling shifts in protective gear, eyes red from exhaustion.

Into this chaos, AI emerged as an unexpected ally. Researchers developed AI models to detect COVID-19 in chest CT scans. Others used algorithms to predict which patients were likely to deteriorate, guiding triage and resource allocation.

At Mount Sinai Hospital in New York, an AI model helped flag COVID-19 cases in patients who arrived with atypical symptoms. Early detection meant faster isolation and treatment. In China, AI models sifted lung scans faster than overwhelmed radiologists, providing rapid diagnoses in hard-hit regions.

The pandemic accelerated adoption. Hospitals once cautious about AI suddenly embraced it. The crisis made clear: no human workforce could handle such a volume of data alone.

But the pandemic also exposed AI’s limitations. Models trained on one hospital’s data sometimes performed poorly in another. Variations in imaging protocols, population demographics, and disease prevalence could undermine AI’s accuracy. Robust validation became critical. A model built in Beijing might stumble in Boston.

The Art of Early Warnings

Imagine a patient whose vital signs remain stable—blood pressure, temperature, pulse. But deep within the electronic medical record, subtle patterns begin to shift. Slight increases in respiratory rate. Mild changes in lab results. A hint of infection brewing.

A human doctor might dismiss these signals as noise. But an AI system, trained on millions of patient records, recognizes the signature of impending sepsis. It sends an alert: High risk of sepsis within six hours.

Nurses intervene early. Antibiotics start. Fluids are given. The patient survives.

Early warning systems are among AI’s most powerful gifts. Sepsis, the body’s life-threatening overreaction to infection, kills millions each year. Time is everything: mortality rises with each hour of delayed treatment. Studies suggest AI can flag sepsis hours before human teams recognize it.

Similarly, AI predicts cardiac arrests, respiratory failure, even suicide risk. At Duke University, AI algorithms scan psychiatric records to identify patients at risk of self-harm, prompting proactive outreach.

These tools transform medicine from reactive to proactive. Instead of waiting for crises, clinicians can intervene while disease still simmers below the surface.
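The core idea of catching a drift while every reading still looks acceptable can be sketched in a few lines. This is a deliberately simple hand-written rule, not a learned model and not clinical guidance: real systems weigh many variables at once, and the readings and the `max_slope` threshold here are hypothetical.

```python
from typing import Sequence


def slope_per_hour(values: Sequence[float]) -> float:
    """Least-squares slope of evenly spaced hourly readings."""
    n = len(values)
    mean_t = (n - 1) / 2
    mean_v = sum(values) / n
    numerator = sum((t - mean_t) * (v - mean_v) for t, v in enumerate(values))
    denominator = sum((t - mean_t) ** 2 for t in range(n))
    return numerator / denominator


def trend_alert(values: Sequence[float], max_slope: float) -> bool:
    """Flag a steady upward drift, even if no single reading looks alarming."""
    return len(values) >= 3 and slope_per_hour(values) > max_slope


# Hypothetical hourly respiratory rates (breaths per minute).
resp_rate = [14, 15, 15, 17, 18, 19]
stable_rate = [16, 15, 16, 16, 15, 16]

print(trend_alert(resp_rate, max_slope=0.5))    # rising about one breath per hour
print(trend_alert(stable_rate, max_slope=0.5))  # flat, so no alert
```

A trained model replaces the single hand-picked threshold with patterns learned from past records, but the payoff is the same: the alert fires on the direction of travel, not on a crisis that has already arrived.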

Ethical Frontiers

Yet as AI grows more powerful, new ethical dilemmas emerge. Consider breast cancer screening. AI models sometimes detect lesions too small to ever become dangerous. Should doctors treat them, subjecting women to surgery and radiation, or watch and wait?

Overdiagnosis can harm patients psychologically and physically. False positives trigger anxiety, biopsies, and lifelong medicalization. A machine that sees too much can become a curse as well as a blessing.

Bias is another specter haunting AI. Algorithms trained on predominantly white populations may perform poorly on people of color. A skin cancer model might miss melanomas on dark skin if its training data lacked diversity.

Health inequities, already stark, risk becoming worse if AI inadvertently amplifies disparities. Researchers are now pushing for “algorithmic fairness”—ensuring models perform equally across demographics.
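One basic fairness check is to measure how often a model catches real disease in each group separately, rather than trusting a single overall score. The sketch below does this for sensitivity. The group labels and records are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, bool, bool]  # (group, has_disease, model_flagged)


def sensitivity_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Share of true disease cases the model flagged, computed per group."""
    caught = defaultdict(int)
    diseased = defaultdict(int)
    for group, has_disease, flagged in records:
        if has_disease:
            diseased[group] += 1
            if flagged:
                caught[group] += 1
    return {group: caught[group] / diseased[group] for group in diseased}


# Invented records: five diseased patients per group, plus a few healthy ones.
records = (
    [("A", True, True)] * 4 + [("A", True, False)] * 1 + [("A", False, False)] * 3
    + [("B", True, True)] * 2 + [("B", True, False)] * 3 + [("B", False, False)] * 3
)
rates = sensitivity_by_group(records)
print(rates)
```

In this made-up data the model finds four of five cancers in group A but only two of five in group B, a gap that an overall accuracy figure would hide. Sensitivity is only one lens; a fuller audit would also compare false positive rates and calibration across groups.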

Patient privacy looms large. Medical data is among the most sensitive information we possess. AI requires vast datasets, raising concerns about breaches and misuse.

The stakes are high. A world where machines guide diagnoses must tread carefully, balancing innovation with compassion, privacy, and justice.

Beyond Human Intuition

AI’s ultimate promise lies not just in matching human doctors but in transcending them. Machines can discover correlations too complex for the human mind.

At Memorial Sloan Kettering, researchers developed an AI model predicting how lung tumors would respond to immunotherapy. The algorithm found patterns invisible even to expert oncologists, offering patients a glimpse of personalized hope.

In cardiology, AI has identified patients with atrial fibrillation, a dangerous heart rhythm, from electrocardiograms that appear normal to human eyes.

Some researchers dream of “digital twins”—virtual replicas of patients built from genetic data, imaging, and clinical history. AI could simulate how a specific patient might respond to different drugs, helping doctors choose the safest, most effective therapy.

These visions remain nascent but breathtaking. Medicine may evolve from a field of averages to a realm of deeply personalized care.

A Partnership of Flesh and Silicon

Will AI replace doctors? The answer, echoed by nearly every expert, is no.

Human beings possess qualities no machine can replicate: empathy, intuition, moral judgment. A patient facing a cancer diagnosis doesn’t want to hear it from a screen. They want a doctor who sits beside them, meets their eyes, and says:

“I’m here. We’ll face this together.”

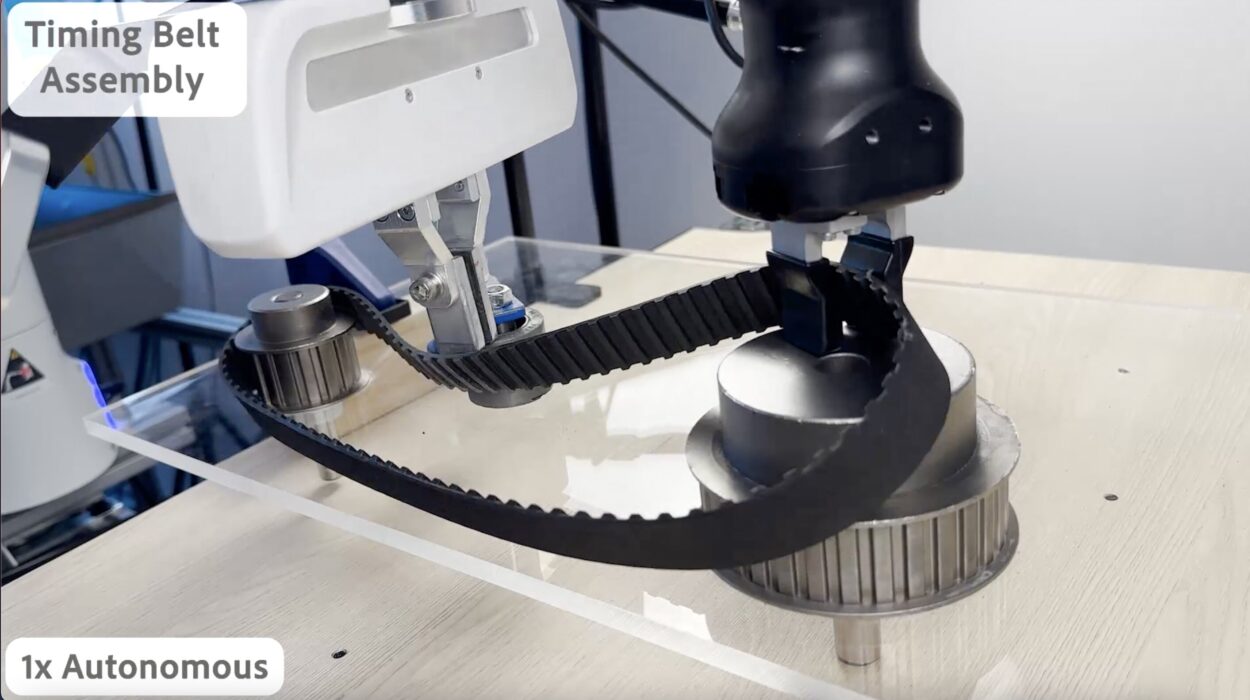

Yet doctors without AI may one day be as outdated as surgeons before anesthesia. Machines will not replace physicians—but physicians who use AI will replace those who do not.

The ideal future is not man versus machine, but man plus machine. A cardiologist with AI can diagnose heart disease earlier. An oncologist with AI can tailor cancer treatments more precisely. A primary care doctor with AI can identify hidden risks lurking in routine checkups.

Together, human compassion and machine precision form a partnership greater than either alone.

The Dawn of Hidden Diagnoses

Back in that quiet radiology suite, the woman whose lung scan raised the AI’s suspicion underwent a biopsy. The result confirmed early adenocarcinoma. Surgery removed the tiny tumor completely. She returned to her family, her life saved by a machine that saw what no human could.

This story, repeated in hospitals worldwide, hints at a revolution still unfolding. Across every specialty, diseases long hidden in shadows are being dragged into the light.

In this brave new world, AI’s greatest gift may be simple: giving doctors superhuman eyes. Eyes that never tire. Eyes that never blink. Eyes that see the future and whisper:

“Look again.”

And somewhere, in the dance between silicon and flesh, another life is saved—because a machine dared to see what a human could not.