In the early days of data analysis, most datasets were small enough to fit neatly into a few columns on a spreadsheet. A scientist might measure two variables — say, the temperature and pressure of a gas — and plot them easily on a two-dimensional graph. Patterns, trends, and correlations would leap off the page.

But as technology evolved, our ability to collect data exploded. A single MRI scan can produce hundreds of thousands of data points. A gene expression dataset may contain measurements for 20,000 different genes across a few hundred samples. A sensor array in an industrial machine might capture dozens of readings every second. Suddenly, instead of two or three columns, we have hundreds or thousands of variables for each observation.

This high-dimensional data carries the promise of deep insights, but it also hides a trap — a mathematical and conceptual challenge known as the curse of dimensionality.

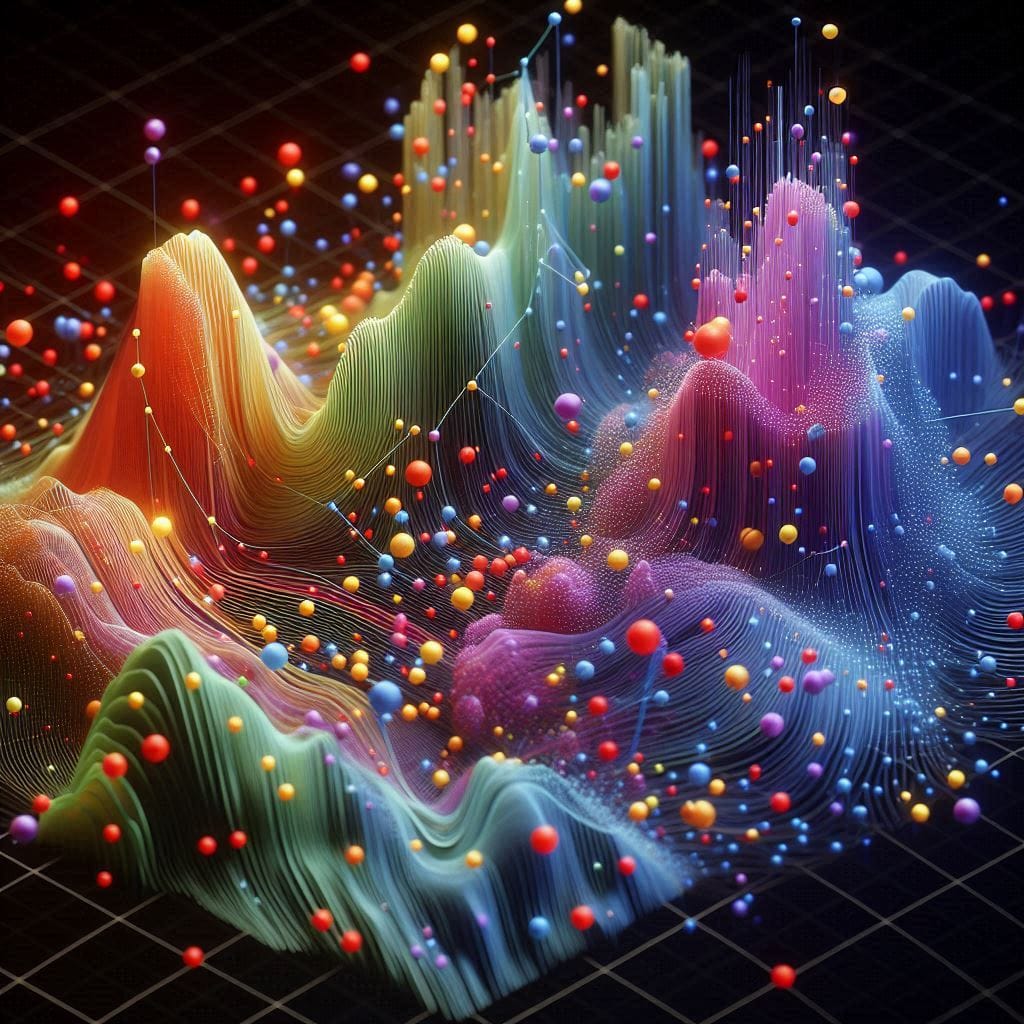

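The curse is not simply that the data is “big.” It is that our intuition, our statistical tools, and even our distance metrics begin to break down as dimensions grow. In high dimensions, distances tend to concentrate: a point’s nearest and farthest neighbors end up at nearly the same distance, so “close” and “far” lose much of their meaning. Points that look close together in one two-dimensional projection may be far apart in the full space. Patterns become buried in noise. Simple visualizations become impossible.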
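The breakdown of distance metrics can be seen in a quick numerical experiment. The sketch below assumes points drawn uniformly at random from a unit cube (synthetic data, not a real dataset). For one query point, it measures the gap between the farthest and nearest neighbor relative to the nearest distance. `relative_contrast` is a hypothetical helper name. As the dimension grows, this ratio tends to shrink toward zero, which is one concrete sense in which “near” and “far” stop being informative.

```python
import numpy as np


def relative_contrast(n_points: int, dim: int, seed: int = 0) -> float:
    """(max - min) / min distance from one random query to n random points in [0, 1]^dim."""
    rng = np.random.default_rng(seed)
    points = rng.random((n_points, dim))
    query = rng.random(dim)
    dists = np.linalg.norm(points - query, axis=1)  # Euclidean distances to the query
    return (dists.max() - dists.min()) / dists.min()


for dim in (2, 10, 100, 1000):
    print(f"dim={dim:5d}  relative contrast={relative_contrast(1000, dim):.3f}")
```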

This is where dimensionality reduction enters the stage. It is the art and science of transforming high-dimensional data into a lower-dimensional form (often just two or three dimensions when the goal is visualization) while preserving as much of the important structure as possible. Done well, it can turn a tangled knot of data into something that the human eye and mind can grasp.

Why Dimensionality Reduction Is More Than Compression

At first glance, dimensionality reduction might sound like compression — a way to take large data and make it smaller. And in a sense, it is. But it is far more profound than that.

Imagine you have a photograph of a sunset. You could reduce its file size by throwing away pixels or colors, but you might lose the essence of the image. Dimensionality reduction, done well, is like creating a painting of the sunset: fewer details, but the core emotion, the gradients, and the relationships between colors remain.

In data science, that “essence” might be the clusters of similar patients in a medical study, the separation between different handwritten digits, or the trends in a financial market. The goal is to keep the signal and lose the noise.

This is where three powerful techniques — Principal Component Analysis (PCA), t-Distributed Stochastic Neighbor Embedding (t-SNE), and Uniform Manifold Approximation and Projection (UMAP) — come into play. Each has its own philosophy, mathematical foundation, and strengths. Together, they form a kind of toolkit for navigating the high-dimensional landscape.

Principal Component Analysis: The Grandfather of Dimensionality Reduction

If dimensionality reduction were a family tree, PCA would be the wise elder: simple, elegant, and enduring. Born in the early 20th century from the work of Karl Pearson (1901) and later Harold Hotelling (1933), PCA has been a cornerstone of data analysis for generations.

The idea behind PCA is deceptively simple: find the directions in your data that capture the most variance — the most “spread.” These directions are called principal components.

Mathematically, PCA works by:

- Centering the data (subtracting the mean).

- Computing the covariance matrix, which measures how each pair of variables varies together.

- Finding the eigenvectors of this matrix (the principal components) and ordering them from largest to smallest corresponding eigenvalue, since each eigenvalue indicates how much variance its component explains.

If you imagine your data as a cloud of points in high-dimensional space, PCA finds the axes along which this cloud is widest. Projecting the data onto the first few of these axes gives you a lower-dimensional view that preserves as much variance as any linear projection to that many dimensions can.
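The steps above can be sketched in NumPy as follows. This is a minimal illustration that assumes a dense `(n_samples, n_features)` array small enough to form its covariance matrix. The function name `pca` and the synthetic three-feature data are hypothetical. Library implementations such as scikit-learn’s typically use a singular value decomposition instead, which yields the same components without building the covariance matrix.

```python
import numpy as np


def pca(X: np.ndarray, k: int):
    """Project X (n_samples x n_features) onto its top-k principal components."""
    # Step 1: center every feature at zero mean.
    X_centered = X - X.mean(axis=0)
    # Step 2: covariance matrix (n_features x n_features).
    cov = np.cov(X_centered, rowvar=False)
    # Step 3: eigendecomposition. eigh is meant for symmetric matrices and
    # returns eigenvalues in ascending order, so reverse to rank by variance.
    eigenvalues, eigenvectors = np.linalg.eigh(cov)
    order = np.argsort(eigenvalues)[::-1]
    eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]
    # Step 4: project onto the k directions of greatest variance.
    components = eigenvectors[:, :k]
    scores = X_centered @ components
    explained_ratio = eigenvalues[:k] / eigenvalues.sum()
    return scores, components, explained_ratio


# Synthetic example: three features, but nearly all of the spread lies along one line.
rng = np.random.default_rng(42)
t = rng.normal(size=500)
X = np.column_stack([t, 2 * t + 0.1 * rng.normal(size=500), 0.1 * rng.normal(size=500)])
scores, components, ratio = pca(X, k=1)
print(scores.shape, ratio)
```

Here almost all of the variance lies along the direction (1, 2, 0), so a single component should account for nearly all of it, and that component should point close to that direction.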

One of PCA’s beauties is its linearity. It is straightforward, fast, and interpretable. In fields like finance, genetics, and neuroscience, PCA is often the first tool applied to make sense of raw data.

But PCA has its limits. Because it is linear, it can miss patterns that lie along curved or twisted manifolds in high-dimensional space. It assumes that variance is the best measure of importance, which is not always true — sometimes, small-variance structures are crucial. And while PCA can reveal global structure, it may blur local relationships.
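The linearity limit shows up even in two dimensions. The sketch below uses synthetic data: points spaced evenly around a unit circle. This data is intrinsically one-dimensional, since a single angle locates every point, yet PCA finds two directions of exactly equal variance. Whatever single direction is kept, projecting onto it folds the circle in half and sends pairs of distinct points to the same coordinate.

```python
import numpy as np

# 200 evenly spaced points on the unit circle: one angle describes each point,
# so the data is intrinsically one-dimensional.
theta = np.linspace(0, 2 * np.pi, 200, endpoint=False)
X = np.column_stack([np.cos(theta), np.sin(theta)])

# Eigenvalues of the covariance matrix, largest first, as fractions of the total.
eigenvalues = np.linalg.eigvalsh(np.cov(X, rowvar=False))
ratio = eigenvalues[::-1] / eigenvalues.sum()
print(ratio)  # both directions explain about half of the variance
```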

The Leap to Non-Linear: Enter t-SNE

As datasets grew more complex, it became clear that linear tools like PCA could not always capture their intricacies. High-dimensional data often lives on non-linear manifolds, curved surfaces embedded in a higher-dimensional space. Think of a spiral drawn on a sheet of paper: it is really a one-dimensional curve, yet any straight-line projection onto a single axis stacks its arms on top of one another.

In 2008, Laurens van der Maaten and Geoffrey Hinton introduced t-Distributed Stochastic Neighbor Embedding (t-SNE), a non-linear method designed for visualizing high-dimensional data in two or three dimensions.

The philosophy of t-SNE is different from PCA. Instead of preserving variance, t-SNE aims to preserve local neighborhoods. If two points are close together in the original high-dimensional space, they should remain close in the low-dimensional projection.

It does this by:

- Converting distances between points in the original space into probabilities that reflect similarity, using a Gaussian centered on each point whose width is tuned to match a user-chosen perplexity.

- Doing the same in the low-dimensional space.

- Adjusting the low-dimensional points, typically by gradient descent, to minimize the Kullback–Leibler divergence between these two probability distributions.
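The three steps can be written out in a compact NumPy sketch of the t-SNE objective. At a high level it follows the standard formulation. First, Gaussian conditional probabilities are computed, with each point’s width tuned to a target perplexity, and then symmetrized into joint probabilities. Second, Student’s t similarities are computed in the low-dimensional map. Third, the KL divergence between the two is evaluated. All function names and the two-cluster synthetic data are hypothetical. Pairwise distances are computed densely, which is fine for a few dozen points but not for large datasets. The gradient-descent loop that actually moves the low-dimensional points is omitted, and real implementations add refinements such as early exaggeration and Barnes-Hut approximations.

```python
import numpy as np


def conditional_p(d2: np.ndarray, perplexity: float, tol: float = 1e-5, max_iter: int = 100) -> np.ndarray:
    """Gaussian similarities p_{j|i} of one point to every other point.

    `d2` holds squared distances to the other points (the point itself excluded).
    beta = 1 / (2 * sigma_i**2) is tuned by binary search so that the
    perplexity 2**H(P_i) matches the target.
    """
    target = np.log2(perplexity)
    beta, lo, hi = 1.0, 0.0, np.inf
    shifted = d2 - d2.min()  # a constant shift cancels after normalization; avoids underflow
    for _ in range(max_iter):
        p = np.exp(-shifted * beta)
        p /= p.sum()
        entropy = -np.sum(p * np.log2(p + 1e-12))
        if abs(entropy - target) < tol:
            break
        if entropy > target:  # too flat: narrow the Gaussian
            lo = beta
            beta = beta * 2 if np.isinf(hi) else (beta + hi) / 2
        else:  # too peaked: widen the Gaussian
            hi = beta
            beta = (beta + lo) / 2
    return p


def joint_p(X: np.ndarray, perplexity: float = 5.0) -> np.ndarray:
    """Symmetric high-dimensional affinities p_ij = (p_{j|i} + p_{i|j}) / (2n)."""
    n = X.shape[0]
    d2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    P = np.zeros((n, n))
    for i in range(n):
        others = np.arange(n) != i
        P[i, others] = conditional_p(d2[i, others], perplexity)
    return (P + P.T) / (2 * n)


def joint_q(Y: np.ndarray) -> np.ndarray:
    """Low-dimensional affinities from a Student's t kernel with one degree of freedom."""
    d2 = np.sum((Y[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    kernel = 1.0 / (1.0 + d2)
    np.fill_diagonal(kernel, 0.0)  # a point is not its own neighbor
    return kernel / kernel.sum()


def kl_divergence(P: np.ndarray, Q: np.ndarray) -> float:
    """KL(P || Q), the t-SNE cost; pairs with p_ij = 0 contribute nothing."""
    mask = P > 0
    return float(np.sum(P[mask] * np.log(P[mask] / Q[mask])))


# Synthetic data: two clusters of 15 points each in 10 dimensions.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (15, 10)), rng.normal(8.0, 1.0, (15, 10))])
P = joint_p(X, perplexity=5.0)

Y_random = rng.normal(size=(30, 2))  # an arbitrary layout
Y_clustered = X[:, :2]               # a layout that keeps the clusters apart
print("random layout cost:   ", kl_divergence(P, joint_q(Y_random)))
print("clustered layout cost:", kl_divergence(P, joint_q(Y_clustered)))
```

Comparing the two printed costs shows how the objective rates an arbitrary layout against one that keeps the two clusters apart. An optimizer would move the points of `Y` in whichever direction lowers the cost.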

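The “t” in t-SNE refers to the Student’s t-distribution (with one degree of freedom), which the algorithm uses in the low-dimensional space to handle the so-called “crowding problem”: a two- or three-dimensional map has too little room to keep moderately distant points apart, so they tend to bunch up when projected down.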
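A small worked example shows why the heavy tail matters. It compares an unnormalized Gaussian kernel (unit width, an assumption for illustration) with the unnormalized Student’s t kernel that t-SNE uses in the map. The t kernel decays far more slowly, so a pair must be placed much farther apart before its similarity drops to a given small value. That extra room lets moderately dissimilar points spread out instead of crowding together.

```python
import math


def gaussian_kernel(d: float) -> float:
    return math.exp(-d * d / 2)  # unnormalized Gaussian with sigma = 1


def student_t_kernel(d: float) -> float:
    return 1.0 / (1.0 + d * d)  # unnormalized Student's t with one degree of freedom


for d in (1.0, 3.0, 5.0):
    print(f"d={d}: gaussian={gaussian_kernel(d):.2e}  student_t={student_t_kernel(d):.2e}")

# Distance at which each kernel falls to a similarity of 0.01.
target = 0.01
d_gauss = math.sqrt(-2 * math.log(target))  # about 3.03
d_t = math.sqrt(1 / target - 1)             # about 9.95
print(d_gauss, d_t)
```

To reach the same small similarity, the t kernel needs roughly three times the distance the Gaussian does, which is the room that relieves crowding.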

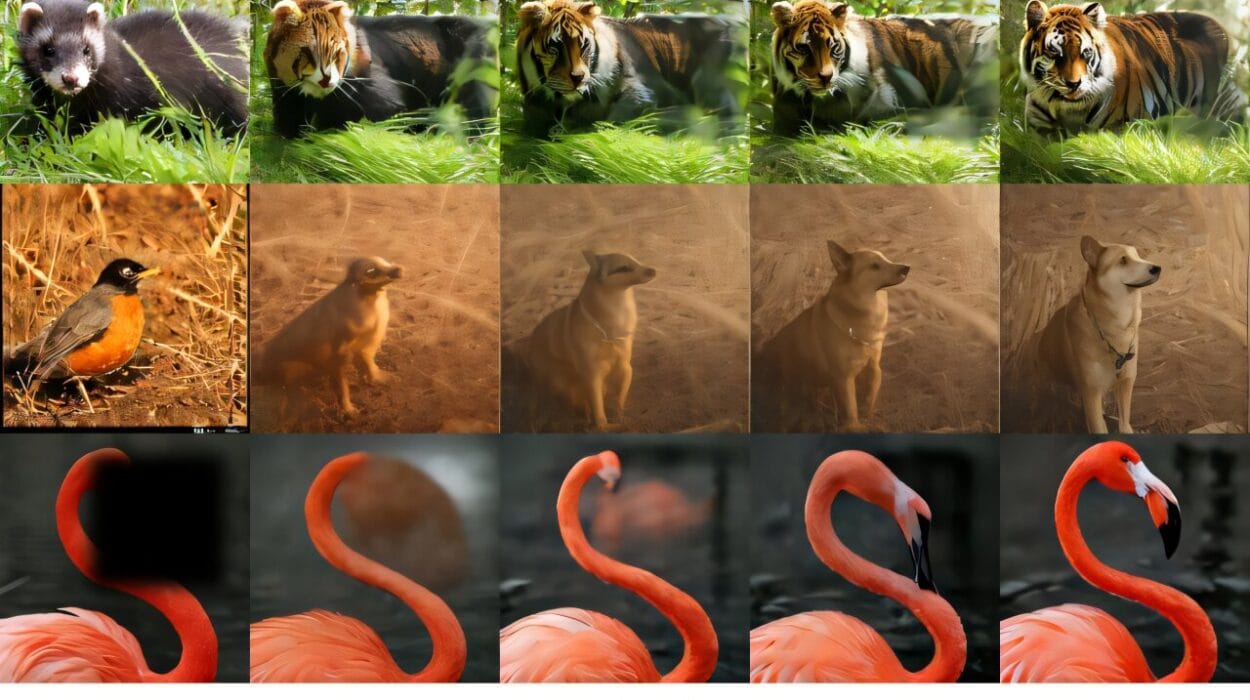

t-SNE produces maps that are often breathtakingly clear: clusters leap into view, revealing structure that may be invisible in the raw data. It has been transformative in areas like single-cell genomics, where complex cell types emerge as distinct islands on a t-SNE plot.

But t-SNE has quirks. It is computationally intensive, sensitive to hyperparameters like perplexity, and it does not preserve global distances well. A t-SNE map is best read as “these points are neighbors” rather than “these clusters are far apart.”
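Perplexity has a concrete meaning. t-SNE defines it as 2 raised to the Shannon entropy (in bits) of a point’s neighbor distribution, so it behaves like a smooth count of effective neighbors: a distribution spread evenly over k neighbors has perplexity exactly k. The helper below is a hypothetical illustration of that definition. The original paper describes typical values as lying between 5 and 50.

```python
import math


def perplexity(probs):
    """2 ** H(probs), with the Shannon entropy H measured in bits."""
    entropy = -sum(p * math.log2(p) for p in probs if p > 0)
    return 2 ** entropy


print(perplexity([0.25] * 4))                # 4.0: four equally likely neighbors
print(perplexity([0.97, 0.01, 0.01, 0.01]))  # about 1.18: effectively one neighbor
```

Raising the perplexity makes each point’s Gaussian wider, so more neighbors influence its placement. This is why the resulting maps can change noticeably with this setting.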

UMAP: The Newcomer with Big Ambitions

In 2018, Leland McInnes, John Healy, and James Melville introduced UMAP — Uniform Manifold Approximation and Projection. Like t-SNE, UMAP is non-linear and focuses on preserving local structure. But it is built on a more formal mathematical foundation in manifold theory and topology.

UMAP begins with the assumption that your high-dimensional data lies on a manifold — a shape that may be curved and folded but is locally flat. It uses concepts from Riemannian geometry and fuzzy simplicial sets to build a graph of the data’s local relationships, then optimizes a low-dimensional layout that preserves both local and some global structure.
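The graph-building stage can be sketched in NumPy. This is a simplified illustration of the construction described in the UMAP paper, not the `umap-learn` code. For each point, `rho` is the distance to its nearest neighbor, so every point is connected to at least one other with full strength. `sigma` is found by binary search so that the point’s k membership strengths sum to log2(k). The directed weights are then symmetrized with the fuzzy union a + b - ab. The function names and random test data are hypothetical. Nearest neighbors are found here by brute force, whereas the library uses approximate search, and the subsequent low-dimensional layout optimization is omitted.

```python
import numpy as np


def smooth_knn_sigma(knn_dists: np.ndarray, rho: float, n_iter: int = 64, tol: float = 1e-5) -> float:
    """Binary-search sigma so that one point's k membership strengths sum to log2(k) (k >= 3)."""
    k = len(knn_dists)
    target = np.log2(k)
    lo, hi, sigma = 0.0, np.inf, 1.0
    for _ in range(n_iter):
        total = np.sum(np.exp(-(knn_dists - rho) / sigma))
        if abs(total - target) < tol:
            break
        if total > target:  # memberships too strong: shrink sigma
            hi = sigma
            sigma = (lo + hi) / 2
        else:  # memberships too weak: grow sigma
            lo = sigma
            sigma = sigma * 2 if np.isinf(hi) else (lo + hi) / 2
    return sigma


def fuzzy_graph(X: np.ndarray, k: int = 5) -> np.ndarray:
    """Symmetric fuzzy k-nearest-neighbor graph with edge weights in [0, 1]."""
    n = X.shape[0]
    dist = np.sqrt(np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1))
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbor
    W = np.zeros((n, n))
    for i in range(n):
        nbrs = np.argsort(dist[i])[:k]
        d = dist[i, nbrs]
        rho = d[0]  # distance to the nearest neighbor (local connectivity)
        sigma = smooth_knn_sigma(d, rho)
        W[i, nbrs] = np.exp(-(d - rho) / sigma)
    return W + W.T - W * W.T  # fuzzy union (probabilistic t-conorm)


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 10))
G = fuzzy_graph(X, k=5)
print(G.shape, np.count_nonzero(G))
```

Because `rho` is subtracted before the exponential, each point’s edge to its nearest neighbor gets weight 1 regardless of how sparse its region is. This is one way the construction adapts to varying local density.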

UMAP has several advantages:

- It is typically faster than t-SNE, especially on large datasets.

- It often preserves more of the global structure.

- It scales well to millions of points.

In many applications, UMAP produces plots that resemble t-SNE’s in a fraction of the time. It has rapidly gained popularity in machine learning, bioinformatics, and beyond.

Choosing the Right Tool

PCA, t-SNE, and UMAP are not competitors so much as complementary tools. PCA is fast, interpretable, and ideal for initial exploration or when relationships are mostly linear. t-SNE excels at revealing local clusters in complex, high-dimensional data, especially when visualization is the goal. UMAP offers much of t-SNE’s power with greater speed and often better preservation of global structure.

A common workflow is to use PCA first to reduce very high dimensions (say, from 20,000 to 50), then apply t-SNE or UMAP for the final step to 2D or 3D visualization. This reduces noise and speeds up computation.
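The PCA step of this workflow is usually done with a thin singular value decomposition rather than a covariance matrix. With 20,000 features, the covariance matrix alone would have 400 million entries, whereas the SVD of a centered few-hundred-by-20,000 matrix is comparatively cheap. The sketch below assumes that setting, scaled down to 200 × 5,000 random values so it runs quickly. `pca_svd` is a hypothetical name. The resulting `Z` is what would then be handed to a t-SNE or UMAP implementation, such as scikit-learn’s `sklearn.manifold.TSNE` or the `umap-learn` package.

```python
import numpy as np


def pca_svd(X: np.ndarray, k: int) -> np.ndarray:
    """Top-k principal component scores of X (n_samples x n_features) via a thin SVD."""
    X_centered = X - X.mean(axis=0)
    # Thin SVD (here n_samples < n_features): U is n x n, S holds n values, Vt is n x n_features.
    U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    # Rows of Vt are the principal directions, already sorted by singular value.
    return X_centered @ Vt[:k].T  # equals U[:, :k] * S[:k]


# Scaled-down stand-in for a gene expression matrix: 200 samples x 5,000 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5_000))
Z = pca_svd(X, k=50)
print(Z.shape)  # (200, 50)
```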

Beyond Visualization: Dimensionality Reduction in Machine Learning

While visualization is the most visible application, dimensionality reduction also plays a crucial role in machine learning pipelines. Reducing the number of features can:

- Improve generalization by removing noise and irrelevant variables.

- Speed up training by reducing computational load.

- Help with interpretability by focusing on the most important components.

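In high-dimensional spaces, even simple models can overfit badly, because there are many directions in which to fit noise. Dimensionality reduction can act as a form of regularization: restricting a model to a few informative components leaves it less room to fit that noise.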
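When dimensionality reduction is used as a preprocessing step, the number of components has to be chosen somehow. One common rule of thumb, offered here as an assumption rather than something the text prescribes, is to keep the smallest number of principal components whose cumulative explained variance reaches a threshold such as 95%. Below is a minimal sketch with a hypothetical helper name and synthetic data in which three features carry nearly all of the variance.

```python
import numpy as np


def n_components_for_variance(X: np.ndarray, threshold: float = 0.95) -> int:
    """Smallest number of principal components whose cumulative explained variance reaches threshold."""
    X_centered = X - X.mean(axis=0)
    S = np.linalg.svd(X_centered, compute_uv=False)  # singular values, descending
    explained = S**2 / np.sum(S**2)                  # variance ratio per component
    cumulative = np.cumsum(explained)
    k = int(np.searchsorted(cumulative, threshold)) + 1
    return min(k, len(S))


# Synthetic data: 3 high-variance features plus 7 near-constant ones.
rng = np.random.default_rng(1)
scales = np.array([10, 10, 10] + [0.1] * 7)
X = rng.normal(size=(500, 10)) * scales
print(n_components_for_variance(X, 0.95))  # 3
```

The threshold is itself a tuning choice. Cross-validating the downstream model over a few values of k is a more direct test of whether the reduction actually helps generalization.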

The Human Element in Dimensionality Reduction

It is tempting to treat these algorithms as magic boxes: feed in high-dimensional data, get out a neat 2D plot. But every dimensionality reduction method carries biases — assumptions about what “structure” matters. The patterns you see are shaped by those assumptions and by the hyperparameters you choose.

And there is an art to interpreting the results. A t-SNE or UMAP plot can inspire joy when clusters emerge, but those clusters may or may not correspond to meaningful categories. Sometimes, the absence of structure is itself the truth.

At its best, dimensionality reduction is not just a computational trick but a form of translation — a way of turning the alien geometry of high dimensions into a language our brains can understand. Like any translation, it involves choices, compromises, and the possibility of distortion.

Looking Forward: The Future of Low-Dimensional Maps

As datasets grow ever larger and more complex, new methods are emerging that build on and extend the ideas of PCA, t-SNE, and UMAP. Some use deep learning, like autoencoders and variational autoencoders, to learn non-linear mappings that can reduce dimensionality while preserving key structures. Others explore hybrid methods that combine the speed of PCA with the flexibility of manifold learning.

But the core challenge remains: the universe of data is vast and high-dimensional, and our minds are small and low-dimensional. Bridging that gap will always require tools that are as much about human perception as about mathematics.