The internet has become the bloodstream of modern civilization. In its flow move our communications, finances, healthcare records, government secrets, and even the control systems of our infrastructure. But within this bloodstream lurk pathogens: malicious code, deceptive intrusions, and stealthy actors whose intentions range from theft to sabotage.

Unlike the wars of the past, the battle in cyberspace is rarely announced. It’s silent, invisible, and constant. You do not hear the footsteps of an attacker in the night; you see the breach only after the data is gone, or the damage is done. The complexity of modern cyber threats has surpassed the limits of purely human monitoring.

Into this hidden battlefield comes a new ally — Artificial Intelligence. With its ability to learn, adapt, and detect patterns at speeds no human analyst can match, AI is reshaping how we defend our digital world. Central to this revolution is machine learning, a branch of AI that thrives on patterns, anomalies, and prediction.

The question is not whether AI will be used in cybersecurity — it already is — but how far it can go in tipping the scales against cybercrime.

Why Human Defense Alone Is No Longer Enough

In the early days of computing, a cyber threat was often just a clever virus spread by floppy disk or email attachment. Security teams could rely on signature-based detection — keeping a database of known malicious patterns and blocking anything that matched. It was like checking a passport against a list of known criminals.
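To make the passport analogy concrete, here is a minimal sketch of signature matching. It assumes signatures are simply SHA-256 digests of known-bad files; real engines also match byte patterns and other indicators, and the payloads below are made-up placeholders.

```python
import hashlib

# Hypothetical "wanted list": digests of payloads already known to be malicious.
KNOWN_BAD_PAYLOADS = [b"evil-payload-v1", b"evil-payload-v2"]
SIGNATURES = {hashlib.sha256(p).hexdigest() for p in KNOWN_BAD_PAYLOADS}

def is_known_malware(content: bytes) -> bool:
    """Return True if the content's digest matches a stored signature."""
    return hashlib.sha256(content).hexdigest() in SIGNATURES

print(is_known_malware(b"evil-payload-v1"))   # True: exact copy of a known sample
print(is_known_malware(b"evil-payload-v1!"))  # False: one extra byte changes the digest
```

The second call hints at the weakness of this approach: any change to the file, however small, produces a digest the list has never seen.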

But today’s attackers have become protean. They adapt their code to evade detection, employ polymorphic malware that changes form each time it spreads, and use sophisticated phishing campaigns that mimic legitimate communication with near-perfect accuracy. Some attacks are automated, operating at machine speed to find weaknesses before humans even know they exist.

The scale of the problem has exploded. Large organizations can face millions of intrusion attempts every day, and a single corporate network can generate billions of log entries in the same period. No analyst, or even a large team of analysts, can sift through that much data in real time.

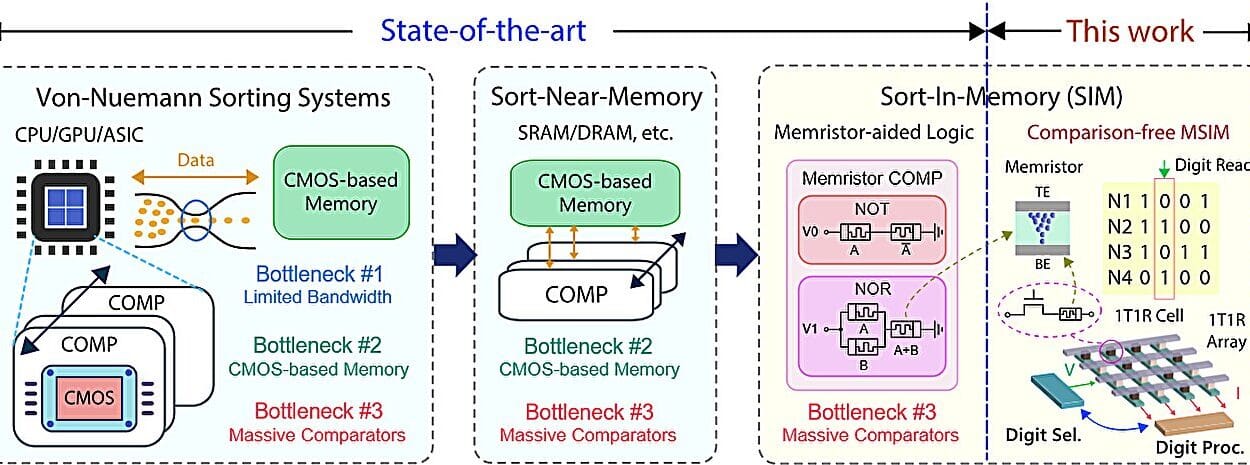

This is where machine learning becomes essential. Instead of relying solely on known signatures, it can learn what “normal” looks like for a network and detect even the faintest whisper of something unusual.

How Machine Learning Thinks Differently

At its core, machine learning is about patterns. You feed it historical data — logs of past network traffic, records of known attacks, benign user activity — and it learns the statistical relationships within that data. Then, when new data arrives, it compares it against what it has learned to flag anything that doesn’t fit.

In cybersecurity, this might mean training an algorithm to recognize normal login times for employees. If someone’s account suddenly logs in from another country at 3 a.m., the system can immediately raise a red flag. But it can also work at a deeper level — identifying strange sequences of commands, abnormal file access patterns, or subtle deviations in network packet structure.
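The login-time example can be sketched in a few lines. This is a deliberately simple illustration, not a production detector: it assumes the only feature is the hour of day, treats hours as plain numbers (ignoring that the clock wraps around midnight), and uses an arbitrary threshold of three standard deviations.

```python
from statistics import mean, stdev

def build_baseline(login_hours):
    """Learn what 'normal' looks like: the mean and spread of past login hours."""
    return mean(login_hours), stdev(login_hours)

def is_anomalous(hour, baseline, threshold=3.0):
    """Flag a login whose hour lies more than `threshold` deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return hour != mu
    return abs(hour - mu) / sigma > threshold

# Hypothetical history: an employee who logs in between 8 and 10 a.m.
history = [9, 9, 10, 8, 9, 10, 9, 8, 10, 9]
baseline = build_baseline(history)

print(is_anomalous(9, baseline))  # False: a typical morning login
print(is_anomalous(3, baseline))  # True: the 3 a.m. login stands out
```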

Machine learning models can be supervised (trained on labeled examples of “attack” and “not attack”) or unsupervised (finding anomalies without needing explicit labels). Both have their place. Supervised learning can be razor-sharp in spotting known attack behaviors, while unsupervised learning can uncover entirely new threat types that have never been cataloged before.
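The difference between the two approaches is easier to see side by side. The sketch below is a toy, using two invented features per event (failed logins per minute and distinct ports contacted), a nearest-centroid classifier for the supervised case, and a simple distance-from-normal check for the unsupervised case. Real systems use far richer features and models.

```python
from math import dist
from statistics import mean

def centroid(points):
    """Average each feature across a list of equally sized tuples."""
    return tuple(mean(axis) for axis in zip(*points))

# Supervised: we are told which past events were attacks.
labeled = {
    "benign": [(2, 1), (3, 1), (2, 2)],
    "attack": [(40, 9), (35, 8), (45, 10)],
}
centroids = {label: centroid(points) for label, points in labeled.items()}

def classify(event):
    """Assign the label whose centroid is closest to the event."""
    return min(centroids, key=lambda label: dist(event, centroids[label]))

# Unsupervised: no labels, only a pile of (mostly normal) traffic.
unlabeled = [(2, 1), (3, 1), (2, 2), (3, 2), (2, 1), (3, 3)]
center = centroid(unlabeled)
radius = max(dist(p, center) for p in unlabeled)

def is_outlier(event, slack=2.0):
    """Flag events that sit far outside the region the data normally occupies."""
    return dist(event, center) > slack * radius

print(classify((38, 9)))    # attack: resembles the labeled attack examples
print(is_outlier((0, 30)))  # True: unlike any labeled attack, yet clearly unusual
```

The last line shows why both have their place: the event `(0, 30)` looks nothing like the cataloged attacks, but the unsupervised check still notices that it does not belong.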

The strength lies in speed and scale. A well-trained model can process thousands of events per second. And unlike static rule-based systems, it can evolve: retrained on fresh data and newly observed attacks, it adapts to guard against tomorrow’s variants.

The Dance Between Attacker and Defender

Cybersecurity has always been an arms race. As defenders grow smarter, so do attackers. Machine learning shifts the battleground by giving defenders the ability to predict rather than just react.

Take phishing, for example. Traditional systems might block emails containing known malicious links or suspicious domains. A machine learning model, however, can analyze the subtle linguistic patterns of the email’s text, the timing of the send, the sender’s historical behavior, and even image metadata to determine if it is fraudulent — even if it’s the first time that particular scam has ever been seen.
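As a rough illustration of the linguistic part of that analysis, here is a tiny naive Bayes text classifier written from scratch. It looks only at word frequencies; send timing, sender history, and image metadata would enter as additional features in a fuller model. The six training emails are invented for the example.

```python
from collections import Counter
from math import log

def tokenize(text):
    return text.lower().split()

class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of words
        self.doc_counts = Counter()  # label -> number of training emails
        self.vocab = set()

    def fit(self, samples):
        for text, label in samples:
            self.doc_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for word in tokenize(text):
                counts[word] += 1
                self.vocab.add(word)

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = log(self.doc_counts[label] / total_docs)
            for word in tokenize(text):
                # Smoothing keeps unseen words from zeroing out the score.
                score += log((counts[word] + 1) / (total_words + len(self.vocab)))
            scores[label] = score
        return max(scores, key=scores.get)

training = [
    ("urgent verify your account password now", "phishing"),
    ("your account is suspended click to verify", "phishing"),
    ("confirm password immediately or lose access", "phishing"),
    ("meeting notes attached for tomorrow", "legitimate"),
    ("lunch on friday with the team", "legitimate"),
    ("quarterly report draft attached for review", "legitimate"),
]
model = NaiveBayes()
model.fit(training)

# Neither message appears in the training set.
print(model.predict("please verify your password immediately"))  # phishing
print(model.predict("draft notes for the team meeting"))         # legitimate
```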

But here’s the twist: attackers can also use machine learning to their advantage. They can craft spear-phishing campaigns tailored to individual targets by analyzing their social media activity, or develop malware that learns to evade AI-based detection. This “AI versus AI” scenario is already emerging.

The result is a dynamic duel — each side constantly probing, adapting, and counter-adapting. For defenders, the challenge is to ensure their algorithms learn faster and smarter than those of their adversaries.

When the Machines See What We Cannot

One of the most transformative aspects of AI in cybersecurity is its ability to see in dimensions humans cannot. A human analyst might spot a suspicious IP address or a strange file name, but an AI can detect faint statistical correlations buried in terabytes of network data — patterns so subtle they are invisible without mathematical analysis.

For example, a distributed denial-of-service (DDoS) attack might start with just a few unusual requests to a server, scattered over hours, before it escalates into a flood. To a human, those early requests look like background noise. To a trained machine learning model, they might light up as the early tremors of a larger quake.
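One simple way to listen for those early tremors is to compare each interval's request count against a slowly moving baseline. The sketch below uses an exponentially weighted moving average; the smoothing factor, the alert multiplier, and the traffic numbers are all illustrative assumptions, and a real detector would look at much more than raw volume.

```python
def ewma_alerts(counts, alpha=0.2, factor=3.0, warmup=5):
    """Return the indexes of intervals whose count exceeds factor x the baseline.

    The baseline is an exponentially weighted moving average of past counts.
    Intervals that trigger an alert are not folded into the baseline, so an
    attack in progress cannot quietly become the new normal.
    """
    baseline = counts[0]
    alerts = []
    for i, count in enumerate(counts[1:], start=1):
        if i >= warmup and count > factor * baseline:
            alerts.append(i)
        else:
            baseline = alpha * count + (1 - alpha) * baseline
    return alerts

# Hypothetical requests per minute: steady traffic, then a ramp toward a flood.
requests_per_minute = [10, 12, 11, 9, 10, 11, 10, 45, 60, 200]
print(ewma_alerts(requests_per_minute))  # [7, 8, 9]
```

The alert fires at index 7, when traffic is only about four times normal, well before the flood at index 9.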

Similarly, insider threats — employees or contractors who misuse their access — are notoriously hard to catch. AI can quietly learn the normal behaviors of each user and spot when someone begins to deviate, even if they’re trying to mask their actions.
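A per-user baseline can be as simple as remembering which resources each person normally touches. The following sketch assumes access logs reduce to (user, resource) pairs and scores a session by the share of resources the user has never accessed before; the user and resource names are hypothetical.

```python
from collections import defaultdict

class UserBaseline:
    """Remember which resources each user normally accesses."""

    def __init__(self):
        self.seen = defaultdict(set)

    def learn(self, user, resource):
        self.seen[user].add(resource)

    def novelty(self, user, resources):
        """Fraction of the given resources this user has never accessed before."""
        if not resources:
            return 0.0
        known = self.seen.get(user, set())
        unfamiliar = [r for r in resources if r not in known]
        return len(unfamiliar) / len(resources)

baseline = UserBaseline()
for resource in ["crm", "wiki", "email"]:
    baseline.learn("alice", resource)

print(baseline.novelty("alice", ["crm", "wiki"]))  # 0.0
print(baseline.novelty("alice", ["crm", "payroll_db", "hr_records", "source_repo"]))  # 0.75
```

A high novelty score is not proof of wrongdoing (people change roles and projects), so a score like this is better treated as a prompt for review than as a verdict.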

This level of vigilance operates continuously, without fatigue, across all hours of the day. It doesn’t replace human analysts, but it extends their reach into realms they could never monitor alone.

The Emotional Stakes of Digital Protection

It’s easy to talk about cybersecurity in abstract terms: firewalls, packets, protocols. But behind every breach is a human cost. The ransomware attack that locks up a hospital’s systems can delay surgeries and put lives at risk. The data breach at a bank can drain the savings of thousands of families. A government system compromised by a foreign power can undermine national security and public trust.

AI in cybersecurity is not just a technical evolution; it’s a moral one. It’s about using our most advanced tools to protect the vulnerable, to shield personal dignity, to ensure that the digital future is not ruled by fear.

The emotional dimension of this work is often underappreciated. Analysts who respond to threats know they’re racing against not just machines, but human malice. For them, AI is more than an efficiency boost — it’s a partner that helps carry an unbearable load.

The Human-Machine Partnership

AI does not replace the human mind in cybersecurity. It augments it. Machines excel at scanning vast oceans of data, but humans excel at understanding context, making ethical judgments, and responding creatively to unexpected situations.

In practice, this means AI handles the first line of defense — detecting anomalies, prioritizing alerts, filtering noise — while human analysts investigate the most critical cases. The synergy reduces burnout, allowing humans to focus on strategy and complex incident response.
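The division of labor described here might look something like the sketch below. It assumes each alert carries a model-assigned anomaly score and a hand-assigned criticality rating for the affected asset; both scales, the noise floor, and the alert names are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    name: str
    anomaly_score: float    # 0.0 to 1.0, assumed to come from a detection model
    asset_criticality: int  # 1 (low) to 5 (high), assumed to be set by the team

def triage(alerts, noise_floor=0.3, top_n=2):
    """Drop low-score noise, then rank what remains by score x criticality."""
    kept = [a for a in alerts if a.anomaly_score >= noise_floor]
    ranked = sorted(
        kept,
        key=lambda a: a.anomaly_score * a.asset_criticality,
        reverse=True,
    )
    return ranked[:top_n]

alerts = [
    Alert("printer ping", 0.1, 1),
    Alert("database export at night", 0.8, 5),
    Alert("new admin account", 0.6, 4),
    Alert("odd user agent", 0.4, 1),
]

# Only the top-ranked alerts reach a human analyst.
for alert in triage(alerts):
    print(alert.name)
```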

This partnership also demands trust. An AI that generates constant false alarms will be ignored, just as a guard dog that barks at every passing leaf becomes background noise. Training, calibration, and feedback loops between humans and machines are essential to maintain credibility.
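Calibration can be made measurable. One common approach is to take alerts that analysts have already reviewed and ask how precision (how often an alert was real) and recall (how many real threats were caught) change as the alert threshold moves. The scores and verdicts below are made up for the example.

```python
def precision_recall(scored, threshold):
    """Compute precision and recall if we alert on every score >= threshold.

    `scored` is a list of (model_score, was_real_threat) pairs, where the
    second value comes from analyst feedback.
    """
    tp = sum(1 for score, real in scored if score >= threshold and real)
    fp = sum(1 for score, real in scored if score >= threshold and not real)
    fn = sum(1 for score, real in scored if score < threshold and real)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall

# Hypothetical analyst feedback on eight past alerts.
feedback = [
    (0.95, True), (0.90, True), (0.70, False), (0.65, True),
    (0.40, False), (0.30, False), (0.20, False), (0.10, False),
]

for threshold in (0.25, 0.6, 0.8):
    precision, recall = precision_recall(feedback, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

In this toy data a low threshold catches everything but half the alerts are false alarms, while a high threshold is never wrong but misses a real threat. Choosing where to sit on that curve is the calibration the paragraph above calls for.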

The Future Battlefield

The next decade will see AI in cybersecurity evolve beyond detection into autonomous response. Already, experimental systems can not only spot an intrusion but take immediate action — isolating affected devices, blocking suspicious IPs, or rolling back changes to prevent damage.

In theory, a fully autonomous defense could operate like an immune system, detecting and neutralizing threats before they cause harm, without human intervention. But such power comes with risks. An overzealous AI might shut down critical systems based on a false positive, causing more harm than the attack it was trying to stop. Balancing speed with caution will be the central challenge.
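One way to balance speed with caution is to let the system act alone only when it is highly confident and the affected asset is not critical, and to hand everything else to a person. The sketch below shows that policy; the thresholds, action names, and asset names are hypothetical.

```python
# Hypothetical assets that must never be isolated without human sign-off.
CRITICAL_ASSETS = {"icu-monitor-01", "scada-gateway"}

def decide_response(asset, confidence, auto_threshold=0.9):
    """Choose a response based on detection confidence and asset criticality."""
    if confidence < 0.5:
        return "log_only"           # too uncertain to act on
    if asset in CRITICAL_ASSETS or confidence < auto_threshold:
        return "escalate_to_human"  # a false positive here would be costly
    return "isolate_device"         # confident detection on a non-critical asset

print(decide_response("laptop-17", 0.97))       # isolate_device
print(decide_response("icu-monitor-01", 0.97))  # escalate_to_human
print(decide_response("laptop-17", 0.70))       # escalate_to_human
print(decide_response("laptop-17", 0.20))       # log_only
```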

Another frontier is adversarial machine learning — techniques where attackers feed subtle, maliciously crafted data to AI systems to trick them into misclassifying inputs. Defending against these AI-specific attacks will require entirely new layers of cybersecurity.
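A toy example shows how little it can take. Assume a detector that is just a linear score over three numeric features, with a positive score meaning "malicious". An attacker who knows (or can estimate) the weights nudges each feature slightly in the direction that lowers the score, similar in spirit to gradient-sign attacks on larger models. The weights and feature values are invented.

```python
def score(weights, bias, features):
    """Linear detector: a positive score is classified as malicious."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def evade(weights, features, step=0.1):
    """Shift every feature by `step` against the sign of its weight."""
    def sign(w):
        return 1 if w > 0 else -1 if w < 0 else 0
    return [x - step * sign(w) for w, x in zip(weights, features)]

weights = [2.0, -1.0, 1.5]  # hypothetical learned weights
bias = -1.0
sample = [0.6, 0.4, 0.3]    # hypothetical malicious sample

perturbed = evade(weights, sample)

print(score(weights, bias, sample) > 0)     # True: original is flagged
print(score(weights, bias, perturbed) > 0)  # False: small changes flip the verdict
```

Each feature moved by only 0.1, yet the classification reversed. Defenses such as training on perturbed examples aim to make the decision boundary harder to cross this cheaply.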

Meanwhile, the rise of quantum computing threatens to upend many of the public-key encryption schemes in use today. AI will likely play a role in deploying and managing the post-quantum cryptography needed to safeguard data in this new era.

A Moral Compass in the Age of Intelligent Defense

As AI becomes more embedded in cybersecurity, ethical considerations multiply. How much monitoring of user behavior is acceptable in the name of security? How do we ensure AI systems are not biased, targeting certain groups unfairly? And who is accountable if an AI’s decision causes harm?

The answers to these questions will shape not only the technical design of AI systems, but the trust society places in them. Transparent algorithms, clear oversight, and the integration of diverse perspectives in AI development will be critical in ensuring that security does not come at the cost of freedom.

The Light in the Network’s Shadows

Machine learning in cybersecurity is not a magic wand. It will not end cybercrime overnight, nor will it make networks invulnerable. But it is a transformative force — one that shifts the balance toward defenders in a game that has too often favored attackers.

The story of AI in cybersecurity is, at its heart, a story of resilience. It’s about harnessing our most advanced technologies not to control people, but to protect them. It’s about watching the endless streams of data, the flickering pulses of the network, and finding in that noise the signal that says: something is wrong, and we can stop it before it’s too late.

Every time an AI model catches a breach before it spreads, it safeguards more than data. It protects the trust on which our digital society is built. In an age where that trust is constantly under siege, machine learning stands as both sentinel and shield.

And perhaps that is the most important victory of all — not merely detecting threats, but preserving the fragile, vital connection between technology and the people it serves.