Behind every AI system that predicts, recommends, diagnoses, or detects lies a quiet, invisible pulse — the act of evaluation. It’s the heartbeat that determines whether a model is truly intelligent or merely clever in a narrow, brittle way. While the public marvels at AI’s visible feats — a chatbot’s witty banter, a camera’s ability to spot a face, a system’s uncanny prediction of what song you’ll like next — scientists, engineers, and ethicists are watching something far less glamorous but infinitely more important: the metrics.

An AI model without rigorous evaluation is like a bridge built without stress-testing its supports. It may look sturdy, even elegant, but the first strong wind could send it into catastrophe. Evaluation is where science meets responsibility, where precision meets ethics. The stakes are profound: in medicine, a faulty AI could misdiagnose a patient; in criminal justice, it could perpetuate bias; in finance, it could ruin lives with a flawed credit score.

The metrics we choose to evaluate an AI model are not neutral. They shape not only the model’s performance but also the social impact it will have once it steps into the real world. That’s why accuracy, while essential, is never enough — fairness must be woven into the very fabric of evaluation.

Accuracy: The First Mirror an AI Faces

When a newborn model first emerges from the training process, its creators need a way to look it in the eye and ask: “Did you learn?” Accuracy, in its simplest form, answers that question by measuring the fraction of all predictions that are correct. For a binary classifier, such as a spam filter, it’s straightforward: if the model labels 90 out of 100 emails correctly, it has 90% accuracy.
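As a minimal sketch, the calculation amounts to comparing each prediction with its label and dividing the matches by the total. The `accuracy` helper and the spam-filter labels below are hypothetical, made up for illustration:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical spam-filter labels: 1 = spam, 0 = not spam (40 spam, 60 legitimate).
y_true = [1] * 40 + [0] * 60
# The model gets the first 90 emails right and flips the label on the last 10.
y_pred = y_true[:90] + [1 - label for label in y_true[90:]]

print(accuracy(y_true, y_pred))  # 0.9
```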

But accuracy, for all its apparent clarity, is a seductive and sometimes misleading mirror. It reflects only the average correctness, hiding the terrain beneath the surface. Imagine a medical AI that diagnoses a rare disease that affects 1% of the population. If the AI simply predicts “no disease” for everyone, it will have 99% accuracy — and yet, it will have failed every single sick patient.
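The rare-disease trap is easy to reproduce. This sketch assumes a hypothetical screening set of 1,000 patients with a 1% prevalence and a degenerate "model" that always answers "no disease":

```python
# Hypothetical screening set: 1,000 patients, 1% of whom (10) have the disease.
y_true = [1] * 10 + [0] * 990

# A degenerate "model" that predicts "no disease" (0) for every patient.
y_pred = [0] * len(y_true)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
sick_total = sum(y_true)
sick_caught = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)

print(f"accuracy = {accuracy:.2f}")                             # accuracy = 0.99
print(f"sick patients caught = {sick_caught} of {sick_total}")  # sick patients caught = 0 of 10
```

The headline number looks excellent only because the healthy majority dominates the denominator.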

That’s why accuracy is the first glance, not the full picture. In the early stages of model evaluation, it tells us whether the model has learned anything at all, but not whether it has learned what we truly need it to know.

Precision and Recall: The Twin Lenses of Truth

In most real-world scenarios, the cost of a wrong prediction is not the same for every mistake. A false alarm can be irritating but harmless in one context, devastating in another. A missed detection can be catastrophic in one case, negligible in another. This is where precision and recall step into the light.

Precision measures how often the model’s positive predictions are actually correct. Recall, on the other hand, measures what fraction of the actually positive cases the model successfully catches. They are like two lenses that together bring the truth into focus.
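In terms of the confusion matrix, precision is TP / (TP + FP) and recall is TP / (TP + FN), where TP, FP, and FN count true positives, false positives, and false negatives. A minimal sketch, using a hypothetical `precision_recall` helper and invented labels:

```python
def precision_recall(y_true, y_pred, positive=1):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN).

    Returns 0.0 for a ratio whose denominator is zero (a common convention).
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    fn = sum(1 for t, p in pairs if p != positive and t == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical labels: 10 actual positives, 10 actual negatives.
# The model flags 8 cases: 6 real positives and 2 negatives.
y_true = [1] * 10 + [0] * 10
y_pred = [1] * 6 + [0] * 4 + [1] * 2 + [0] * 8
print(precision_recall(y_true, y_pred))  # (0.75, 0.6)
```

Six of the eight flags are right (precision 0.75), but only six of the ten positives were found (recall 0.6).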

Consider an AI system screening for cancer in mammograms. High precision means that when the AI says “cancer,” it is usually right, sparing patients the trauma of unnecessary biopsies. High recall means that the AI catches almost every cancer case, ensuring that patients are not missed. But pushing for perfect recall often sacrifices precision — the AI may start flagging healthy patients just to make sure it catches every sick one.
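The trade-off usually comes from the decision threshold applied to a model's score. The sketch below sweeps a threshold over ten hypothetical screening scores (both the scores and the labels are invented for illustration) and shows precision falling as the threshold is lowered to chase recall:

```python
def precision_recall_at(scores, y_true, threshold):
    """Precision and recall when every score >= threshold is flagged positive."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical screening scores (higher = more suspicious) and true labels.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
y_true = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]

for threshold in (0.85, 0.50, 0.15):
    p, r = precision_recall_at(scores, y_true, threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
# threshold=0.85  precision=1.00  recall=0.50
# threshold=0.50  precision=0.67  recall=1.00
# threshold=0.15  precision=0.44  recall=1.00
```

Once every positive is already caught, lowering the threshold further only adds false alarms.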

The balance between precision and recall is more than mathematics; it is a moral decision. It forces us to confront the human cost of errors, to choose which type of mistake we are more willing to risk.

The F1 Score: Harmony in Trade-Offs

Because precision and recall often pull in opposite directions, we need a way to measure their harmony. The F1 score does exactly that. By taking the harmonic mean of precision and recall, it rewards models that achieve balance. A model that has perfect recall but terrible precision will have a low F1 score, just as one with perfect precision but poor recall will.
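The harmonic mean is F1 = 2PR / (P + R) for precision P and recall R. Unlike the ordinary average, it is dragged toward the smaller of the two values. A small sketch with hypothetical (precision, recall) pairs makes the difference visible:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical models described by (precision, recall).
models = {
    "balanced": (0.80, 0.80),
    "perfect recall, poor precision": (0.10, 1.00),
    "perfect precision, poor recall": (1.00, 0.10),
}
for name, (p, r) in models.items():
    print(f"{name}: F1 = {f1_score(p, r):.3f}, arithmetic mean = {(p + r) / 2:.3f}")
# balanced: F1 = 0.800, arithmetic mean = 0.800
# perfect recall, poor precision: F1 = 0.182, arithmetic mean = 0.550
# perfect precision, poor recall: F1 = 0.182, arithmetic mean = 0.550
```

A plain average would rate the lopsided models as middling; F1 marks them down sharply.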

In domains like information retrieval, fraud detection, and medical diagnosis, the F1 score becomes a compass, guiding researchers toward models that are both alert and careful, neither missing too much nor crying wolf too often.

But even the F1 score, for all its elegance, lives within a universe of numbers that only tell part of the story. Models can have beautiful metrics and still fail us in deeper, subtler ways.

Beyond Numbers: The Tyranny of Averages

Metrics like accuracy, precision, recall, and F1 are aggregates. They summarize performance over an entire dataset. But averages can lie. If a facial recognition model has 95% accuracy overall but only 70% accuracy for darker-skinned women, that average hides a dangerous inequity.

In such cases, subgroup analysis becomes essential. We must look at performance broken down by race, gender, age, language, geography — whatever dimensions are relevant to the domain. A model that works beautifully for the majority but fails a vulnerable minority is not a good model. In fact, it can be worse than having no model at all, because it cloaks discrimination in the language of objectivity.
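A minimal sketch of subgroup analysis splits the same accuracy computation by a group label. The group names "A" and "B" and the error pattern below are hypothetical:

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical evaluation set: 90 examples from majority group A, 10 from minority group B.
groups = ["A"] * 90 + ["B"] * 10
y_true = [i % 2 for i in range(100)]
y_pred = list(y_true)
for i in (0, 1, 90, 91, 92, 93):  # inject 2 errors in group A and 4 in group B
    y_pred[i] = 1 - y_pred[i]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(f"overall: {overall:.2f}")  # overall: 0.94
for g, acc in sorted(accuracy_by_group(y_true, y_pred, groups).items()):
    print(f"group {g}: {acc:.2f}")
# group A: 0.98
# group B: 0.60
```

The 94% headline is almost entirely a statement about group A.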

This is where fairness metrics come into the evaluation landscape.

Fairness: The Ethical Horizon of Evaluation

Fairness in AI is not a single number; it is a family of concepts, each born from different philosophical traditions and social concerns. Demographic parity demands that positive predictions (approvals, flags, referrals) occur at the same rate across groups. Equalized odds insists that error rates, both the false positive rate and the false negative rate, be the same for all groups. Predictive parity requires that a positive prediction be equally likely to be correct, that is, carry the same precision, regardless of who receives it.
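Each criterion compares a different per-group rate. Demographic parity compares the selection rate, equalized odds compares the true and false positive rates, and predictive parity compares precision (positive predictive value, PPV). The sketch below computes all four rates per group from hypothetical confusion-matrix counts. The helper names `group_rates` and `expand` are invented for illustration, and reporting the max-minus-min "gap" is only one of several summaries in use (ratios are also common):

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group rates behind three common fairness criteria (binary 0/1 labels)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        if p == 1:
            counts[g]["tp" if t == 1 else "fp"] += 1
        else:
            counts[g]["fn" if t == 1 else "tn"] += 1

    def ratio(num, den):
        return num / den if den else float("nan")

    rates = {}
    for g, c in counts.items():
        n = sum(c.values())
        rates[g] = {
            "selection_rate": ratio(c["tp"] + c["fp"], n),  # demographic parity
            "tpr": ratio(c["tp"], c["tp"] + c["fn"]),       # equalized odds (1 - FNR)
            "fpr": ratio(c["fp"], c["fp"] + c["tn"]),       # equalized odds
            "ppv": ratio(c["tp"], c["tp"] + c["fp"]),       # predictive parity
        }
    return rates


def expand(group, tp, fp, fn, tn):
    """Build (label, prediction, group) rows from confusion-matrix counts."""
    rows = [(1, 1)] * tp + [(0, 1)] * fp + [(1, 0)] * fn + [(0, 0)] * tn
    return [(t, p, group) for t, p in rows]


# Hypothetical data: group A has a higher base rate of positives than group B.
rows = expand("A", tp=4, fp=1, fn=1, tn=4) + expand("B", tp=1, fp=1, fn=1, tn=7)
y_true, y_pred, groups = zip(*rows)

rates = group_rates(y_true, y_pred, groups)
for metric in ("selection_rate", "tpr", "fpr", "ppv"):
    values = {g: round(r[metric], 3) for g, r in sorted(rates.items())}
    gap = max(values.values()) - min(values.values())
    print(f"{metric}: {values} gap={gap:.3f}")
```

In this toy data every criterion is violated to some degree, which is typical when base rates differ.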

Each of these fairness definitions has trade-offs. When groups have different base rates of the outcome and the model is imperfect, several of these criteria cannot all be satisfied at once, a result established by well-known impossibility theorems. Choosing which fairness criterion to prioritize is not a purely technical choice; it is a societal one, tied to values, context, and historical injustices.
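One way to see the conflict is an identity that follows directly from the definitions. Take a group of $N$ cases with base rate $p$ (the share that is truly positive), false negative rate FNR, false positive rate FPR, and positive predictive value PPV (precision). Then:

```latex
\begin{aligned}
\mathrm{TP} &= (1-\mathrm{FNR})\,pN,
\qquad
\mathrm{FP} = \mathrm{TP}\cdot\frac{1-\mathrm{PPV}}{\mathrm{PPV}}, \\[4pt]
\mathrm{FPR} &= \frac{\mathrm{FP}}{(1-p)\,N}
  = \frac{p}{1-p}\cdot\frac{1-\mathrm{PPV}}{\mathrm{PPV}}\cdot\bigl(1-\mathrm{FNR}\bigr).
\end{aligned}
```

Suppose two groups share the same PPV (predictive parity) and the same FNR but have different base rates $p$. The factor $p/(1-p)$ then differs between them, so their false positive rates must differ as well, and equalized odds fails. The conflict disappears only when base rates are equal or in degenerate cases, such as a classifier with no false positives (PPV = 1).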

The danger is in treating fairness as a box to be checked rather than an ongoing commitment. A fairness audit at the end of model development is like inspecting the ethical wiring of a building only after it’s built. True fairness requires integrating these considerations from the earliest stages of data collection and feature selection, all the way through deployment and monitoring.

The Role of ROC and AUC in Seeing the Whole Field

While fairness grapples with who is affected and how, another family of metrics, ROC curves and the Area Under the Curve (AUC), helps us understand a model’s discriminative power across all possible thresholds.

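A ROC curve plots the true positive rate against the false positive rate for different decision thresholds. A perfect model hugs the top-left corner; a useless model traces the diagonal of random guessing. The AUC distills this curve into a single number between 0 and 1, where 0.5 corresponds to random guessing and higher is better. It has a useful reading: it is the probability that the model scores a randomly chosen positive example above a randomly chosen negative one.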
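The sketch below builds ROC points by sweeping the threshold through every distinct score and then computes the AUC in two ways: as the trapezoidal area under those points, and as the pairwise "positive outranks negative" probability. The scores and labels are hypothetical, and the helper names are invented for illustration:

```python
def roc_points(scores, y_true):
    """(FPR, TPR) pairs from sweeping the threshold down through every distinct score."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing flagged
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, t in zip(scores, y_true) if s >= threshold and t == 1)
        fp = sum(1 for s, t in zip(scores, y_true) if s >= threshold and t == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc_trapezoid(points):
    """Area under a piecewise-linear ROC curve via the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


def auc_rank(scores, y_true):
    """P(random positive scores higher than random negative); ties count as half."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0 for sp in pos for sn in neg)
    return wins / (len(pos) * len(neg))


scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
y_true = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]

print(f"{auc_trapezoid(roc_points(scores, y_true)):.3f}")  # 0.875
print(f"{auc_rank(scores, y_true):.3f}")                   # 0.875
```

The two numbers agree, which is the practical content of the probabilistic reading of AUC.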

These tools are invaluable in comparing models in situations where the threshold can be adjusted later — like fraud detection systems that can decide how aggressive to be in flagging transactions.
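Choosing that threshold later can be as simple as walking down the ROC curve until a budget is hit. This is a hypothetical sketch: it assumes the fraud team can tolerate a false positive rate of at most 20% and picks the most permissive threshold (and therefore the highest recall) within that budget. The scores, labels, and `pick_threshold` helper are invented for illustration:

```python
def pick_threshold(scores, y_true, max_fpr):
    """Lowest threshold whose false positive rate stays within max_fpr.

    Lowering the threshold can only raise (or keep) both recall and FPR, so we
    walk down through the distinct scores and stop once the FPR budget is exceeded.
    Returns (threshold, recall, fpr), or None if no threshold fits the budget.
    """
    pos = sum(y_true)
    neg = len(y_true) - pos
    best = None
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, t in zip(scores, y_true) if s >= threshold and t == 1)
        fp = sum(1 for s, t in zip(scores, y_true) if s >= threshold and t == 0)
        fpr = fp / neg
        if fpr > max_fpr:
            break
        best = (threshold, tp / pos, fpr)
    return best


# Hypothetical fraud scores; label 1 = fraudulent transaction.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
y_true = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]

threshold, recall, fpr = pick_threshold(scores, y_true, max_fpr=0.20)
print(f"threshold={threshold:.2f} recall={recall:.2f} fpr={fpr:.2f}")
# threshold=0.70 recall=0.75 fpr=0.17
```

The same trained model serves a cautious deployment and an aggressive one simply by changing `max_fpr`.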

Yet even a model with a stellar AUC can stumble if the real-world threshold is poorly chosen, or if its underlying data is skewed. The curve doesn’t tell us who is being misclassified, only how well the model separates classes in general.

Calibration: Trusting the Confidence

Another often-overlooked dimension of evaluation is calibration: the alignment between a model’s predicted probabilities and the actual likelihood of outcomes. A well-calibrated weather model that predicts a 70% chance of rain should see rain on about 7 out of every 10 days for which it makes that forecast.
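A calibration plot (reliability diagram) is typically built by binning predictions by predicted probability and comparing each bin's average prediction with the observed frequency. The sketch below does this with equal-width bins and also computes expected calibration error (ECE), a common count-weighted summary of the gaps. ECE is not named in the text above, and the bin count and forecast data are assumptions chosen for illustration:

```python
def reliability_table(probs, outcomes, n_bins=5):
    """Per equal-width bin: (bin index, count, mean predicted prob, observed frequency)."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls into the last bin
        bins[idx].append((p, y))
    table = []
    for idx, members in enumerate(bins):
        if members:  # skip empty bins
            mean_p = sum(p for p, _ in members) / len(members)
            freq = sum(y for _, y in members) / len(members)
            table.append((idx, len(members), mean_p, freq))
    return table


def expected_calibration_error(probs, outcomes, n_bins=5):
    """Count-weighted average gap between mean predicted probability and observed frequency."""
    n = len(probs)
    return sum(count / n * abs(mean_p - freq)
               for _, count, mean_p, freq in reliability_table(probs, outcomes, n_bins))


# Hypothetical: a "70% chance of rain" forecast issued on 10 days,
# scored against two different sets of observed outcomes.
forecasts = [0.7] * 10
rain = [1] * 7 + [0] * 3        # rained on 7 of the 10 days
drier_days = [1] * 4 + [0] * 6  # rained on only 4 of the 10 days

print(f"{expected_calibration_error(forecasts, rain):.2f}")        # 0.00
print(f"{expected_calibration_error(forecasts, drier_days):.2f}")  # 0.30
```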

In critical applications, calibration becomes a matter of trust. When a medical AI assigns a 90% probability to a diagnosis, that diagnosis should turn out to be correct in roughly nine of every ten such cases; otherwise, doctors will either over-trust or under-trust its recommendations, both of which can harm patients.

Calibration plots and metrics like the Brier score help diagnose this alignment, reminding us that it’s not enough for a model to be accurate — it must also know what it doesn’t know.
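The Brier score is the mean squared difference between each predicted probability and the 0/1 outcome, so lower is better. It is not a pure calibration measure, since it also rewards a model's ability to separate cases. Even so, it clearly penalizes confident probabilities that the outcomes do not support. A minimal sketch with hypothetical forecasts, all scored against the same invented outcomes:

```python
def brier_score(probs, outcomes):
    """Mean squared gap between predicted probability and the 0/1 outcome (lower is better)."""
    if len(probs) != len(outcomes) or not probs:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)


rain = [1] * 7 + [0] * 3  # hypothetical: it rained on 7 of 10 days

for name, p in [("honest 0.70", 0.70), ("hedging 0.50", 0.50), ("overconfident 0.99", 0.99)]:
    print(f"{name}: Brier = {brier_score([p] * len(rain), rain):.3f}")
# honest 0.70: Brier = 0.210
# hedging 0.50: Brier = 0.250
# overconfident 0.99: Brier = 0.294
```

The forecaster whose stated confidence matches the observed rate scores best. Overconfidence costs more here than timid hedging does.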

The Human Context of Model Evaluation

Every metric, no matter how sophisticated, exists in a human context. The thresholds we set, the trade-offs we accept, the subgroups we examine — these choices are shaped by values, priorities, and power dynamics.

An AI that predicts recidivism risk with 85% accuracy may seem impressive until we ask: 85% for whom? At what cost to the remaining 15%? And what historical biases are embedded in the data it was trained on?

Evaluation is not just about judging a model’s technical performance; it is about judging its moral footprint. And that means involving not only data scientists, but also domain experts, ethicists, policymakers, and — crucially — the people whose lives will be affected by the AI.

Continuous Evaluation in a Changing World

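A model’s performance is not frozen in time. The world changes: input data shifts, behaviors evolve, and what was accurate yesterday can become dangerously wrong tomorrow. This phenomenon, broadly called drift (data drift when the distribution of inputs changes, concept drift when the relationship between inputs and outcomes changes), makes continuous evaluation essential.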

In finance, consumer spending patterns shift; in healthcare, new diseases emerge; in language, words change meaning. A model that once passed every test can quietly degrade if not monitored.

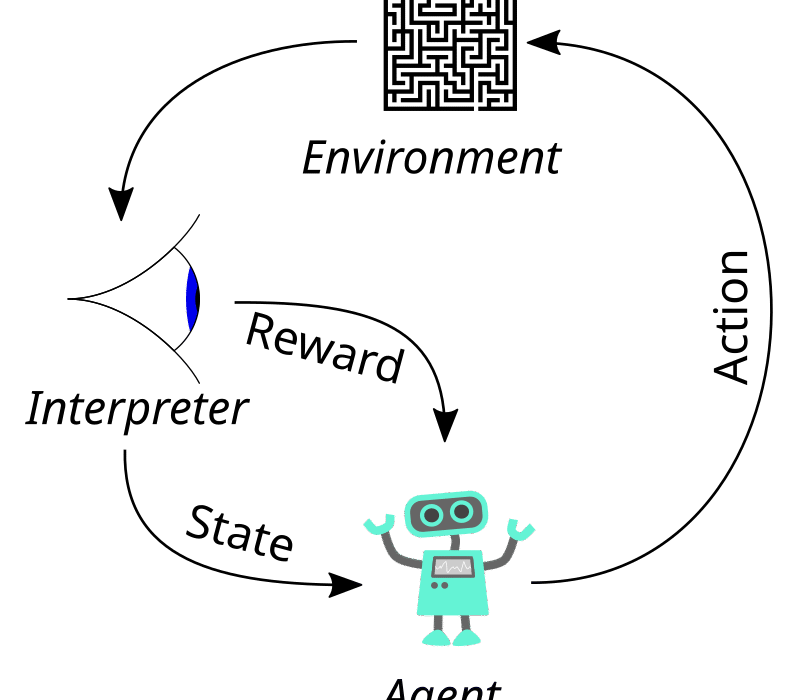

That’s why modern AI deployment strategies treat evaluation as a loop, not a one-time hurdle. Automated monitoring systems track key metrics in real time and alert developers when performance dips for any group or in any context.
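A minimal sketch of such a loop tracks accuracy per group over a sliding window of recent labelled predictions and flags any group that falls below a floor. The class name, window size, floor, and minimum sample count are all hypothetical choices. The sketch also assumes true labels eventually arrive for production predictions, which in practice often happens with a delay:

```python
from collections import defaultdict, deque


class GroupAccuracyMonitor:
    """Hypothetical monitor: per-group accuracy over a sliding window of recent outcomes."""

    def __init__(self, window=100, floor=0.9, min_samples=20):
        self.floor = floor
        self.min_samples = min_samples
        # Each group keeps only its most recent `window` hit/miss records.
        self.windows = defaultdict(lambda: deque(maxlen=window))

    def record(self, group, y_true, y_pred):
        self.windows[group].append(int(y_true == y_pred))

    def alerts(self):
        """Groups with enough recent samples whose windowed accuracy is below the floor."""
        out = {}
        for group, hits in self.windows.items():
            if len(hits) >= self.min_samples:
                acc = sum(hits) / len(hits)
                if acc < self.floor:
                    out[group] = acc
        return out


monitor = GroupAccuracyMonitor(window=40, floor=0.9, min_samples=20)
for _ in range(50):
    monitor.record("A", 1, 1)  # group A: always correct
for i in range(50):
    monitor.record("B", 1, 1 if i < 30 else 0)  # group B: starts failing after 30 cases

print(monitor.alerts())  # {'B': 0.5}
```

Because the window forgets old outcomes, group B's early successes cannot mask its recent failures, and group A's strong record does not dilute them.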

The Future of Metrics: Toward Holistic Evaluation

As AI permeates more areas of life, the very nature of evaluation must expand. Traditional metrics will remain important, but they must be joined by measures of interpretability, robustness, privacy preservation, and environmental impact.

Interpretability asks: Can we explain why the model made a decision? Robustness asks: Does the model hold up under noisy or adversarial input? Privacy preservation asks: Can the model make accurate predictions without exposing sensitive data? Environmental impact asks: How much energy does training and running this model consume, and what is its carbon footprint?

In the future, a truly high-performing AI will be one that scores well not only in accuracy and fairness, but also in transparency, sustainability, and resilience.

The Moral Weight of Metrics

In the end, AI model evaluation is about more than numbers. It is about the kind of world we want to live in. Metrics are the rulers we use to measure intelligence, but they are also the blueprints that shape it. If we measure only speed and accuracy, we will get AI that is fast and precise but potentially blind to justice. If we include fairness, transparency, and human-centered values in our measurements, we invite AI to grow in ways that serve the many, not just the few.

The challenge, and the opportunity, is to recognize that every choice of metric is also a choice of future.