In the grand tapestry of science, there are few goals as profound as understanding how matter organizes itself, transforms, and dances under the laws of nature. From the sudden freezing of water into ice to the delicate folding of a protein molecule that gives life its shape, the underlying story is one of statistical mechanics: the study of how the large-scale behavior of a system emerges from the interactions of its countless tiny parts.

At the heart of this story lies the Boltzmann distribution, one of the crown jewels of physics. It describes how particles in a system spread themselves out among different energy levels when in thermal equilibrium. For scientists, being able to sample this distribution is like having a key to unlock the secrets of phase transitions, chemical reactions, and even the inner workings of biological life. But while the equation itself is elegant, turning it into practical tools for simulation has long been a thorny challenge.
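For reference, the distribution has a compact textbook form. The notation below is conventional and does not appear in the article itself: E_i is the energy of state i, T is the temperature, k_B is Boltzmann's constant, and Z is the partition function.

```latex
p_i = \frac{e^{-E_i / (k_B T)}}{Z}, \qquad Z = \sum_j e^{-E_j / (k_B T)}
```

Lower-energy states are exponentially more likely, and raising T flattens the distribution. The practical difficulty is Z, which sums over every possible state and is rarely computable directly for large systems.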

Now, a team led by Prof. Pan Ding, Associate Professor in the Departments of Physics and Chemistry, and Dr. Li Shuo-Hui, Research Assistant Professor in Physics at the Hong Kong University of Science and Technology (HKUST), has opened a new door. Their novel direct sampling method, powered by deep generative models, enables efficient sampling of the Boltzmann distribution across a continuous temperature range. Their findings, published in Physical Review Letters, could transform how scientists probe the complex landscapes of matter.

Why Sampling Matters

To appreciate the breakthrough, imagine a vast mountain range. Each valley represents a stable configuration of a physical system—perhaps a folded protein or a crystal structure—while the peaks are high-energy barriers that the system rarely crosses. Traditional methods like molecular dynamics (MD) or Markov chain Monte Carlo (MCMC) are like hikers wandering this rugged terrain. Over time, they explore the valleys and occasionally climb over the peaks, but the journey is slow, and many regions remain poorly charted.

The Boltzmann distribution tells us how often the system should be found in each valley, depending on temperature. But sampling it efficiently is like trying to explore every valley in the mountains without spending centuries wandering. This challenge has haunted statistical mechanics for decades.
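The hiker picture can be made concrete with a minimal sketch of a conventional Metropolis MCMC sampler. Everything here is an illustrative assumption rather than anything from the paper: the one-dimensional double-well potential, the function names, the step size, and units in which k_B = 1.

```python
import math
import random


def double_well(x):
    """Toy potential: valleys at x = -1 and x = +1, barrier of height 1 at x = 0."""
    return (x * x - 1.0) ** 2


def metropolis(energy, temperature, n_steps, step_size=0.5, x0=-1.0, seed=0):
    """Sample exp(-energy(x) / temperature) using local random-walk proposals."""
    rng = random.Random(seed)
    x = x0
    e = energy(x)
    samples = []
    for _ in range(n_steps):
        x_new = x + rng.uniform(-step_size, step_size)
        e_new = energy(x_new)
        # Always accept downhill moves; accept uphill moves with Boltzmann probability.
        if e_new <= e or rng.random() < math.exp(-(e_new - e) / temperature):
            x, e = x_new, e_new
        samples.append(x)
    return samples


def fraction_right(samples):
    """Fraction of samples found in the right-hand valley (x > 0)."""
    return sum(1 for x in samples if x > 0) / len(samples)


if __name__ == "__main__":
    for t in (0.05, 1.0):
        s = metropolis(double_well, t, n_steps=20000)
        print(f"T={t}: fraction in right valley = {fraction_right(s):.2f}")
```

Because the potential is symmetric, the exact answer is one half at any temperature. A walker started in the left valley at low temperature will, in practice, stay there for the whole run, which is the slow-exploration problem described above.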

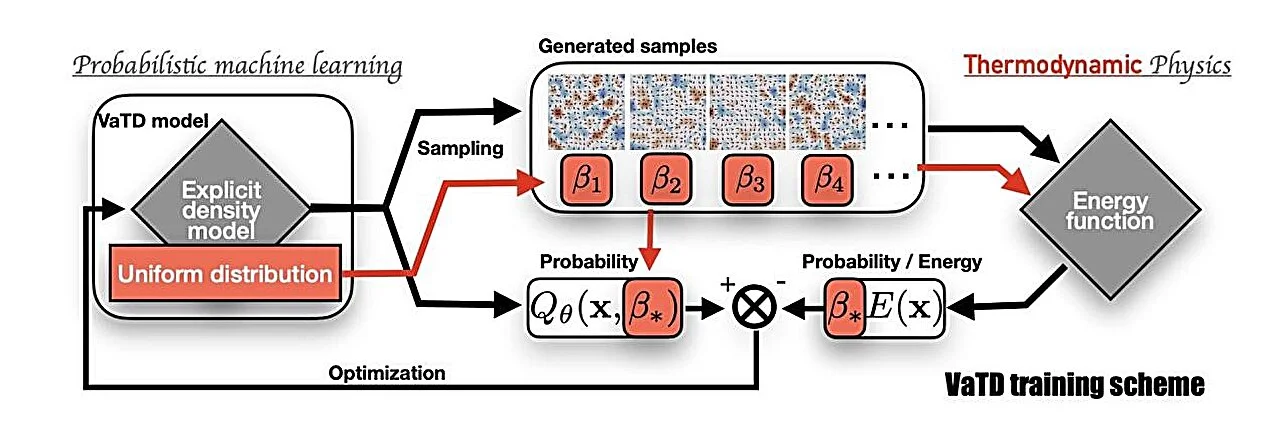

The new approach, called the variational temperature-differentiable (VaTD) method, reshapes the journey entirely. Instead of hikers trudging through the mountains, VaTD acts more like a drone equipped with a map. It learns the probability distribution over the whole energy landscape and allows direct, efficient sampling from it, even across different temperatures.

The Power of Deep Generative Models

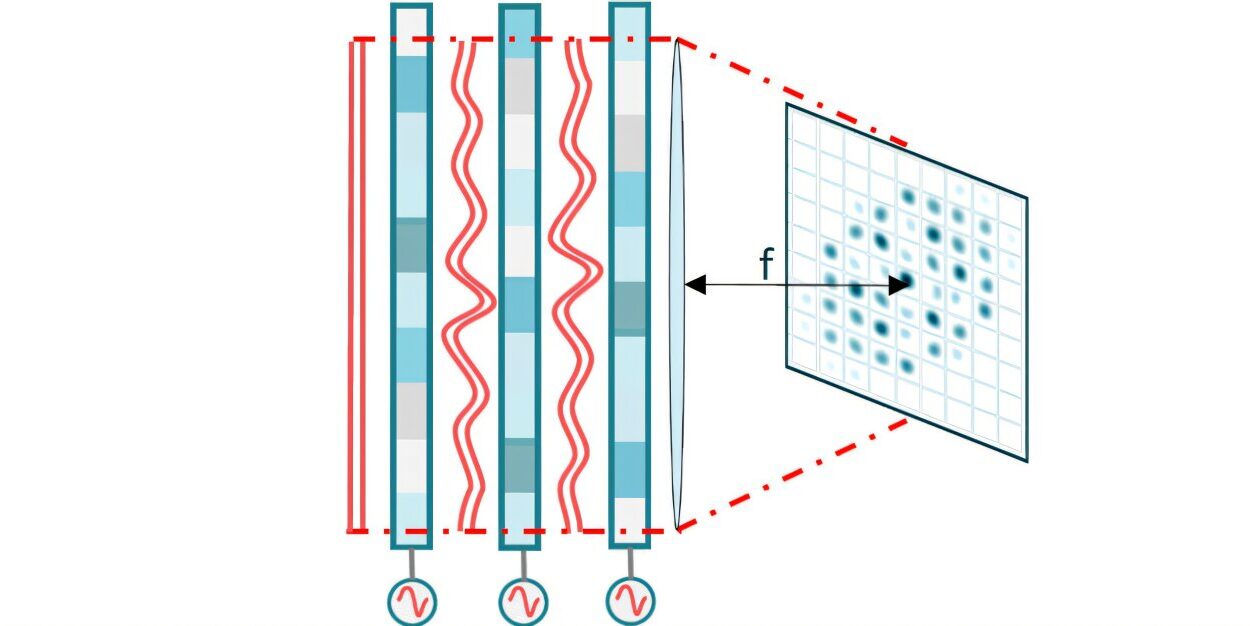

In recent years, deep generative models—the same technologies behind AI-generated images, music, and text—have been revolutionizing science. These models excel at learning complex patterns and generating new data that matches them. In physics, this means they can learn the intricate statistical patterns of systems governed by the Boltzmann distribution.

What makes VaTD so remarkable is its generality. It can be applied to any generative model with a tractable density, meaning one that can report the exact probability of each sample it produces. Autoregressive models and normalizing flows, both powerful tools in modern machine learning, fall into this class. By embedding the problem of physics into the framework of generative modeling, VaTD turns what was once a computational bottleneck into a problem of learning and differentiation.

Unlike many existing machine learning approaches in statistical mechanics, VaTD does not depend on massive datasets pre-computed by molecular dynamics or Monte Carlo simulations. It only requires the potential energy of the system itself. This independence marks a profound shift: instead of relying on laborious simulations to teach the model, the physics directly guides the learning process.
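One standard way to train a generative model from the energy alone is the variational free-energy principle, sketched below. This is the generic textbook form, not necessarily the exact objective used in the paper. The notation is assumed here: q_θ is the model density with parameters θ, E(x) is the potential energy, F(T) is the true free energy, and k_B = 1.

```latex
F_\theta(T) = \mathbb{E}_{x \sim q_\theta}\big[ E(x) + T \ln q_\theta(x) \big] \;\ge\; F(T) = -T \ln Z(T)
```

The gap between the two sides equals T times the Kullback-Leibler divergence from the model to the Boltzmann distribution, so lowering the left-hand side pushes the model toward the target. Evaluating it needs only samples drawn from the model, their log-probabilities, and their energies, which is why a tractable density matters and no pre-computed dataset is needed.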

A Continuous Temperature Bridge

One of VaTD’s most striking features is its ability to handle a continuous range of temperatures. Traditional methods often work at one temperature at a time, and at low temperatures crossing high-energy barriers can take enormous effort. By contrast, VaTD smoothly integrates across temperatures, creating a bridge that allows the system to move freely between valleys in the energy landscape.

This approach not only makes sampling more efficient but also reduces bias in the results. Through automatic differentiation, the method can calculate both the first- and second-order derivatives of thermodynamic quantities with respect to temperature. This capability is akin to approximating an analytical partition function—a long-sought goal in statistical mechanics.
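The standard thermodynamic identities below illustrate what such derivatives provide, assuming the quantity being differentiated is the free energy F(T). The article does not say which quantities the method differentiates. S, U, and C_V denote entropy, internal energy, and heat capacity at fixed volume.

```latex
S = -\frac{\partial F}{\partial T}, \qquad
U = F + T S = F - T \frac{\partial F}{\partial T}, \qquad
C_V = \frac{\partial U}{\partial T} = -T \frac{\partial^2 F}{\partial T^2}
```

A model whose free-energy estimate is a differentiable function of temperature can therefore yield entropy from a first derivative and heat capacity from a second, without separate simulations at neighboring temperatures.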

Under optimal conditions, the framework even provides a theoretical guarantee of producing an unbiased Boltzmann distribution, offering both accuracy and efficiency.

Putting Theory to the Test

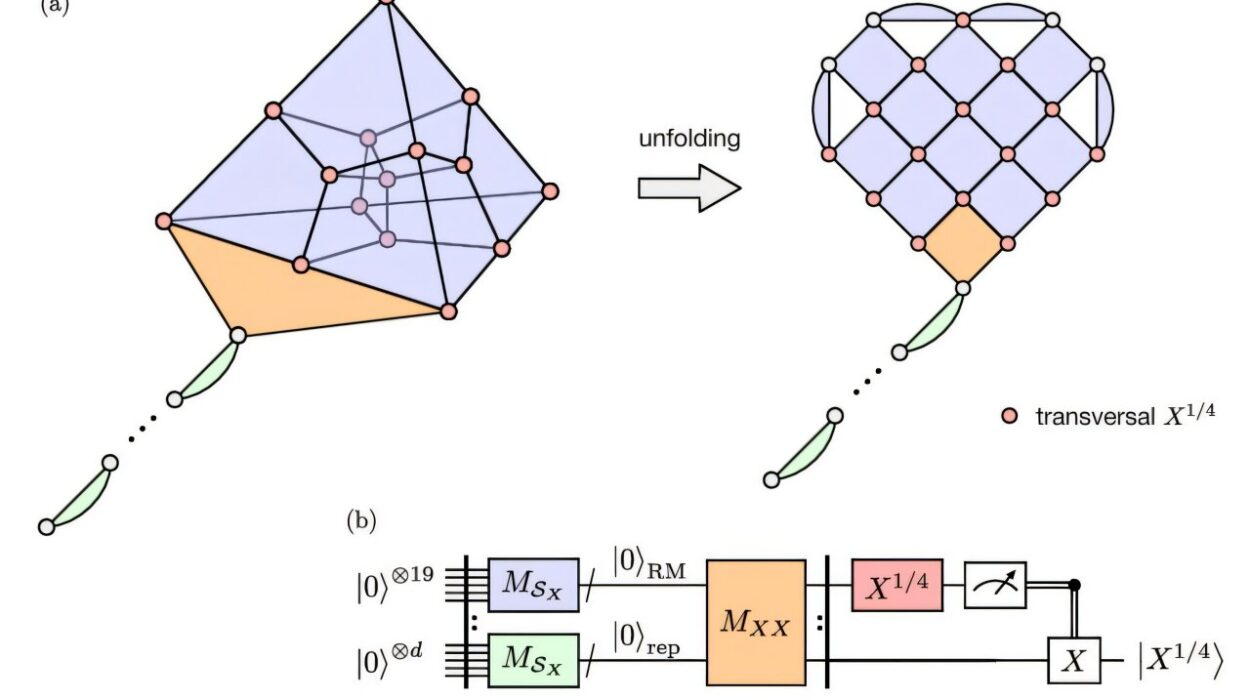

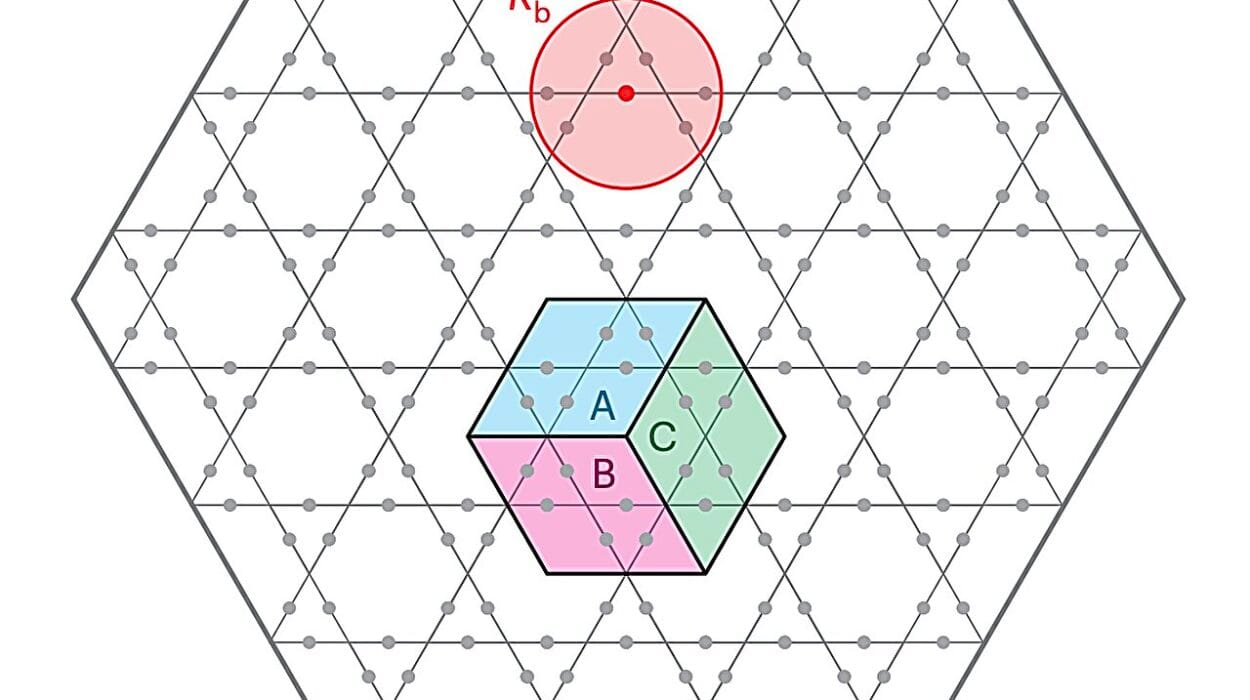

A theory is only as strong as its validation, and the team tested VaTD on classical models of statistical physics, including the Ising model and the XY model. These systems, though simplified, are fundamental playgrounds for exploring phase transitions and collective behavior.
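To show how small the required physical input is, here is a sketch of the energy function for the two-dimensional Ising model. The function name, the `coupling` parameter, and the periodic boundaries are assumptions for illustration; the article does not give the lattice details used in the paper.

```python
def ising_energy(spins, coupling=1.0):
    """Energy of a 2D Ising configuration with periodic boundaries.

    `spins` is a list of rows holding +1 or -1. Each site is paired with its
    right and lower neighbor, so every nearest-neighbor bond is counted once
    (assuming the lattice is at least 3 sites wide in each direction).
    """
    n_rows = len(spins)
    n_cols = len(spins[0])
    total = 0
    for i in range(n_rows):
        for j in range(n_cols):
            right = spins[i][(j + 1) % n_cols]
            down = spins[(i + 1) % n_rows][j]
            total += spins[i][j] * (right + down)
    # Aligned neighbors lower the energy when coupling > 0 (ferromagnet).
    return -coupling * total


if __name__ == "__main__":
    all_up = [[1] * 4 for _ in range(4)]
    print(ising_energy(all_up))
```

With a positive coupling, the fully aligned lattice has the lowest energy and a checkerboard pattern the highest. The competition between this energy and thermal disorder is what produces the model's phase transition.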

The results were clear: VaTD not only matched but outperformed conventional methods in efficiency, capturing the correct thermodynamic behavior across temperature ranges while requiring fewer computational resources. For scientists used to waiting long hours—or days—for simulations to converge, this efficiency could feel like stepping from a horse-drawn carriage into a high-speed train.

Beyond Physics: A Tool for Many Sciences

Though born in the halls of physics, the implications of this breakthrough stretch far beyond. The ability to efficiently sample the Boltzmann distribution touches nearly every field where complexity and thermal fluctuations matter.

In chemistry, it could accelerate the study of reaction rates and molecular structures. In materials science, it may help design novel compounds with properties tailored to industry and technology. In life sciences, it could offer new insights into the folding of proteins or the dynamics of biomolecules, potentially impacting drug design and biotechnology.

As Prof. Pan Ding noted, “This breakthrough paves the way for studying novel phenomena in complex statistical systems, with potential applications in physics, chemistry, materials science, and life sciences.”

The Human Side of Discovery

Behind every scientific innovation lies human imagination and perseverance. For Prof. Pan, Dr. Li, and their colleagues, this work represents more than a technical advance: it is a continuation of the age-old scientific spirit of finding clarity amid complexity. Their achievement blends the rigor of physics with the creativity of machine learning, uniting two intellectual traditions in a single vision.

There is also something profoundly poetic in the idea that the same generative models capable of creating lifelike images and stories can also be harnessed to simulate the fundamental laws of nature. It is a reminder that human ingenuity knows no boundaries, and that the tools we create for art and communication can also illuminate the deepest mysteries of the universe.

Toward a New Era of Statistical Mechanics

The development of VaTD is not the final word but a beginning. Like every breakthrough in physics, it opens as many questions as it answers. How will it perform on even more complex systems, such as large biomolecules or quantum many-body problems? Can it be scaled to guide experiments in real time, suggesting new directions for discovery as data is collected?

The answers lie in the future, but the path is now illuminated. With VaTD, scientists have a new instrument in their orchestra of tools—a way to hear the subtle harmonies of matter more clearly than ever before.

A Universe Becoming Knowable

In the end, this breakthrough is not only about faster simulations or elegant mathematics. It is about our relationship with the universe. Physics has always been the human attempt to understand why things happen as they do, and statistical mechanics, with its interplay of chance and necessity, has always embodied the complexity of reality.

By teaching machines to sample the Boltzmann distribution with unprecedented efficiency, Prof. Pan and Dr. Li’s team have brought us one step closer to seeing that complexity in full detail. It is as if we have tuned a blurry lens and, for the first time, can glimpse the universe’s patterns in sharp focus.

The story of physics is the story of human curiosity refusing to stop. And today, with deep generative models guiding us through the mountains of probability, we are reminded that the universe, however intricate, is not beyond comprehension. It is waiting to be understood, one breakthrough at a time.

More information: Shuo-Hui Li et al., Deep Generative Modeling of the Canonical Ensemble with Differentiable Thermal Properties, Physical Review Letters (2025). DOI: 10.1103/8wx7-kyx8