In the modern world, software has become so deeply intertwined with human activity that it is almost invisible. Every aspect of contemporary life—from communication and healthcare to transportation and finance—depends on the functioning of software systems. Despite its ubiquity, many people interact with software daily without fully understanding what it is, how it works, and why it forms the foundation of the digital age. Software is not merely code or programs; it is a vast ecosystem of logic, design, algorithms, and data that allows machines to perform meaningful tasks. It represents human creativity expressed in computational form, bridging the abstract realm of thought with the concrete functionality of technology.

To understand software is to understand the essence of modern civilization. Just as steam and electricity powered the industrial revolutions, software powers the information revolution. It defines how we work, learn, communicate, and even think. This article explores what software truly is: its nature, types, history, structure, development, and its profound role in shaping society.

The Definition and Nature of Software

Software is a set of instructions, data, or programs that tell a computer how to operate. It is the intangible counterpart to hardware—the physical components of a computer system. While hardware represents the body of a computing machine, software acts as its mind, controlling and directing operations according to human intent. Without software, hardware is inert; it cannot interpret or execute any meaningful task.

The word “software” originated in the mid-20th century as a counterpart to “hardware.” Early computers were manually programmed with switches and punch cards, but as complexity increased, engineers recognized the need to abstract logic from physical machinery. Software emerged as this abstraction—a layer of logic that could be modified independently of the machine.

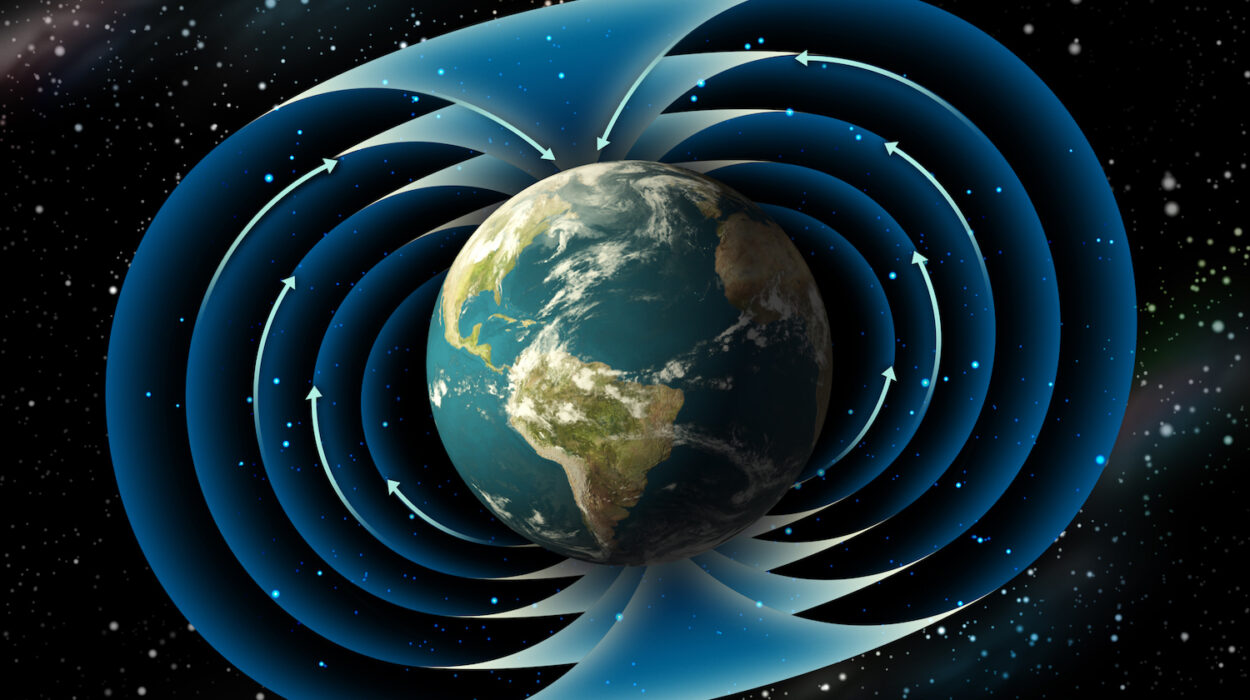

At its most fundamental level, software operates through binary code—strings of zeros and ones that represent electrical signals interpreted by hardware. These binary sequences form machine instructions, which can be organized into higher-level programming constructs. However, software is more than just binary instructions. It encompasses the entire conceptual structure that governs computation: algorithms, data models, interfaces, protocols, and user experiences.
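As a small illustration of the idea that everything a program handles is ultimately a pattern of bits, the following Python sketch prints a number and a short piece of text in binary form. The function name `to_bits` is made up for this example, and the text is assumed to be encoded as UTF-8.

```python
def to_bits(data: bytes) -> str:
    """Return the given bytes as groups of eight binary digits."""
    return " ".join(format(byte, "08b") for byte in data)


number = 5
text = "Hi"

print(format(number, "08b"))           # the integer 5 written as eight bits
print(to_bits(text.encode("utf-8")))   # one group of eight bits per byte of text
```

The same bit patterns could stand for numbers, characters, or machine instructions; it is the surrounding software and hardware that decide how to interpret them.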

From a philosophical standpoint, software is both an art and a science. It is an art because it requires creativity, design, and expression. It is a science because it relies on mathematical logic, engineering principles, and systematic analysis. Together, these aspects make software one of the most complex intellectual creations in human history.

The Historical Evolution of Software

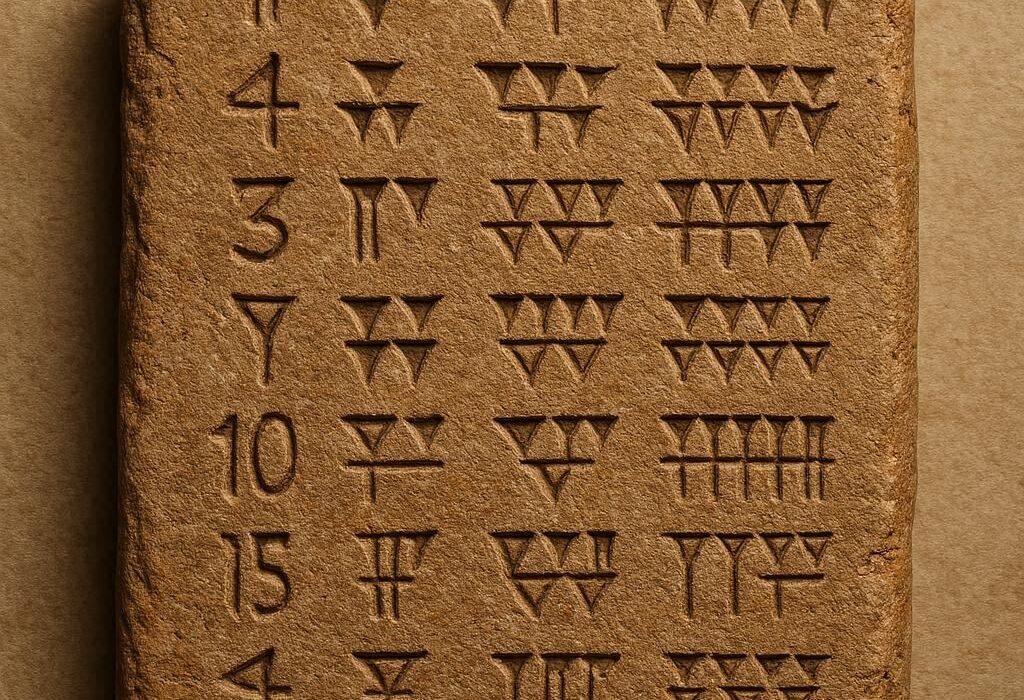

The evolution of software mirrors the evolution of computing itself. In the earliest days of computation, during the 1940s and 1950s, software as we know it did not exist. Early computers like ENIAC and Colossus were programmed manually by setting switches and rewiring plugboards, with punched cards or paper tape used mainly to feed in data. Programs were limited in flexibility and required expert operators.

The stored-program concept, described by John von Neumann in 1945 and developed alongside other pioneers of the period, revolutionized computing. The architecture that now bears his name allowed instructions to be stored in memory alongside data, making computers reprogrammable without changing hardware. This marked the birth of modern computing and the beginning of the software era.
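To make the stored-program idea concrete, here is a toy sketch in Python. It is not a model of any real machine: the four instruction names (`LOAD`, `ADD`, `STORE`, `HALT`) and the single accumulator are assumptions invented for this example. What it does show is that instructions and data sit side by side in one memory, so changing the program means changing memory contents rather than rewiring the machine.

```python
def run(memory):
    """Execute the program stored in `memory`, starting at address 0."""
    acc = 0   # accumulator: the machine's single working register
    pc = 0    # program counter: address of the next instruction
    while True:
        op, arg = memory[pc]
        pc += 1
        if op == "LOAD":
            acc = memory[arg]          # copy a data cell into the accumulator
        elif op == "ADD":
            acc += memory[arg]         # add a data cell to the accumulator
        elif op == "STORE":
            memory[arg] = acc          # write the accumulator back to memory
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction: {op}")


# Instructions (addresses 0-3) and data (addresses 4-6) share one memory.
memory = [
    ("LOAD", 4),    # address 0
    ("ADD", 5),     # address 1
    ("STORE", 6),   # address 2
    ("HALT", 0),    # address 3
    2,              # address 4: first operand
    3,              # address 5: second operand
    0,              # address 6: result is written here
]

run(memory)
print(memory[6])
```

Replacing the entries at addresses 0 to 3 gives the same `run` function an entirely different job, which is the flexibility the stored-program design introduced.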

The 1950s and 1960s saw the rise of high-level programming languages such as FORTRAN, COBOL, and LISP, which allowed programmers to write instructions in human-readable syntax rather than raw machine code. Compilers and interpreters emerged to translate these languages into machine operations, while assemblers did the same for lower-level assembly code, vastly improving productivity.

In the 1970s and 1980s, software became central to the emerging personal computer revolution. Operating systems like UNIX, MS-DOS, and later Windows and the Macintosh operating system (a predecessor of today's macOS) brought structure to how computers executed tasks. Graphical user interfaces (GUIs) made software accessible to non-technical users.

The 1990s brought the World Wide Web and widespread internet access, transforming software from isolated applications into interconnected ecosystems. Web browsers, servers, and online platforms became the new frontier. Software was no longer confined to a single machine; it became a distributed, global phenomenon.

In the 21st century, software has transcended traditional computing devices. It powers smartphones, cloud infrastructure, vehicles, household appliances, and even wearable devices. Artificial intelligence, blockchain, and the Internet of Things (IoT) represent the next frontiers, where software continues to expand into every conceivable domain.

The Components of Software

Software is not a single entity but a layered construct composed of interacting components. Each component serves a specific purpose within the overall structure.

At the base lies system software, which interfaces directly with hardware. This includes operating systems, device drivers, and utility programs that manage system resources. System software provides the foundation upon which all other programs run, controlling memory, processors, storage, and input/output operations.

Above the system layer sits application software—programs designed to perform specific tasks for users. This includes everything from word processors and web browsers to accounting systems and video games. Application software translates user intent into actions that the computer can execute, serving as the primary interface between humans and machines.

Between system and application software lies middleware, which facilitates communication and data management across different applications or systems. Middleware acts as the connective tissue of modern computing, enabling distributed systems and cloud services to function seamlessly.

Another critical component is development software—tools used by programmers to create, test, and maintain other software. Compilers, debuggers, text editors, integrated development environments (IDEs), and version control systems all fall under this category.

Together, these layers form a hierarchy that mirrors the human nervous system: the hardware is the body, system software is the brainstem controlling essential functions, middleware is the network of nerves connecting different regions, and application software represents conscious activity and decision-making.

The Role of Programming Languages

Programming languages are the medium through which software is created. They provide the syntax and semantics that allow humans to express computational logic in a structured form. Over time, hundreds of programming languages have been developed, each designed for particular goals or paradigms.

Assembly language and early high-level languages like FORTRAN stayed close to the machine, offering fine-grained control or raw numerical speed but requiring meticulous attention to detail. Later languages like C, Pascal, and Java offered richer abstractions and greater portability, allowing developers to focus on problem-solving rather than hardware management.

Modern languages such as Python, JavaScript, Rust, and Go emphasize productivity, safety, and readability. They come with extensive libraries and frameworks that simplify complex tasks such as web development, data analysis, and artificial intelligence.

Each language embodies a computational philosophy. Procedural languages focus on sequences of instructions, object-oriented languages organize code around data and behavior, and functional languages treat computation as the evaluation of mathematical functions.
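The difference between these styles is easiest to see when one small task is written three ways. The sketch below uses Python, which supports all three paradigms, to sum the squares of the even numbers in a list. The function and class names are invented for this illustration.

```python
from functools import reduce


def sum_even_squares_procedural(numbers):
    """Procedural style: a sequence of steps that updates a variable."""
    total = 0
    for n in numbers:
        if n % 2 == 0:
            total += n * n
    return total


class EvenSquareAccumulator:
    """Object-oriented style: data (total) bundled with behavior (add)."""

    def __init__(self):
        self.total = 0

    def add(self, n):
        if n % 2 == 0:
            self.total += n * n


def sum_even_squares_object_oriented(numbers):
    accumulator = EvenSquareAccumulator()
    for n in numbers:
        accumulator.add(n)
    return accumulator.total


def sum_even_squares_functional(numbers):
    """Functional style: composed functions, no variable is reassigned."""
    evens = filter(lambda n: n % 2 == 0, numbers)
    squares = map(lambda n: n * n, evens)
    return reduce(lambda a, b: a + b, squares, 0)


values = [1, 2, 3, 4]
print(sum_even_squares_procedural(values))
print(sum_even_squares_object_oriented(values))
print(sum_even_squares_functional(values))
```

All three produce the same answer; what changes is how the programmer organizes the reasoning behind it.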

Ultimately, programming languages are not just tools but expressions of thought. They allow humans to formalize ideas into structures that machines can interpret. Software development, therefore, becomes an act of translation between human reasoning and machine execution.

Operating Systems: The Core of Digital Control

An operating system (OS) is one of the most essential types of software. It serves as the intermediary between hardware and applications, managing resources, processes, and user interaction. Without an operating system, a computer would not be able to multitask or manage memory efficiently.

Operating systems perform numerous critical functions: they allocate memory, schedule processor time, control devices, and enforce security policies. They also provide the file systems that store and organize data.
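Scheduling processor time is one of the easier functions to picture in code. The sketch below simulates round-robin scheduling, a classic textbook policy in which each task runs for a short time slice and then goes to the back of the queue. It is a simplified simulation, not how any particular operating system is implemented, and the task names and durations are hypothetical.

```python
from collections import deque


def round_robin(tasks, time_slice):
    """Simulate round-robin scheduling.

    `tasks` maps a task name to the time units it still needs.
    Returns the order in which tasks ran as (name, units_run) pairs.
    """
    if time_slice <= 0:
        raise ValueError("time_slice must be positive")
    queue = deque(tasks.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run_for = min(time_slice, remaining)
        timeline.append((name, run_for))
        remaining -= run_for
        if remaining > 0:
            queue.append((name, remaining))   # unfinished: back of the queue
    return timeline


# Hypothetical workload: three tasks needing 3, 5, and 2 time units.
schedule = round_robin({"editor": 3, "browser": 5, "backup": 2}, time_slice=2)
for name, units in schedule:
    print(f"{name} runs for {units}")
```

Because no task can hold the processor for longer than one slice, every task makes regular progress, which is what gives users the impression that programs run at the same time.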

Historically, early operating systems were simple and task-specific. As hardware evolved, OS design became increasingly sophisticated. UNIX popularized portability and modularity, influencing generations of operating systems. Microsoft Windows brought user-friendly interfaces to the masses, while macOS emphasized stability and design integration. Linux emerged as the open-source alternative, powering everything from servers to smartphones.

Today, operating systems extend far beyond traditional computers. Android and iOS dominate mobile devices, while embedded systems run on cars, smart appliances, and industrial robots. Cloud operating systems manage distributed data centers across continents. The OS is no longer confined to a single machine; it orchestrates entire networks of machines working together.

Application Software: Enabling Human Productivity

While system software provides the foundation, application software defines the user experience. Applications transform computers into tools for creation, communication, and entertainment. From office suites and web browsers to photo editors and video games, application software reflects the diversity of human needs.

The evolution of applications mirrors technological and cultural shifts. Early applications were text-based and limited in capability. As graphical interfaces became standard, software design expanded to include visual and interactive elements. The rise of the internet brought web-based applications accessible from any device.

Today, applications often run in the cloud, using remote servers to handle computation and data storage. This allows users to access powerful tools without installing them locally. Software-as-a-Service (SaaS) has become a leading delivery model for enterprise and consumer applications alike.

Modern applications also integrate artificial intelligence, enabling features such as voice recognition, predictive typing, and personalized recommendations. This convergence of software and intelligence has blurred the line between tools and assistants, transforming software into an active participant in human decision-making.

The Software Development Process

Creating software is both a technical and creative endeavor. It involves translating abstract requirements into executable logic that performs reliably under real-world conditions. The software development process encompasses several stages—requirements analysis, design, implementation, testing, deployment, and maintenance.

Requirements analysis defines what the software should do. Designers then create an architecture—a blueprint outlining components, interfaces, and data flow. Developers write code to implement this design, using programming languages and frameworks. Testing ensures that the software behaves as intended, detecting bugs and vulnerabilities. Once validated, the software is deployed to users, where it enters the maintenance phase involving updates, optimizations, and new features.

Various development methodologies guide this process. The traditional Waterfall model follows a linear progression, while Agile emphasizes iterative cycles and adaptability. DevOps integrates development and operations, ensuring continuous delivery and automation. Regardless of methodology, collaboration and communication are central to successful software engineering.

The Rise of Open Source

One of the most transformative movements in software history is open source. Open-source software is distributed with source code that anyone can inspect, modify, and share. This philosophy contrasts with proprietary models, where code is kept secret and controlled by its creators.

Open source fosters innovation through collaboration. Projects like Linux, Apache, PostgreSQL, and Python have become foundational components of global infrastructure. Communities of developers contribute improvements, fix bugs, and ensure transparency.

The open-source model has democratized software development. It allows individuals and small organizations to build upon existing frameworks rather than reinventing the wheel. It also promotes security and reliability through collective scrutiny—many eyes examining code for weaknesses.

Today, open source underpins the entire digital ecosystem. Most of the internet runs on open-source servers. Smartphones, cloud platforms, and even AI models depend on open-source software. The success of open source demonstrates that software development thrives when knowledge is shared rather than restricted.

Cloud Computing and Software as a Service

Cloud computing has redefined how software is delivered and consumed. Instead of running programs on a local machine, users can access software hosted on remote servers through the internet. This shift has led to the widespread adoption of Software-as-a-Service, or SaaS.

In the cloud model, infrastructure, platforms, and applications are provided on demand. Users no longer need to install or maintain software locally; they simply connect to it via browsers or APIs. This model offers scalability, reliability, and cost-efficiency. Companies can deploy updates instantly across millions of users, ensuring consistency and security.

Cloud-based software also enables collaboration across geographies. Tools like Google Workspace, Microsoft 365, and Adobe Creative Cloud exemplify how shared environments can enhance productivity. Developers benefit as well, using cloud services for hosting, analytics, and machine learning.

However, cloud computing introduces new challenges. Data privacy, latency, and dependency on network availability are critical concerns. Despite these, the cloud remains the backbone of modern digital infrastructure, making software more dynamic, accessible, and resilient than ever before.

Artificial Intelligence and the Evolution of Software

Artificial intelligence (AI) represents the next major transformation in software. Traditional software follows deterministic rules defined by humans; AI-driven software learns patterns from data and adapts over time. This shift from rule-based to learning-based systems has profound implications.

Machine learning algorithms allow software to perform tasks such as image recognition, language translation, and autonomous decision-making without explicit programming for every scenario. Neural networks, particularly deep learning architectures, have enabled remarkable advances in natural language processing, computer vision, and speech recognition.
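The contrast between a hand-written rule and a learned one can be sketched in a few lines of Python. The example below is far simpler than a neural network: it "learns" a single threshold by taking the midpoint between the average values of two labeled groups. The spam scenario, the feature (number of exclamation marks), and the training data are all made up for illustration.

```python
def rule_based_is_spam(exclamation_marks):
    """Rule-based: a programmer fixed the cutoff in advance."""
    return exclamation_marks > 3


def learn_threshold(examples):
    """Learning-based: derive the cutoff from labeled examples.

    `examples` is a list of (feature_value, is_spam) pairs. The threshold
    is the midpoint between the mean of each class.
    """
    spam = [x for x, label in examples if label]
    not_spam = [x for x, label in examples if not label]
    if not spam or not not_spam:
        raise ValueError("need at least one example of each class")
    return (sum(spam) / len(spam) + sum(not_spam) / len(not_spam)) / 2


# Hypothetical training data: (exclamation marks in a message, is it spam?)
training_data = [(0, False), (1, False), (2, False),
                 (6, True), (8, True), (10, True)]
threshold = learn_threshold(training_data)


def learned_is_spam(exclamation_marks):
    return exclamation_marks > threshold


print(threshold)
print(rule_based_is_spam(4), learned_is_spam(4))
```

If the training data changes, the learned threshold changes with it, whereas the hand-written rule stays the same until someone edits the code. That is the essential shift this section describes.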

AI also redefines the software development process itself. Developers now use AI-assisted coding tools that help generate, optimize, and debug code. Tools such as code completion assistants exemplify how AI can enhance human creativity and efficiency.

Yet, the integration of AI raises ethical and technical questions. Bias in training data can lead to unfair outcomes, and lack of transparency in model behavior challenges accountability. Ensuring that intelligent software remains ethical, explainable, and secure is one of the defining challenges of modern computing.

Embedded and Real-Time Software

Beyond personal computers and smartphones, software increasingly inhabits the physical world. Embedded software runs on microcontrollers and dedicated processors inside products such as cars, medical instruments, and industrial machines. Unlike general-purpose applications, embedded systems operate under strict constraints of memory, power, and real-time responsiveness.

Real-time software must produce outputs within fixed deadlines, often in safety-critical environments. For example, an aircraft’s flight control system cannot afford delays or failures. Such software requires rigorous design, testing, and certification to ensure reliability.
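The notion of a fixed deadline can be expressed very directly. The Python sketch below checks a series of recorded response times against a deadline and reports which cycles were late. The measurements and the 5-millisecond deadline are hypothetical, and real safety-critical systems rely on far more rigorous analysis than an after-the-fact check like this one.

```python
def missed_deadlines(response_times_ms, deadline_ms):
    """Return the indices of cycles whose response time exceeded the deadline."""
    return [i for i, t in enumerate(response_times_ms) if t > deadline_ms]


# Hypothetical response times, in milliseconds, for five control cycles.
measured = [4.1, 3.8, 4.9, 6.2, 4.0]
late = missed_deadlines(measured, deadline_ms=5.0)

print(f"worst case: {max(measured)} ms")
print(f"late cycles: {late}")
```

In a real-time setting, the worst case matters more than the average: four fast cycles do not make up for one late one.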

Embedded and real-time systems illustrate how software bridges the gap between digital and physical domains. They power smart homes, automated factories, and autonomous vehicles, embodying the convergence of computing and engineering.

Cybersecurity and Software Integrity

As software governs more aspects of life, its security becomes paramount. Cybersecurity focuses on protecting software systems from unauthorized access, data breaches, and malicious attacks. Vulnerabilities in software can lead to catastrophic consequences—financial loss, privacy violations, or even threats to national infrastructure.

Secure software development begins with design. Developers must anticipate potential attack vectors and implement safeguards such as encryption, authentication, and sandboxing. Continuous monitoring and patching are essential, as new vulnerabilities emerge daily.
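One concrete safeguard of this kind is storing passwords as salted hashes rather than as plain text. The sketch below uses only Python's standard library (`hashlib.pbkdf2_hmac`, `hmac.compare_digest`, and `secrets.token_bytes`). The function names and the iteration count are assumptions chosen for illustration, not a recommendation for any particular system.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 100_000  # illustrative work factor; an assumption, not a standard


def hash_password(password, salt=None):
    """Return (salt, digest) for the password using PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = secrets.token_bytes(16)   # fresh random salt per password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Recompute the digest and compare it in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))
print(verify_password("wrong guess", salt, stored))
```

Because only the salt and digest are stored, someone who steals the stored values does not learn the password directly, and the constant-time comparison avoids leaking information through timing differences.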

Open-source transparency can enhance security by allowing public review, but it also enables attackers to study weaknesses. Proprietary systems, while less exposed, risk relying on security through obscurity, where flaws stay hidden rather than fixed. Thus, security requires a balance between openness, vigilance, and responsible governance.

The rise of artificial intelligence has also introduced new forms of cyber threats. Adversarial attacks on AI models can manipulate outputs or extract sensitive data. As software grows more intelligent, ensuring its trustworthiness becomes increasingly complex.

The Human Dimension of Software

Although software is technological in nature, it is deeply human in origin and impact. Every line of code reflects decisions made by developers—choices about logic, design, ethics, and purpose. The collective creativity of millions of programmers has built the digital world we inhabit.

Software development is a social activity that depends on collaboration, communication, and empathy. Teams of engineers, designers, testers, and users contribute to shaping how software behaves. Even open-source projects rely on community consensus and shared norms.

Software also shapes human behavior. It influences how we communicate, learn, and perceive reality. Social media algorithms affect public discourse; recommendation engines shape consumer habits. In this sense, software is not only a product of society but also a force that continuously reshapes it.

Software and the Global Economy

The software industry has become one of the largest economic forces on Earth. Software drives innovation across every sector—from healthcare and education to finance and transportation. Companies like Microsoft, Google, and Amazon owe their success to the power of scalable software.

Software’s economic impact extends beyond corporate profits. It enables small businesses to compete globally, automates industrial processes, and fuels the digital marketplace. The gig economy, remote work, and e-commerce all rely on software infrastructure.

Moreover, the rise of software-defined systems—where functionality is determined by code rather than hardware—has revolutionized manufacturing, logistics, and communication. This software-centric paradigm has made adaptability the new competitive advantage.

The Future of Software

The future of software will be defined by intelligence, autonomy, and integration. Advances in quantum computing may redefine computational limits, while AI will continue to blur the boundary between software and cognition. Software will increasingly write itself, optimize itself, and perhaps even understand human intent.

Decentralized technologies such as blockchain may make some software systems more transparent and tamper-evident. Edge computing will bring processing closer to users, enabling low-latency applications for autonomous vehicles and augmented reality. Meanwhile, human-computer interaction will evolve through voice, gesture, and immersive interfaces.

At the same time, software ethics will become a central concern. As code influences critical decisions—from credit approvals to medical diagnoses—society must ensure fairness, accountability, and accessibility. The future of software is not only a technological challenge but also a moral one.

Conclusion

Software is the invisible infrastructure of modern civilization. It powers economies, connects people, and drives scientific discovery. It exists everywhere—from the smallest microchip to the vastness of the internet. More than just code, software embodies human thought translated into logic that machines can execute.

Understanding software means understanding how modern life operates. It is the foundation of communication, automation, and intelligence. As we move further into the digital age, software will continue to expand its influence, shaping not just how we live but what we can imagine.

In its essence, software is the language through which humanity speaks to machines—and in return, machines help us extend our reach beyond imagination. The story of software is the story of human ingenuity: an ongoing dialogue between abstraction and reality, thought and execution, idea and implementation. It is the digital backbone of modern life, and its evolution continues to define the course of human progress.