An algorithm is one of the most fundamental concepts in the modern digital world, serving as the invisible foundation behind every process that happens inside a computer or any automated system. Whether you are scrolling through social media, searching for information on the internet, navigating using GPS, or securing data online, algorithms are quietly working behind the scenes to make it possible. They are the step-by-step procedures or sets of instructions designed to perform specific tasks, solve problems, and process information efficiently and accurately.

The concept of an algorithm predates modern computers by thousands of years. In its simplest form, an algorithm can be understood as a well-defined procedure that takes an input, performs a sequence of operations, and produces an output. From mathematical problem-solving to artificial intelligence, from biological processes to decision-making systems, algorithms provide a logical framework that connects abstract thinking with real-world applications.

Understanding algorithms is essential not only for computer scientists but also for anyone living in a technology-driven age. To comprehend what an algorithm truly is, one must explore its mathematical roots, computational structure, design principles, and far-reaching impact on human civilization.

The Origins and Etymology of the Word “Algorithm”

The word algorithm originates from the Latinized name of the Persian mathematician Muhammad ibn Musa al-Khwarizmi, who worked during the 9th century at the House of Wisdom in Baghdad. His seminal works on mathematics, algebra, and number systems were translated into Latin during the Middle Ages, rendering his name as Algoritmi. Over time, this evolved into the term algorithmus and eventually became algorithm in modern English.

Al-Khwarizmi’s contributions were revolutionary. He introduced systematic procedures for performing arithmetic calculations using the Hindu-Arabic numeral system, which replaced the less efficient Roman numerals. His step-by-step methods for addition, subtraction, multiplication, and division were among the earliest recorded algorithms in written form.

Although the term has ancient origins, the formal definition of an algorithm came much later, in the 1930s, when logicians such as Alan Turing and Alonzo Church gave precise mathematical models of what it means to compute. Today, the word encompasses not only arithmetic rules but also computational logic, machine learning models, encryption protocols, and data processing workflows that form the backbone of modern information systems.

The Fundamental Concept of an Algorithm

At its core, an algorithm is a precise, finite set of instructions designed to achieve a particular objective. Each instruction is clearly defined and can be followed systematically by a person or a machine. The essential features of any algorithm are clarity, finiteness, and effectiveness. It must be unambiguous, must terminate after a limited number of steps, and must produce a result.

Algorithms are not restricted to computers. They can describe any process involving logical decision-making or computation. For example, a cooking recipe can be thought of as an algorithm: it defines a sequence of steps that transform raw ingredients (input) into a finished dish (output). Similarly, the long division method taught in schools is an algorithmic process for finding a quotient and remainder.
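To make the long division example concrete, here is a minimal sketch of the schoolbook method, working through the dividend one digit at a time. The function name `long_division` and the restriction to a non-negative dividend and a positive divisor are assumptions made for this illustration.

```python
def long_division(dividend, divisor):
    """Schoolbook long division: return (quotient, remainder).

    Assumes dividend >= 0 and divisor > 0 (an assumption of this sketch).
    """
    if dividend < 0 or divisor <= 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")

    quotient_digits = []
    remainder = 0
    # Process the dividend from its leftmost digit to its rightmost digit.
    for digit in str(dividend):
        # "Bring down" the next digit.
        remainder = remainder * 10 + int(digit)
        # How many times does the divisor fit into the running remainder?
        q = remainder // divisor
        quotient_digits.append(str(q))
        remainder -= q * divisor
    return int("".join(quotient_digits)), remainder


print(long_division(1234, 7))  # (176, 2), since 7 * 176 + 2 == 1234
```

The loop runs once per digit of the dividend, so the procedure is guaranteed to stop, which is one of the defining properties of an algorithm.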

In computer science, algorithms are expressed in programming languages and implemented to perform automated tasks. They form the logic behind every software system—from simple calculator applications to complex artificial intelligence engines. The study of algorithms involves designing, analyzing, and optimizing these step-by-step procedures to ensure they perform efficiently even when processing massive amounts of data.

Algorithms and Computational Thinking

The idea of algorithmic thinking is closely tied to computational thinking—a problem-solving approach that involves breaking down complex problems into smaller, manageable components that can be addressed systematically. Computational thinking encourages abstraction, pattern recognition, and logical sequencing—all essential skills in algorithm design.

When developing an algorithm, a computer scientist first defines the problem precisely and identifies the necessary inputs and desired outputs. Then, they create a logical sequence of operations that can transform the inputs into outputs. This process mirrors the structure of human reasoning but is formalized and codified in a way that can be executed by machines.

Computational thinking also emphasizes generalization—the ability to create algorithms that are not just solutions to specific instances but can handle a broad range of similar problems. This abstraction is what enables algorithms to power everything from simple spreadsheet calculations to global communication networks.

The Structure and Components of an Algorithm

Every algorithm, regardless of its complexity, can be broken down into a few core components that define its behavior. These components include inputs, outputs, operations, control structures, and conditions.

The inputs are the data or parameters provided to the algorithm before execution. For example, in a sorting algorithm, the input is a list of numbers to be arranged in order. The output is the result produced after processing the input—in this case, the sorted list.

The operations or instructions represent the specific actions that the algorithm performs. These can include arithmetic computations, comparisons, logical decisions, and data manipulations. The control structures, such as loops and conditional statements, determine how and when these operations are executed.

An algorithm must also include termination conditions, ensuring that the procedure eventually stops rather than running indefinitely. Without a termination point, an algorithm would not be useful, as it would never produce a final result.

Together, these elements form a logical flow that can be represented using various notations, such as flowcharts, pseudocode, or programming languages. Regardless of representation, the structure remains consistent—a clear, step-by-step procedure that defines how data moves from input to output.
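The components described above can be pointed out in even a very small procedure. The following sketch finds the largest number in a list; the function name `largest` is hypothetical, and the comments label which part plays which role.

```python
def largest(values):
    """Return the largest element of a non-empty list of numbers."""
    # Input: the list passed in as `values`.
    if not values:
        raise ValueError("values must not be empty")

    best = values[0]
    # Control structure: a loop over the remaining elements.
    # Termination: the list is finite, so the loop ends after len(values) - 1 passes.
    for value in values[1:]:
        # Condition and operation: compare, then keep the larger value.
        if value > best:
            best = value
    # Output: the result handed back to the caller.
    return best


print(largest([3, 9, 2]))  # 9
```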

Mathematical Foundations of Algorithms

The concept of algorithms is deeply rooted in mathematics. Long before computers existed, mathematicians developed algorithmic procedures for solving equations, calculating square roots, and determining the greatest common divisor. One of the earliest known algorithms is the Euclidean algorithm, described by the ancient Greek mathematician Euclid in his Elements around 300 BCE, which efficiently computes the greatest common divisor of two numbers.
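As an illustration, the Euclidean algorithm fits in a few lines. This sketch uses the common modern formulation based on remainders rather than Euclid's original repeated subtraction; the function name `gcd` is chosen here for clarity (Python's standard library already offers `math.gcd`).

```python
def gcd(a, b):
    """Greatest common divisor of two integers via the Euclidean algorithm."""
    a, b = abs(a), abs(b)
    # Key fact: gcd(a, b) == gcd(b, a mod b).
    # b strictly decreases on every pass, so the loop must terminate.
    while b != 0:
        a, b = b, a % b
    return a


print(gcd(48, 18))     # 6
print(gcd(1071, 462))  # 21
```

For 48 and 18 the pairs visited are (48, 18), (18, 12), (12, 6), (6, 0), at which point the answer 6 is returned.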

In modern times, algorithms are central to discrete mathematics, number theory, combinatorics, and logic. They are also the foundation of computational complexity theory—a branch of mathematics that studies the efficiency of algorithms in terms of time and space resources.

Mathematically, an algorithm can be viewed as a function that maps inputs to outputs through a well-defined sequence of transformations. This abstract perspective allows algorithms to be analyzed formally, independent of any specific programming language or hardware. It also makes it possible to prove properties such as correctness (whether the algorithm produces the right result) and complexity (how efficiently it operates).

The Role of Algorithms in Computer Science

In computer science, algorithms are the foundation of all computation. Every program or application is essentially an implementation of one or more algorithms. The efficiency and accuracy of a computer program depend largely on the quality of its underlying algorithms.

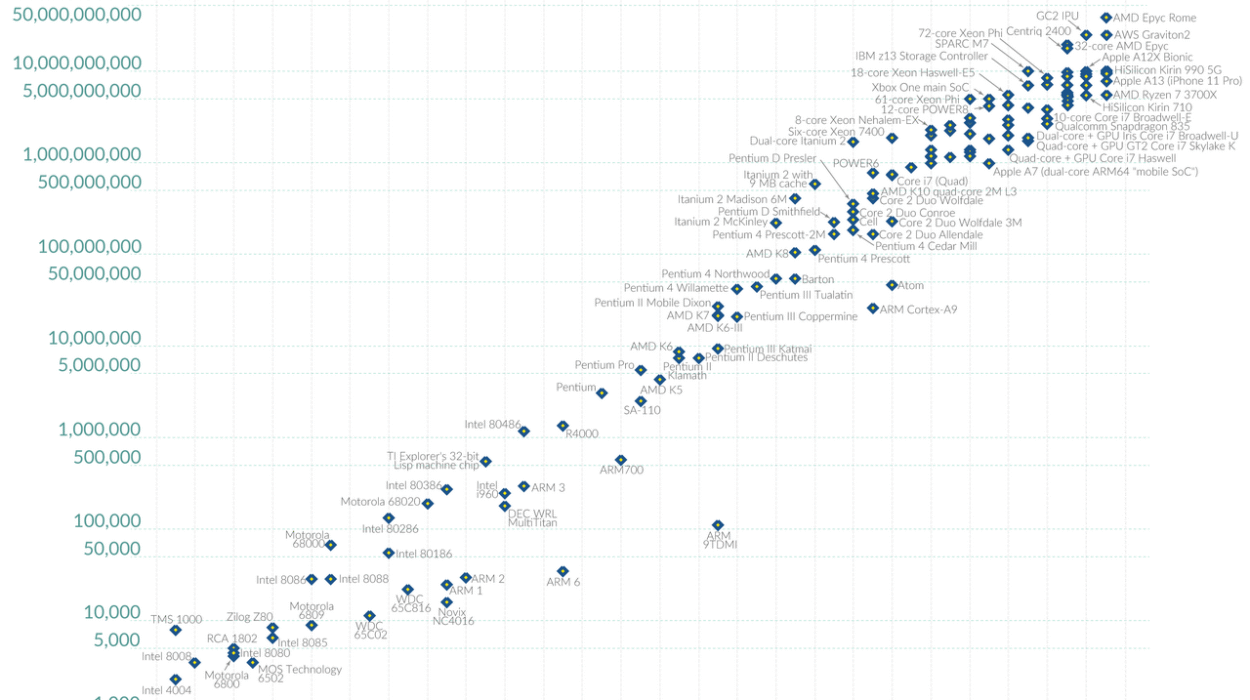

Computer scientists study algorithms from two main perspectives: design and analysis. Algorithm design focuses on creating procedures that solve specific problems effectively. Algorithm analysis evaluates the performance of these procedures in terms of speed (time complexity) and memory usage (space complexity).

For example, there are many ways to sort a list of numbers—bubble sort, merge sort, quicksort, and heapsort, among others. Although they all achieve the same result, their efficiency differs significantly depending on the size and nature of the data. Algorithm analysis helps determine which approach is optimal for a given situation.
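A small experiment shows how the nature of the data affects the work done. The sketch below is one possible bubble sort that also counts comparisons; the early exit when a pass makes no swaps is a common optimization assumed here, not something the text prescribes.

```python
def bubble_sort(items):
    """Return (sorted copy of items, number of comparisons performed)."""
    result = list(items)  # work on a copy so the caller's list is untouched
    comparisons = 0
    for end in range(len(result) - 1, 0, -1):
        swapped = False
        for i in range(end):
            comparisons += 1
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:
            break  # no swaps in a full pass means the list is already sorted
    return result, comparisons


print(bubble_sort([1, 2, 3, 4, 5]))  # ([1, 2, 3, 4, 5], 4)
print(bubble_sort([5, 4, 3, 2, 1]))  # ([1, 2, 3, 4, 5], 10)
```

Both calls produce the same sorted list, but the reversed input needs more than twice as many comparisons as the already sorted one, and the gap widens as the list grows.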

In practice, algorithms form the logic behind all software systems. Operating systems use scheduling algorithms to manage processes. Search engines use ranking algorithms to retrieve relevant results. Cryptographic algorithms secure communications and protect data. Artificial intelligence systems rely on learning algorithms that allow them to adapt and improve over time.

Data Structures and Algorithms

Algorithms and data structures are closely related. While an algorithm defines the process for solving a problem, a data structure defines how the information involved in that process is organized and stored. Together, they form the foundation of efficient computation.

For example, a search algorithm that looks for a specific element in a list will perform differently depending on how the list is structured. A simple unsorted list might require scanning every element, which takes time proportional to the list’s length, whereas a balanced binary search tree can locate the element in a number of steps proportional to the logarithm of the number of elements.
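The effect of organization on search cost can be sketched by counting steps. Binary search over a sorted Python list is used here as a simpler stand-in for a tree-based structure; both rely on ordering to discard half of the remaining candidates at each step. The function names are hypothetical.

```python
def linear_search(items, target):
    """Scan left to right. Return (index or -1, number of elements examined)."""
    steps = 0
    for index, value in enumerate(items):
        steps += 1
        if value == target:
            return index, steps
    return -1, steps


def binary_search(sorted_items, target):
    """Halve the search range each step. Requires sorted input."""
    steps = 0
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return -1, steps


data = list(range(0, 2000, 2))  # 1000 sorted even numbers
print(linear_search(data, 1998))  # examines all 1000 elements
print(binary_search(data, 1998))  # needs about ten steps
```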

Understanding the relationship between algorithms and data structures is one of the core principles of computer science. A well-designed algorithm must take advantage of appropriate data structures to minimize computation time and memory use. This is why most university courses in computer science teach them together as an integrated subject.

Algorithm Design Paradigms

Over decades of research and practice, computer scientists have developed various strategies for designing algorithms. These strategies, known as algorithm design paradigms, provide general approaches that can be applied to many types of problems.

One common paradigm is divide and conquer, in which a large problem is broken into smaller subproblems that are solved independently and then combined to form the final solution. Merge sort and quicksort are classic examples of this approach.
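A minimal merge sort shows the three phases of divide and conquer: split, solve each half, combine. This is a sketch that favors readability over memory efficiency, since it copies sublists instead of sorting in place.

```python
def merge_sort(items):
    """Return a new sorted list using divide and conquer."""
    # Base case: a list of zero or one element is already sorted.
    if len(items) <= 1:
        return list(items)

    # Divide: split the list in half and sort each half recursively.
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    # Combine: merge the two sorted halves into one sorted list.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two
    merged.extend(right[j:])  # still has elements left
    return merged


print(merge_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```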

Another important paradigm is dynamic programming, which involves solving complex problems by breaking them down into overlapping subproblems and storing the results of these subproblems to avoid redundant calculations. This approach is widely used in optimization and decision-making problems.
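As one illustration of dynamic programming applied to an optimization problem, the sketch below finds the fewest coins needed to make a given amount. The problem choice and the name `min_coins` are assumptions for this example. The table `best` stores the answer to every smaller subproblem so that none is computed twice.

```python
def min_coins(amount, denominations):
    """Fewest coins summing to `amount`, or None if it cannot be made."""
    INF = float("inf")
    # best[v] holds the fewest coins needed to make value v.
    best = [0] + [INF] * amount
    for value in range(1, amount + 1):
        for coin in denominations:
            # Reuse the stored answer for the smaller subproblem value - coin.
            if coin <= value and best[value - coin] + 1 < best[value]:
                best[value] = best[value - coin] + 1
    return best[amount] if best[amount] != INF else None


print(min_coins(6, [1, 3, 4]))  # 2, using 3 + 3
print(min_coins(7, [2, 4]))     # None, an odd amount cannot be made
```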

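Other paradigms include greedy algorithms, which build a solution step by step by choosing the locally optimal option at each stage, and backtracking, which builds candidate solutions incrementally and abandons a partial candidate as soon as it cannot be extended to a valid solution. Greedy choices are fast but do not always lead to a globally optimal answer, so their correctness has to be argued for each problem.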
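The two paradigms can be sketched side by side. The examples chosen here (making change greedily, and counting N-Queens placements by backtracking) are illustrative assumptions, as are the function names.

```python
def greedy_change(amount, denominations):
    """Greedy: always take the largest coin that still fits.

    Assumes a coin of value 1 is available so the amount can always be made.
    """
    coins = []
    for coin in sorted(denominations, reverse=True):
        while amount >= coin:
            coins.append(coin)
            amount -= coin
    return coins


def count_queens(n):
    """Backtracking: count ways to place n non-attacking queens on an n x n board."""
    columns, diag_down, diag_up = set(), set(), set()

    def place(row):
        if row == n:
            return 1  # every row holds a queen: one complete solution
        total = 0
        for col in range(n):
            if col in columns or (row - col) in diag_down or (row + col) in diag_up:
                continue  # square is attacked: skip it
            columns.add(col); diag_down.add(row - col); diag_up.add(row + col)
            total += place(row + 1)
            # Undo the choice (backtrack) before trying the next column.
            columns.remove(col); diag_down.remove(row - col); diag_up.remove(row + col)
        return total

    return place(0)


print(greedy_change(63, [25, 10, 5, 1]))  # [25, 25, 10, 1, 1, 1]
print(greedy_change(6, [1, 3, 4]))        # [4, 1, 1], but 3 + 3 uses fewer coins
print(count_queens(8))                    # 92
```

The second call shows a case where the locally best choice does not give the best overall answer, which is why greedy methods need a correctness argument for each problem.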

These paradigms demonstrate that algorithm design is as much an art as it is a science. It requires creativity, analytical thinking, and a deep understanding of both the problem and the tools available to solve it.

Algorithm Complexity and Efficiency

One of the most important aspects of algorithm study is understanding how efficiently an algorithm performs. Efficiency is typically measured in terms of time complexity and space complexity—that is, how long the algorithm takes to execute and how much memory it uses.

To describe efficiency, computer scientists use asymptotic notation, such as Big O notation, which gives an upper bound on how an algorithm’s running time grows as the input size n increases. For example, an algorithm with time complexity O(n) scales linearly with input size, while the running time of an O(n²) algorithm grows quadratically: doubling the input roughly quadruples the work, so it becomes significantly slower for large inputs.
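The difference between linear and quadratic growth is easy to see by counting basic steps directly. The two functions below are hypothetical stand-ins for any single-loop and nested-loop procedure.

```python
def count_linear(n):
    """One pass over n items: n steps."""
    steps = 0
    for _i in range(n):
        steps += 1
    return steps


def count_quadratic(n):
    """Every item paired with every item: n * n steps."""
    steps = 0
    for _i in range(n):
        for _j in range(n):
            steps += 1
    return steps


for n in (10, 100, 1000):
    print(n, count_linear(n), count_quadratic(n))
# 10 10 100
# 100 100 10000
# 1000 1000 1000000
```

Each tenfold increase in n multiplies the linear count by ten and the quadratic count by one hundred.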

Efficient algorithms are essential for modern computing, where data sets can reach billions of elements. Even small improvements in algorithmic performance can have enormous effects when scaled across global systems like search engines, cloud servers, and data centers.

However, not all problems have known efficient solutions. Some problems belong to the class known as NP-complete: no polynomial-time algorithm is known for any of them, and finding one for a single NP-complete problem would yield one for every problem in NP. Whether such an algorithm exists, that is, whether every problem whose solutions can be checked efficiently can also be solved efficiently, is the famous “P vs NP problem”, which remains one of the most significant unsolved questions in computer science.
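Subset sum, a well-known NP-complete problem, illustrates the gap between checking and finding. In the sketch below, verifying a proposed subset takes a single pass, while the naive search may have to examine all 2^n subsets. The function names are hypothetical, and brute force is used only to show the growth, not as a recommended method.

```python
from itertools import combinations


def verify(subset, target):
    """Checking a proposed answer is cheap: just add it up."""
    return sum(subset) == target


def find_subset(numbers, target):
    """Brute-force search. Return (subset or None, number of subsets checked)."""
    checked = 0
    for size in range(len(numbers) + 1):
        for subset in combinations(numbers, size):
            checked += 1
            if verify(subset, target):
                return subset, checked
    return None, checked


numbers = [3, 34, 4, 12, 5, 2]
print(find_subset(numbers, 9)[0])  # (4, 5)
print(find_subset(numbers, 1000))  # (None, 64): all 2**6 subsets were tried
```

Adding one more number to the list doubles the worst-case number of subsets to check.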

Algorithms in Artificial Intelligence

In the field of artificial intelligence (AI), algorithms play a central role in enabling machines to learn, reason, and make decisions. AI algorithms are designed to process vast amounts of data, identify patterns, and adapt based on experience.

Machine learning algorithms, a subset of AI, use data-driven techniques to improve performance over time without explicit reprogramming. Examples include decision trees, neural networks, support vector machines, and reinforcement learning models. These algorithms are responsible for the remarkable progress seen in image recognition, language processing, autonomous vehicles, and recommendation systems.
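The idea of improving from data rather than from reprogramming can be shown with a very small model. The sketch below fits a straight line to points by gradient descent on the mean squared error; the function name `fit_line`, the learning rate, and the number of passes are all assumptions picked for this toy data set, and the methods named above are far more elaborate.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start with no knowledge of the data
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training point under the current w and b.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Move both parameters a small step in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0, 1, 2, 3, 4]
ys = [2 * x + 1 for x in xs]  # data generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # close to 2 and 1
```

The code never contains the numbers 2 and 1 as parameters; they are recovered from the examples, and different data would yield a different line without any change to the program.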

In deep learning, complex algorithms inspired by the human brain—known as artificial neural networks—enable systems to process massive datasets and perform tasks that were once thought to require human intelligence. These include understanding speech, generating realistic images, and even composing music.

The success of modern AI demonstrates the immense power of algorithms when combined with computational resources and data. Yet, it also raises ethical questions about transparency, fairness, and accountability, as algorithmic decisions increasingly affect society in significant ways.

Algorithms in Everyday Life

Even beyond computing, algorithms have become deeply embedded in modern life. They determine what news articles appear on social media feeds, how search engines rank websites, and how online stores recommend products. They manage air traffic, control financial trading, diagnose diseases, and optimize logistics in supply chains.

In many cases, algorithms are invisible to the user but profoundly influential. They filter the overwhelming flood of digital information, helping individuals find what they need quickly. However, their pervasiveness also introduces challenges—bias in algorithmic decision-making, privacy concerns, and the need for greater transparency in automated systems.

Understanding algorithms helps people become more informed digital citizens, capable of critically evaluating the systems they interact with daily.

The Ethics and Responsibility of Algorithms

As algorithms take on more decision-making power in society, ethical considerations become increasingly important. Algorithms used in hiring, lending, policing, and healthcare can have life-altering consequences. If they are based on biased data or flawed design, they can perpetuate or amplify social inequalities.

Algorithmic bias occurs when a model reflects or reinforces existing prejudices in its training data. This can lead to unfair treatment of individuals or groups, even when the algorithm’s decisions appear objective. Addressing this issue requires transparency, rigorous testing, and ethical oversight during algorithm development.

Another concern is accountability. When an algorithm makes a decision—such as denying a loan or recommending a medical treatment—who is responsible if that decision is wrong? Ensuring that algorithms remain explainable and auditable is critical for maintaining public trust in automated systems.

As society becomes more reliant on algorithms, the field of algorithmic ethics has emerged to address these challenges, emphasizing the need for fairness, accountability, and human oversight.

The Future of Algorithms

The future of algorithms lies in their increasing complexity and integration into every aspect of human life. As quantum computing develops, new kinds of algorithms—quantum algorithms—are being designed to exploit the unique properties of quantum mechanics. These could solve problems that are currently infeasible for classical computers, such as large-scale cryptographic analysis and molecular simulation.

In the coming decades, algorithms will continue to shape advances in artificial intelligence, biotechnology, robotics, and data science. They will become more adaptive, autonomous, and context-aware, capable of interacting seamlessly with human environments.

However, with greater power comes greater responsibility. The challenge for the next generation of scientists and engineers is not only to make algorithms more powerful but also more ethical, transparent, and aligned with human values.

Conclusion

An algorithm is far more than a sequence of computational steps—it is a universal idea that bridges logic, mathematics, and technology. From ancient arithmetic to artificial intelligence, algorithms have guided humanity’s understanding of how to solve problems systematically and efficiently.

They embody the very essence of reasoning and structure, transforming abstract ideas into concrete actions that power the digital age. In every sense, algorithms are the language of modern civilization, shaping how we compute, communicate, and make decisions.

As we move deeper into an era defined by automation and artificial intelligence, understanding algorithms becomes essential not just for scientists and engineers but for everyone. They are not merely tools of computation; they are frameworks of thought that reveal how logic, data, and creativity combine to shape the future of human knowledge.