Quantum computers have long been hailed as the next great leap in technology, promising to outperform classical machines at tasks that are currently impossible or impractically slow. Unlike traditional computers, which process information in bits that are either 0 or 1, quantum computers use qubits: quantum bits that can be in a combination of 0 and 1 at the same time. This ability, known as superposition, together with entanglement, which links qubits so that they can no longer be described independently, gives quantum computers extraordinary potential power.

But with this promise comes a daunting challenge: how do we know a quantum computer is truly doing what we expect? A classical computer’s internal state can be read out and checked step by step. A quantum processor offers no such window, because measuring a qubit disturbs its state, and those states are complex, fleeting, and easily disrupted by noise. As quantum devices grow larger and more sophisticated, verifying their correct behavior becomes not only harder but also absolutely essential for the field to progress.

The Need for a Clearer Picture

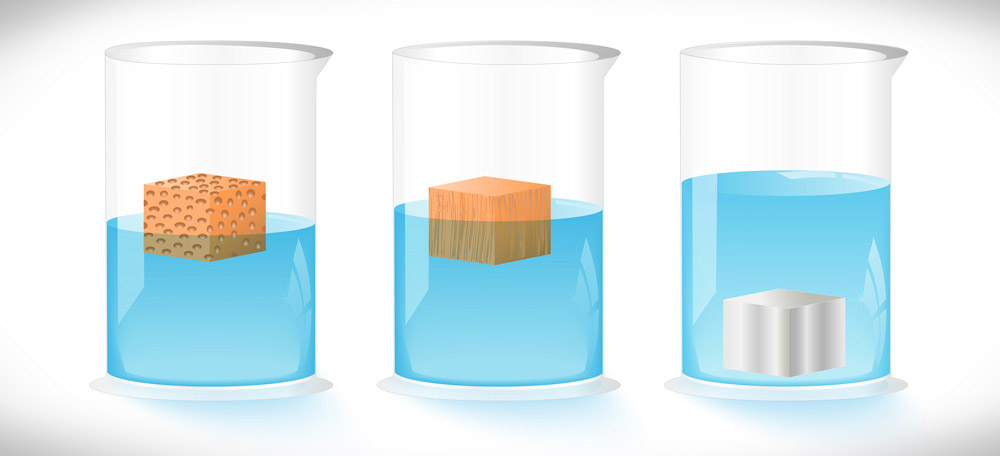

Traditionally, physicists have relied on quantum state tomography (QST), a process that attempts to reconstruct the full description of a quantum system from measurements. In principle, QST works much like taking many photographs of an object from different angles and then piecing them together into a 3D model. In practice, it runs into severe limitations. The number of measurements needed grows exponentially with the number of qubits, so for systems larger than a handful of qubits the process becomes nearly impossible: it consumes enormous computational resources and produces unreliable results when data is incomplete or noisy.
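
To put rough numbers on that growth, the sketch below counts two standard quantities for n qubits: the 4^n - 1 real parameters of a general density matrix, and the 3^n measurement settings of the common Pauli-basis scheme, in which every qubit is measured along X, Y, or Z. It is an illustrative back-of-envelope calculation, not the measurement budget of any particular experiment, and the function name `tomography_cost` is hypothetical.

```python
def tomography_cost(n_qubits: int) -> tuple[int, int]:
    """Return (free parameters, Pauli measurement settings) for n qubits.

    Illustrative only: a general n-qubit density matrix has 4**n - 1
    independent real parameters, and measuring every qubit along X, Y,
    or Z gives 3**n distinct measurement settings.
    """
    if n_qubits < 1:
        raise ValueError("need at least one qubit")
    parameters = 4 ** n_qubits - 1
    settings = 3 ** n_qubits
    return parameters, settings


if __name__ == "__main__":
    for n in (1, 2, 5, 10, 17):
        params, settings = tomography_cost(n)
        print(f"{n:>2} qubits: {params:,} parameters, {settings:,} settings")
```

For 17 qubits this gives 17,179,869,183 parameters and 129,140,163 measurement settings, which is why brute-force tomography at that scale is out of reach.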

This bottleneck poses a critical question for the future of quantum computing: as processors expand beyond 10, 20, or 100 qubits, how can we still see clearly what’s happening inside? Without an answer, building trustworthy quantum technology would be like constructing a powerful machine with no way to test whether its gears truly work.

A New Tool for the Quantum Age

A team of researchers led by Dapeng Yu at Shenzhen International Quantum Academy, along with collaborators at Tongji University and other institutes in China, believes it has found a promising solution. The team’s work, recently published in Physical Review Letters, introduces a new mathematical method designed to characterize quantum states more accurately than conventional tomography, even in the noisy and resource-limited conditions of real-world hardware.

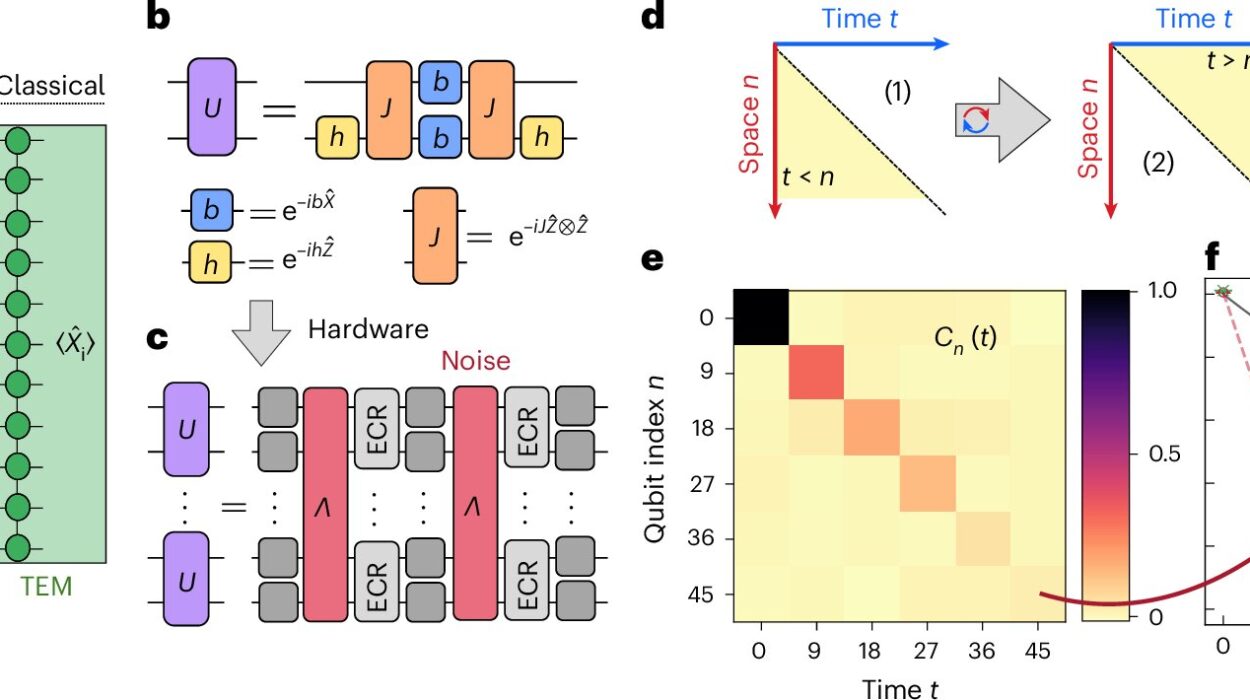

The breakthrough lies in what the team calls a purity-regularized least-squares estimator. A least-squares estimator searches for the quantum state whose predicted measurement outcomes best fit the recorded data; “purity-regularized” means the fit also includes a penalty term based on the state’s purity. In effect, the algorithm acts like a photo-editing tool for the quantum world. Imagine trying to sharpen a blurry photograph taken in poor light. The algorithm doesn’t simply accept the raw, noisy image; instead, it applies a guiding principle, using what is known about the expected “purity” of a quantum state to clean up the picture. The result is a much clearer and more faithful reconstruction of the quantum system.
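
The paper’s exact estimator is not spelled out in this article, so what follows is only a toy sketch of the general idea, under stated assumptions: a single qubit described by its Bloch vector r, noisy estimates of the expectation values ⟨X⟩, ⟨Y⟩, ⟨Z⟩ as the data, and a loss equal to the squared fitting error plus λ times the squared gap between the purity Tr(ρ²) = (1 + |r|²)/2 and a target purity P. The function names, the value of λ, and the projected-gradient-descent solver are all illustrative choices, not the authors’ method.

```python
import math


def bloch_purity(r):
    """Purity Tr(rho^2) of the qubit state rho = (I + r . sigma) / 2."""
    return (1.0 + sum(x * x for x in r)) / 2.0


def purity_regularized_ls(measured, target_purity=1.0, lam=5.0,
                          step=0.05, iters=500):
    """Toy purity-regularized least squares for one qubit (hypothetical).

    Minimizes  sum_k (r_k - m_k)^2 + lam * (Tr(rho^2) - P)^2  over the Bloch
    vector r, where m holds noisy estimates of <X>, <Y>, <Z>. Projected
    gradient descent keeps |r| <= 1, i.e. a physical state.
    """
    r = list(measured)
    for _ in range(iters):
        gap = bloch_purity(r) - target_purity
        # Gradient of the loss; d Tr(rho^2) / d r_k = r_k.
        grad = [2.0 * (rk - mk) + 2.0 * lam * gap * rk
                for rk, mk in zip(r, measured)]
        r = [rk - step * gk for rk, gk in zip(r, grad)]
        norm = math.sqrt(sum(x * x for x in r))
        if norm > 1.0:  # project back onto the Bloch ball
            r = [x / norm for x in r]
    return r


if __name__ == "__main__":
    noisy = [0.0, 0.0, 0.8]  # hypothetical noisy data for the pure state |0>
    raw = purity_regularized_ls(noisy, lam=0.0)  # plain least squares
    reg = purity_regularized_ls(noisy, lam=5.0)  # with purity penalty
    print("raw purity:        ", round(bloch_purity(raw), 3))
    print("regularized purity:", round(bloch_purity(reg), 3))
```

With λ = 0 the sketch reduces to plain least squares and returns the measured vector unchanged (purity 0.82); with the penalty switched on, the estimate is pulled toward a pure state. The weight λ sets how strongly prior knowledge about purity overrides the raw data.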

From Blurry Data to Sharp Insights

In quantum mechanics, a “pure” state represents a perfectly defined quantum condition, while a “mixed” state is a statistical blend of possible states, the kind of state that results when noise degrades a pure one. The team’s method cleverly uses information about purity as a constraint during reconstruction. This keeps the algorithm from wandering too far off course when data is incomplete or corrupted.
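
For readers who want the formula: the purity of a state with density matrix ρ is Tr(ρ²). It equals 1 for a pure state and drops to 1/d for the maximally mixed state of a d-dimensional system (1/2 for a single qubit). The short sketch below computes it for a few single-qubit examples using plain nested lists; the `depolarize` helper is a hypothetical white-noise model chosen only for illustration.

```python
def purity(rho):
    """Tr(rho^2) for a Hermitian density matrix given as nested lists.

    For Hermitian rho, Tr(rho^2) = sum over i, j of |rho_ij|^2.
    """
    return sum(abs(x) ** 2 for row in rho for x in row)


def depolarize(rho, p):
    """Hypothetical noise model: (1 - p) * rho + p * I / d."""
    d = len(rho)
    return [[(1 - p) * rho[i][j] + (p / d if i == j else 0.0)
             for j in range(d)] for i in range(d)]


plus = [[0.5, 0.5], [0.5, 0.5]]    # pure state |+> = (|0> + |1>) / sqrt(2)
mixed = [[0.5, 0.0], [0.0, 0.5]]   # maximally mixed qubit, I / 2

if __name__ == "__main__":
    print(purity(plus))                   # pure: 1.0
    print(purity(depolarize(plus, 0.5)))  # partly mixed: 0.625
    print(purity(mixed))                  # maximally mixed: 0.5
```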

Shuming Cheng, one of the senior authors, explained the analogy: “It’s like transforming a blurry and incomplete photograph of a quantum system into sharp focus. The raw measurements we collect are imperfect, but our algorithm helps reveal the true underlying image.”

This guiding principle makes a dramatic difference. Where traditional tomography requires an overwhelming number of measurements to achieve clarity, the purity-regularized method can produce accurate reconstructions from far fewer, making it practical for the complex systems being developed today.

Demonstrating 17-Qubit Entanglement

To test their approach, the researchers applied it to a superconducting quantum processor of their own design. They focused on reconstructing a highly entangled state known as the Greenberger-Horne-Zeilinger (GHZ) state, involving up to 17 qubits.
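
For n qubits the GHZ state is (|0...0⟩ + |1...1⟩)/√2, an equal superposition of every qubit being 0 and every qubit being 1. The minimal sketch below writes down its 2^n amplitudes in the computational basis; the function name `ghz_state` is illustrative. Even though only two amplitudes are nonzero, the vector for 17 qubits already has 131,072 entries, and a full density matrix would have the square of that.

```python
import math


def ghz_state(n_qubits):
    """Amplitudes of (|0...0> + |1...1>) / sqrt(2) in the computational basis."""
    if n_qubits < 2:
        raise ValueError("a GHZ state needs at least two qubits")
    dim = 2 ** n_qubits
    amps = [0.0] * dim
    amps[0] = 1.0 / math.sqrt(2.0)        # all qubits in |0>
    amps[dim - 1] = 1.0 / math.sqrt(2.0)  # all qubits in |1>
    return amps


if __name__ == "__main__":
    psi = ghz_state(17)
    print(len(psi))                         # 131072 basis states
    print(sum(1 for a in psi if a != 0.0))  # 2 nonzero amplitudes
```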

The results were remarkable. Their method achieved a state fidelity of 0.6817 for the 17-qubit GHZ state, where fidelity measures how closely the reconstructed state matches the ideal target and 1 indicates a perfect match. While that number may not sound high by everyday standards, in quantum physics it represents a significant achievement. For a system of that size, obtaining such fidelity is extraordinarily difficult, and the results stand among the largest and most accurate reconstructions of a quantum state performed on hardware to date.
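
Fidelity with a pure target state |ψ⟩ is F = ⟨ψ|ρ|ψ⟩, where ρ is the reconstructed density matrix. The sketch below computes it for a three-qubit GHZ target (17 qubits would need a 131,072 × 131,072 matrix) mixed with white noise of weight 1 - p. That noise model and the helper names are assumptions for illustration only; in this model the fidelity works out to F = p + (1 - p)/2^n, which the code reproduces.

```python
import math


def ghz_state(n_qubits):
    """(|0...0> + |1...1>) / sqrt(2) as a list of amplitudes."""
    dim = 2 ** n_qubits
    amps = [0.0] * dim
    amps[0] = amps[dim - 1] = 1.0 / math.sqrt(2.0)
    return amps


def noisy_ghz(n_qubits, p):
    """Hypothetical noise model: p |GHZ><GHZ| + (1 - p) I / d."""
    psi = ghz_state(n_qubits)
    d = len(psi)
    return [[p * psi[i] * psi[j] + ((1 - p) / d if i == j else 0.0)
             for j in range(d)] for i in range(d)]


def fidelity_with_pure(psi, rho):
    """F = <psi|rho|psi> for a pure target psi and density matrix rho."""
    d = len(psi)
    total = sum(psi[i].conjugate() * rho[i][j] * psi[j]
                for i in range(d) for j in range(d))
    return total.real


if __name__ == "__main__":
    n, p = 3, 0.6
    f = fidelity_with_pure(ghz_state(n), noisy_ghz(n, p))
    print(round(f, 6), p + (1 - p) / 2 ** n)
```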

Perhaps even more importantly, the team’s work conclusively certified the presence of genuine 17-qubit entanglement in their processor. Entanglement is the secret sauce of quantum computing, the property that allows qubits to act together in ways that classical bits never can. Confirming that such large-scale entanglement exists and can be reliably characterized is a major step forward for the field.
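
A widely used criterion links the fidelity figure to this certification: for an n-qubit GHZ target, no biseparable state (one that is separable across some split of the qubits into two groups, or a mixture of such states) can reach a fidelity above 1/2, so a fidelity strictly above 1/2 witnesses genuine n-qubit entanglement. The article does not say exactly how the authors performed their certification, so the check below only illustrates that standard threshold test. The optional `std_error` and `k_sigma` parameters, which require the fidelity to clear 1/2 by a chosen number of standard errors, are hypothetical additions; no error bar for the 0.6817 result is quoted here.

```python
GHZ_WITNESS_THRESHOLD = 0.5  # max GHZ fidelity of any biseparable state


def certifies_genuine_entanglement(fidelity, std_error=0.0, k_sigma=0.0):
    """True if the GHZ fidelity, minus k_sigma standard errors, exceeds 1/2.

    std_error and k_sigma are hypothetical knobs for demanding statistical
    confidence; with their defaults this is the bare threshold test F > 1/2.
    """
    if not 0.0 <= fidelity <= 1.0:
        raise ValueError("fidelity must lie in [0, 1]")
    return fidelity - k_sigma * std_error > GHZ_WITNESS_THRESHOLD


if __name__ == "__main__":
    print(certifies_genuine_entanglement(0.6817))  # True: reported 17-qubit value
    print(certifies_genuine_entanglement(0.49))    # False: below the threshold
```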

Why This Matters for the Future of Quantum Technology

This new approach does more than solve an academic puzzle. It addresses one of the most practical challenges facing the scaling of quantum computers: verifying that they are operating as intended. As quantum processors grow, traditional methods simply cannot keep up. The purity-regularized technique offers a way to benchmark and calibrate these systems without being crushed under the weight of impossible measurement demands.

For researchers and engineers, this tool could help identify errors, guide improvements in design, and deepen understanding of how noise affects quantum algorithms. For the broader vision of fault-tolerant quantum computing—the holy grail of the field—it provides a crucial stepping stone. Without the ability to see clearly inside these machines, progress would stall. With this new lens, the path forward looks brighter.

Beyond the Horizon: Next Steps

Looking ahead, the team plans to push their method further, applying it to even larger quantum systems and more complex states. They also hope to use the tool during the execution of quantum algorithms, not just in static tests, to better understand how noise influences performance in real time. Such insights could pave the way toward more effective error mitigation strategies, bringing truly reliable quantum computation closer to reality.

As Shuming Cheng put it, “Our approach provides an effective pathway toward the full characterization of noisy, intermediate-scale quantum systems. This is a crucial step in the journey toward fault-tolerant quantum computing.”

The Human Story Behind the Science

Behind the equations and algorithms, this story is also about human determination. It reflects the same spirit that has driven science for centuries: the relentless pursuit of clarity in the face of complexity. Just as Galileo sharpened his telescope to peer into the skies, today’s physicists sharpen their mathematical tools to peer into the invisible worlds of quantum states.

Quantum computing is not just about machines; it’s about humanity’s quest to push beyond limits, to harness the weird and wonderful laws of the quantum universe, and to build technologies that might one day transform everything from medicine to cryptography.

In this light, the purity-regularized estimator is more than a technical advancement. It is another step on a long journey—toward understanding, toward control, and toward the realization of a future where quantum computers deliver on their extraordinary promise.

More information: Chang-Kang Hu et al., Full Characterization of Genuine 17-qubit Entanglement on the Superconducting Processor, Physical Review Letters (2025). DOI: 10.1103/qy9y-7ywp.