In a world where technology is no longer just something we hold in our hands but something that surrounds us, breathes with us, and moves as we move, surveillance has taken on a new and unnerving dimension. The walls don’t just have ears—they may soon have eyes as well, even if those eyes are invisible.

This is not science fiction. It’s not a scene from a dystopian novel. It’s the very real future imagined and engineered by researchers at La Sapienza University in Rome. Their invention doesn’t need a lens to see you. It doesn’t need your face, your fingerprint, or even your voice. All it needs is your body’s silent conversation with the airwaves.

They call it WhoFi—a technological marvel, and perhaps a privacy nightmare.

A Ghost in the Network

Unlike facial recognition systems or fingerprint scanners that rely on explicit physical features, WhoFi functions in the realm of the unseen. It listens to how your body changes the invisible waves of a Wi-Fi signal as you move through a space. These changes, imperceptible to human senses, show up in a measurement called Channel State Information (CSI), which describes how a signal is altered on its way from transmitter to receiver.

Every time a Wi-Fi signal travels from a router to a device, it interacts with the physical environment—bouncing off walls, furniture, and yes, human bodies. Each person disrupts the signal in a slightly different way, much like how everyone has a unique gait, voice, or thermal pattern. These tiny perturbations in the signal become a kind of digital aura—an electromagnetic fingerprint.

This is what WhoFi learns to read.

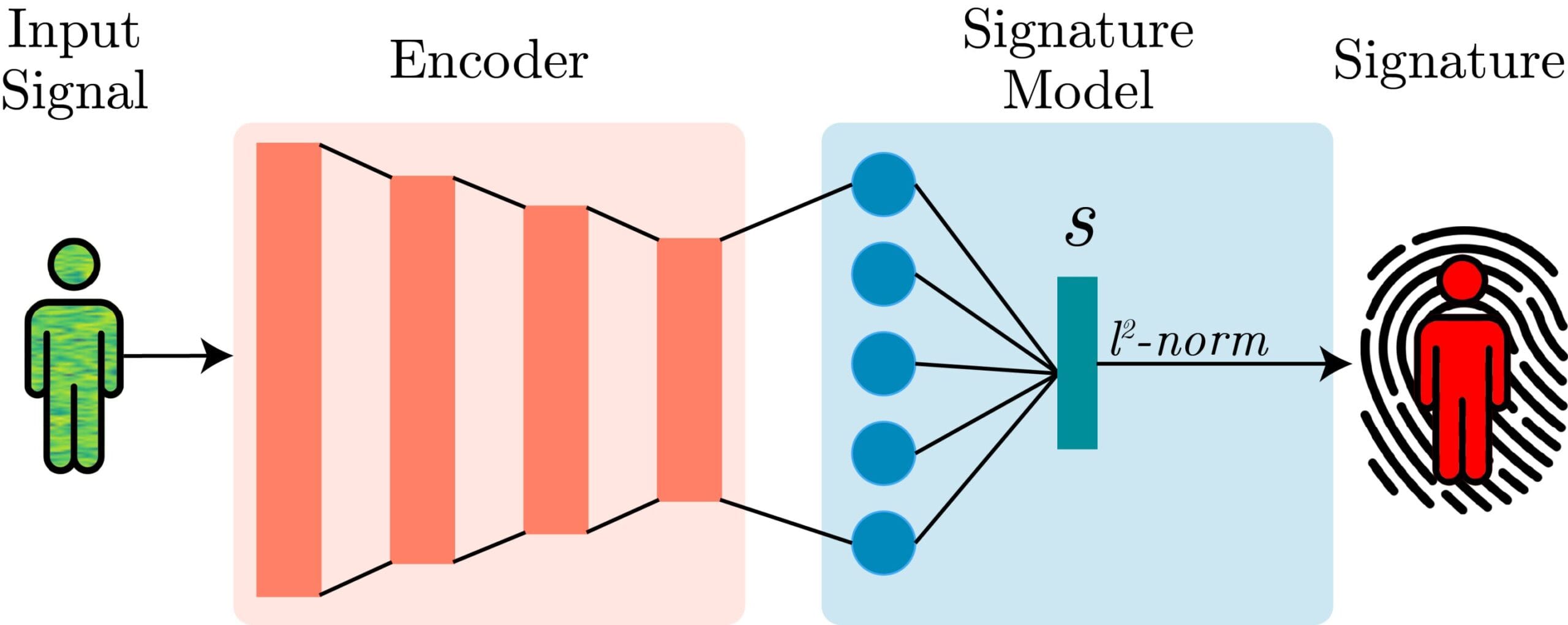

Researchers Danilo Avola, Daniele Pannone, Dario Montagnini, and Emad Emam didn’t invent CSI, but they did push its boundaries. CSI had already been studied in earlier work, including the 2020 project “EyeFi,” which explored how Wi-Fi could be used to detect presence and movement. But WhoFi takes the concept much further. By training a deep neural network on CSI data, they created a system that can not only recognize that someone is there but also determine who it is, just from how that person alters the signal.

The researchers report that WhoFi can distinguish individuals in different environments with 95.5 percent accuracy, without requiring visual input and without those individuals ever knowing they’re being tracked.

The Science Behind the Shadows

To understand just how profound this is, imagine walking into a room. You don’t speak. You don’t touch anything. There are no cameras. And yet, a computer knows it’s you.

This recognition stems from the nuances of how your body (its shape, its density, the way you move) absorbs and reflects radio waves. A Wi-Fi transmission is not a single tone: the channel is divided into many narrow frequency bands called subcarriers, and CSI records the amplitude and phase of each one as it arrives at the receiver. As these subcarriers travel, they are disturbed by everything in the environment.
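To make the idea of disturbed subcarriers concrete, here is a minimal sketch of a textbook multipath model, not the researchers’ code. It assumes each propagation path contributes a copy of the signal whose phase depends on the path length and the subcarrier frequency. The room geometry, gains, and the simplified subcarrier layout (52 evenly spaced subcarriers, 312.5 kHz apart, around 2.437 GHz) are illustrative assumptions.

```python
import cmath
import math

SPEED_OF_LIGHT = 3.0e8  # metres per second (rounded)


def subcarrier_frequencies(center_hz=2.437e9, spacing_hz=312.5e3, count=52):
    """Evenly spaced subcarrier frequencies centred on the channel frequency.

    Simplification: real Wi-Fi leaves some subcarriers unused; this ignores that.
    """
    first = center_hz - spacing_hz * (count - 1) / 2
    return [first + k * spacing_hz for k in range(count)]


def channel_response(paths, frequencies):
    """Sum the contribution of every propagation path at each subcarrier.

    Each path is (gain, length_m). A path of length d delays the signal by
    d / c, which rotates the phase at frequency f by -2*pi*f*d/c.
    """
    response = []
    for f in frequencies:
        h = sum(gain * cmath.exp(-2j * math.pi * f * length / SPEED_OF_LIGHT)
                for gain, length in paths)
        response.append(h)
    return response


freqs = subcarrier_frequencies()

# Hypothetical empty room: a direct path plus one wall reflection.
empty_room = [(1.0, 5.0), (0.4, 9.0)]
# Same room with one extra reflection off a person (made-up gain and length).
with_person = empty_room + [(0.25, 7.3)]

amp_empty = [abs(h) for h in channel_response(empty_room, freqs)]
amp_person = [abs(h) for h in channel_response(with_person, freqs)]

# Per-subcarrier amplitude change caused by the extra path.
delta = [p - e for p, e in zip(amp_person, amp_empty)]
print("largest amplitude change:", round(max(abs(d) for d in delta), 3))
```

The point of the toy model is that a single extra reflection changes each subcarrier by a different amount. A body of a different shape or position would produce a different pattern across the same subcarriers, and that pattern is the raw material a system like WhoFi works with.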

WhoFi records how these subcarriers fluctuate when a person is present. It then feeds those recordings to a deep neural network, which the paper describes as built around a Transformer-based encoder, to detect subtle, consistent patterns that differentiate one person’s interference from another’s.
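The matching step can be pictured as comparing a fresh recording against signatures stored earlier. The sketch below is not the authors’ neural network: it swaps the learned encoder for a hand-written feature (the mean amplitude per subcarrier, centred and scaled to unit length) and compares signatures with cosine similarity. The function names, labels, and numbers are all hypothetical.

```python
import math


def signature(csi_amplitudes):
    """Collapse a recording into one unit-length feature vector.

    csi_amplitudes is a list of packets, each a list of per-subcarrier
    amplitudes. This hand-written feature is a stand-in for a learned encoder.
    """
    n_packets = len(csi_amplitudes)
    n_sub = len(csi_amplitudes[0])
    means = [sum(packet[k] for packet in csi_amplitudes) / n_packets
             for k in range(n_sub)]
    centre = sum(means) / n_sub
    centred = [m - centre for m in means]
    norm = math.sqrt(sum(c * c for c in centred)) or 1.0
    return [c / norm for c in centred]


def cosine_similarity(a, b):
    """Dot product of two unit-length vectors, i.e. their cosine similarity."""
    return sum(x * y for x, y in zip(a, b))


def identify(query, gallery):
    """Return the gallery label whose signature is most similar to the query."""
    q = signature(query)
    return max(gallery, key=lambda label: cosine_similarity(q, gallery[label]))


# Toy recordings: 3 packets x 4 subcarriers each (made-up numbers).
gallery = {
    "person_A": signature([[1.0, 0.8, 0.6, 0.9],
                           [1.1, 0.7, 0.6, 1.0],
                           [0.9, 0.8, 0.5, 0.9]]),
    "person_B": signature([[0.5, 0.9, 1.2, 0.7],
                           [0.6, 1.0, 1.1, 0.7],
                           [0.5, 0.9, 1.3, 0.6]]),
}
new_recording = [[1.0, 0.75, 0.55, 0.95],
                 [1.05, 0.8, 0.6, 0.9],
                 [0.95, 0.7, 0.6, 1.0]]

print(identify(new_recording, gallery))  # prints person_A
```

A real system would replace `signature` with a trained model and would need far more data per person, but the shape of the problem is the same: turn a recording into a vector, then ask which stored vector it most resembles.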

It doesn’t matter if you’re wearing different clothes, walking at different speeds, or even if the room has been rearranged. Like the brushstrokes of an unseen painter, your presence leaves behind a signature in the wireless fabric of the air. WhoFi learns that signature, and once it does, it remembers.

This means it doesn’t need a line of sight, like a camera. It doesn’t need a microphone to hear you. It doesn’t even require your permission.

Surveillance That Sees Through Walls

The implications of such a system are both thrilling and chilling. On the one hand, WhoFi represents a quantum leap in the field of ubiquitous sensing—a technology that could make smart homes smarter, improve elder care by detecting falls or irregular movements, and revolutionize security systems.

Imagine hospitals that monitor patients’ movements in real-time without intrusive cameras. Think of airports where security can detect unusual loitering or evasive behavior even in blind spots. Picture search-and-rescue operations where authorities can “see” through debris using only Wi-Fi signals. This isn’t a dream. This is the near-future capability that systems like WhoFi offer.

Because Wi-Fi signals can pass through many materials, such systems could function in darkness, fog, smoke, or behind closed doors. They could operate discreetly—completely invisible, completely silent, always on.

But this is where the double-edged nature of surveillance technology cuts deep.

The Right to Be Forgotten by the Air

Surveillance has always been a balance of trade-offs: between security and liberty, visibility and privacy, control and freedom. WhoFi brings this tension into sharp, almost existential focus.

The most unsettling feature of this system is its passive nature. Unlike facial recognition, where a camera must be in place and looking in your direction, or fingerprint scanning, which requires your touch, WhoFi requires only that you move through a space where Wi-Fi is active. That’s it. There are no cues. No consent. No escape—unless you live in a Faraday cage.

The researchers themselves acknowledge this challenge. They insist that WhoFi doesn’t capture identity in the traditional sense—no names, no faces, no government IDs. What it captures is a biometric signature, unique to you but detached from your persona. It’s a kind of anonymized surveillance, if such a thing can even exist.

But critics will rightly point out: once a system can track an individual persistently and with such accuracy, anonymity is an illusion. Movement patterns over time can reveal routines, relationships, even religious practices or health conditions. As data accumulates, re-identification becomes inevitable. The line between a “non-visual biometric” and a full personal profile is razor-thin.
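How little it takes to turn a nameless signature into a profile can be shown with a few lines of code. The sketch below assumes a hypothetical log of sightings, each holding only a pseudonymous signature id, a zone, and an hour of the day. Nothing in it comes from the WhoFi paper; the data, zone names, and time windows are invented for illustration.

```python
from collections import Counter

# Hypothetical log: (pseudonymous signature id, zone, hour of day 0-23).
sightings = [
    ("sig_17", "flat_3B", 23), ("sig_17", "flat_3B", 2), ("sig_17", "flat_3B", 6),
    ("sig_17", "clinic_waiting_room", 10), ("sig_17", "clinic_waiting_room", 10),
    ("sig_17", "office_floor_4", 14), ("sig_17", "office_floor_4", 15),
    ("sig_17", "office_floor_4", 16),
    ("sig_42", "flat_1A", 1), ("sig_42", "office_floor_4", 11),
]

NIGHT = set(range(0, 7)) | {22, 23}   # assumed sleeping hours
DAY = set(range(9, 18))               # assumed working hours


def most_common_zone(log, signature_id, hours):
    """Zone where a signature is seen most often during the given hours."""
    zones = Counter(zone for sig, zone, hour in log
                    if sig == signature_id and hour in hours)
    return zones.most_common(1)[0][0] if zones else None


likely_home = most_common_zone(sightings, "sig_17", NIGHT)
likely_workplace = most_common_zone(sightings, "sig_17", DAY)
clinic_visits = sum(1 for sig, zone, _ in sightings
                    if sig == "sig_17" and zone == "clinic_waiting_room")

print(likely_home, likely_workplace, clinic_visits)  # prints flat_3B office_floor_4 2
```

No name appears anywhere in the log, yet a home address, a workplace, and repeated clinic visits together narrow “sig_17” down to very few possible people.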

And if this technology were ever commercialized or adopted by governments, who would be watching the watchers?

Innovation in the Shadows

For now, WhoFi remains an academic prototype. There are no known plans for deployment in public or private sectors. The system has been tested under controlled conditions, and the ethical guidelines surrounding its use are still being debated within scholarly and policy circles.

But the trajectory of surveillance history suggests that once a capability exists, it will eventually find application. In a post-9/11 world, and now in the age of AI-powered governance, governments and corporations have never been more incentivized to monitor, analyze, and predict human behavior.

WhoFi may have been built for research, but its potential uses are as vast as they are unsettling.

What Happens When Silence Speaks?

There is something deeply philosophical about WhoFi—something almost poetic. That our bodies, without words or images, leave behind a trace in the ether. That the very air around us can learn who we are. It speaks to the intimacy of technology, and the fragility of privacy in the digital age.

What does it mean to live in a world where your presence is never unrecorded, where even the act of walking across a room becomes a data point?

Are we inching toward a society where obscurity—once the natural state of most people—is no longer possible? Where every motion, every hesitation, every pause is not just noticed, but analyzed?

And if so, what kind of freedom remains?

The Need for a New Social Contract

The emergence of systems like WhoFi demands more than technical scrutiny. It demands ethical and legal frameworks that can keep up with innovation. Our existing privacy laws, most of which were written with cameras, microphones, and databases in mind, are poorly equipped to govern the complexities of biometric data collected through ambient signals.

We must ask hard questions. Who owns the data generated by our bodies? Who has the right to interpret it? Can consent ever be truly informed when surveillance is silent and seamless?

These are not questions for scientists alone. They are for lawmakers, philosophers, designers, and citizens. We are all participants in this future, whether we know it or not.

Conclusion: Between Light and Shadow

WhoFi stands at the edge of technological possibility and moral ambiguity. It is a triumph of engineering—a system that turns invisible signals into identifiers with astonishing accuracy. But it is also a mirror, reflecting our deepest anxieties about power, privacy, and personhood.

As surveillance grows ever more subtle, the need for transparency grows ever more urgent. Because the real danger is not in what machines can do. It is in what we fail to do while they evolve.

The air may be watching. But who will watch the air?

Reference: Danilo Avola et al., WhoFi: Deep Person Re-Identification via Wi-Fi Channel Signal Encoding, arXiv (2025). DOI: 10.48550/arXiv.2507.12869