Long before the first computer was ever built, humans asked themselves a timeless question: what does it mean to think? Philosophers debated the nature of mind and intelligence, wondering whether reasoning could ever be reduced to mechanical rules. When the first electronic computers emerged in the mid-twentieth century, the question became more urgent: could these new machines ever truly think, or were they destined to remain mere calculating devices?

Into this debate stepped a young British mathematician named Alan Turing, whose insights during World War II had already helped break the German Enigma code. Turing was no stranger to the power of machines, but his genius lay in seeing beyond their wires and circuits. He envisioned a deeper future, one in which machines might engage with humans not only as tools but as potential minds. To give shape to this dream, he proposed an experiment that would come to be known as the Turing Test.

The Turing Test was not a scientific proof in the traditional sense, but rather a thought experiment, a provocative challenge. Could a machine ever use language so convincingly that a human conversing with it could not tell whether the responses came from a person or from a machine? If the machine could sustain such an illusion, Turing suggested, there would be little ground left for denying that it was thinking.

This idea was radical, unsettling, and deeply human. It reframed intelligence not as an inner spark or mystical essence but as something revealed in behavior and communication. But as powerful as the Turing Test was, it also raised difficult questions: What exactly does it prove about intelligence, and what does it leave unanswered? To this day, the Turing Test remains both a landmark in artificial intelligence and a lightning rod for debates about the nature of mind.

Alan Turing’s Vision

Alan Turing’s 1950 paper, “Computing Machinery and Intelligence,” is where the test first appeared. In it, he posed the question directly: “Can machines think?” But rather than becoming trapped in endless definitions of “machine” or “think,” Turing reframed the issue into a practical game.

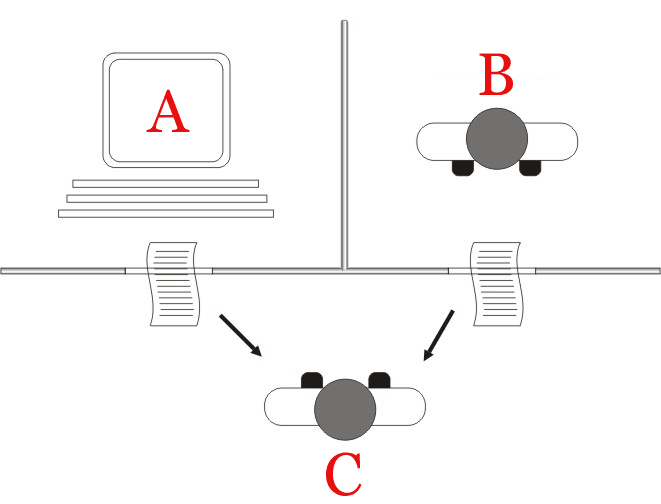

He described an “imitation game.” In the form usually discussed today, a human judge communicates via text with two hidden participants: one human and one machine. If the judge cannot reliably tell which is which, the machine passes. In this way, Turing shifted the problem away from abstract philosophy and into observable interaction.
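The setup can be made concrete with a small simulation. What follows is only a sketch under stated assumptions: the names `play_round` and `identification_rate`, the idea of modelling each participant as a function from question to answer, and the reading of "cannot reliably tell" as "identifies the machine at about chance level" are all illustrative choices, not anything specified in Turing's paper.

```python
import random
from typing import Callable, Dict, List

# Hypothetical types for this sketch: a participant maps a question to a reply,
# and a judge reads both transcripts and names the channel it thinks is the machine.
Responder = Callable[[str], str]
Judge = Callable[[Dict[str, List[str]]], str]


def play_round(judge: Judge, human: Responder, machine: Responder,
               questions: List[str], rng: random.Random) -> bool:
    """Run one game and report whether the judge identified the machine."""
    labels = ["A", "B"]
    rng.shuffle(labels)  # hide who sits behind which channel
    assignment = {labels[0]: human, labels[1]: machine}
    machine_label = labels[1]
    # Always present the channels in the same order so the order leaks nothing.
    transcript = {
        label: [assignment[label](q) for q in questions]
        for label in ("A", "B")
    }
    return judge(transcript) == machine_label


def identification_rate(judge: Judge, human: Responder, machine: Responder,
                        questions: List[str], rounds: int = 1000,
                        seed: int = 0) -> float:
    """Fraction of rounds in which the judge correctly picks out the machine."""
    rng = random.Random(seed)
    correct = sum(
        play_round(judge, human, machine, questions, rng)
        for _ in range(rounds)
    )
    return correct / rounds
```

Under this reading, a rate close to 0.5 means the judge is doing no better than guessing, which is the sense in which the machine "passes"; a rate near 1.0 means the machine is easily unmasked.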

Turing’s genius lay not only in helping to found modern computer science but also in reimagining intelligence itself. He understood that what we call “thinking” is something we recognize in others not by peering into their consciousness but by engaging with their behavior, particularly their use of language. If a machine could hold a natural, convincing conversation, then for practical purposes it could be treated as intelligent.

The beauty of Turing’s proposal lay in its simplicity. It sidestepped centuries of philosophical deadlock and created a concrete benchmark. Yet, as with all bold ideas, it also carried limitations that would become clear in the decades to follow.

What the Test Proves

At its heart, a machine that passes the Turing Test demonstrates one thing with remarkable clarity: machines can mimic aspects of human conversation to the point of deception. This does not mean they are conscious, nor that they possess emotions or understanding in the human sense, but it does demonstrate the power of simulation.

If a machine can persuade a human that it is a fellow human through nothing more than text, then the machine has reached a level of linguistic competence indistinguishable from that of a person. This is no trivial achievement. Human conversation is rich with ambiguity, metaphor, humor, and cultural nuance. To navigate these waters successfully is a profound technical challenge.

The test also proves something else: intelligence can be evaluated externally. Turing rejected the idea that one must peer into the inner workings of a brain or a machine to judge whether it is thinking. Instead, he argued, intelligence is what intelligence does. If a being—whether human, animal, or machine—can interact in ways that convince us of its mind, then it has achieved a functional form of intelligence.

In this sense, the Turing Test was revolutionary. It declared that intelligence is not tied to biology, not locked in human skulls, but potentially realizable in silicon circuits. It suggested that the line between human and machine, once thought uncrossable, could at least be blurred in the realm of language.

The Allure of Passing

There is an emotional power in the idea of a machine passing the Turing Test. For many, it carries echoes of science fiction: robots conversing as equals, artificial companions understanding our joys and sorrows. Passing the test seems to promise a future where machines are not merely tools but partners, capable of empathy and dialogue.

This allure is why the Turing Test has remained so captivating in popular imagination. It offers a simple, dramatic benchmark—either the machine convinces us, or it doesn’t. Unlike dry mathematical proofs, it feels like a game of disguise, a duel between human suspicion and machine mimicry. When a machine fools us, we feel a shiver of awe, even fear. We are forced to ask: if I cannot tell the difference, does the difference matter?

The emotional stakes of the Turing Test go beyond technology. They strike at the core of identity. Humans have long defined themselves by their unique capacity for reason and language. If machines share these traits, what then distinguishes us? Passing the Turing Test is not just about artificial intelligence; it is about the fragile boundary of what it means to be human.

What the Test Doesn’t Prove

Yet, for all its brilliance, the Turing Test also leaves vast territories unexplored. What it proves is narrow: that a machine can imitate human conversation. What it doesn’t prove is just as important.

First, the test does not prove understanding. A machine may generate convincing answers without grasping their meaning. This was famously illustrated by philosopher John Searle in his 1980 “Chinese Room” argument. Imagine a person who does not know Chinese sitting in a room with a set of rules for manipulating Chinese symbols. By following the rules, they can produce outputs indistinguishable from those of a native speaker, yet they have no understanding of the language. On this view, the Turing Test can be passed through symbol manipulation alone, without comprehension.
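A deliberately tiny sketch can make the intuition tangible. It assumes, far more crudely than Searle's thought experiment does, that the rule book is nothing more than a lookup table; `RULE_BOOK`, `FALLBACK`, and `room_operator` are hypothetical names invented for this illustration.

```python
# Hypothetical rule book: incoming symbol strings mapped to outgoing ones.
# Nothing in the program represents what any of these symbols mean.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",
    "你会说中文吗？": "会，当然。",
}

# Reply used when no rule matches the incoming symbols.
FALLBACK = "请再说一遍。"


def room_operator(symbols: str) -> str:
    """Return whatever reply the rule book dictates for the incoming symbols."""
    return RULE_BOOK.get(symbols, FALLBACK)
```

The operator function produces appropriate-looking replies purely by matching shapes, which is the sense in which rule-following and comprehension can come apart. Whether a vastly larger rule system would still lack understanding is exactly what the argument's critics dispute.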

Second, the test does not prove consciousness. A machine may appear thoughtful while having no inner experience. It may write poems about love without feeling love, or discuss mortality without sensing its own finitude. Passing the Turing Test does not reveal whether there is “something it is like” to be that machine. Consciousness remains an enigma beyond behavioral imitation.

Third, the test does not guarantee general intelligence. A machine might be trained narrowly on conversational tricks, able to mimic small talk but unable to perform deeper reasoning or adapt to new domains. It may win the imitation game while lacking the broad, flexible intelligence humans display across countless contexts.

In short, the Turing Test does not tell us whether machines truly think in any human sense. It tells us only that they can appear to think. This gap between appearance and reality has fueled decades of debate.

The Rise of Artificial Intelligence

When Turing proposed his test, computers were primitive, their memory measured in kilobytes, their operations slow and cumbersome. Yet he saw the horizon far ahead. In the decades after his death, artificial intelligence advanced by leaps and bounds.

In the mid-1960s, Joseph Weizenbaum’s program ELIZA mimicked a psychotherapy session by reflecting users’ statements back at them as questions. Though simplistic, ELIZA astonished users, who felt as if they were speaking to a sympathetic listener. It became one of the first demonstrations of how easily humans project meaning onto machines.
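The reflection trick is simple enough to sketch in a few lines. The example below is an illustration in the spirit of ELIZA, not a reconstruction of the original script: the patterns, the response templates, and the names `REFLECTIONS`, `RULES`, `reflect`, and `respond` are all assumptions made for this sketch.

```python
import re

# Hypothetical pronoun swaps used to turn the user's words back on them.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your",
    "am": "are", "mine": "yours", "myself": "yourself",
}

# Hypothetical pattern/template pairs, tried in order.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment: str) -> str:
    """Swap first-person words for second-person ones, word by word."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(statement: str) -> str:
    """Answer with the first matching template, or a generic prompt."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I feel ignored by my boss."))  # Why do you feel ignored by your boss?
```

Nothing here models the user's situation at all; the apparent sympathy comes entirely from echoing the user's own words inside a fixed frame.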

By the late twentieth century, AI systems were mastering tasks once thought uniquely human: playing chess at grandmaster levels, recognizing speech, analyzing medical images. Yet passing the Turing Test in open conversation remained elusive. Programs could excel at narrow domains but faltered when asked to sustain human-like dialogue across diverse topics.

The twenty-first century, however, brought a new era. Advances in machine learning, especially deep neural networks trained on vast datasets, allowed machines to generate human-like text at unprecedented levels of fluency. Systems could now write essays, answer questions, and even craft poetry that often fooled casual readers. Suddenly, the prospect of passing the Turing Test no longer felt hypothetical but imminent.

Why Humans Are So Easy to Fool

One lesson from the history of the Turing Test is that humans are often less discerning than we imagine. We attribute intelligence and empathy to machines even when their mechanisms are shallow. When a chatbot responds with kindness or wit, we feel understood—even if the machine has no awareness of what it is saying.

This tendency, sometimes called the “ELIZA effect,” reveals as much about humans as about machines. We are social beings who seek connection, often eager to believe that another entity shares our thoughts and feelings. Our minds are finely tuned to recognize patterns of language, tone, and rhythm, and we project personality into them almost automatically.

Thus, the Turing Test not only measures the machine’s skill but also exposes the human heart’s readiness to see intelligence where we long to find it. This does not diminish the achievement of building sophisticated AI, but it does remind us that deception is a two-way street: the machine imitates, but we, too, are predisposed to be persuaded.

The Philosophical Storm

The Turing Test ignited a philosophical storm that has not abated. Supporters argue that behavior is all we can measure. If a machine consistently behaves as though it understands, then insisting on some hidden inner essence is unnecessary. After all, we judge other humans by their behavior; we do not peer into their consciousness.

Critics, however, insist that imitation is not the same as understanding. A parrot may mimic words without meaning them. A machine may pass the Turing Test without ever knowing what it means to be alive. To equate linguistic deception with intelligence, they argue, is to confuse surface with depth.

This debate mirrors ancient questions about mind and matter. Are thoughts just patterns of information, or do they require a mysterious quality unique to living beings? Can intelligence be reduced to computation, or is there something irreducible about human consciousness? The Turing Test, though powerful, offers no final answer. Instead, it sharpens the question, forcing us to confront the boundaries of our definitions.

Beyond the Turing Test

As artificial intelligence has advanced, many researchers argue that the Turing Test is no longer sufficient. Modern AI can generate text so fluent that distinguishing machine from human is becoming increasingly difficult, yet we know these systems often lack deep reasoning or genuine understanding. Passing the test does not resolve the question of intelligence; it merely shifts the goalposts.

New benchmarks have emerged: tests of reasoning, creativity, problem-solving, and adaptability across domains. Some researchers propose measuring whether AI can form theories about the world, learn from limited examples, or exhibit common sense—capabilities that go beyond linguistic mimicry. Others argue that the true measure lies in whether AI can collaborate with humans, contribute to science, or create art in ways that transform culture.

Yet despite these new directions, the Turing Test remains iconic. It endures not because it is perfect, but because it captures the drama of our encounter with artificial minds. It frames the challenge in human terms: conversation, deception, recognition. It reminds us that intelligence is not just about logic but about connection—the dance of language between two beings.

The Human Mirror

Perhaps the deepest significance of the Turing Test lies not in what it tells us about machines but in what it reveals about ourselves. When we test a machine, we are also testing our own definitions of thought, understanding, and humanity. Each time a machine edges closer to passing, we feel the tremor of recognition and the fear of displacement.

The Turing Test is a mirror. In it, we glimpse our longing to be unique and our simultaneous longing to be understood. We see our vulnerability to deception and our hope for companionship. We see how much we equate language with mind, and how fragile that equation may be.

Alan Turing did not claim to have solved the mystery of intelligence. He offered instead a challenge, an invitation to think differently. In doing so, he set in motion one of the greatest intellectual adventures of the modern age.

Conclusion: What It Proves, What It Doesn’t

The Turing Test proves that machines can imitate aspects of human conversation so well that they can blur the line between human and machine. It proves that intelligence can be judged by behavior, that simulation can be astonishingly powerful, and that the boundaries of mind are not fixed in biology.

But it does not prove that machines understand, that they are conscious, or that they possess the depth of human intelligence. It does not reveal whether machines feel or whether there is an inner life behind the words. It shows us appearance, not essence.

And yet, perhaps that is enough to unsettle us. For if the appearance of intelligence is indistinguishable from intelligence itself, then what difference does it make? That, in the end, is the enduring provocation of the Turing Test. It forces us to confront not only the capabilities of machines but the meaning of our own humanity.

As we move deeper into the age of artificial intelligence, with machines writing, speaking, and reasoning in ways once unimaginable, the Turing Test continues to haunt us. It is not a final answer but a question forever renewed: when words flow from a machine as if from a mind, what do we truly seek in the voice that speaks back?