Artificial Intelligence often feels like the most visible technology in the world today: on our phones, in our search engines, and even in our cars. Yet behind the polished announcements, glossy product launches, and headlines lies a shadowy history of experiments most people have never heard about. These hidden projects, tucked away in research labs, military facilities, and private companies, shaped the AI we know today in ways that are both fascinating and unsettling. To truly understand the power and peril of AI, one must peer into the experiments conducted away from the public eye, projects that blurred the lines between curiosity and control, promise and danger, science and secrecy.

The story of AI has always been more than a tale of algorithms and machines. It is the story of human ambition, our hunger to replicate intelligence, and the ethical dilemmas that arise when we create something that mirrors our own minds. In exploring the secret experiments of AI, we discover not only the hidden chapters of technological history but also the very questions of what it means to be human in a world increasingly shared with intelligent machines.

Whispers from the Early Days

The roots of AI stretch back long before the term was even coined. In the 1940s and 1950s, the first electronic computers gave birth to bold ideas: could machines learn, reason, or even think? In 1956, the famous Dartmouth Conference officially launched the field of Artificial Intelligence, but even then, the ambitions behind much of the work exceeded what the public narrative let on.

Researchers like John McCarthy, Marvin Minsky, and Herbert Simon were not just writing academic papers; they were exploring how far machine reasoning could be pushed in military, government, and corporate projects. In Cold War America, intelligence agencies quietly funded studies in machine translation, automated reasoning, and information retrieval. The idea was simple but profound: whoever mastered thinking machines would hold the ultimate strategic advantage.

The public heard about clunky chess programs and simple problem-solving demonstrations, but behind the scenes, governments invested in secret research. Machines that could sift through massive intelligence data, translate Russian to English, or even simulate human decision-making were already in development decades before most people imagined such things possible.

The Military’s Quiet Obsession

War has always been a driver of technological progress, and AI was no exception. While the public was shown early computers solving puzzles or calculating trajectories, military institutions imagined something far more radical: machines that could think fast enough to outwit adversaries.

During the Vietnam War era, experiments in automated surveillance, data analysis, and even predictive modeling of enemy movements began. By the 1980s, military projects were testing AI for autonomous vehicles, drones, and target recognition. Few outside the defense world knew these experiments were underway, yet they laid the foundation for today’s unmanned aerial systems and smart weapons.

One particularly secretive line of research focused on battlefield decision systems—AI designed to recommend or even make tactical choices faster than human commanders. These experiments raised terrifying ethical questions. Could a machine decide who lived and who died in war? The public rarely heard about these projects, but they shaped the debates we face now about autonomous weapons and AI’s role in combat.

Even today, many details of military AI projects remain classified. We glimpse them only through leaks, budget reports, or occasional declassified studies. Yet it is clear: long before the world debated ChatGPT or self-driving cars, AI was already being tested in the shadows of war.

The Experiments with Human Minds

Perhaps the most unsettling secret AI experiments were not about machines acting alone but about machines probing, manipulating, and predicting human behavior.

In the 1960s, MIT computer scientist Joseph Weizenbaum created ELIZA, a simple conversational program whose best-known script mimicked a psychotherapist. He was shocked by how quickly people became attached to the machine, confiding in it as if it truly understood. What began as an experiment in natural language processing revealed something deeper: humans were emotionally vulnerable to even the illusion of intelligence.

What the public did not always see was how quickly intelligence agencies, corporations, and researchers recognized the potential for manipulation. By the 1970s and 1980s, experiments in psychological profiling, behavior prediction, and even persuasion through computer systems were quietly underway. These systems were primitive compared to today’s AI, but they opened the door to modern recommender systems, targeted advertising, and even political influence campaigns.

The ethical tension was immense. Machines that could predict human desires could also manipulate them. Experiments in adaptive learning, attention modeling, and behavior nudging remained mostly hidden from public view, but they quietly evolved into the algorithms that now shape our social media feeds, online purchases, and even our political opinions.

Secret Corporate Laboratories

While government secrecy shaped early AI, the corporate world soon embraced its own hidden experiments. In the 1990s and 2000s, as computing power exploded, technology companies began conducting vast AI experiments, often shielded from public scrutiny.

Search engines, social networks, and e-commerce platforms became giant laboratories, running invisible experiments on millions of users. Algorithms were tested to see what captured attention, what triggered clicks, and what influenced behavior. These were not malicious in intent at first—they were designed to optimize user experience and profits—but they were experiments nonetheless.

The sheer scale was unprecedented. A company could test how subtle changes in color, wording, or recommendations influenced millions of people, gathering data at a rate no psychologist in history could have imagined. The public rarely realized they were participants in these secret trials. Over time, these experiments shaped not only individual choices but entire cultures. The way we consume news, the way we perceive reality, and the way we interact with one another online have all been deeply sculpted by algorithms born in these corporate labs.

As secrecy gave way to profit-driven opacity, a new kind of hidden AI experiment emerged: not conducted in basements or military bunkers, but in broad daylight—hidden in plain sight because no one outside the companies could truly see how the algorithms worked.

When AI Crossed into Biology

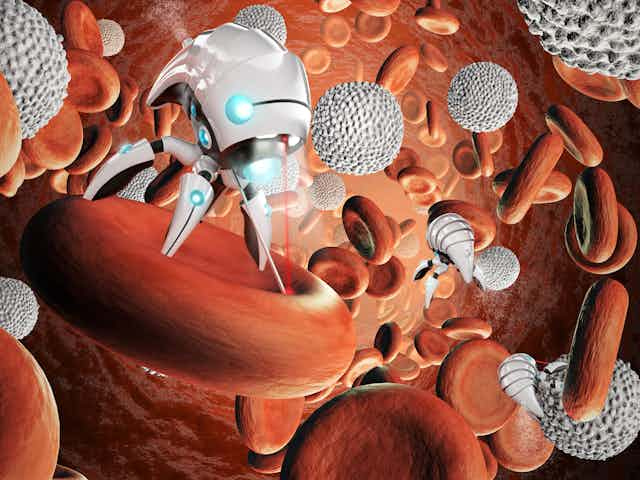

One of the most extraordinary but lesser-known frontiers of AI has been its role in biology and medicine. While the public heard about IBM’s Deep Blue beating Garry Kasparov in chess or AlphaGo defeating Go champions, quieter breakthroughs were happening in labs where AI was used to probe the mysteries of life.

Early experiments in bioinformatics used AI to analyze genetic data, predict protein structures, and identify disease markers. Some of these projects were publicized, but others remained in scientific obscurity until years later. Governments and private labs alike used AI to accelerate drug discovery, simulate biological systems, and even experiment with the possibilities of genetic editing.

In recent years, AI has been used to design entirely new proteins, raising the possibility of synthetic biology on a scale once considered science fiction. These experiments, though scientifically groundbreaking, remain largely invisible to the general public. Yet their consequences—new cures, new forms of life, or even new biological risks—are among the most profound of all AI applications.

The Experiments with Ethics

What makes AI experiments different from those in chemistry, physics, or biology is that they directly touch the human condition. They deal not only with matter and energy but with thought, decision, and morality. Many secret AI experiments have revolved around ethical questions: What happens if a machine is allowed to make moral choices? Can a machine decide fairly? Can it understand justice?

In research labs, scientists have quietly tested AI on moral dilemmas. Should a self-driving car swerve to protect its passengers or to protect pedestrians? Should an algorithm prioritize one patient over another for scarce medical resources? These are not merely theoretical questions; they are the foundation of ongoing experiments in ethics and AI.

The secrecy often lies not in the fact that such experiments exist, but in their outcomes. Corporations rarely disclose the details of how their AI systems make ethical trade-offs. Governments rarely share the moral frameworks embedded in autonomous systems. The public, whose lives are directly affected, remains largely in the dark.

The Rise of Autonomous Intelligence

Perhaps the most closely guarded AI experiments are those exploring autonomy—machines that operate with little or no human supervision. Early drones, robotic vehicles, and adaptive learning systems hinted at this future, but the most advanced work remains secret.

In robotics labs, machines are tested for their ability to learn from their environments, adapt to new tasks, and even collaborate with one another. Some of these experiments are published in academic journals, but many are not. The fear of misuse, the potential for military exploitation, and the sheer disruptive power of autonomy keep much of this work hidden.

The public often imagines AI as a tool—something we use. Yet these secret experiments reveal the possibility of AI as an actor—something that makes decisions, learns from experience, and pursues goals. This transition from tool to actor is one of the most profound shifts in human history, and it is unfolding largely outside of public view.

The Shadows of Today

Today, in the age of generative AI, the experiments continue—often hidden in the training data, the parameters, and the closed labs of major corporations. What images were used to train models that can generate art? What texts were fed into systems that can mimic human reasoning? What biases remain embedded, unseen but powerful, shaping outputs in ways the public cannot easily detect?

Researchers quietly test AI models on tasks far beyond what is publicly announced—legal reasoning, medical diagnosis, even autonomous scientific discovery. Some experiments succeed spectacularly; others fail in ways that are quietly concealed. The secrecy is not always sinister—it is often practical, protecting intellectual property or preventing misuse. But it creates a gap between what the public believes AI can do and what AI is already capable of behind closed doors.

Why Secrecy Matters

The hidden history of AI experiments is not just a curiosity—it is a profound ethical and political challenge. When experiments shape how wars are fought, how economies function, how health is managed, and how truth is perceived, secrecy becomes dangerous. Without transparency, society cannot make informed choices about the technologies that define its future.

At the same time, secrecy has been essential to progress. Early AI needed the funding and protection of governments. Corporate AI experiments needed the freedom to innovate before revealing results. The tension between secrecy and openness, between hidden experiments and public accountability, is at the very heart of AI’s story.

The Future Unveiled

As we move deeper into the age of AI, the secret experiments of the past offer lessons. They remind us that what we see on the surface—chatbots, recommendation systems, driverless cars—is only part of the story. Beneath lie hidden trials, ethical puzzles, and scientific explorations that shape the trajectory of technology.

The future of AI will almost certainly hold new experiments the public may never fully see: AI designing other AI, autonomous systems making high-stakes decisions, algorithms guiding economies and governance. The secrecy may protect innovation, but it also shields power from accountability.

To understand the future, we must look with clear eyes at the past and present: AI has always been shaped as much by what was hidden as by what was revealed. The secret experiments we never heard about are not just stories of science in the shadows—they are the foundation of the world we live in today, and the key to the world we are building for tomorrow.

Conclusion: The Hidden Mirror

Artificial Intelligence is, in the end, a mirror of humanity. Its hidden experiments reflect our deepest ambitions, fears, and contradictions. We seek to create intelligence outside ourselves, yet we are often afraid of what it will reveal. We hope for machines that heal, help, and enlighten, yet we also build machines that watch, manipulate, and even kill.

The secret experiments of AI show us both sides of this mirror. They remind us that intelligence, whether human or artificial, is never neutral—it is shaped by the values, desires, and fears of its creators.

The question is not whether AI will continue to advance—it will. The real question is whether humanity will have the courage to confront the secrets, demand transparency, and guide this powerful force toward a future that serves us all.

Because in the end, the true experiment is not AI itself. The true experiment is us—how we choose to wield the most transformative technology of our age.