Artificial intelligence is everywhere—translating languages, diagnosing diseases, painting surreal masterpieces, and holding conversations. Behind these abilities lie deep neural networks, layered webs of artificial neurons trained to recognize patterns and make decisions. But despite their astonishing power, the inner workings of these networks remain partly a mystery. Why do some networks learn efficiently while others seem to collapse under their own complexity? What determines whether a neural network becomes a blazing fire of intelligence—or fizzles out before it learns anything useful?

In a breakthrough study published in Physical Review Research, a team of scientists from the University of Tokyo, in collaboration with engineers at Aisin Corporation, has uncovered a surprising key to these questions—physics. Specifically, the kind of physics that explains how systems behave near tipping points, like a fire burning out or water freezing into ice. Their findings reveal that universal scaling laws—mathematical rules that describe how certain systems change with size—also apply to deep neural networks, which, it turns out, behave like physical systems undergoing absorbing phase transitions.

This discovery isn’t just an academic curiosity. It gives researchers a new way to predict the learning behavior of AI systems and opens a potential pathway toward a unified theory of artificial intelligence. In the words of lead author Keiichi Tamai, it might even bring us closer to understanding “the physics of intelligence itself.”

Phase Transitions in the Heart of Deep Learning

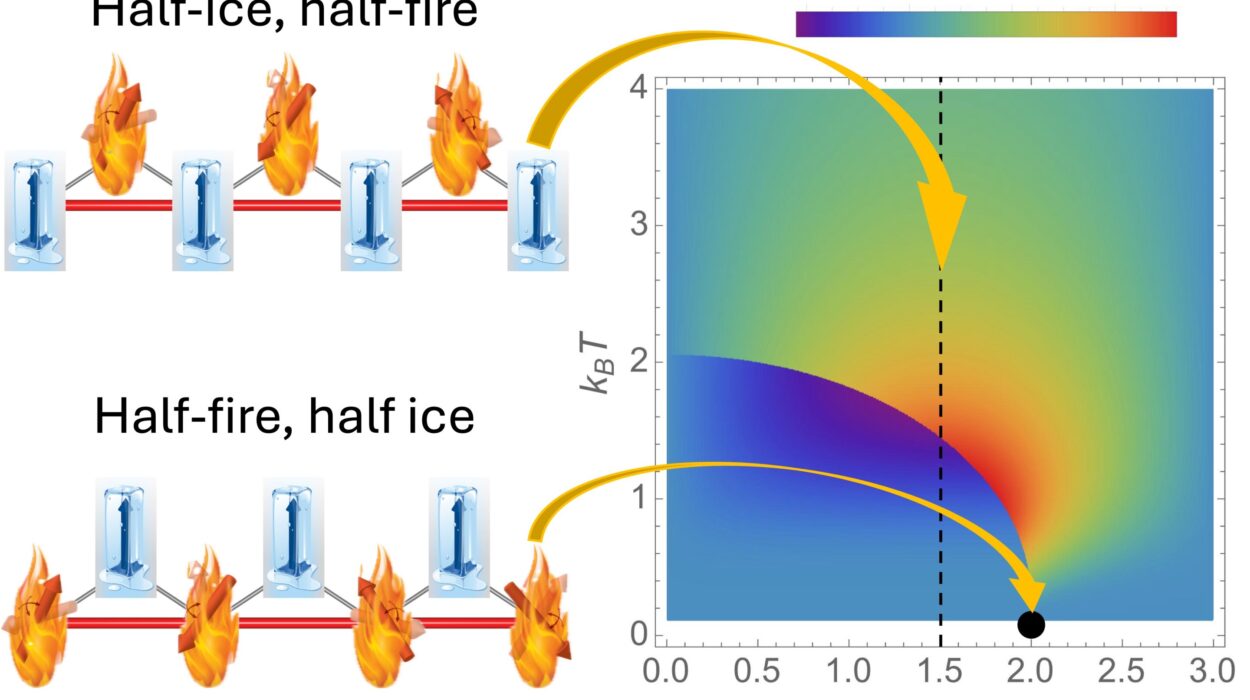

The concept of an absorbing phase transition may seem far removed from AI. It comes from statistical physics, the branch of physics that explains the collective behavior of systems made of many interacting parts. An absorbing phase transition separates an active phase, in which activity sustains itself, from an absorbing state that the system can enter but never leave unless something external intervenes.

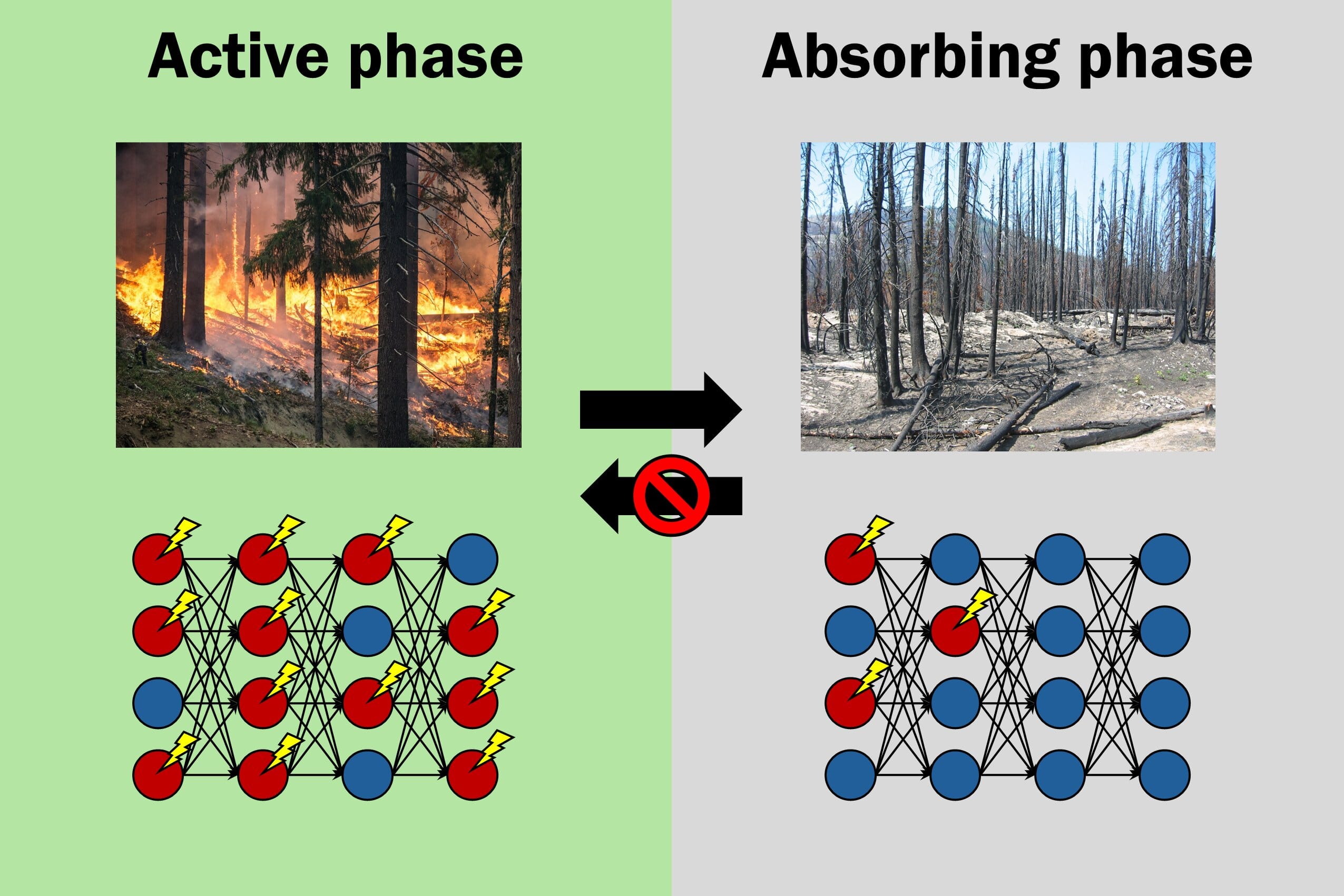

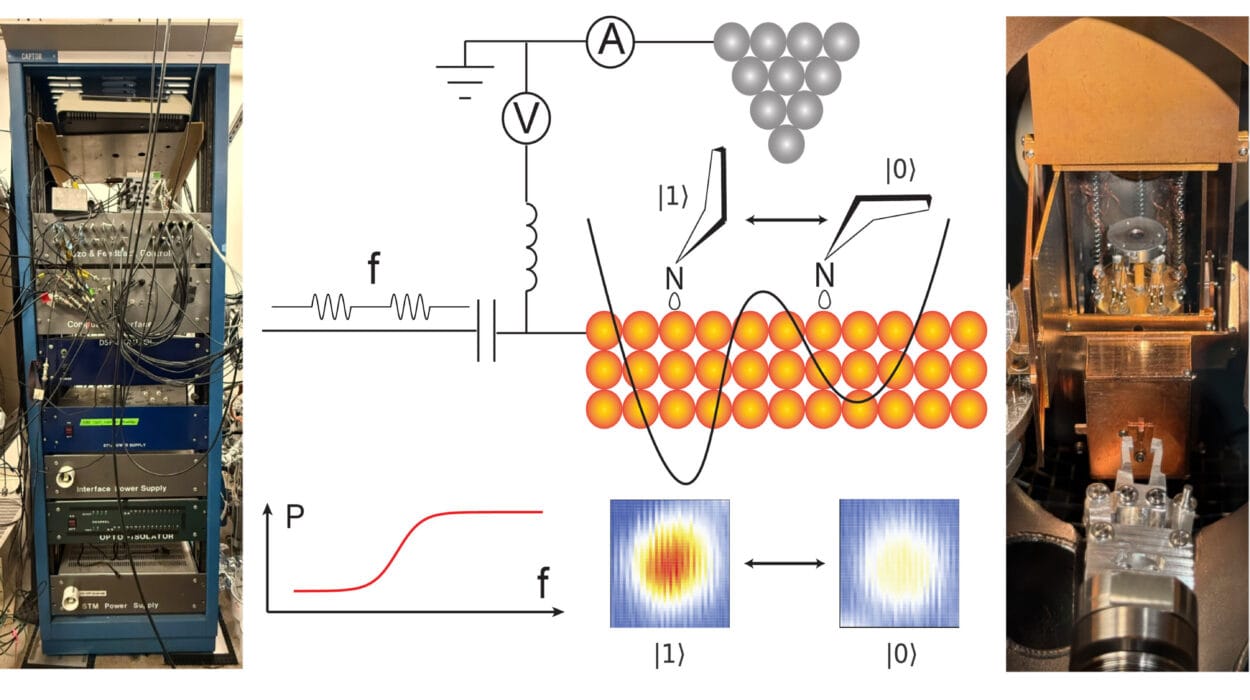

Imagine a wildfire roaring through a forest. For a time, the fire spreads actively. But eventually, it may reach a point where it has burned all available fuel. It enters an absorbing state—a dead end from which it cannot recover without fresh trees to burn. Tamai recognized a familiar rhythm here. Signals in a deep neural network, like flames in a fire, propagate from neuron to neuron. But in some configurations, those signals fade, the network becomes inactive, and learning stalls. In essence, the model gets stuck in a computational dead end.
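The fading-signal picture can be illustrated with a toy experiment. The sketch below is not the study's code; it assumes a fully connected network with tanh activations, no biases, and fresh Gaussian weights of variance `sigma_w**2 / width` in every layer. The names `layer_activity`, `sigma_w`, `width`, and `depth` are hypothetical and chosen only for illustration. It records the mean squared activation layer by layer, which plays the role of the fire's intensity.

```python
import numpy as np


def layer_activity(sigma_w, width=500, depth=50, seed=0):
    """Return the mean squared activation after each layer of a random tanh network.

    Assumptions (illustrative, not from the study): no biases, and
    independent Gaussian weights with variance sigma_w**2 / width.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(width)  # random input signal
    activity = []
    for _ in range(depth):
        # Draw a fresh weight matrix for this layer.
        w = rng.standard_normal((width, width)) * sigma_w / np.sqrt(width)
        x = np.tanh(w @ x)
        activity.append(float(np.mean(x ** 2)))
    return activity


if __name__ == "__main__":
    for sigma_w in (0.8, 1.0, 1.5):
        final = layer_activity(sigma_w)[-1]
        print(f"sigma_w={sigma_w}: activity at the last layer = {final:.3e}")
```

In this toy model, a small weight scale lets the signal shrink toward zero, and a layer of all-zero activations can only produce more zeros, which is what makes the silent state absorbing. A larger weight scale keeps the activity at a finite level through every layer.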

This analogy was more than poetic. It became a testable hypothesis.

Tamai, whose academic roots are in the statistical physics of critical phenomena, realized that deep learning systems might behave like physical systems on the brink of a phase change. If true, then universal scaling laws—the same mathematical principles that describe boiling water or magnetizing metals—could explain how deep neural networks function, grow, and sometimes fail.

Scaling the Unknown

Universal scaling laws describe how measurable properties change with a system’s size or with its distance from a tipping point, typically as simple power laws. In physical systems, these laws hold near critical points, where the system undergoes a fundamental change. The key insight of Tamai’s research is that deep neural networks may exhibit similar critical behavior, particularly when the learning dynamics transition from one regime to another.
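For readers who want to see what such a law looks like, the standard textbook forms for an absorbing phase transition are written below. The symbols are the conventional ones from statistical physics and do not come from this article: $\rho$ is the density of active sites, $p$ is a control parameter with critical value $p_c$, $t$ is time, and $\beta$ and $\delta$ are critical exponents. Reading $t$ as network depth and $p$ as a parameter such as the weight scale is an assumption made here for illustration only.

```latex
\rho_{\mathrm{stationary}} \sim (p - p_c)^{\beta} \quad (p > p_c),
\qquad
\rho(t) \sim t^{-\delta} \quad (p = p_c)
```

The first relation says how strongly activity survives just above the tipping point. The second says that exactly at the tipping point activity fades as a power law rather than exponentially.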

To test this hypothesis, the research team used a blend of theory and computational simulations. They derived critical exponents, the numbers that set the power laws near a critical point and that are shared by every system in the same universality class. Then, they compared these theoretical values with what they saw in simulated deep learning systems.

Remarkably, the numbers matched.

Neural networks, it turns out, exhibit the same universal behaviors near their learning tipping points as physical systems do near theirs. The idea that digital brains could be governed by the same mathematical patterns as a melting crystal or an extinguishing flame is not only poetic. It is now supported by both theory and simulation.
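To give a feel for what measuring a critical exponent involves, here is a minimal sketch under stated assumptions. It is not the authors' method. It uses a simple mean-field recursion for the squared activity of an infinitely wide tanh network without biases, evaluates the Gaussian average by Gauss-Hermite quadrature, and fits the slope of the decay on a log-log scale. The function names `next_activity` and `decay_exponent` and all parameter values are hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Gauss-Hermite nodes and weights for the weight function exp(-z**2 / 2).
_NODES, _WEIGHTS = hermegauss(64)
# Normalise so that sum(_WEIGHTS * f(_NODES)) approximates E[f(z)], z ~ N(0, 1).
_WEIGHTS = _WEIGHTS / np.sqrt(2.0 * np.pi)


def next_activity(a, sigma_w):
    """Mean-field update a -> E[tanh(sigma_w * sqrt(a) * z)**2] for z ~ N(0, 1)."""
    return float(np.sum(_WEIGHTS * np.tanh(sigma_w * np.sqrt(a) * _NODES) ** 2))


def decay_exponent(sigma_w=1.0, depth=10_000, fit_from=1_000, a0=0.5):
    """Estimate the exponent in activity ~ layer**(-exponent) from a log-log fit."""
    a = a0
    history = np.empty(depth)
    for layer in range(depth):
        a = next_activity(a, sigma_w)
        history[layer] = a
    layers = np.arange(1, depth + 1)
    # Fit only the deep layers, where the power law has settled in.
    slope, _ = np.polyfit(np.log(layers[fit_from:]), np.log(history[fit_from:]), 1)
    return -slope


if __name__ == "__main__":
    print(f"estimated decay exponent at sigma_w=1: {decay_exponent():.3f}")
```

In this toy recursion, expanding tanh squared for small activity at `sigma_w = 1` gives an update of roughly `a - 2 * a**2`, which suggests a decay proportional to one over the layer index, so the fitted exponent should come out close to 1. Away from `sigma_w = 1` the decay is no longer a pure power law, which is why the fit is only meaningful at the critical value.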

This means researchers can, in principle, predict how changes in architecture, size, or learning parameters will affect the outcome of training. Will a signal burn brightly through the network, allowing the model to learn quickly and effectively? Or will it die out, leaving behind an inert and untrainable system? With scaling laws in hand, scientists have a tool for answering these questions before training begins.

From Brute Force to Elegance

Modern AI development often relies on trial and error. Engineers and scientists test countless configurations—changing the number of layers, tweaking learning rates, or adjusting the size of datasets—until they find a combination that works. This brute-force method is not only time-consuming but also energy-intensive, contributing to the growing environmental cost of machine learning.

Tamai’s work offers a way out.

By identifying where a neural network lies on the spectrum between activity and absorption, developers could predict trainability without endless testing. They could tune models to sit close to the critical point, where signals neither die out nor grow out of control. This boundary is often called the edge of chaos, the regime in which some neuroscientists believe the brain functions most efficiently. It’s also where, according to Tamai, intelligence seems to bloom.

This insight doesn’t just help machines. It could also reshape how we think about human cognition.

The Brain as a Critical System

Tamai’s research echoes a long-standing, but still controversial, idea in neuroscience known as the criticality hypothesis. This theory proposes that the human brain operates near a critical point—balanced between order and chaos, stability and flexibility. At this tipping point, the brain may be most capable of forming complex thoughts, adapting to new situations, and generating creativity.

The parallels between deep neural networks and physical critical systems lend new weight to this hypothesis.

For decades, researchers have tried to understand how intelligence emerges from the simple electrical firings of neurons. Now, with artificial networks loosely modeled on those neurons showing the scaling behaviors seen in physical critical systems, we have a chance to test whether artificial and biological networks obey the same physical laws.

Even Alan Turing—the father of modern computing—hinted at this idea in the 1950s. But in his time, the tools of neuroscience and computational theory were too limited. Today, Tamai believes we finally stand at the edge of realizing Turing’s vision.

“Back then,” he says, “we didn’t have the ability to see the patterns. But now, with the convergence of physics, neuroscience, and AI, we can start to explore what intelligence really is—from a physics perspective.”

Toward a Unified Theory of Learning

The discovery that deep learning systems can be described by universal scaling laws is more than a fascinating footnote. It may be a step toward something truly revolutionary: a unified framework that connects intelligence—whether artificial or organic—to the fundamental laws of the universe.

For now, that dream remains distant. But Tamai and his colleagues have lit a torch in the dark.

Their work opens the possibility that the mysteries of consciousness, thought, and learning are not boundless or unknowable—but can be measured, modeled, and predicted. Intelligence, in this view, is not just a trait or a tool. It’s a phase, a state of matter, a self-organizing phenomenon poised delicately between order and entropy.

And perhaps, in time, we will learn not only how to build intelligent machines—but also how to understand ourselves.

A New Kind of Fire

In the end, Tamai’s journey from statistical physics to artificial intelligence feels inevitable. The fire that once danced in equations of combustion and collapse now burns in lines of code and neurons. The idea that a neural network can behave like a fire—alive one moment, extinguished the next—does more than explain technical behaviors. It invites us to think differently about intelligence itself.

What if learning is not a ladder but a flame?

What if consciousness is not a blueprint but a balancing act?

And what if the key to artificial intelligence is not more data or deeper networks, but a better understanding of where the flame flickers, and where it goes out?

In the language of physics, the signal must propagate. The system must remain active. The fire must not die.

Thanks to this research, we may finally have a map to where intelligence burns brightest—and how to keep that spark alive.

Reference: Keiichi Tamai et al., Universal scaling laws of absorbing phase transitions in artificial deep neural networks, Physical Review Research (2025). DOI: 10.1103/jp61-6sp2