In a quiet revolution that may reshape the foundation of quantum computing, scientists have solved a key problem in quantum error correction that was once thought to be fundamentally unsolvable. The breakthrough centers on decoding—the process of identifying and correcting errors in fragile quantum systems—and could accelerate our path toward practical, fault-tolerant quantum computers.

The team, led by researchers from the Singapore University of Technology and Design, the Chinese Academy of Sciences, and the Beijing Academy of Quantum Information Sciences (BAQIS), has developed a new algorithm known as PLANAR. This isn’t just a modest improvement—PLANAR achieved a 25% reduction in logical error rates when applied to Google Quantum AI’s experimental data, rewriting what experts thought they knew about hardware limits.

What makes this achievement so significant is that it challenges a long-standing assumption in the field: that a portion of errors—called the “error floor”—was intrinsic to the hardware. Instead, PLANAR reveals that a quarter of those errors were algorithmic, not physical, caused by limitations in the decoding methods rather than the quantum devices themselves.

This insight not only breathes new hope into the quest for scalable quantum computing—it redefines what we thought was possible.

The Fragile Reality of Quantum Machines

Quantum computers operate in a realm where bits are replaced by qubits—units that exist in delicate superpositions of 0 and 1. These qubits can solve certain problems that classical computers can’t touch, but they come with a fatal flaw: they’re exquisitely sensitive to errors from heat, radiation, interference, and even slight fluctuations in the environment.

Without some form of quantum error correction (QEC), large-scale quantum computation would be impossible.

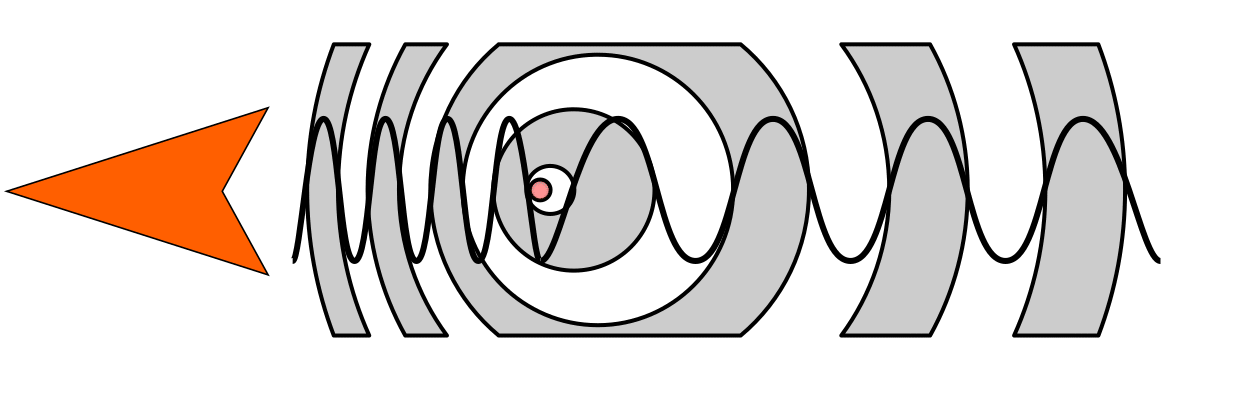

The challenge lies in decoding these errors correctly. Imagine having a conversation in a thunderstorm and trying to guess what someone said based on garbled fragments. That’s what a quantum decoder must do: infer the original message (or state) from noisy, ambiguous signals called syndromes.

Traditionally, researchers relied on minimum-weight perfect matching (MWPM) algorithms to do the job. These algorithms pair up the error signals so that the total length of the paths connecting them is as short as possible, on the assumption that the simplest explanation is usually the best. And in many cases, they work well enough.

But “well enough” isn’t sufficient when your goal is fault-tolerant quantum computation, where the error rate must plunge to virtually zero.

The Quest for the Optimal Decoder

For decades, physicists have known that the gold standard of error correction is maximum-likelihood decoding (MLD). Rather than settling for the single shortest explanation, MLD adds up the probabilities of every error pattern consistent with the observed syndrome and picks the logical outcome with the largest total. It’s the difference between a hunch and a statistically grounded conclusion.
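The gap between picking the single most probable error and picking the most probable logical outcome can be seen in a toy model. The sketch below is illustrative only: the three error mechanisms, their probabilities, and the function names are all hypothetical, and the brute-force enumeration stands in for what a real decoder would do far more cleverly.

```python
from itertools import product

# Hypothetical toy error model (not taken from the paper). Each mechanism has
# a probability, the set of detectors it flips, and a flag saying whether it
# flips the logical observable.
MECHANISMS = [
    {"p": 0.10, "detectors": {0}, "flips_logical": False},
    {"p": 0.07, "detectors": {0}, "flips_logical": True},
    {"p": 0.07, "detectors": {0}, "flips_logical": True},
]


def enumerate_consistent(syndrome):
    """Yield (probability, logical_flip) for every error set matching the syndrome."""
    for choice in product([0, 1], repeat=len(MECHANISMS)):
        prob = 1.0
        fired = set()
        logical = False
        for on, mech in zip(choice, MECHANISMS):
            prob *= mech["p"] if on else 1.0 - mech["p"]
            if on:
                fired ^= mech["detectors"]        # detectors flip an odd/even number of times
                logical ^= mech["flips_logical"]
        if fired == syndrome:
            yield prob, logical


def decode_single_most_likely_error(syndrome):
    """Return the logical flip implied by the single most probable error set."""
    return max(enumerate_consistent(syndrome), key=lambda item: item[0])[1]


def decode_maximum_likelihood(syndrome):
    """Return the logical flip whose total probability, summed over all error sets, is largest."""
    totals = {False: 0.0, True: 0.0}
    for prob, logical in enumerate_consistent(syndrome):
        totals[logical] += prob
    return totals[True] > totals[False]


observed = {0}  # detector 0 fired
single_error_answer = decode_single_most_likely_error(observed)
maximum_likelihood_answer = decode_maximum_likelihood(observed)
```

In this toy case the two strategies disagree: the single most probable error leaves the logical value alone, while the two slightly less probable errors that flip it carry more probability between them. Note that the loop visits every subset of mechanisms, so its cost doubles with each mechanism added.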

But there was a catch: in general, MLD is #P-hard, a level of computational difficulty that’s effectively intractable for real-world systems. The number of error patterns to sum over grows exponentially with the size of the code, so brute force runs out of steam even for modest codes.

Or so everyone thought.

Enter PLANAR.

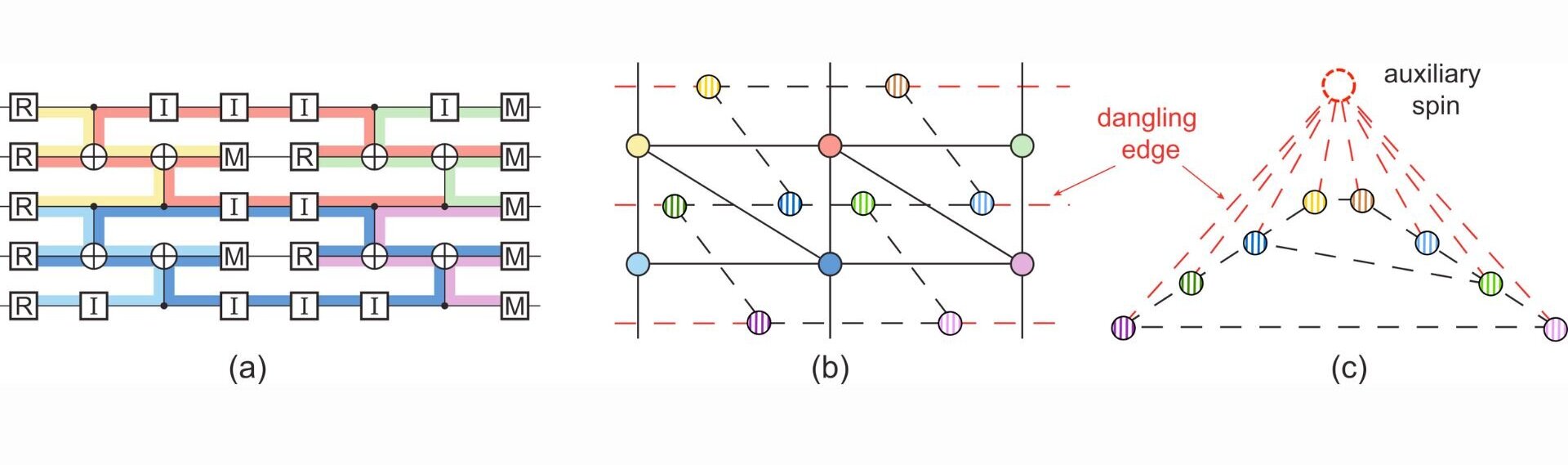

The researchers noticed something extraordinary. When applied to a specific kind of quantum code—repetition codes under circuit-level noise—the problem transformed. The messy decoding landscape took on a new mathematical shape: a planar graph, where connections don’t cross over one another.

This configuration is valuable in physics, because it allows for exact, efficient solutions that are not known to exist for more complex topologies.

By borrowing tools from statistical physics, especially techniques used to study materials called spin glasses, the researchers unlocked a powerful insight: decoding quantum errors could be recast as a problem of calculating the partition function of a spin system—a calculation that is solvable in polynomial time using the Kac-Ward formalism.

It wasn’t just a clever trick. It was a reframing of the entire problem, and it led to the first exact maximum-likelihood decoding algorithm that is computationally feasible on real-world data, at least for repetition codes under circuit-level noise.
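The recasting of decoding as a partition-function calculation can be checked by hand on a tiny graph. The sketch below is not the PLANAR algorithm: it uses a made-up planar graph of two triangles sharing an edge, made-up error probabilities, and a brute-force sum over spins where PLANAR would use the Kac-Ward formalism. It relies on the standard textbook mapping in which each edge gets a coupling K with exp(-2K) = p / (1 - p), and one spin sits on each inner face.

```python
import math
from itertools import product

# Hypothetical toy graph: triangular faces "A" and "B" share edge 2.
# Each entry lists the inner faces bordering that edge; the outer face is fixed at +1.
EDGE_FACES = [("A",), ("A",), ("A", "B"), ("B",), ("B",)]
EDGE_PROBS = [0.02, 0.05, 0.03, 0.04, 0.01]  # made-up error probabilities
REFERENCE_ERROR = {0}                         # one error pattern matching the syndrome
FACES = ["A", "B"]


def error_probability(error_set):
    """Probability of exactly this set of edges being in error."""
    prob = 1.0
    for edge, p in enumerate(EDGE_PROBS):
        prob *= p if edge in error_set else 1.0 - p
    return prob


def class_probability_direct():
    """Sum the probabilities of all errors reachable by toggling face boundaries."""
    total = 0.0
    for toggles in product([False, True], repeat=len(FACES)):
        toggled = {face for face, on in zip(FACES, toggles) if on}
        error = set(REFERENCE_ERROR)
        for edge, faces in enumerate(EDGE_FACES):
            if len(toggled.intersection(faces)) % 2 == 1:
                error ^= {edge}
        total += error_probability(error)
    return total


def class_probability_ising():
    """Same quantity written as prefactor * partition function of a spin model."""
    prefactor = math.prod(math.sqrt(p * (1.0 - p)) for p in EDGE_PROBS)
    couplings = [0.5 * math.log((1.0 - p) / p) for p in EDGE_PROBS]
    partition_function = 0.0
    for spins in product([1, -1], repeat=len(FACES)):
        spin = dict(zip(FACES, spins))
        exponent = 0.0
        for edge, faces in enumerate(EDGE_FACES):
            sign = -1 if edge in REFERENCE_ERROR else 1  # reference error flips the coupling
            exponent += couplings[edge] * sign * math.prod(spin[f] for f in faces)
        partition_function += math.exp(exponent)
    return prefactor * partition_function


direct_value = class_probability_direct()
ising_value = class_probability_ising()
```

The two functions return the same number up to floating-point rounding. A maximum-likelihood decoder would compute this quantity once for each candidate logical outcome and keep the larger one; the brute-force spin sum here is exponential in the number of faces, which is exactly the cost a polynomial-time method for planar graphs avoids.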

From Abstract Math to Real-World Chips

The theory was elegant. But would it work?

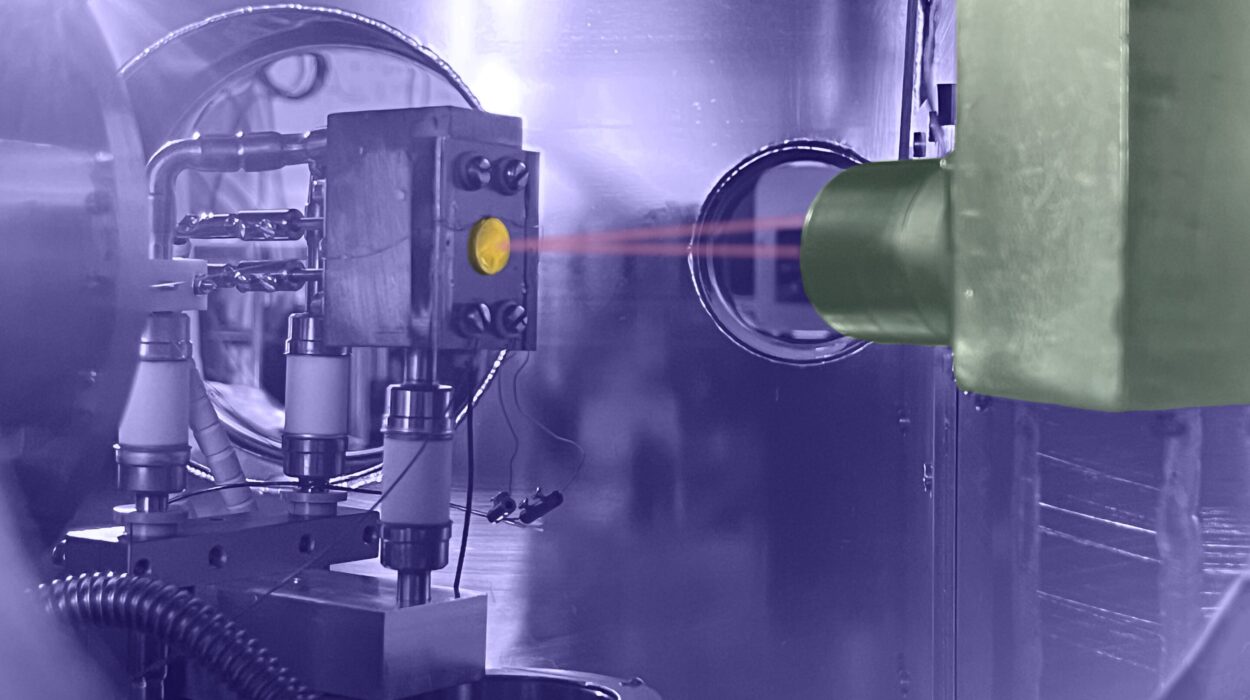

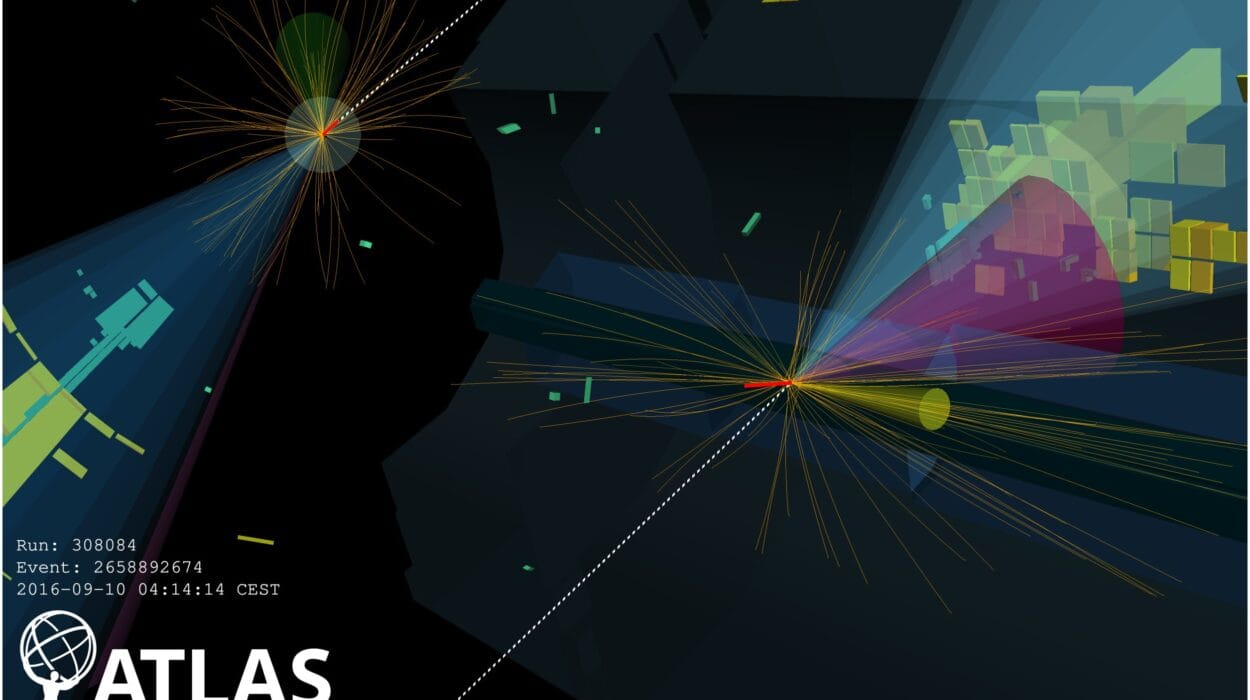

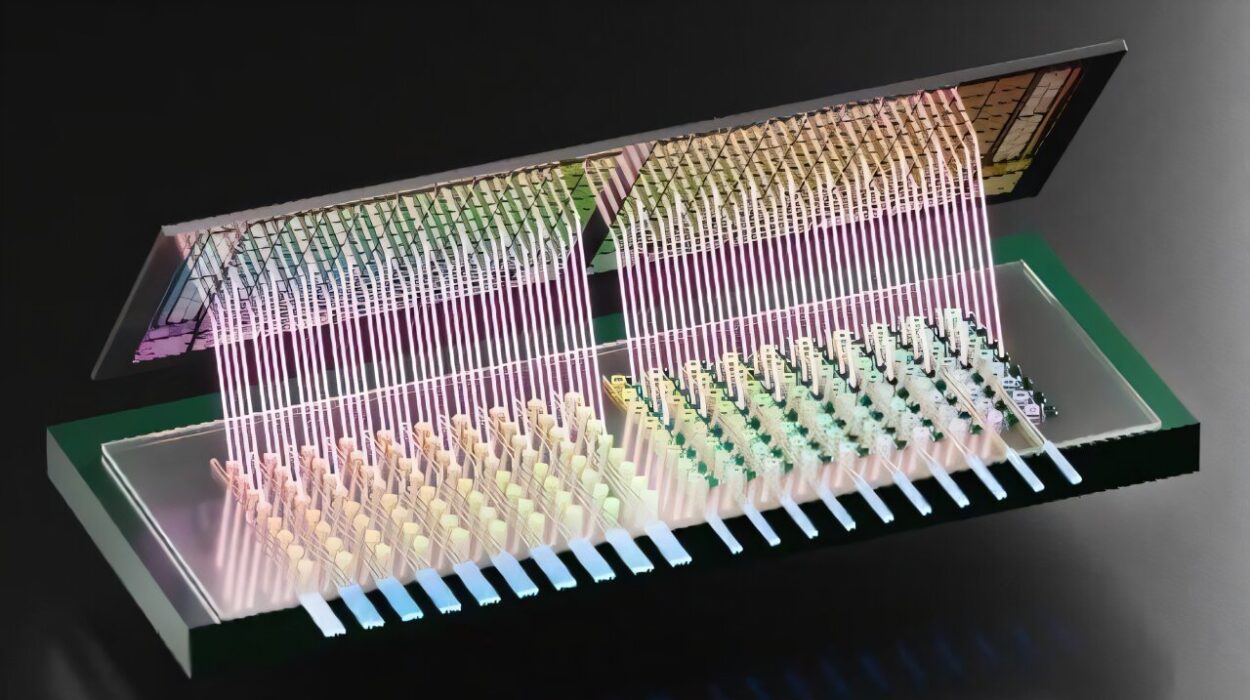

To answer that, the team tested PLANAR on three separate fronts: synthetic data, Google’s experimental quantum results, and their own in-house 72-qubit quantum processor. The results spoke volumes.

When applied to Google Quantum AI’s memory experiments, PLANAR slashed logical error rates by 25%. What had been assumed to be an unavoidable “error floor” turned out to be an illusion—merely the byproduct of suboptimal decoding.

Even more impressively, PLANAR improved the error suppression factor, the factor by which the logical error rate drops each time the code distance is stepped up (by two, in Google’s convention), from 8.11 to 8.28. That bump looks subtle, but because the factor is applied again at every step up in code size, the advantage compounds as codes get larger.
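To make the error suppression factor concrete, here is a minimal sketch of how such a factor can be extracted from logical error rates at several code distances. It assumes the commonly used scaling form in which the error rate is proportional to 1 / Λ^((d + 1) / 2); the error rates below are synthetic numbers generated for illustration, not experimental data, and the function names are hypothetical.

```python
import math


def fit_lambda(distances, error_rates):
    """Least-squares fit of log(eps_d) = a - ((d + 1) / 2) * log(Lambda)."""
    xs = [(d + 1) / 2 for d in distances]
    ys = [math.log(rate) for rate in error_rates]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return math.exp(-slope)


def relative_error_ratio(lambda_old, lambda_new, distance):
    """Ratio of logical error rates (old / new) at a given distance, same prefactor assumed."""
    return (lambda_new / lambda_old) ** ((distance + 1) / 2)


# Synthetic rates generated with Lambda = 8.0 purely to exercise the fit.
distances = [3, 5, 7, 9, 11]
synthetic_rates = [0.1 / 8.0 ** ((d + 1) / 2) for d in distances]
lambda_fit = fit_lambda(distances, synthetic_rates)
```

Under the same assumed scaling, `relative_error_ratio(8.11, 8.28, d)` grows with the distance `d`, which is why a small change in the factor matters more for larger codes.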

On synthetic benchmarks, the algorithm set new standards, precisely calculating previously unknown thresholds for noise tolerance: 6.7% for depolarizing noise and 2.0% for superconducting-inspired SI1000 noise.

But perhaps the most exciting demonstration came from the researchers’ own 72-qubit chip, a system operated in harsh conditions without reset gates to simulate unpredictable error environments. Even in this challenging setting, PLANAR outperformed the best standard decoders by as much as 8.76%.

Why Repetition Codes Matter More Than You Think

Some might wonder: Why focus on repetition codes when more sophisticated error-correcting methods exist, like surface codes or color codes?

The answer lies in scalability.

Repetition codes have demonstrated something rare in the quantum world: they scale to large distances and achieve ultra-low error rates, sometimes reaching a mind-boggling 1 in 10 billion. They’re simple, reliable, and ideal for benchmarking quantum hardware.

As Prof. Feng Pan and his colleagues explained to Phys.org, “The repetition code scales to large distances while achieving ultra-low error rates, unlike surface codes limited to small distances on current hardware.”

Repetition codes also play a critical role in identifying cosmic-ray events that can corrupt quantum systems—an area of increasing concern for researchers trying to harden these devices against natural background radiation.

In this light, PLANAR doesn’t just offer a clever mathematical fix. It provides a practical tool for diagnosing and improving real quantum hardware—today.

A New Era for Error Correction and Beyond

The implications of PLANAR stretch far beyond one code or one experiment. For the first time, researchers have shown that exact maximum-likelihood decoding is not just a theoretical dream, but a practical reality, at least under the right conditions.

Even more promising, the team has already demonstrated that PLANAR can work for surface codes under particular noise conditions—codes widely viewed as foundational for the future of scalable quantum computing.

Their roadmap now includes adapting PLANAR to non-planar graphs with finite genus—a move that could bring efficient decoding to a broader family of quantum error-correcting codes.

As quantum computers inch closer to solving problems beyond the reach of classical machines, decoding will be one of the last great bottlenecks. Algorithms like PLANAR might be the key to clearing that hurdle.

The researchers are also exploring how to integrate PLANAR into real-time systems, enabling on-the-fly correction in operational quantum devices. Because PLANAR runs in polynomial time, its cost grows manageably with code size, which makes it a candidate for the brutal demands of live quantum computation, where delays, even microseconds long, can be fatal.

The Hidden Power of Cross-Disciplinary Science

One of the most compelling aspects of this story is how it bridges fields.

The researchers behind PLANAR didn’t start in quantum computing. They began in statistical physics, studying Ising spin-glass models, a far cry from quantum algorithms.

But as they ventured into the world of QEC, they realized something beautiful: the decoding problem mirrored their own work. Most-likely-error (MLE) decoding, which hunts for the single most probable error pattern, was analogous to calculating ground states of spin systems. Maximum-likelihood decoding mirrored calculating partition functions.

This kind of cross-pollination—where insights from one domain illuminate another—is where some of science’s greatest breakthroughs occur. PLANAR is not just an algorithm. It’s a testament to the power of thinking differently, of refusing to accept limits that others take for granted.

As the team put it, “While the QEC community knew planar graphs simplified MLE decoding, few recognized MLD could also be efficient for such topologies. This insight directly motivated our work.”

Toward Fault-Tolerant Quantum Computing

In the end, quantum computing will only change the world if it becomes fault-tolerant, able to operate reliably over long periods, across millions of operations. That will require robust error correction—not just at the hardware level, but also in the algorithms we use to interpret and fix errors.

PLANAR represents a major step forward on that path. By showing that what was once computationally forbidden can, under the right lens, become accessible, it redefines what’s possible in quantum science.

As we look ahead to quantum systems with hundreds, thousands, or even millions of qubits, the need for tools like PLANAR will only grow.

Because in the quantum world, where every bit of certainty must be wrestled from chaos, the right algorithm can make the impossible not just thinkable—but real.

Reference: Hanyan Cao et al., Exact Decoding of Quantum Error-Correcting Codes, Physical Review Letters (2025). DOI: 10.1103/PhysRevLett.134.190603