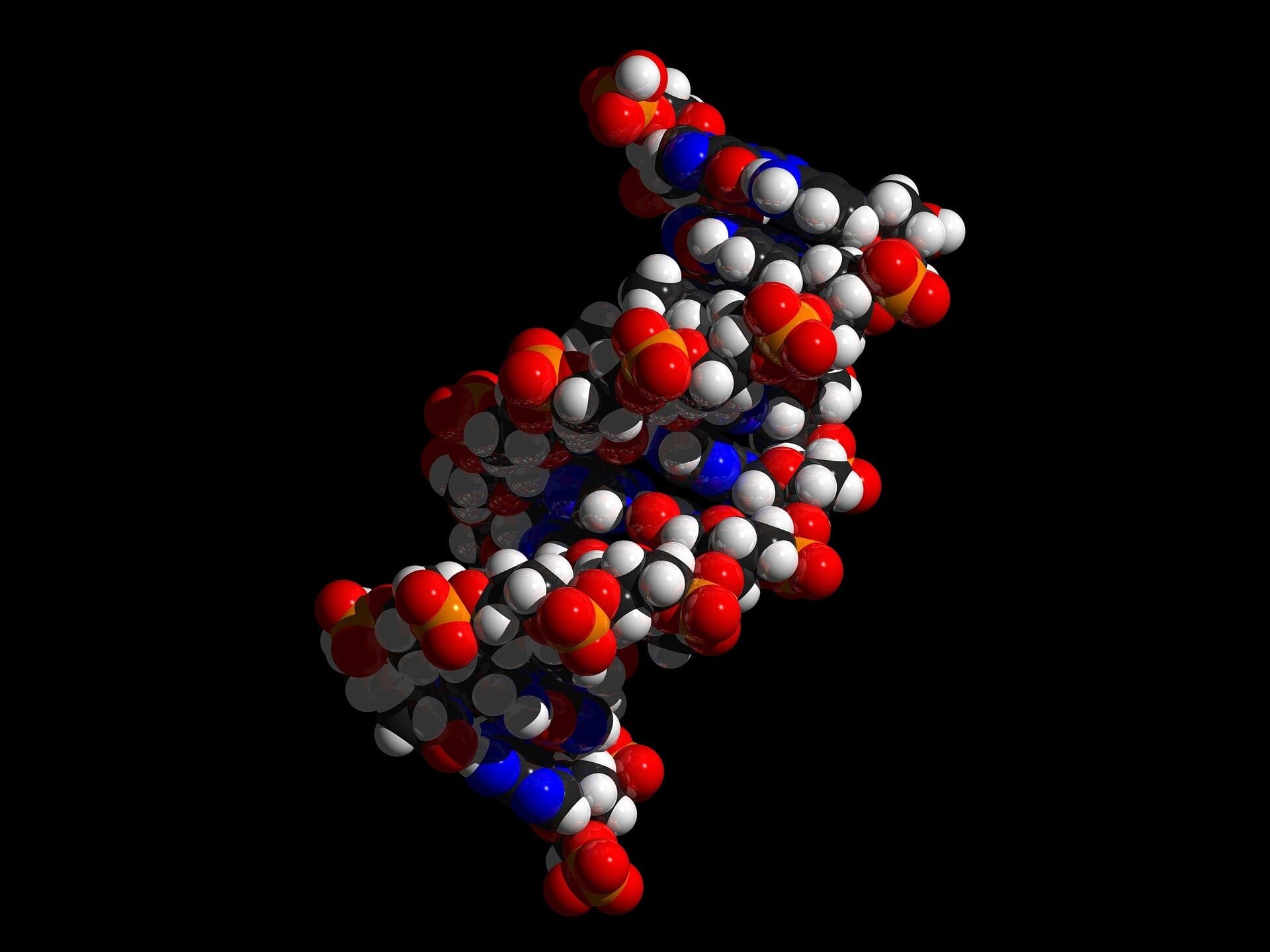

Proteins are the workhorses of life. They fold into intricate shapes that determine everything from how our muscles contract to how our immune systems fight infection. Understanding the structure and function of proteins has long been one of biology’s greatest challenges. A protein’s shape is not obvious from the string of amino acids that compose it, yet its three-dimensional form determines its role in the cell and, by extension, in health and disease.

In recent years, a quiet revolution has swept through biology. Borrowing ideas from artificial intelligence, researchers have begun using protein language models—AI systems originally inspired by the technology behind large language models like ChatGPT. Instead of analyzing words, these models analyze amino acid sequences. By studying vast databases of protein sequences, they learn the “grammar” of biology, spotting patterns that humans cannot see.

The results have been astonishing. These models can now predict a protein’s structure with remarkable accuracy. They can even suggest which proteins might serve as drug targets or how a virus may evolve. From designing new therapeutic antibodies to predicting vaccine targets, protein language models are becoming indispensable tools for modern science.

And yet, for all their success, they share one fundamental problem: they are black boxes. They make predictions with uncanny precision, but no one really knows how.

Why the Black Box Matters

When a protein model predicts that a molecule could make a good drug target, scientists want to know why. Which features of the protein matter most? Is it a specific fold, a chemical property, or a hidden pattern in its sequence? Without this knowledge, researchers can only trust the model blindly, never fully understanding the reasoning behind its answers.

This lack of transparency is not just a philosophical issue. It has real-world consequences. If we understood the inner workings of these models, we could pick the best one for a particular problem, fine-tune them more effectively, and perhaps even uncover new biological truths hidden in the data.

As Bonnie Berger, the Simons Professor of Mathematics at MIT, puts it: “Identifying features that protein language models track has the potential to reveal novel biological insights.” In other words, opening the black box might not just improve AI—it could transform biology itself.

A New Way to See Inside

In a recent study, MIT researchers led by Berger, along with graduate student Onkar Gujral, developed a new approach to peer into these mysterious models. Their method builds on a clever concept from artificial intelligence called a sparse autoencoder.

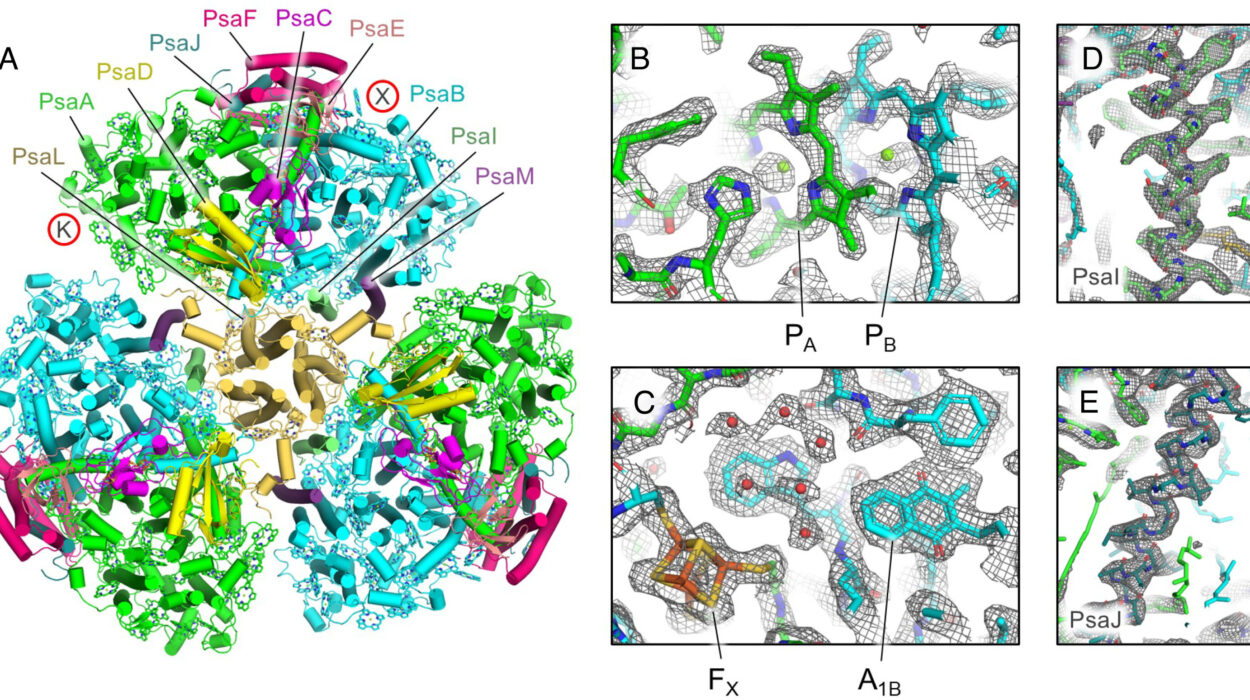

To understand this, imagine how a protein is normally represented inside a model. Its information is compressed into a pattern of activity across a limited number of “nodes” in the network—say, 480. Each of these nodes encodes many overlapping features. As a result, it’s almost impossible to tell which node represents what. The information is packed too tightly, like dozens of voices talking at once in the same room.

A sparse autoencoder changes the game. It expands the representation into a much larger space: 20,000 nodes instead of 480. With this expansion, and a constraint that only a small fraction of the nodes can activate at once, the autoencoder is forced to spread the information out. Suddenly, features that were once crammed together into a single node now occupy separate ones. The room goes quiet, and the voices become distinct.
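To make the expansion-plus-sparsity idea concrete, here is a minimal NumPy sketch of a sparse autoencoder. The 480 and 20,000 dimensions come from the description above. Everything else is an assumption for illustration: the class name `TopKSparseAutoencoder`, the choice of k = 32 active units, and the use of a top-k rule (keep only the k largest activations per protein) as the sparsity constraint. Other sparse autoencoders, possibly including the one in the study, enforce sparsity differently, and practical training would usually use an optimizer such as Adam rather than the plain gradient step shown here.

```python
import numpy as np


class TopKSparseAutoencoder:
    """Illustrative top-k sparse autoencoder (a sketch, not the study's code).

    Expands a d_model-dimensional protein embedding into a d_hidden-dimensional
    code in which at most k units are nonzero, then reconstructs the embedding.
    """

    def __init__(self, d_model=480, d_hidden=20_000, k=32, seed=0):
        rng = np.random.default_rng(seed)
        self.k = k  # assumed sparsity level: active units allowed per input
        self.W_enc = rng.normal(0.0, 1.0 / np.sqrt(d_model), size=(d_model, d_hidden))
        self.b_enc = np.zeros(d_hidden)
        self.W_dec = self.W_enc.T.copy()  # decoder initialized as the encoder's transpose
        self.b_dec = np.zeros(d_model)

    def encode(self, x):
        """Keep the k largest pre-activations in each row, clip negatives to zero."""
        pre = x @ self.W_enc + self.b_enc
        top = np.argpartition(pre, -self.k, axis=1)[:, -self.k:]  # indices of the k largest
        rows = np.arange(pre.shape[0])[:, None]
        z = np.zeros_like(pre)
        z[rows, top] = np.maximum(pre[rows, top], 0.0)
        return z

    def decode(self, z):
        """Map the sparse code back to the original embedding space."""
        return z @ self.W_dec + self.b_dec

    def loss(self, x):
        """Mean squared reconstruction error."""
        return float(np.mean((self.decode(self.encode(x)) - x) ** 2))

    def train_step(self, x, lr=1e-3):
        """One plain gradient-descent step; returns the loss before the update."""
        z = self.encode(x)
        x_hat = self.decode(z)
        g = 2.0 * (x_hat - x) / x.size       # dLoss/dx_hat
        dz = (g @ self.W_dec.T) * (z > 0)     # gradient reaches only the active units
        grads = {
            "W_dec": z.T @ g,
            "b_dec": g.sum(axis=0),
            "W_enc": x.T @ dz,
            "b_enc": dz.sum(axis=0),
        }
        for name, grad in grads.items():
            setattr(self, name, getattr(self, name) - lr * grad)
        return float(np.mean((x_hat - x) ** 2))
```

For example, encoding a batch of embeddings with shape (8, 480) under the default settings yields an (8, 20000) array in which each row has at most 32 nonzero entries. A top-k rule makes the sparsity level a number you choose directly, rather than one tuned indirectly through a penalty weight.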

“In a sparse representation, the neurons lighting up are doing so in a more meaningful manner,” Gujral explains. What was once inscrutable now begins to take shape.

Teaching AI to Speak Biology

But identifying which nodes light up is only the first step. The researchers needed to connect those patterns to biology. For this, they turned to a different kind of AI: Claude, a large language model developed by Anthropic.

Claude was asked to compare the sparse patterns with known features of proteins, such as whether they belong to a certain family, perform a specific molecular function, or reside in a particular part of the cell. By analyzing thousands of cases, the system could translate abstract patterns into plain biological language.

For example, Claude might interpret a node’s activity as: “This neuron appears to be detecting proteins involved in transmembrane transport of ions or amino acids, particularly those located in the plasma membrane.”
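The general idea of pairing a neuron's activity with known protein annotations can be sketched in a few lines of Python. This is a hypothetical illustration, not the study's pipeline: the function names, the annotation labels, and the fold-enrichment score are all assumptions. The sketch finds the proteins that most strongly activate one hidden unit, measures which annotations are over-represented among them relative to the whole dataset, and formats the result as a text prompt that could be handed to a language model such as Claude (the model call itself is omitted).

```python
from collections import Counter


def top_activating(activations, protein_ids, unit, n=10):
    """Return up to n protein IDs that most strongly activate one hidden unit."""
    scores = [(pid, row[unit]) for pid, row in zip(protein_ids, activations)]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return [pid for pid, act in scores[:n] if act > 0]  # ignore inactive proteins


def annotation_enrichment(top_ids, annotations, all_ids):
    """Fold enrichment of each label among top proteins relative to all proteins."""
    top_counts = Counter(label for pid in top_ids for label in annotations.get(pid, ()))
    background = Counter(label for pid in all_ids for label in annotations.get(pid, ()))
    scores = {
        label: (count / len(top_ids)) / (background[label] / len(all_ids))
        for label, count in top_counts.items()
    }
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))


def build_prompt(unit, enrichment, max_labels=5):
    """Format the strongest enrichments as a question for a language model."""
    lines = [f"Hidden unit {unit} fires most on proteins with these annotations "
             "(fold enrichment over the full dataset):"]
    for label, ratio in list(enrichment.items())[:max_labels]:
        lines.append(f"- {label}: {ratio:.1f}x")
    lines.append("In one sentence, what biological property might this unit detect?")
    return "\n".join(lines)
```

Labels shared by many top-activating proteins but rare across the full dataset score highest, which is the kind of signal a reviewer, human or AI, would use to put a name to a feature.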

The nodes were no longer meaningless numbers. They became interpretable features, grounded in real biology.

From Prediction to Understanding

The results of this approach were illuminating. The MIT team discovered that protein language models often encode very specific biological properties—protein families, structural motifs, and even metabolic processes. These features emerge naturally from the training process, even though the models were never explicitly told about them.

“When you train a sparse autoencoder, you aren’t training it to be interpretable,” Gujral notes. “But by incentivizing the representation to be really sparse, that ends up resulting in interpretability.”
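One standard way to write down this incentive, used widely in the sparse-autoencoder literature, is a reconstruction error plus an L1 penalty on the hidden activations. The formulation below is illustrative; the study's exact objective and hyperparameters may differ. Here x is a protein's 480-dimensional embedding, z its 20,000-dimensional code, and λ > 0 sets how strongly sparsity is rewarded:

$$
z = \mathrm{ReLU}\left(W_{\text{enc}}\, x + b_{\text{enc}}\right), \qquad
\hat{x} = W_{\text{dec}}\, z + b_{\text{dec}}, \qquad
\mathcal{L}(x) = \lVert x - \hat{x} \rVert_2^2 + \lambda \lVert z \rVert_1,
$$

with $W_{\text{enc}} \in \mathbb{R}^{20000 \times 480}$ and $W_{\text{dec}} \in \mathbb{R}^{480 \times 20000}$. Nothing in this loss mentions interpretability: it rewards only faithful reconstruction and few active nodes, which is exactly Gujral's point.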

This interpretability opens the door to a new era of AI in biology. Instead of treating models as magical oracles, researchers can now begin to see why they make their predictions. This understanding could guide scientists in choosing the right model for a particular problem, designing better experiments, and even uncovering new principles of biology.

The Road Ahead

The implications are vast. Imagine a future where researchers can not only predict which protein might serve as a drug target but also explain precisely which features make it suitable. Or a scenario where vaccine developers can identify viral sites that are least likely to mutate, with a clear rationale backed by both AI and biology.

The work also hints at a deeper possibility. As these models grow more powerful, they may begin to encode biological insights that even humans have not yet discovered. By opening up the black box, we may one day learn new rules of protein biology directly from the models themselves.

A Step Toward Transparency

The story of protein language models is a reminder of how closely intertwined AI and biology have become. Each field pushes the other forward, creating tools that were unimaginable just a decade ago. But with power comes responsibility, and transparency is key.

The MIT team’s breakthrough represents more than a technical advance. It is a philosophical shift, from blind reliance on machines to genuine collaboration with them. By learning to interpret the language of proteins as spoken by AI, we gain not just better tools, but deeper understanding.

And in that understanding lies the future of medicine—smarter drugs, safer vaccines, and perhaps entirely new insights into the molecular fabric of life.

More information: Berger, Bonnie, Sparse autoencoders uncover biologically interpretable features in protein language model representations, Proceedings of the National Academy of Sciences (2025). DOI: 10.1073/pnas.2506316122