In the world of light, precision is everything. How it bends, scatters, and spreads determines what we can see—from the faint outlines of a distant galaxy to the intricate machinery inside a single living cell. Now, engineers at UCLA have taken a giant leap in the art of light manipulation, introducing a groundbreaking optical framework that could transform how we capture and interpret the three-dimensional world.

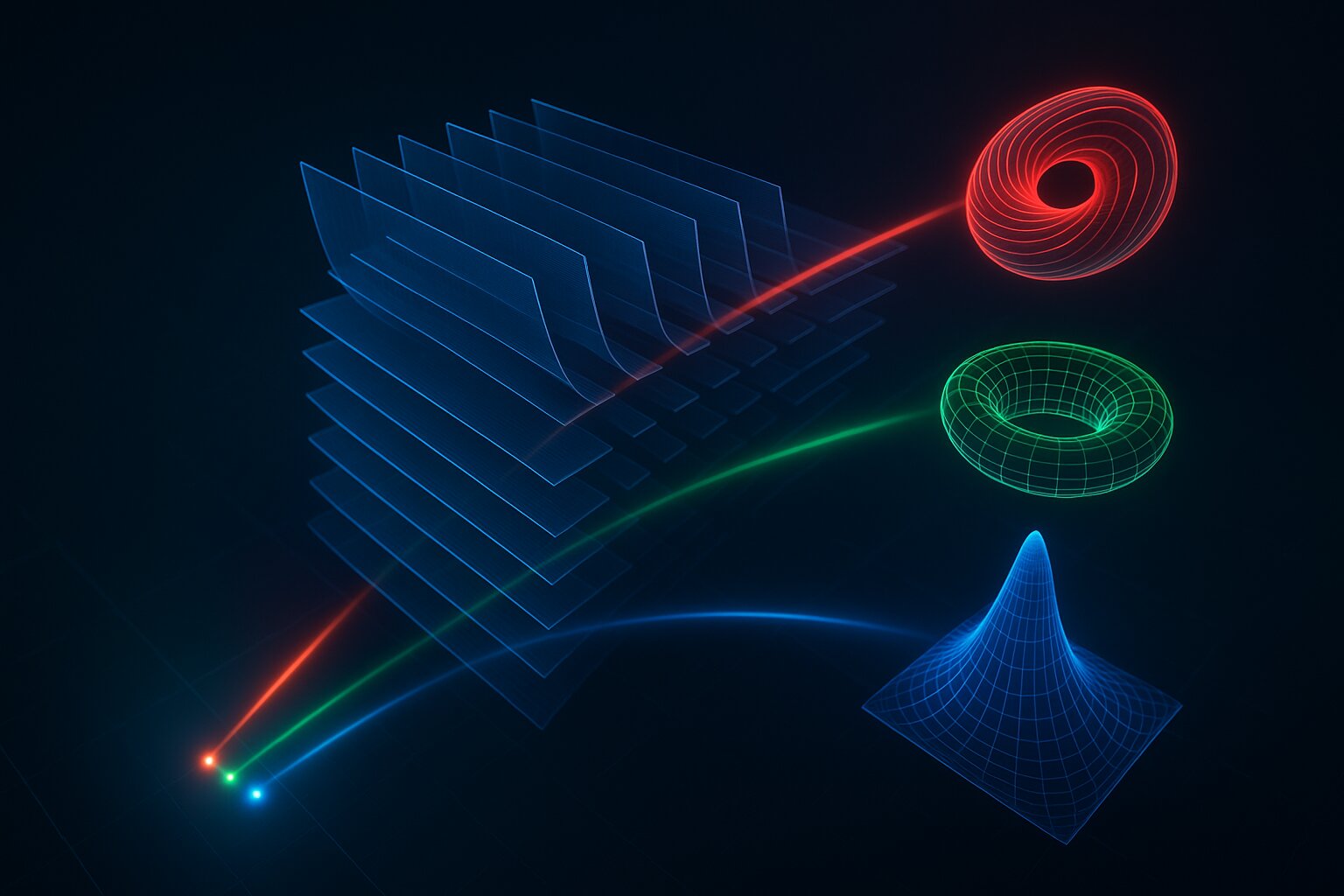

At the heart of this leap is a universal method to engineer point spread functions (PSFs)—the fundamental fingerprints of how light behaves in an imaging system. The team’s breakthrough, published in the journal Light: Science & Applications, doesn’t just tweak or refine existing methods. It reimagines them entirely.

Their innovation? A compact, passive, all-optical processor built not with wires or lenses, but with carefully sculpted diffractive surfaces—tiny structures optimized by deep learning to reshape light in previously impossible ways.

Seeing in 3D Without Moving a Muscle

The significance of this work lies in its simplicity and elegance. For decades, 3D imaging has relied on a complex dance of mechanisms: scanning systems that move back and forth to build up a volume, filters to isolate different wavelengths of light, or powerful algorithms that stitch everything together after the fact.

But this new framework sidesteps all of that.

Instead of using motors or digital computations, the UCLA team’s optical processor performs its magic in real time, using nothing but the passive flow of light through intricately designed layers. These layers—etched with patterns engineered using neural networks—convert incoming light into exquisitely tailored 3D patterns, known as spatially varying PSFs. This allows the device to simultaneously capture depth and color information in a single snapshot, without the need for mechanical motion, spectral filters, or postprocessing.

“We’re talking about a fundamentally new way to control light,” said Dr. Md Sadman Sakib Rahman, co-lead author of the study and a researcher in the Electrical and Computer Engineering Department at UCLA. “What we’ve built is a universal platform—a sort of optical engine—that can shape and manipulate 3D light fields with extraordinary precision.”

Diffractive Intelligence: When Optics Meets AI

The key to this new system is its use of diffractive optical processors—passive devices composed of a sequence of thin surfaces that bend light in controlled, customized ways. These aren’t traditional lenses. They’re more like light-sculpting canvases, etched with structures so fine they manipulate light at the scale of its wavelength.
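For readers who want to see the idea in motion, here is a minimal sketch of light passing through a stack of thin phase-only surfaces with free-space gaps between them. It is not the team's design code. The grid size, wavelength, pixel pitch, and layer spacing are hypothetical values chosen for illustration, the random phase patterns stand in for trained ones, and the angular spectrum method is a standard textbook way to model propagation that the article itself does not specify.

```python
import numpy as np

def propagate(field, wavelength, pixel, distance):
    """Angular spectrum free-space propagation of a square complex field."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pixel)                 # spatial frequencies (cycles per unit length)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))  # longitudinal wavenumber
    # Keep propagating waves, drop evanescent ones (arg <= 0).
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Hypothetical parameters, for illustration only.
rng = np.random.default_rng(0)
n = 32
wavelength = 0.5e-6          # metres
pixel = wavelength           # feature size on the order of the wavelength
spacing = 20 * wavelength    # gap between successive surfaces

# Random phase patterns stand in for the learned surface designs.
layers = [rng.uniform(0.0, 2.0 * np.pi, (n, n)) for _ in range(3)]

# A single point of light at the centre of the input plane.
field = np.zeros((n, n), dtype=complex)
field[n // 2, n // 2] = 1.0
energy_in = np.sum(np.abs(field) ** 2)

for phase in layers:
    field = propagate(field, wavelength, pixel, spacing)
    field = field * np.exp(1j * phase)   # passive, phase-only modulation
field = propagate(field, wavelength, pixel, spacing)

intensity = np.abs(field) ** 2           # what a sensor would record
```

Because each surface in this sketch only delays the light and never amplifies or absorbs it, the total energy reaching the output plane matches the energy that entered. Training, in this picture, would mean adjusting the values in `layers` until `intensity` takes the desired shape.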

To design these processors, the UCLA team employed deep learning—training neural networks to create the exact patterns needed to produce any desired 3D transformation of light. In technical terms, the system can perform arbitrary linear mappings between 3D optical intensity distributions in its input and output spaces.

In everyday terms, that means the device can be trained to extract any kind of 3D information from incoming light—its depth, its color, its spatial features—and output it in a form ready to be captured by a standard image sensor.
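The phrase "arbitrary linear mapping" can be made concrete with a small numerical sketch. Assuming the input and output volumes are discretized into voxels (an assumption made here for illustration, with hypothetical grid sizes), the mapping is just a matrix whose columns are the PSFs, one per input voxel. The random matrix `H` below is a stand-in, not a PSF set from the study.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical, tiny volumes: (depth or wavelength channels, rows, cols).
in_shape = (2, 4, 4)
out_shape = (2, 4, 4)
n_in = int(np.prod(in_shape))
n_out = int(np.prod(out_shape))

# Column j of H is the output intensity pattern (the PSF) produced by a
# unit-intensity point at input voxel j. Columns that differ from one
# another give a spatially varying PSF. Random values are placeholders.
H = rng.random((n_out, n_in))

def apply_mapping(H, volume, out_shape):
    """Map an input intensity volume to an output intensity volume."""
    return (H @ volume.ravel()).reshape(out_shape)

# A point source at one voxel reproduces that voxel's PSF.
point = np.zeros(in_shape)
point[1, 2, 3] = 1.0
idx = np.ravel_multi_index((1, 2, 3), in_shape)
psf = apply_mapping(H, point, out_shape)
psf_matches_column = np.allclose(psf.ravel(), H[:, idx])

# Linearity: the response to a weighted sum is the weighted sum of responses.
a = rng.random(in_shape)
b = rng.random(in_shape)
lhs = apply_mapping(H, 2.0 * a + 3.0 * b, out_shape)
rhs = 2.0 * apply_mapping(H, a, out_shape) + 3.0 * apply_mapping(H, b, out_shape)
is_linear = np.allclose(lhs, rhs)
```

In this picture, designing the optical processor amounts to choosing the matrix `H`; the diffractive surfaces are the physical hardware that carries it out as light passes through.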

“This is as close as we’ve come to physically embedding intelligence into optics,” said Dr. Aydogan Ozcan, principal investigator on the project and a professor of electrical engineering and bioengineering at UCLA. “The result is an all-optical platform that processes complex spatial and spectral information without needing a computer at all.”

Applications as Vast as Light Itself

The implications of this breakthrough stretch across disciplines. In biomedical imaging, it could lead to high-throughput 3D microscopes that capture entire cellular volumes in a single snapshot, recording real-time dynamics in tissues and organoids. In environmental science, it could enable compact, multispectral imagers capable of analyzing vegetation health or water pollution from drones or satellites.

And in fields like optical data transmission or quantum information, this new PSF engineering framework could unlock modes of encoding and processing light that were once thought too complex to implement in hardware.

Because the approach is entirely passive, requiring no external power beyond the illuminating light and no moving parts, it is not only fast but also energy efficient. Its solid-state design makes it robust, scalable, and suitable for integration into a wide variety of optical platforms.

“This is a toolkit for the future of optics,” said Rahman. “Whether you’re building a medical device, a scientific instrument, or even an optical computer, the ability to custom-engineer 3D light behavior opens up a world of possibilities.”

The Power of Universality

What sets this work apart isn’t just its speed or accuracy—it’s the universality of the method. Prior techniques in PSF engineering have been constrained by the physics of lenses and phase masks, which often limit the shapes and functions that can be physically realized. Typically, such designs assume a uniform PSF across space or operate within strict spectral bands.

The UCLA framework throws those limitations out the window.

By engineering PSFs that vary not only across 3D space but also across wavelength, the team’s diffractive processors can accommodate complex optical goals, like capturing full 3D multispectral data in a single exposure. That’s a dramatic departure from how imaging systems have traditionally worked.

And because the design process is driven by AI, it’s adaptable. Want to optimize your imaging system for near-infrared fluorescence microscopy? Or perhaps you need a custom 3D PSF to improve contrast in underwater imaging? The same neural design approach can be retrained for the job.

A Glimpse Into the Optics of Tomorrow

This research is more than just an academic exercise. It’s a blueprint for a new generation of optical systems—ones that are smarter, faster, and far more capable than anything before.

While today’s digital cameras and microscopes rely heavily on computation after image capture, UCLA’s diffractive processors suggest a future where much of that processing happens before the light even hits the sensor. It’s a paradigm shift from computational imaging to optical computing—where the medium itself becomes the processor.

“The physics of light hasn’t changed,” said Ozcan. “But our ability to mold it, to teach it new tricks—that’s where the revolution is happening.”

As the line between optics and computation continues to blur, innovations like this hint at a future where imaging systems are no longer passive recorders, but active participants—thinking in light, computing in waves, and offering us views of the world more vivid and precise than ever before.

One snapshot at a time.

Reference: Md Sadman Sakib Rahman et al., Universal point spread function engineering for 3D optical information processing, Light: Science & Applications (2025). DOI: 10.1038/s41377-025-01887-x